Knowledge-Guided Learning for Transceiver Design in Over-the-Air Federated Learning

Mar 28, 2022

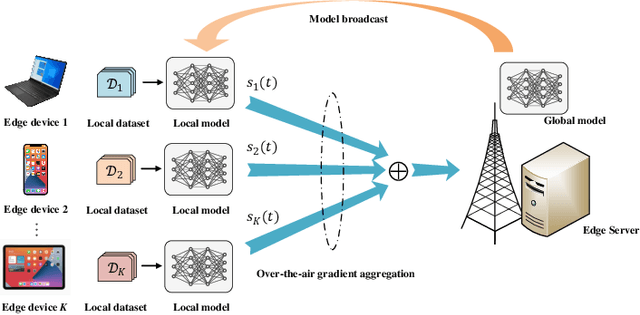

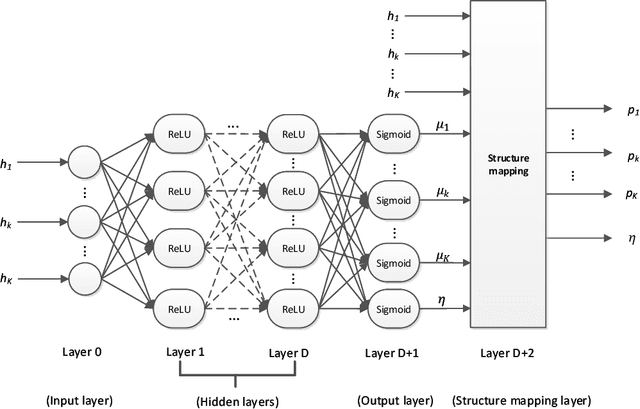

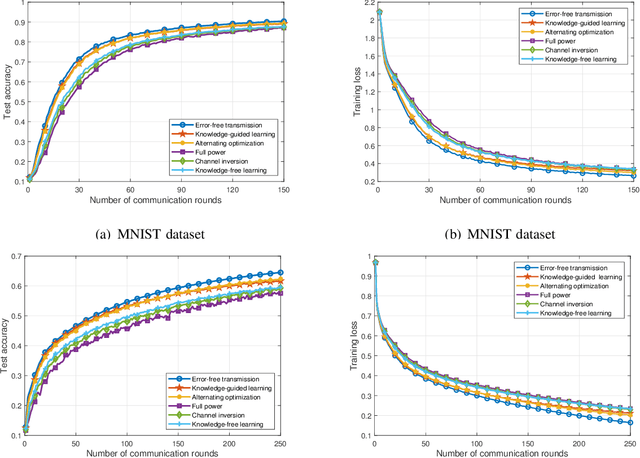

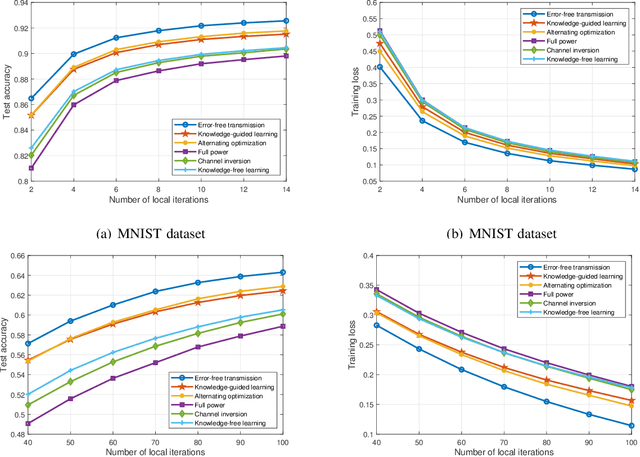

In this paper, we consider communication-efficient over-the-air federated learning (FL), in which multiple edge devices with non-independent and identically distributed (non-IID) datasets perform multiple local iterations in each communication round and then concurrently transmit their updated gradients to an edge server over the same radio channel, where the global model is aggregated using over-the-air computation (AirComp). We derive an upper bound on the time-average norm of the gradients to characterize the convergence of AirComp-assisted FL; the bound reveals how the model aggregation errors accumulated over all communication rounds affect convergence. Based on this convergence analysis, we formulate an optimization problem that minimizes the upper bound to enhance learning performance, and we propose an alternating optimization algorithm to facilitate the optimal transceiver design for AirComp-assisted FL. Because the alternating optimization algorithm suffers from high computational complexity, we further develop a knowledge-guided learning algorithm that exploits the structure of the analytic expression of the optimal transmit power to achieve computation-efficient transceiver design. Simulation results demonstrate that the proposed knowledge-guided learning algorithm achieves performance comparable to that of the alternating optimization algorithm at a much lower computational complexity. Moreover, both proposed algorithms outperform the baseline methods in terms of convergence speed and test accuracy.
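To make the aggregation step concrete, the following is a minimal sketch of one AirComp round. It is not the paper's transceiver design. It assumes real-valued channel gains, a single-antenna server, and a simple channel-inversion rule for the transmit scalars. The names `aircomp_aggregate`, `tx_scalars`, and `denoise` are hypothetical and chosen only for illustration.

```python
import numpy as np


def aircomp_aggregate(updates, channels, tx_scalars, denoise, noise_std, rng):
    """Simulate one over-the-air aggregation round (illustrative assumptions only).

    updates:    (K, d) array, one local update per device
    channels:   (K,) array of real channel gains h_k (assumed known)
    tx_scalars: (K,) array of transmit scalars b_k
    denoise:    positive receive (denoising) factor eta
    noise_std:  standard deviation of the additive receiver noise
    rng:        numpy.random.Generator used to draw the noise
    """
    num_devices, dim = updates.shape
    # All devices transmit concurrently; the channel sums h_k * b_k * update_k.
    superposed = (channels * tx_scalars) @ updates
    # Additive Gaussian noise at the server.
    received = superposed + rng.normal(0.0, noise_std, size=dim)
    # The server rescales the received signal to estimate the average update.
    return received / (denoise * num_devices)


rng = np.random.default_rng(0)
updates = rng.normal(size=(4, 3))        # 4 devices, 3 model parameters
channels = np.array([0.5, 1.0, 2.0, 1.5])
eta = 0.8
tx_scalars = eta / channels              # assumed channel-inversion rule

estimate = aircomp_aggregate(updates, channels, tx_scalars, eta, 0.1, rng)
aggregation_error = np.linalg.norm(estimate - updates.mean(axis=0))
```

Under this channel-inversion assumption, the noise-free estimate equals the exact average of the local updates, so `aggregation_error` comes entirely from receiver noise scaled by `1 / (eta * K)`. In practice, per-device power limits can prevent exact inversion on weak channels, which is one source of the aggregation error that the convergence bound accounts for.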
