Yili Fu

Monocular Depth Guided Occlusion-Aware Disparity Refinement via Semi-supervised Learning in Laparoscopic Images

May 13, 2025

Abstract:Occlusion and the scarcity of labeled surgical data are significant challenges in disparity estimation for stereo laparoscopic images. To address these issues, this study proposes a Depth Guided Occlusion-Aware Disparity Refinement Network (DGORNet), which refines disparity maps by leveraging monocular depth information unaffected by occlusion. A Position Embedding (PE) module is introduced to provide explicit spatial context, enhancing the network's ability to localize and refine features. Furthermore, we introduce an Optical Flow Difference Loss (OFDLoss) for unlabeled data, leveraging temporal continuity across video frames to improve robustness in dynamic surgical scenes. Experiments on the SCARED dataset demonstrate that DGORNet outperforms state-of-the-art methods in terms of End-Point Error (EPE) and Root Mean Squared Error (RMSE), particularly in occlusion and texture-less regions. Ablation studies confirm the contributions of the Position Embedding and Optical Flow Difference Loss, highlighting their roles in improving spatial and temporal consistency. These results underscore DGORNet's effectiveness in enhancing disparity estimation for laparoscopic surgery, offering a practical solution to challenges in disparity estimation and data limitations.
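The two metrics named in this abstract can be illustrated with a minimal sketch. It assumes the common convention that, for disparity maps, EPE is the mean absolute disparity error and RMSE is the root of the mean squared error, both taken over valid pixels. The function names, the flattened-list input, and the optional `mask` argument (for example, to restrict evaluation to occluded pixels) are illustrative assumptions, not the paper's evaluation code.

```python
import math

def epe(pred, gt, mask=None):
    """Mean absolute disparity error over the selected pixels.

    pred, gt: flattened disparity maps (lists of floats, same length).
    mask: optional list of booleans; only True pixels are evaluated.
    """
    errs = [abs(p - g) for i, (p, g) in enumerate(zip(pred, gt))
            if mask is None or mask[i]]
    return sum(errs) / len(errs)

def rmse(pred, gt, mask=None):
    """Root mean squared disparity error over the selected pixels."""
    sq = [(p - g) ** 2 for i, (p, g) in enumerate(zip(pred, gt))
          if mask is None or mask[i]]
    return math.sqrt(sum(sq) / len(sq))

# Hypothetical toy data: four pixels, the last one treated as invalid.
pred = [1.0, 2.0, 4.0, 8.0]
gt = [1.0, 3.0, 4.0, 5.0]
valid = [True, True, True, False]

print(epe(pred, gt))          # all pixels
print(rmse(pred, gt))         # all pixels
print(epe(pred, gt, valid))   # valid pixels only
```

Because RMSE squares each error, it penalizes a few large outliers more heavily than EPE does, which is one reason the two are often reported together.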

Attention-Aware Laparoscopic Image Desmoking Network with Lightness Embedding and Hybrid Guided Embedding

Apr 11, 2024

Abstract:This paper presents a novel method for smoke removal from laparoscopic images. Due to the heterogeneous nature of surgical smoke, a two-stage network is proposed to estimate the smoke distribution and reconstruct a clear, smoke-free surgical scene. The lightness channel plays a pivotal role in providing information about smoke density. The reconstruction of the smoke-free image is guided by a hybrid embedding, which combines the estimated smoke mask with the initial image. Experimental results demonstrate that the proposed method achieves a Peak Signal to Noise Ratio that is $2.79\%$ higher than that of state-of-the-art methods, while also exhibiting a $38.2\%$ reduction in run-time. Overall, the proposed method offers comparable or even superior performance in terms of both smoke removal quality and computational efficiency when compared to existing state-of-the-art methods. This work will be publicly available on http://homepage.hit.edu.cn/wpgao
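For readers unfamiliar with the quality metric quoted above, the following is a minimal sketch of the standard Peak Signal to Noise Ratio definition, PSNR = 10 log10(MAX^2 / MSE). The function name, the flattened-list input, and the 8-bit default `max_value` are assumptions made for illustration and do not come from the paper.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Standard PSNR in dB between two flattened images of equal length."""
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical two-pixel example: one pixel is off by the full 8-bit range.
print(psnr([0, 255], [0, 0]))
```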

Putting People in their Place: Monocular Regression of 3D People in Depth

Dec 15, 2021

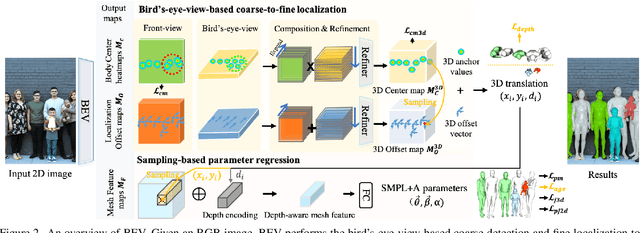

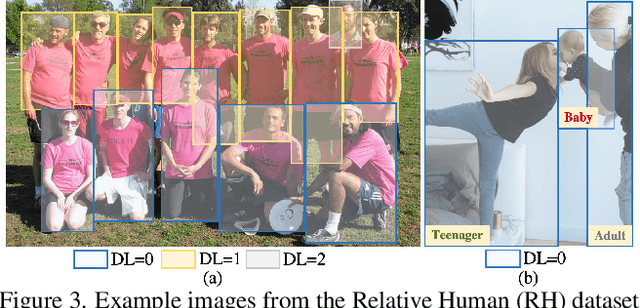

Abstract:Given an image with multiple people, our goal is to directly regress the pose and shape of all the people as well as their relative depth. Inferring the depth of a person in an image, however, is fundamentally ambiguous without knowing their height. This is particularly problematic when the scene contains people of very different sizes, e.g. from infants to adults. To solve this, we need several things. First, we develop a novel method to infer the poses and depth of multiple people in a single image. While previous work that estimates multiple people does so by reasoning in the image plane, our method, called BEV, adds an additional imaginary Bird's-Eye-View representation to explicitly reason about depth. BEV reasons simultaneously about body centers in the image and in depth and, by combining these, estimates 3D body position. Unlike prior work, BEV is a single-shot method that is end-to-end differentiable. Second, height varies with age, making it impossible to resolve depth without also estimating the age of people in the image. To do so, we exploit a 3D body model space that lets BEV infer shapes from infants to adults. Third, to train BEV, we need a new dataset. Specifically, we create a "Relative Human" (RH) dataset that includes age labels and relative depth relationships between the people in the images. Extensive experiments on RH and AGORA demonstrate the effectiveness of the model and training scheme. BEV outperforms existing methods on depth reasoning, child shape estimation, and robustness to occlusion. The code and dataset will be released for research purposes.

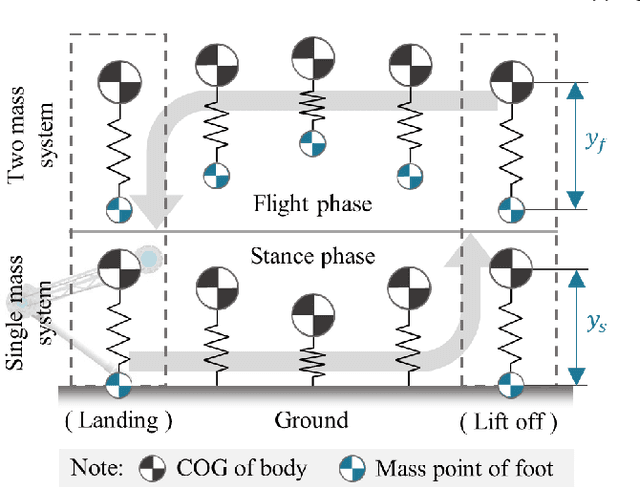

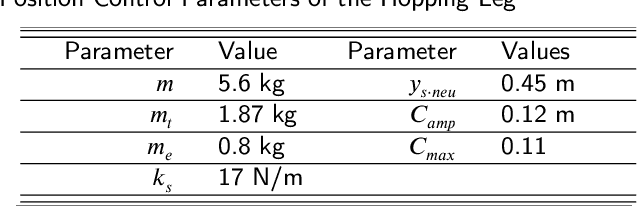

Maximize the Foot Clearance for a Hopping Robotic Leg Considering Motor Saturation

Jul 30, 2021

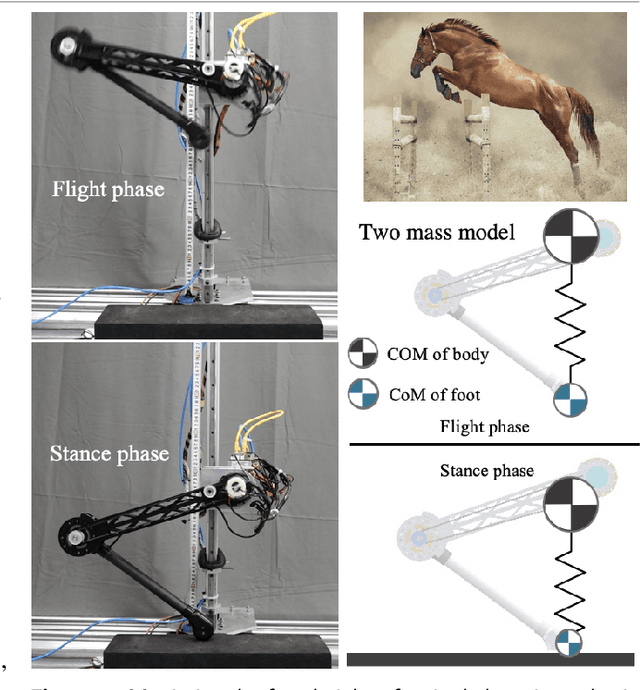

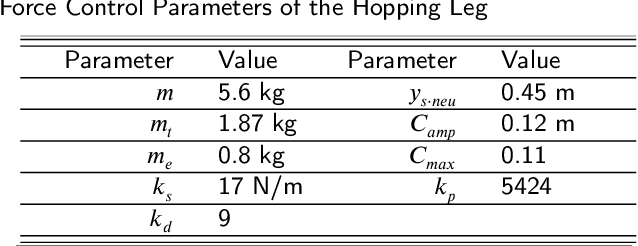

Abstract:A hopping leg, whether in legged animals or humans, usually behaves like a spring during periodic hopping. Hopping like a spring is efficient and does not require complicated control algorithms. Position and force control are the two main methods to realize such spring-like behaviour. Position control usually consumes torque resources to ensure position accuracy and compensate for tracking errors. In comparison, the force control strategy is able to maintain high elasticity. Currently, position and force control both reduce the motor saturation ratio as well as the bandwidth of the control system, and thus attenuate the performance of the actuator. To augment the performance, this letter proposes a motor saturation strategy based on force control to maximize the output torque of the actuator and realize continuous hopping motion with natural dynamics. The proposed strategy is able to maximize the saturation ratio of the motor and thus maximize the foot clearance of the single leg. The dynamics of the two-mass model are utilized to increase the force bandwidth and the performance of the actuator. A single leg with two degrees of freedom is designed as the experiment platform. The actuator consists of a powerful electric motor, a harmonic gear and an encoder. The effectiveness of this method is verified through simulations and experiments using a robotic leg actuated by powerful high reduction ratio actuators.

Synthetic Training for Monocular Human Mesh Recovery

Oct 27, 2020

Abstract:Recovering 3D human mesh from monocular images is a popular topic in computer vision and has a wide range of applications. This paper aims to estimate the 3D mesh of multiple body parts (e.g., body, hands) with large-scale differences from a single RGB image. Existing methods are mostly based on iterative optimization, which is very time-consuming. We propose to train a single-shot model to achieve this goal. The main challenge is the lack of training data that have complete 3D annotations of all body parts in 2D images. To solve this problem, we design a multi-branch framework to disentangle the regression of different body properties, enabling us to separate each component's training in a synthetic training manner using the unpaired data available. Besides, to strengthen the generalization ability, most existing methods have used in-the-wild 2D pose datasets to supervise the estimated 3D pose via 3D-to-2D projection. However, we observe that the commonly used weak-perspective model performs poorly in dealing with the external foreshortening effect of camera projection. Therefore, we propose a depth-to-scale (D2S) projection to incorporate the depth difference into the projection function to derive per-joint scale variants for more proper supervision. The proposed method outperforms previous methods on the CMU Panoptic Studio dataset according to the evaluation results and achieves comparable results on the Human3.6M body and STB hand benchmarks. More impressively, the performance in close shot images is significantly improved using the proposed D2S projection for weak supervision, while maintaining obvious superiority in computational efficiency.
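The foreshortening issue described in this abstract can be illustrated with a small sketch. It contrasts a weak-perspective projection, which applies one scale to every joint, with a projection whose scale depends on each joint's depth offset from the root. The per-joint formula used here, s_j = f / (Z_root + dz_j), is simply the pinhole model rewritten as a scale; it is an assumption chosen for illustration and is not claimed to be the paper's exact D2S formulation. All function and parameter names are hypothetical.

```python
def weak_perspective(joint, scale, trans=(0.0, 0.0)):
    """Project a root-relative 3D joint (x, y, dz) with one global scale.

    The depth offset dz is ignored, which is what causes errors when the
    subject is close to the camera.
    """
    x, y, _ = joint
    return (scale * x + trans[0], scale * y + trans[1])

def per_joint_scale_projection(joint, focal, root_depth, trans=(0.0, 0.0)):
    """Project with a scale that depends on the joint's own depth.

    Assumed form: s_j = focal / (root_depth + dz), i.e. plain perspective
    projection expressed as a per-joint scale.
    """
    x, y, dz = joint
    s_j = focal / (root_depth + dz)
    return (s_j * x + trans[0], s_j * y + trans[1])

# A hand reaching 0.5 units towards the camera (dz < 0), hypothetical values.
hand = (0.5, 0.0, -0.5)

# Close shot: root is 1 unit away, so the global scale is focal / root_depth = 1.
print(weak_perspective(hand, scale=1.0))
print(per_joint_scale_projection(hand, focal=1.0, root_depth=1.0))

# Distant shot: same global scale of 1, but the depth offset barely matters.
print(per_joint_scale_projection(hand, focal=100.0, root_depth=100.0))
```

In the close shot the two projections disagree by a factor of two for this joint, while in the distant shot they nearly coincide, which matches the abstract's observation that weak perspective breaks down mainly in close-up images.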

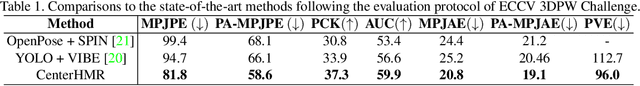

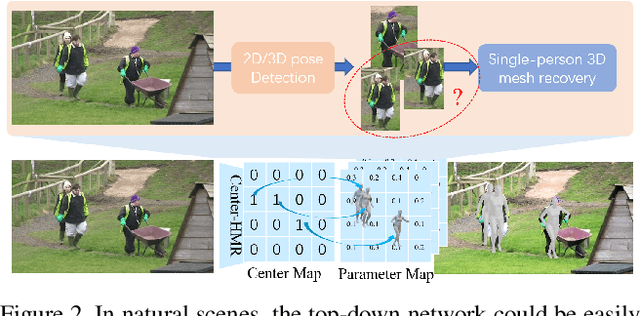

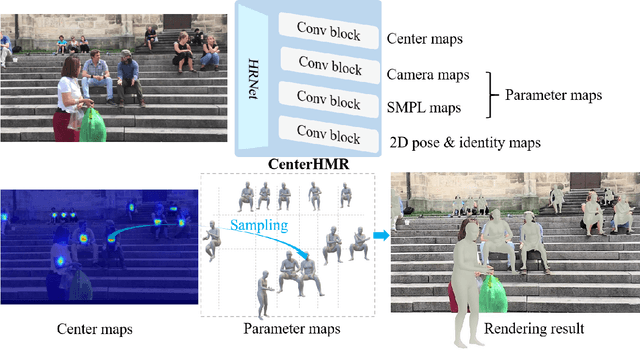

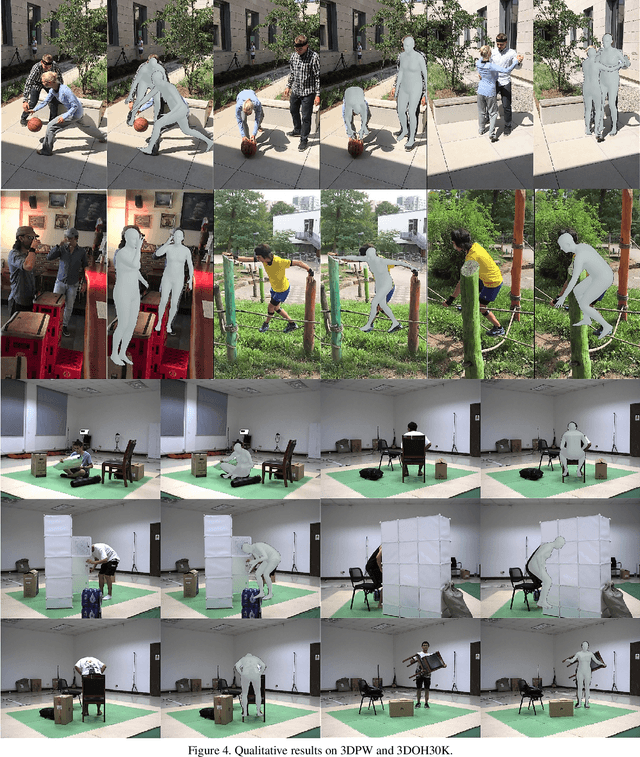

CenterHMR: a Bottom-up Single-shot Method for Multi-person 3D Mesh Recovery from a Single Image

Aug 27, 2020

Abstract:In this paper, we propose a method to recover multi-person 3D mesh from a single image. Existing methods follow a multi-stage detection-based pipeline, where the 3D mesh of each person is regressed from the cropped image patch. They suffer from the high complexity of the multi-stage process and the ambiguity of the image-level features. For example, it is hard for them to estimate multi-person 3D mesh in inseparable crowded cases. Instead, in this paper, we present a novel bottom-up single-shot method, Center-based Human Mesh Recovery network (CenterHMR). The model is trained to simultaneously predict two maps, which represent the location of each human body center and the corresponding parameter vector of 3D human mesh at each center. This explicit center-based representation guarantees pixel-level feature encoding. Besides, the 3D mesh result of each person is estimated from the features centered at the visible body parts, which improves the robustness under occlusion. CenterHMR surpasses previous methods on the multi-person in-the-wild benchmark 3DPW and the occlusion dataset 3DOH50K. In addition, CenterHMR achieved second place in the ECCV 2020 3DPW Challenge. The code is released at https://github.com/Arthur151/CenterHMR.
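The two-map output described in this abstract suggests a simple decoding step, sketched below under stated assumptions: a body center is taken to be any pixel in the center map that exceeds a threshold and is at least as large as its 8 neighbours, and the mesh parameters for that person are read from the parameter map at the same location. The function name, the threshold value, and the nested-list map layout are hypothetical; the released CenterHMR code may decode its outputs differently.

```python
def decode_centers(center_map, param_map, threshold=0.5):
    """Return [((row, col), params), ...] for each detected body center.

    center_map: 2D list of confidences in [0, 1].
    param_map: 2D list of the same size holding one parameter vector per pixel.
    A pixel is a center if it is >= threshold and >= all of its 8 neighbours
    (ties on a plateau are all returned).
    """
    h, w = len(center_map), len(center_map[0])
    results = []
    for r in range(h):
        for c in range(w):
            v = center_map[r][c]
            if v < threshold:
                continue
            neighbours = [center_map[rr][cc]
                          for rr in range(max(0, r - 1), min(h, r + 2))
                          for cc in range(max(0, c - 1), min(w, c + 2))
                          if (rr, cc) != (r, c)]
            if all(v >= n for n in neighbours):
                results.append(((r, c), param_map[r][c]))
    return results

# Hypothetical 3x4 maps with two people; each "parameter vector" is [row, col].
center_map = [[0.9, 0.2, 0.0, 0.0],
              [0.1, 0.1, 0.0, 0.3],
              [0.0, 0.0, 0.4, 0.8]]
param_map = [[[r, c] for c in range(4)] for r in range(3)]

print(decode_centers(center_map, param_map))
```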
