Yanle Hu

Indescribable Multi-modal Spatial Evaluator

Mar 02, 2023

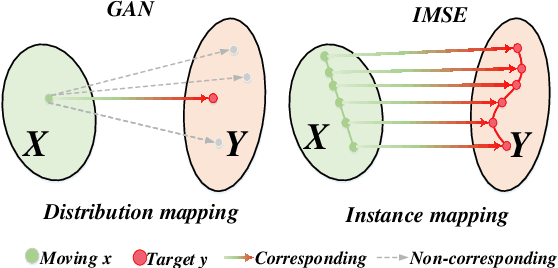

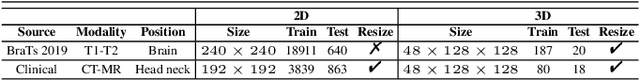

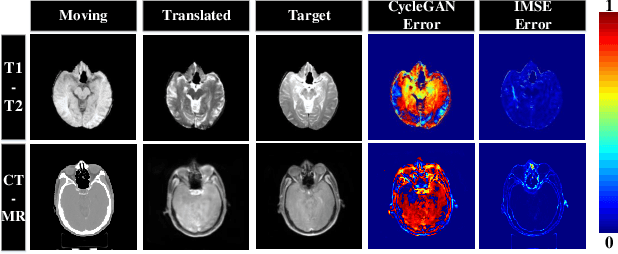

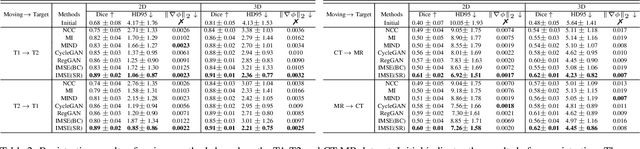

Abstract: Multi-modal image registration spatially aligns two images with different distributions. One of its major challenges is that images acquired from different imaging machines have different imaging distributions, making it difficult to focus only on the spatial aspect of the images and ignore differences in distributions. In this study, we developed a self-supervised approach, Indescribable Multi-modal Spatial Evaluator (IMSE), to address multi-modal image registration. IMSE creates an accurate multi-modal spatial evaluator to measure spatial differences between two images, and then optimizes registration by minimizing the error predicted by the evaluator. To optimize IMSE performance, we also proposed a new style enhancement method called Shuffle Remap, which randomly divides the image distribution into multiple segments and then randomly shuffles and remaps these segments, so that the distribution of the original image is changed. Shuffle Remap can help IMSE predict the difference in spatial location from unseen target distributions. Our results show that IMSE outperformed existing registration methods on T1-T2 and CT-MRI datasets. IMSE can also be easily integrated into the traditional registration process, and provides a convenient way to evaluate and visualize registration results. IMSE also has the potential to be used as a new paradigm for image-to-image translation. Our code is available at https://github.com/Kid-Liet/IMSE.
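The Shuffle Remap idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation (see the linked repository for that); it assumes intensities normalized to [0, 1], a flat list of pixels, and a piecewise-linear remap. The function name `shuffle_remap` and the parameter `num_segments` are hypothetical.

```python
import random

def shuffle_remap(pixels, num_segments=4, rng=None):
    """Hypothetical sketch of a Shuffle Remap style augmentation.

    pixels: iterable of floats in [0, 1] (assumed normalization).
    Returns a new list with the same length and spatial order,
    but with a changed intensity distribution.
    """
    rng = rng or random.Random()
    # Random interior cut points split [0, 1] into source segments.
    cuts = sorted(rng.random() for _ in range(num_segments - 1))
    edges = [0.0] + cuts + [1.0]
    sources = list(zip(edges[:-1], edges[1:]))
    # Shuffle a copy so each source segment maps to a random target segment.
    targets = sources[:]
    rng.shuffle(targets)
    out = []
    for p in pixels:
        for (lo, hi), (tlo, thi) in zip(sources, targets):
            if lo <= p <= hi:
                # Linear position inside the source segment, reused in the target.
                t = 0.0 if hi == lo else (p - lo) / (hi - lo)
                out.append(tlo + t * (thi - tlo))
                break
    return out

# Usage: the same seed gives the same remap; pixel positions are untouched.
augmented = shuffle_remap([0.0, 0.25, 0.25, 0.5, 1.0], rng=random.Random(0))
```

Because only intensities change and pixel positions stay fixed, the augmented image keeps the spatial content of the original, which is the property the abstract relies on for training the evaluator.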

A Long Short-term Memory Based Recurrent Neural Network for Interventional MRI Reconstruction

Apr 12, 2022

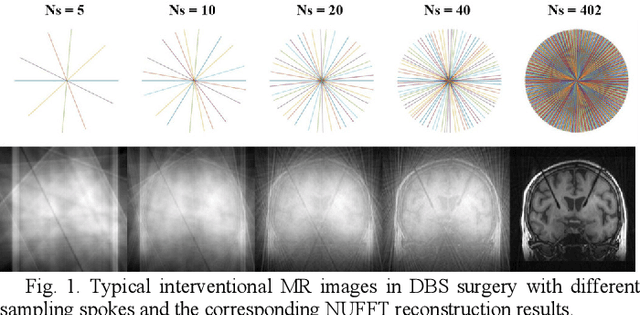

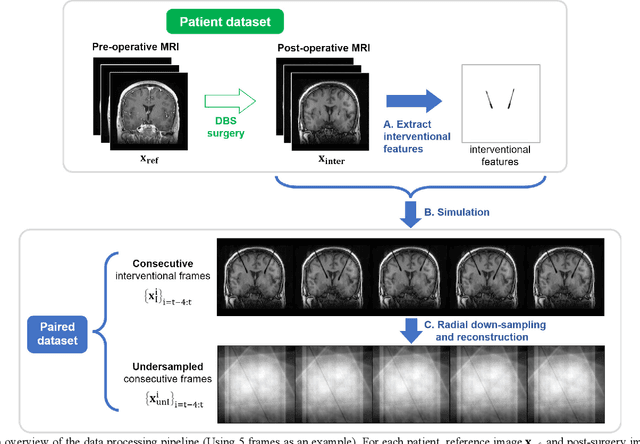

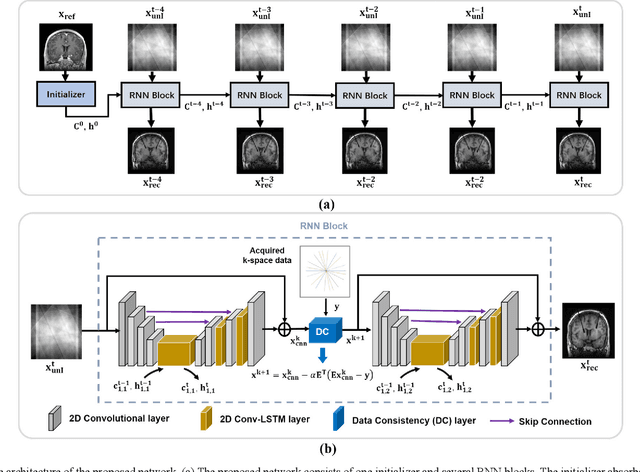

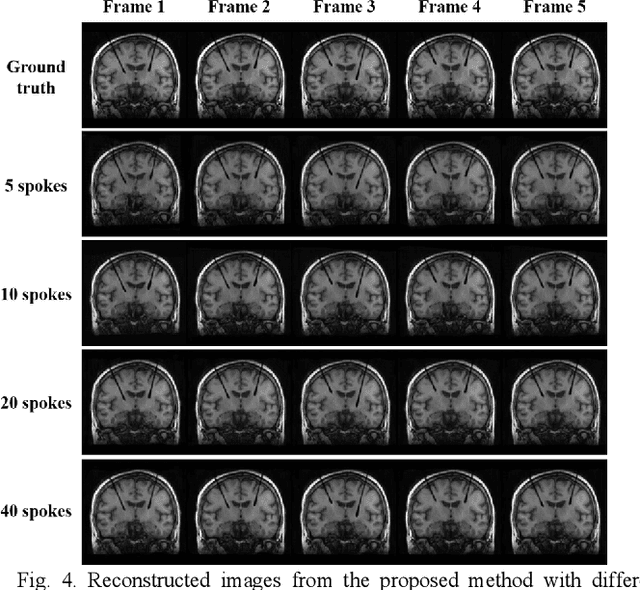

Abstract: Interventional magnetic resonance imaging (i-MRI) for surgical guidance could help visualize interventional processes such as deep brain stimulation (DBS), improving surgical performance and patient outcomes. Unlike retrospective reconstruction in conventional dynamic imaging, i-MRI for DBS has to acquire and reconstruct the interventional images sequentially online. Here we propose a convolutional long short-term memory (Conv-LSTM) based recurrent neural network (RNN), or ConvLR, to reconstruct interventional images with golden-angle radial sampling. By using an initializer and Conv-LSTM blocks, the priors from the pre-operative reference image and intra-operative frames were exploited for reconstructing the current frame. Data consistency for radial sampling was implemented by a soft-projection method. To improve the reconstruction accuracy, an adversarial learning strategy was adopted. A set of interventional images based on the pre-operative and post-operative MR images was simulated for algorithm validation. Results showed that, with only 10 radial spokes, ConvLR provided the best performance compared with state-of-the-art methods, giving an acceleration of up to 40-fold. The proposed algorithm has the potential to achieve real-time i-MRI for DBS and can be used for general-purpose MR-guided intervention.
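For readers unfamiliar with golden-angle radial sampling, the sketch below computes spoke angles using the standard golden-angle increment of 180° × (√5 − 1)/2, about 111.246°. It only illustrates the sampling order; the function name `golden_angle_spokes` and the parameter `first_index` are hypothetical, and nothing here reflects the ConvLR reconstruction itself.

```python
import math

# Standard golden-angle increment for radial MRI, in degrees (about 111.246).
GOLDEN_ANGLE_DEG = 180.0 * (math.sqrt(5.0) - 1.0) / 2.0

def golden_angle_spokes(num_spokes, first_index=0):
    """Return spoke angles in [0, 180) degrees for consecutive spokes.

    first_index lets successive frames continue the same angle sequence,
    so each frame's spokes differ from those of the previous frame.
    """
    return [((first_index + k) * GOLDEN_ANGLE_DEG) % 180.0
            for k in range(num_spokes)]

# Usage: two consecutive frames of 10 spokes each (10 is the count quoted above).
frame0 = golden_angle_spokes(10, first_index=0)
frame1 = golden_angle_spokes(10, first_index=10)
```

Continuing the index across frames is what makes this scheme convenient for sequential online acquisition: any window of consecutive spokes covers k-space fairly evenly.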

Breaking the Dilemma of Medical Image-to-image Translation

Oct 13, 2021

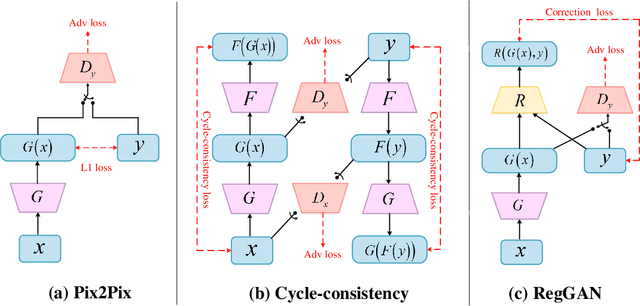

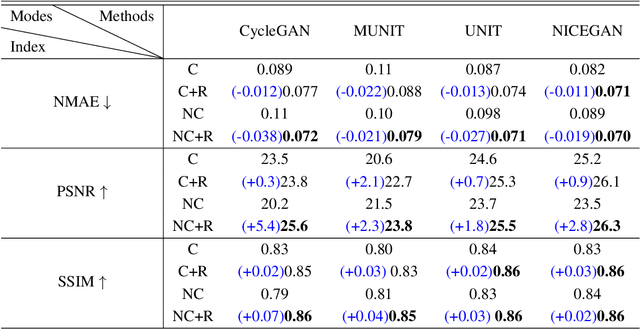

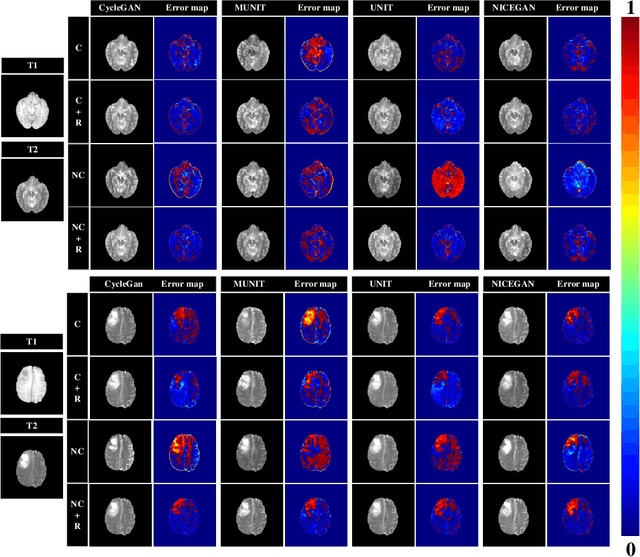

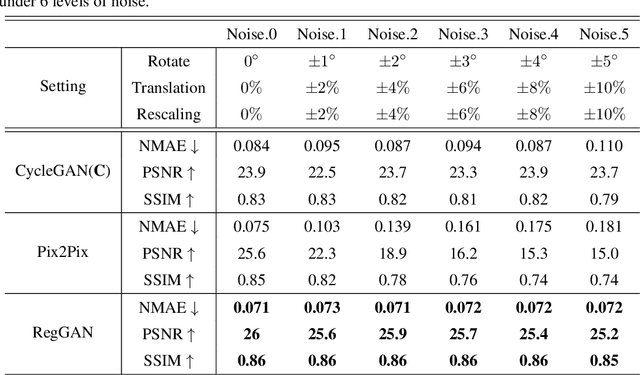

Abstract: Supervised Pix2Pix and unsupervised Cycle-consistency are the two modes that dominate the field of medical image-to-image translation. However, neither mode is ideal. The Pix2Pix mode has excellent performance, but it requires paired images that are well aligned pixel-wise, which may not always be achievable due to respiratory motion or anatomical change between the times at which the paired images are acquired. The Cycle-consistency mode is less stringent about training data and works well on unpaired or misaligned images, but its performance may not be optimal. To break the dilemma of the existing modes, we propose a new unsupervised mode called RegGAN for medical image-to-image translation. It is based on the theory of "loss-correction". In RegGAN, the misaligned target images are considered as noisy labels, and the generator is trained with an additional registration network to fit the misaligned noise distribution adaptively. The goal is to search for the common optimal solution to both the image-to-image translation and registration tasks. We incorporated RegGAN into a few state-of-the-art image-to-image translation methods and demonstrated that RegGAN could be easily combined with these methods to improve their performance. For example, a simple CycleGAN in our mode surpasses the latest NICEGAN even though it uses fewer network parameters. Based on our results, RegGAN outperformed both Pix2Pix on aligned data and Cycle-consistency on misaligned or unpaired data. RegGAN is insensitive to noise, which makes it a better choice for a wide range of scenarios, especially for medical image-to-image translation tasks in which well pixel-wise aligned data are not available.
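The intuition behind treating misaligned targets as noisy labels can be shown with a toy 1-D example. This is only a sketch under strong assumptions: the "registration network" is replaced by a brute-force search over integer circular shifts, and all names (`l1`, `warp_shift`, `best_shift`) are hypothetical. It is not RegGAN's actual loss or architecture.

```python
def l1(a, b):
    """Mean absolute difference between two equal-length signals."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def warp_shift(signal, shift):
    """Toy stand-in for a learned warp: circular shift by an integer."""
    n = len(signal)
    return [signal[(i - shift) % n] for i in range(n)]

def best_shift(generated, target, max_shift=3):
    """Toy stand-in for a registration network: brute-force the shift
    that best aligns the generated signal with the target."""
    return min(range(-max_shift, max_shift + 1),
               key=lambda s: l1(warp_shift(generated, s), target))

# A perfect "translation" whose target happens to be misaligned by 2 samples.
generated = [0, 0, 1, 2, 1, 0, 0, 0]
target = warp_shift(generated, 2)

plain_loss = l1(generated, target)          # penalizes the misalignment itself
shift = best_shift(generated, target)
corrected_loss = l1(warp_shift(generated, shift), target)  # alignment removed
```

In this toy case the plain loss is nonzero even though the generated content is correct, while the loss computed after registration drops to zero, so the generator is no longer pushed to reproduce the misalignment.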
