Yaning Wang

Super-Resolution and Denoising of Corneal B-Scan OCT Imaging Using Diffusion Model Plug-and-Play Priors

Feb 02, 2026

Abstract: Optical coherence tomography (OCT) is pivotal in corneal imaging for both surgical planning and diagnosis. However, high-speed acquisitions often degrade spatial resolution and increase speckle noise, posing challenges for accurate interpretation. We propose an advanced super-resolution framework leveraging diffusion model plug-and-play (PnP) priors to achieve 4x spatial resolution enhancement alongside effective denoising of OCT B-scan images. Our approach formulates reconstruction as a principled Bayesian inverse problem, combining Markov chain Monte Carlo sampling with pretrained generative priors to enforce anatomical consistency. We comprehensively validate the framework using in vivo fisheye corneal datasets to assess robustness and scalability under diverse clinical settings. Comparative experiments against bicubic interpolation, conventional supervised U-Net baselines, and alternative diffusion priors demonstrate that our method consistently yields more precise anatomical structures, improved delineation of corneal layers, and superior noise suppression. Quantitative results show state-of-the-art performance in peak signal-to-noise ratio, structural similarity index, and perceptual metrics. This work highlights the potential of diffusion-driven plug-and-play reconstruction to deliver high-fidelity, high-resolution OCT imaging, supporting more reliable clinical assessments and enabling advanced image-guided interventions. Our findings suggest the approach can be extended to other biomedical imaging modalities requiring robust super-resolution and denoising.

Real-time topology-aware M-mode OCT segmentation for robotic deep anterior lamellar keratoplasty (DALK) guidance

Feb 02, 2026

Abstract: Robotic deep anterior lamellar keratoplasty (DALK) requires accurate real-time depth feedback to approach Descemet's membrane (DM) without perforation. M-mode intraoperative optical coherence tomography (OCT) provides high-temporal-resolution depth traces, but speckle noise, attenuation, and instrument-induced shadowing often result in discontinuous or ambiguous layer interfaces that challenge anatomically consistent segmentation at deployment frame rates. We present a lightweight, topology-aware M-mode segmentation pipeline based on UNeXt that incorporates anatomical topology regularization to stabilize boundary continuity and layer ordering under low signal-to-noise ratio conditions. The proposed system achieves end-to-end throughput exceeding 80 Hz, measured over the complete preprocessing, inference, and overlay pipeline on a single GPU, demonstrating practical real-time guidance beyond model-only timing. This operating margin provides temporal headroom to reject low-quality or dropout frames while maintaining a stable effective depth update rate. Evaluation on a standard rabbit eye M-mode dataset using an established baseline protocol shows improved qualitative boundary stability compared with topology-agnostic controls, while preserving deployable real-time performance.
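The abstract does not state the form of the topology regularizer. As a rough illustration of what a layer-ordering term can look like, the sketch below assumes a hinge penalty on predicted boundary depths; the function name `ordering_penalty`, the `min_gap` parameter, and the input layout are hypothetical and not taken from the paper.

```python
def ordering_penalty(boundaries, min_gap=1.0):
    """Mean hinge penalty for layer-ordering violations.

    boundaries[k][t] is the predicted depth (pixels) of interface k at
    time sample t, listed from shallowest to deepest interface. A pair is
    penalized whenever the deeper interface is not at least `min_gap`
    pixels below the shallower one. (Hypothetical formulation.)
    """
    total = 0.0
    count = 0
    for upper, lower in zip(boundaries, boundaries[1:]):
        for z_upper, z_lower in zip(upper, lower):
            total += max(0.0, min_gap - (z_lower - z_upper))
            count += 1
    return total / count if count else 0.0


ordered = [[10, 10, 10], [50, 50, 50]]   # deeper interface stays below
crossed = [[10, 10, 60], [50, 50, 50]]   # interfaces cross at the last sample
print(ordering_penalty(ordered), ordering_penalty(crossed))
```

A term of this kind is zero for anatomically ordered predictions and grows with the size of any crossing, which is one simple way to discourage layer swaps under low signal.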

Physics-based generation of multilayer corneal OCT data via Gaussian modeling and MCML for AI-driven diagnostic and surgical guidance applications

Feb 02, 2026

Abstract: Training deep learning models for corneal optical coherence tomography (OCT) imaging is limited by the availability of large, well-annotated datasets. We present a configurable Monte Carlo simulation framework that generates synthetic corneal B-scan OCT images with pixel-level five-layer segmentation labels derived directly from the simulation geometry. A five-layer corneal model with Gaussian surfaces captures curvature and thickness variability in healthy and keratoconic eyes. Each layer is assigned optical properties from the literature, and light transport is simulated using Monte Carlo modeling of light transport in multi-layered tissues (MCML), while incorporating system features such as the confocal PSF and sensitivity roll-off. This approach produces over 10,000 high-resolution (1024x1024) image-label pairs and supports customization of geometry, photon count, noise, and system parameters. The resulting dataset enables systematic training, validation, and benchmarking of AI models under controlled, ground-truth conditions, providing a reproducible and scalable resource to support the development of diagnostic and surgical guidance applications in image-guided ophthalmology.
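To make the idea of labels "derived directly from the simulation geometry" concrete, here is a minimal sketch that builds a five-layer label map from six Gaussian-shaped interfaces. The surface parameterization, the default values, and the names `gaussian_surface` and `make_label_map` are assumptions for illustration only; the paper's actual geometry model and the MCML light transport step are not reproduced here.

```python
import math


def gaussian_surface(x, apex_depth, bulge, center, sigma):
    """Depth (pixels) of one interface at lateral position x.

    The interface is shallowest at `center` and sinks by up to `bulge`
    pixels away from it. (Hypothetical parameterization.)
    """
    return apex_depth + bulge * (1.0 - math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2)))


def make_label_map(width, height, apex_depths, bulge=20.0):
    """Return labels[z][x]: 0 for background, 1..5 for the five layers.

    `apex_depths` holds six interface depths (shallow to deep) bounding
    the five layers.
    """
    center = (width - 1) / 2.0
    sigma = width / 4.0
    labels = []
    for z in range(height):
        row = []
        for x in range(width):
            crossed = sum(
                1 for d in apex_depths
                if gaussian_surface(x, d, bulge, center, sigma) <= z
            )
            # Pixels below the last interface are background again.
            row.append(0 if crossed == len(apex_depths) else crossed)
        labels.append(row)
    return labels


labels = make_label_map(64, 128, [20, 30, 35, 60, 65, 75])
```

Because the labels come from the same surfaces that would define the simulated tissue, they are exact by construction and need no manual annotation.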

Wide-field high-resolution microscopy via high-speed galvo scanning and real-time mosaicking

Feb 02, 2026

Abstract: Wide-field high-resolution microscopy requires fast scanning and accurate image mosaicking to cover large fields of view without compromising image quality. However, conventional galvanometric scanning, particularly under sinusoidal driving, can introduce nonuniform spatial sampling, leading to geometric inconsistencies and brightness variations across the scanned field. To address these challenges, we present an image mosaicking framework for wide-field microscopic imaging that is applicable to both linear and sinusoidal galvanometric scanning strategies. The proposed approach combines a translation-based geometric mosaicking model with region-of-interest (ROI) based brightness correction and seam-aware feathering to improve radiometric consistency across large fields of view. The method relies on calibrated scan parameters and synchronized scan-camera control, without requiring image-content-based registration. Using the proposed framework, wide-field mosaicked images were successfully reconstructed under both linear and sinusoidal scanning strategies, achieving a field of view of up to $2.5 \times 2.5~\mathrm{cm}^2$ with a total acquisition time of approximately $6~\mathrm{s}$ per dataset. Quantitative evaluation shows that both scanning strategies demonstrate improved image quality, including enhanced brightness uniformity, increased contrast-to-noise ratio (CNR), and reduced seam-related artifacts after image processing, while preserving a lateral resolution of $7.81~\mu\mathrm{m}$. Overall, the presented framework provides a practical and efficient solution for scan-based wide-field microscopic mosaicking.
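The nonuniform sampling mentioned for sinusoidal driving follows from simple geometry: if frames are triggered at uniform time intervals while the mirror position follows a sine, tiles bunch up near the turning points. The sketch below illustrates this with normalized positions; the function names and the choice of 11 samples are illustrative assumptions, not the authors' calibration procedure.

```python
import math


def sinusoidal_sample_positions(n_samples, amplitude=1.0):
    """Positions visited over one half-period of a sinusoidal sweep
    (from -amplitude to +amplitude) with uniform-in-time triggering."""
    return [
        amplitude * math.sin(-math.pi / 2 + math.pi * i / (n_samples - 1))
        for i in range(n_samples)
    ]


def linear_sample_positions(n_samples, amplitude=1.0):
    """Positions for an ideal linear ramp over the same range."""
    return [-amplitude + 2.0 * amplitude * i / (n_samples - 1) for i in range(n_samples)]


pos = sinusoidal_sample_positions(11)
spacing = [b - a for a, b in zip(pos, pos[1:])]
# Spacing is smallest at the edges of the sweep and largest at the center.
print(round(spacing[0], 3), round(spacing[len(spacing) // 2], 3))
```

Knowing the drive waveform, a mosaicking step can place each tile at its computed position rather than assuming equal spacing, which is consistent with the abstract's reliance on calibrated scan parameters instead of image-content-based registration.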

End-to-end reconstruction of OCT optical properties and speckle-reduced structural intensity via physics-based learning

Feb 02, 2026

Abstract: Inverse scattering in optical coherence tomography (OCT) seeks to recover both structural images and intrinsic tissue optical properties, including refractive index, scattering coefficient, and anisotropy. This inverse problem is challenging due to attenuation, speckle noise, and strong coupling among parameters. We propose a regularized end-to-end deep learning framework that jointly reconstructs optical parameter maps and speckle-reduced OCT structural intensity for layer visualization. Trained with Monte Carlo-simulated ground truth, our network incorporates a physics-based OCT forward model that generates predicted signals from the estimated parameters, providing physics-consistent supervision for parameter recovery and artifact suppression. Experiments on the synthetic corneal OCT dataset demonstrate robust optical map recovery under noise, improved resolution, and enhanced structural fidelity. This approach enables quantitative multi-parameter tissue characterization and highlights the benefit of combining physics-informed modeling with deep learning for computational OCT.

PSI3D: Plug-and-Play 3D Stochastic Inference with Slice-wise Latent Diffusion Prior

Dec 20, 2025

Abstract: Diffusion models are highly expressive image priors for Bayesian inverse problems. However, most diffusion models cannot operate on large-scale, high-dimensional data due to high training and inference costs. In this work, we introduce a plug-and-play algorithm for 3D stochastic inference with a latent diffusion prior (PSI3D) to address massive ($1024\times 1024\times 128$) volumes. Specifically, we formulate a Markov chain Monte Carlo approach to reconstruct each two-dimensional (2D) slice by sampling from a 2D latent diffusion model. To enhance inter-slice consistency, we also incorporate total variation (TV) regularization stochastically along the concatenation axis. We evaluate our method on optical coherence tomography (OCT) super-resolution. Our method significantly improves reconstruction quality for large-scale scientific imaging compared to traditional and learning-based baselines, while providing robust and credible reconstructions.
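For readers unfamiliar with total variation along a stacking axis, the sketch below computes the anisotropic TV of a small volume between adjacent slices only. It assumes the volume is stored as nested lists indexed `volume[k][i][j]` with `k` as the slice (concatenation) axis; the function name is hypothetical, and the stochastic way PSI3D applies this term during sampling is not shown.

```python
def tv_along_slices(volume):
    """Sum of absolute differences between corresponding pixels of
    adjacent slices, i.e. anisotropic TV along the slice axis only."""
    total = 0.0
    for slice_a, slice_b in zip(volume, volume[1:]):
        for row_a, row_b in zip(slice_a, slice_b):
            for va, vb in zip(row_a, row_b):
                total += abs(vb - va)
    return total


flat = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(3)]   # identical slices
step = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[1.0, 1.0], [1.0, 1.0]],                          # one abrupt change
]
print(tv_along_slices(flat), tv_along_slices(step))
```

Penalizing this quantity favors volumes whose slices change gradually, which is the inter-slice consistency the abstract refers to when each slice is otherwise reconstructed independently.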

Super-Resolution Optical Coherence Tomography Using Diffusion Model-Based Plug-and-Play Priors

May 20, 2025

Abstract: We propose an OCT super-resolution framework based on a plug-and-play diffusion model (PnP-DM) to reconstruct high-quality images from sparse measurements (OCT B-mode corneal images). Our method formulates reconstruction as an inverse problem, combining a diffusion prior with Markov chain Monte Carlo sampling for efficient posterior inference. We collect high-speed under-sampled B-mode corneal images and apply a deep learning-based up-sampling pipeline to build realistic training pairs. Evaluations on in vivo and ex vivo fish-eye corneal models show that PnP-DM outperforms conventional 2D-UNet baselines, producing sharper structures and better noise suppression. This approach advances high-fidelity OCT imaging in high-speed acquisition for clinical applications.

Kalman filter/deep-learning hybrid automatic boundary tracking of optical coherence tomography data for deep anterior lamellar keratoplasty (DALK)

Jan 25, 2025

Abstract: Deep anterior lamellar keratoplasty (DALK) is a highly challenging partial-thickness cornea transplant surgery that replaces the anterior cornea above Descemet's membrane (DM) with a donor cornea. In our previous work, we proposed the design of an optical coherence tomography (OCT) sensor-integrated needle to acquire real-time M-mode images that provide depth feedback for OCT-guided needle insertion during Big Bubble DALK procedures. Machine learning and deep learning techniques were applied to M-mode images to automatically identify the DM in OCT M-scan data. However, such segmentation methods often produce inconsistent or jagged segmentation of the DM, which reduces the model accuracy. Here we present a Kalman filter-based OCT M-scan boundary tracking algorithm in addition to AI-based precise needle guidance to improve automatic DM segmentation for OCT-guided DALK procedures. By using the Kalman filter, the proposed method generates a smoother layer segmentation result from OCT M-mode images for more accurate tracking of the DM layer and epithelium. Initial ex vivo testing demonstrates that the proposed approach significantly increases the segmentation accuracy compared to conventional methods without the Kalman filter. Our proposed model can provide more consistent and precise depth sensing results, which have great potential to improve surgical safety and ultimately contribute to better patient outcomes.
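As a rough illustration of how a Kalman filter can smooth a jagged boundary trace, the sketch below runs a scalar random-walk Kalman filter over a sequence of per-A-line boundary depths. The state model, the variance values, and the function name `kalman_smooth_boundary` are assumptions chosen for the example; the abstract does not specify the authors' state or noise models.

```python
def kalman_smooth_boundary(depths, process_var=1.0, meas_var=25.0):
    """Filter a sequence of boundary depths (pixels) with a scalar
    random-walk Kalman filter. Parameter values are illustrative."""
    if not depths:
        return []
    x = float(depths[0])   # state estimate: boundary depth
    p = meas_var           # variance of the estimate
    filtered = [x]
    for z in depths[1:]:
        # Predict: the random-walk model keeps the state, uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        filtered.append(x)
    return filtered


raw = [100, 101, 99, 130, 100, 102, 98, 101]   # one spurious jump at index 3
smooth = kalman_smooth_boundary(raw)
print([round(v, 1) for v in smooth])
```

With a measurement variance larger than the process variance, the filter trusts the running estimate more than any single segmentation output, so isolated jumps such as the one at index 3 are strongly attenuated.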

RSGaussian: 3D Gaussian Splatting with LiDAR for Aerial Remote Sensing Novel View Synthesis

Dec 24, 2024

Abstract: This study presents RSGaussian, an innovative novel view synthesis (NVS) method for aerial remote sensing scenes that incorporates LiDAR point clouds as constraints into the 3D Gaussian Splatting method. This ensures that Gaussians grow and split along geometric benchmarks, addressing overgrowth and floater issues. Additionally, the approach introduces coordinate transformations with distortion parameters for camera models to achieve pixel-level alignment between LiDAR point clouds and 2D images, facilitating heterogeneous data fusion and achieving the high-precision geo-alignment required in aerial remote sensing. Depth and plane consistency losses are incorporated into the loss function to guide Gaussians towards real depth and plane representations, significantly improving depth estimation accuracy. Experimental results indicate that our approach achieves novel view synthesis that balances photo-realistic visual quality and high-precision geometric estimation on aerial remote sensing datasets. Finally, we have also established and open-sourced a dense LiDAR point cloud dataset along with its corresponding aerial multi-view images, AIR-LONGYAN.

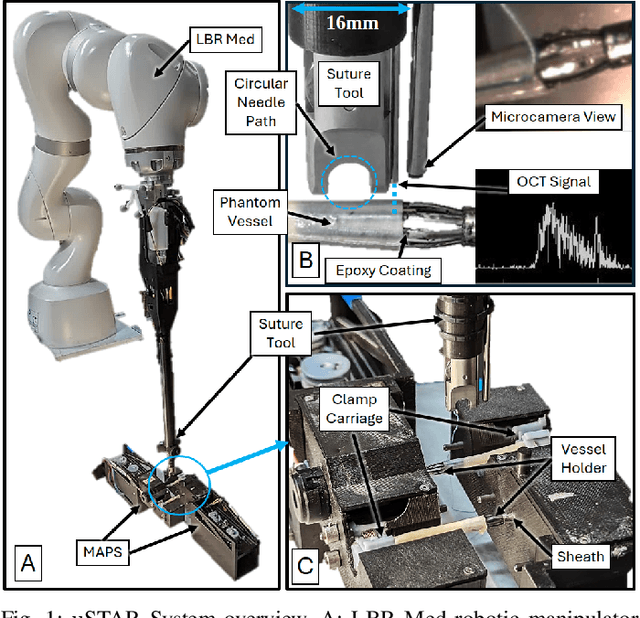

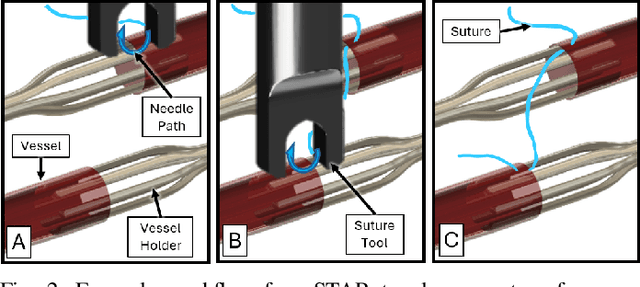

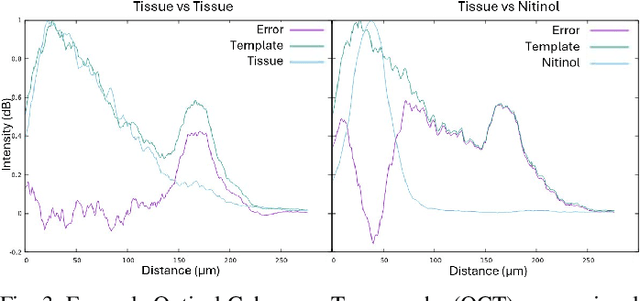

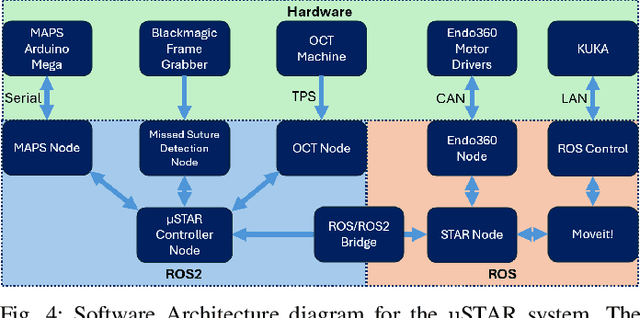

Autonomous Robotic System with Optical Coherence Tomography Guidance for Vascular Anastomosis

Oct 10, 2024

Abstract: Vascular anastomosis, the surgical connection of blood vessels, is essential in procedures such as organ transplants and reconstructive surgeries. The precision required limits accessibility due to the extensive training needed, with manual suturing leading to variable outcomes and revision rates up to 7.9%. Existing robotic systems, while promising, are either fully teleoperated or lack the capabilities necessary for autonomous vascular anastomosis. We present the Micro Smart Tissue Autonomous Robot (micro-STAR), an autonomous robotic system designed to perform vascular anastomosis on small-diameter vessels. The micro-STAR system integrates a novel suturing tool equipped with an Optical Coherence Tomography (OCT) fiber-optic sensor and a microcamera, enabling real-time tissue detection and classification. Our system autonomously places sutures and manipulates tissue with minimal human intervention. In an ex vivo study, micro-STAR achieved outcomes competitive with experienced surgeons in terms of leak pressure, lumen reduction, and suture placement variation, completing 90% of sutures without human intervention. This represents the first instance of a robotic system autonomously performing vascular anastomosis on real tissue, offering significant potential for improving surgical precision and expanding access to high-quality care.
