Yanhai Gan

Explore the Ideology of Deep Learning in ENSO Forecasts

Jan 05, 2026

Abstract: The El Niño-Southern Oscillation (ENSO) exerts a profound influence on global climate variability, yet its prediction remains a grand challenge. Recent advances in deep learning have significantly improved forecasting skill, but the opacity of these models hampers scientific trust and operational deployment. Here, we introduce a mathematically grounded interpretability framework based on bounded variation functions. By rescuing the "dead" neurons from the saturation zone of the activation function, we enhance the model's expressive capacity. Our analysis reveals that ENSO predictability emerges dominantly from the tropical Pacific, with contributions from the Indian and Atlantic Oceans, consistent with physical understanding. Controlled experiments affirm the robustness of our method and its alignment with established predictors. Notably, we probe the persistent Spring Predictability Barrier (SPB), finding that despite expanded sensitivity during spring, predictive performance declines, likely due to suboptimal variable selection. These results suggest that incorporating additional ocean-atmosphere variables may help transcend SPB limitations and advance long-range ENSO prediction.

Frequency-Compensated Network for Daily Arctic Sea Ice Concentration Prediction

Apr 23, 2025

Abstract: Accurately forecasting sea ice concentration (SIC) in the Arctic is critical to global ecosystem health and navigation safety. However, current methods still face two challenges: 1) they rarely explore the long-term feature dependencies in the frequency domain; 2) they can hardly preserve high-frequency details, and changes in the marginal area of the sea ice cannot be accurately captured. To this end, we present a Frequency-Compensated Network (FCNet) for Arctic SIC prediction on a daily basis. In particular, we design a dual-branch network, including branches for frequency feature extraction and convolutional feature extraction. For frequency feature extraction, we design an adaptive frequency filter block, which integrates trainable layers with Fourier-based filters. By adding frequency features, the FCNet can achieve refined prediction of edges and details. For convolutional feature extraction, we propose a high-frequency enhancement block to separate high- and low-frequency information. Moreover, high-frequency features are enhanced via channel-wise attention, and a temporal attention unit is employed for low-frequency feature extraction to capture long-range sea ice changes. Extensive experiments are conducted on a satellite-derived daily SIC dataset, and the results verify the effectiveness of the proposed FCNet. Our code and data will be made publicly available at https://github.com/oucailab/FCNet.
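The separation of high- and low-frequency information mentioned in this abstract can be illustrated with a fixed Fourier mask. The following is only a minimal NumPy sketch, not FCNet's adaptive (trainable) filter block: the function name `split_frequencies` and the `cutoff` parameter are hypothetical, and the hard circular mask is an assumption chosen for simplicity.

```python
import numpy as np


def split_frequencies(field, cutoff):
    """Split a 2-D field into low- and high-frequency parts.

    `cutoff` is a hypothetical radius in cycles per sample (0 to about 0.7);
    frequencies at or below it go to the low-frequency part.
    """
    # Frequency coordinates of every FFT bin along each axis.
    fy = np.fft.fftfreq(field.shape[0])[:, None]
    fx = np.fft.fftfreq(field.shape[1])[None, :]
    low_mask = np.sqrt(fx**2 + fy**2) <= cutoff

    # Keep only the low-frequency bins and transform back.
    spectrum = np.fft.fft2(field)
    low = np.fft.ifft2(spectrum * low_mask).real

    # The residual holds edges and fine detail.
    high = field - low
    return low, high


# Usage: a random array standing in for one daily SIC map.
rng = np.random.default_rng(0)
sic_map = rng.random((32, 32))
low, high = split_frequencies(sic_map, cutoff=0.1)
```

By construction `low + high` reproduces the input, so the two parts can be processed by separate branches and recombined without loss.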

LSKSANet: A Novel Architecture for Remote Sensing Image Semantic Segmentation Leveraging Large Selective Kernel and Sparse Attention Mechanism

Jun 03, 2024

Abstract: In this paper, we propose a large selective kernel and sparse attention network (LSKSANet) for remote sensing image semantic segmentation. The LSKSANet is a lightweight network that effectively combines convolution with sparse attention mechanisms. Specifically, we design a large selective kernel module to decompose the large kernel into a series of depth-wise convolutions with progressively increasing dilation rates, thereby expanding the receptive field without significantly increasing the computational burden. In addition, we introduce sparse attention to keep the most useful self-attention values for better feature aggregation. Experimental results on the Vaihingen and Potsdam datasets demonstrate the superior performance of the proposed LSKSANet over state-of-the-art methods.
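One common way to "keep the most useful self-attention values" is top-k sparsification of the attention scores. The sketch below assumes that variant; the abstract does not say which selection rule LSKSANet uses, and the function name `topk_sparse_attention` and the `top_k` parameter are hypothetical.

```python
import numpy as np


def topk_sparse_attention(q, k, v, top_k):
    """Scaled dot-product attention that keeps only the top_k scores per query.

    q: (num_queries, dim), k: (num_keys, dim), v: (num_keys, dim_v).
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])

    # Per-row threshold: the top_k-th largest score (ties may keep extra keys).
    kth = np.partition(scores, -top_k, axis=-1)[:, -top_k][:, None]
    masked = np.where(scores >= kth, scores, -np.inf)

    # Numerically stable softmax; masked entries get exactly zero weight.
    masked -= masked.max(axis=-1, keepdims=True)
    weights = np.exp(masked)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


# Usage with random stand-in features.
rng = np.random.default_rng(0)
q = rng.standard_normal((4, 8))
k = rng.standard_normal((6, 8))
v = rng.standard_normal((6, 3))
out, weights = topk_sparse_attention(q, k, v, top_k=2)
```

Each query then aggregates values from only `top_k` keys, which is the sense in which the attention map is sparse.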

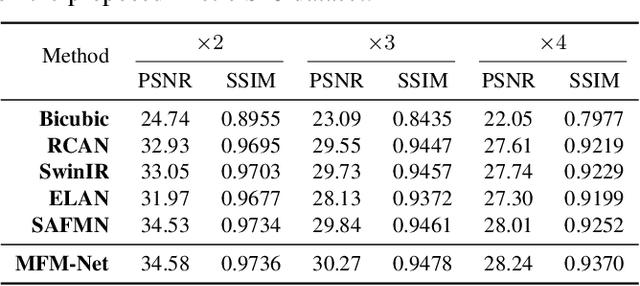

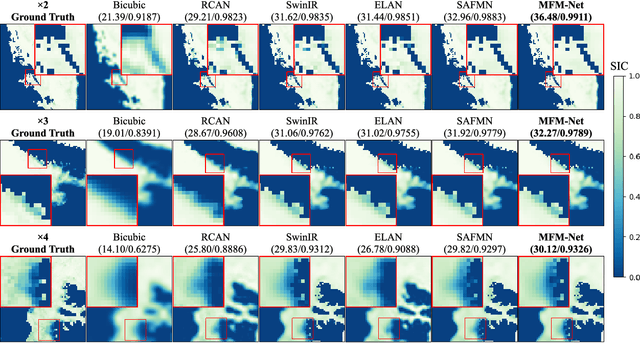

Arctic Sea Ice Image Super-Resolution Based on Multi-Scale Convolution and Dual-Gating Mechanism

Jun 03, 2024

Abstract: Arctic Sea Ice Concentration (SIC) is the ratio of ice-covered area to the total sea area of the Arctic Ocean, which is a key indicator for maritime activities. Passive microwave images are commonly used to display SIC, but they have low spatial resolution, and most existing super-resolution methods for Arctic SIC neither take the integration of spatial and channel features into account nor effectively integrate multi-scale features. To overcome these issues, we propose MFM-Net for Arctic SIC super-resolution, which concurrently aggregates multi-scale information while integrating spatial and channel features. Extensive experiments on the Arctic SIC dataset from the AMSR-E/AMSR-2 SIC DT-ASI products from Ocean University of China validate the effectiveness of the proposed MFM-Net.

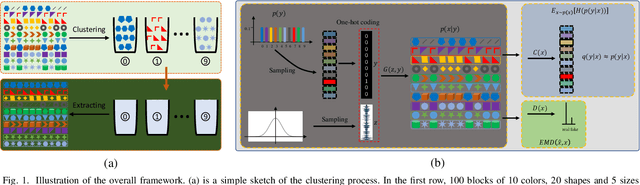

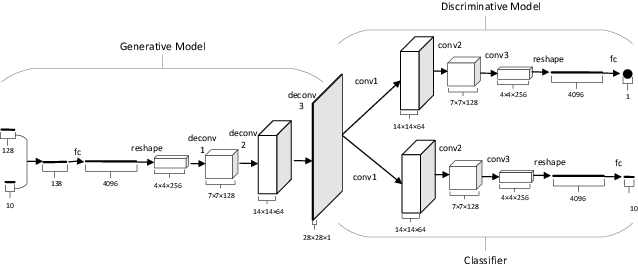

Learning the Precise Feature for Cluster Assignment

Jun 11, 2021

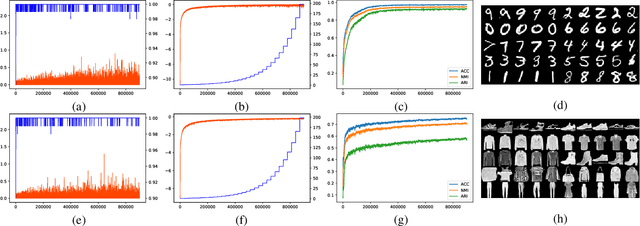

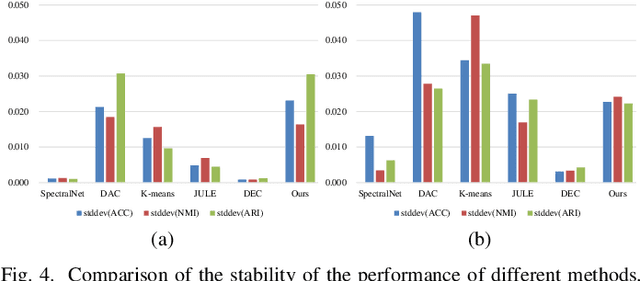

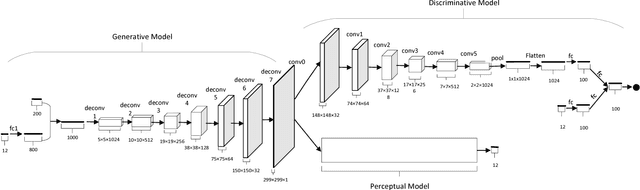

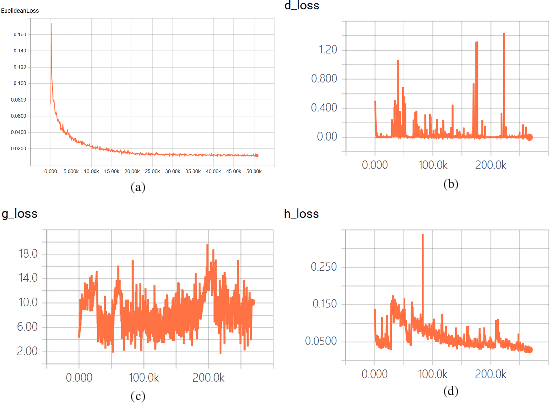

Abstract: Clustering is one of the fundamental tasks in computer vision and pattern recognition. Recently, deep clustering methods (algorithms based on deep learning) have attracted wide attention with their impressive performance. Most of these algorithms combine deep unsupervised representation learning with standard clustering. However, the separation of representation learning and clustering leads to suboptimal solutions because the two-stage strategy prevents representation learning from adapting to subsequent tasks (e.g., clustering according to specific cues). To overcome this issue, efforts have been made in the dynamic adaptation of representation and cluster assignment, whereas current state-of-the-art methods suffer from heuristically constructed objectives with representation and cluster assignment alternately optimized. To further standardize the clustering problem, we audaciously formulate the objective of clustering as finding a precise feature as the cue for cluster assignment. Based on this, we propose a general-purpose deep clustering framework which radically integrates representation learning and clustering into a single pipeline for the first time. The proposed framework exploits the powerful ability of recently developed generative models for learning intrinsic features, and imposes an entropy minimization on the distribution of the cluster assignment by a dedicated variational algorithm. Experimental results show that the performance of the proposed method is superior to, or at least comparable to, the state-of-the-art methods on the handwritten digit recognition, fashion recognition, face recognition and object recognition benchmark datasets.

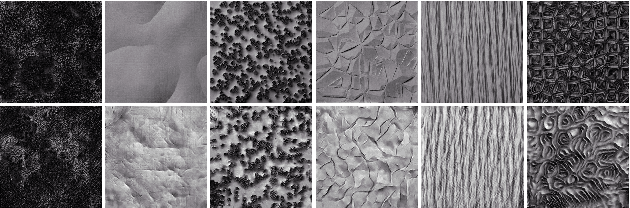

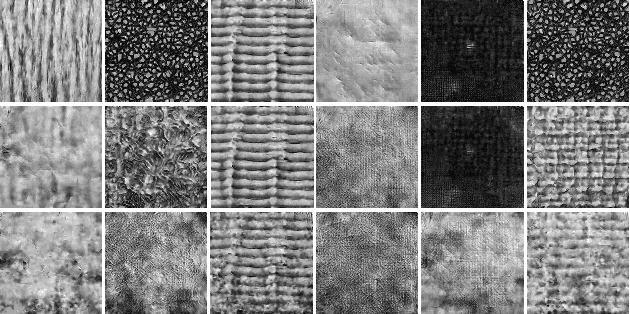

Perception Driven Texture Generation

Mar 24, 2017

Abstract: This paper investigates a novel task of generating texture images from perceptual descriptions. Previous work on texture generation focused on either synthesis from examples or generation from procedural models. Generating textures from perceptual attributes has not been well studied yet. Meanwhile, perceptual attributes, such as directionality, regularity and roughness, are important factors for human observers to describe a texture. In this paper, we propose a joint deep network model that combines adversarial training and perceptual feature regression for texture generation, while only random noise and user-defined perceptual attributes are required as input. In this model, a pre-trained convolutional neural network is integrated with the adversarial framework, which can drive the generated textures to possess given perceptual attributes. An important aspect of the proposed model is that, if we change one of the input perceptual features, the corresponding appearance of the generated textures will also change. We design several experiments to validate the effectiveness of the proposed method. The results show that the proposed method can produce high-quality texture images with desired perceptual properties.

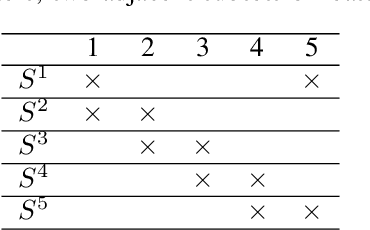

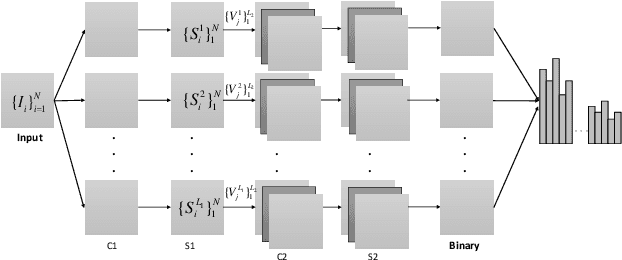

A PCA-Based Convolutional Network

May 14, 2015

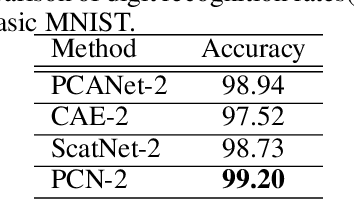

Abstract: In this paper, we propose a novel unsupervised deep learning model, called PCA-based Convolutional Network (PCN). The architecture of PCN is composed of several feature extraction stages and a nonlinear output stage. Particularly, each feature extraction stage includes two layers: a convolutional layer and a feature pooling layer. In the convolutional layer, the filter banks are simply learned by PCA. In the nonlinear output stage, binary hashing is applied. For the higher convolutional layers, the filter banks are learned from the feature maps that were obtained in the previous stage. To test PCN, we conducted extensive experiments on some challenging tasks, including handwritten digit recognition, face recognition and texture classification. The results show that PCN performs competitively with or even better than state-of-the-art deep learning models. More importantly, since there is no back propagation for supervised finetuning, PCN is much more efficient than existing deep networks.
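Learning a convolutional filter bank by PCA can be sketched as follows: collect image patches, compute their covariance, and reshape the leading eigenvectors into filters. This is a minimal NumPy illustration under stated assumptions, not the paper's implementation: the function name `learn_pca_filters` is hypothetical, and subtracting each patch's mean before PCA is an assumed preprocessing step that the abstract does not specify.

```python
import numpy as np


def learn_pca_filters(images, patch_size, num_filters):
    """Learn convolution filters as the leading principal components of patches.

    images: (N, H, W) array. Returns (num_filters, patch_size, patch_size).
    """
    # Every overlapping patch: shape (N, H-p+1, W-p+1, p, p).
    windows = np.lib.stride_tricks.sliding_window_view(
        images, (patch_size, patch_size), axis=(1, 2)
    )
    patches = windows.reshape(-1, patch_size * patch_size)

    # Assumption: remove each patch's mean so filters respond to structure.
    patches = patches - patches.mean(axis=1, keepdims=True)

    # Eigen-decomposition of the patch covariance (eigh sorts ascending).
    cov = patches.T @ patches / patches.shape[0]
    _, eigvecs = np.linalg.eigh(cov)
    leading = eigvecs[:, ::-1][:, :num_filters]
    return leading.T.reshape(num_filters, patch_size, patch_size)


# Usage with random stand-in images.
rng = np.random.default_rng(0)
images = rng.standard_normal((8, 10, 10))
filters = learn_pca_filters(images, patch_size=3, num_filters=4)
```

For a higher stage, the same routine could be applied to the feature maps produced by the previous stage in place of `images`. No gradient computation is involved, which is consistent with the efficiency claim in the abstract.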
