Yang Chi

Representing Interlingual Meaning in Lexical Databases

Jan 22, 2023

Abstract: In today's multilingual lexical databases, the majority of the world's languages are under-represented. Beyond a mere issue of resource incompleteness, we show that existing lexical databases have structural limitations that result in a reduced expressivity on culturally-specific words and in mapping them across languages. In particular, the lexical meaning space of dominant languages, such as English, is represented more accurately while linguistically or culturally diverse languages are mapped in an approximate manner. Our paper assesses state-of-the-art multilingual lexical databases and evaluates their strengths and limitations with respect to their expressivity on lexical phenomena of linguistic diversity.

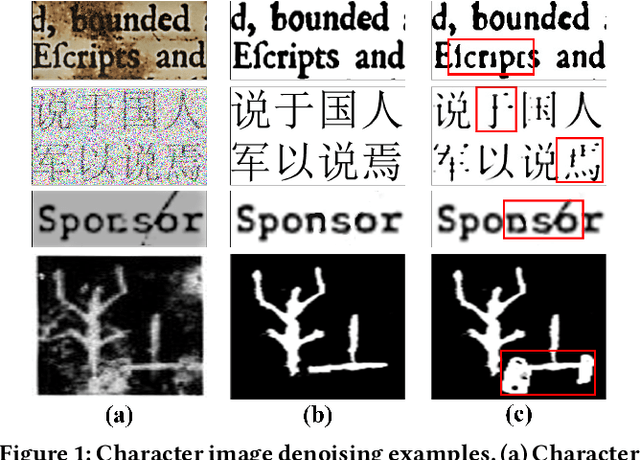

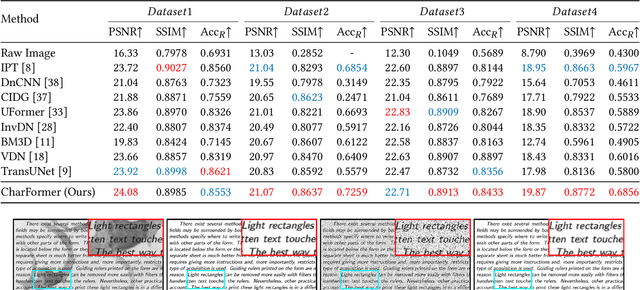

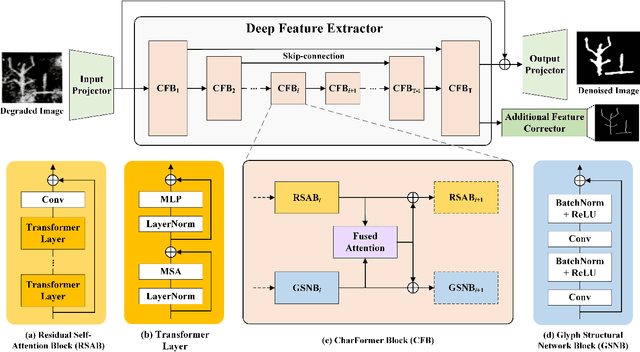

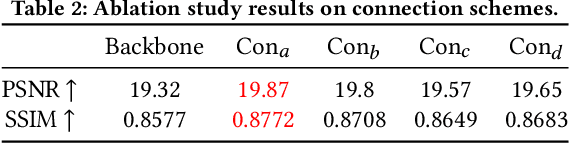

CharFormer: A Glyph Fusion based Attentive Framework for High-precision Character Image Denoising

Jul 19, 2022

Abstract: Degraded images commonly exist in the general sources of character images, leading to unsatisfactory character recognition results. Existing methods have dedicated efforts to restoring degraded character images. However, the denoising results obtained by these methods do not appear to improve character recognition performance. This is mainly because current methods only focus on pixel-level information and ignore critical features of a character, such as its glyph, resulting in character-glyph damage during the denoising process. In this paper, we introduce a novel generic framework based on glyph fusion and attention mechanisms, i.e., CharFormer, for precisely recovering character images without changing their inherent glyphs. Unlike existing frameworks, CharFormer introduces a parallel target task for capturing additional information and injecting it into the image denoising backbone, which will maintain the consistency of character glyphs during character image denoising. Moreover, we utilize attention-based networks for global-local feature interaction, which will help to deal with blind denoising and enhance denoising performance. We compare CharFormer with state-of-the-art methods on multiple datasets. The experimental results show the superiority of CharFormer quantitatively and qualitatively.
