Yajie Cui

Patch-wise Auto-Encoder for Visual Anomaly Detection

Aug 01, 2023

Abstract:Anomaly detection without priors on the anomalies is challenging. In unsupervised anomaly detection, the traditional auto-encoder (AE) tends to fail because it relies on the assumption that a model trained only on normal images will not be able to reconstruct abnormal images correctly. In contrast, we propose a novel patch-wise auto-encoder (Patch AE) framework, which aims to enhance the AE's ability to reconstruct anomalies instead of weakening it. Each image patch is reconstructed from the corresponding spatially distributed feature vector of the learned feature representation, i.e., patch-wise reconstruction, which ensures the anomaly sensitivity of the AE. Our method is simple and efficient. It advances the state-of-the-art performance on the MVTec AD benchmark, which demonstrates the effectiveness of our model and shows great potential for practical industrial applications.
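As a rough illustration of the reconstruction-based scoring that this abstract builds on, the sketch below computes a mean squared reconstruction error per non-overlapping patch. It is not the Patch AE architecture from the paper: the function name `patch_errors`, the patch size, and the toy 4x4 "image" are assumptions made only for this example, and the reconstruction is supplied by hand rather than produced by a trained model.

```python
def patch_errors(image, recon, patch):
    """Mean squared reconstruction error for each non-overlapping patch.

    `image` and `recon` are equally sized 2D lists of floats.
    Returns a 2D list with one score per patch (higher = more anomalous).
    """
    height, width = len(image), len(image[0])
    scores = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            squared = [
                (image[y][x] - recon[y][x]) ** 2
                for y in range(top, min(top + patch, height))
                for x in range(left, min(left + patch, width))
            ]
            row.append(sum(squared) / len(squared))
        scores.append(row)
    return scores


# Toy input: a flat image with a single bright "defect" pixel.
image = [[0.0] * 4 for _ in range(4)]
image[3][3] = 1.0
# Hypothetical reconstruction that misses the defect entirely.
recon = [[0.0] * 4 for _ in range(4)]

scores = patch_errors(image, recon, patch=2)
```

Only the bottom-right patch receives a non-zero score here, which is the kind of localized signal a patch-level anomaly map is meant to provide.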

Application-Driven AI Paradigm for Human Action Recognition

Sep 30, 2022

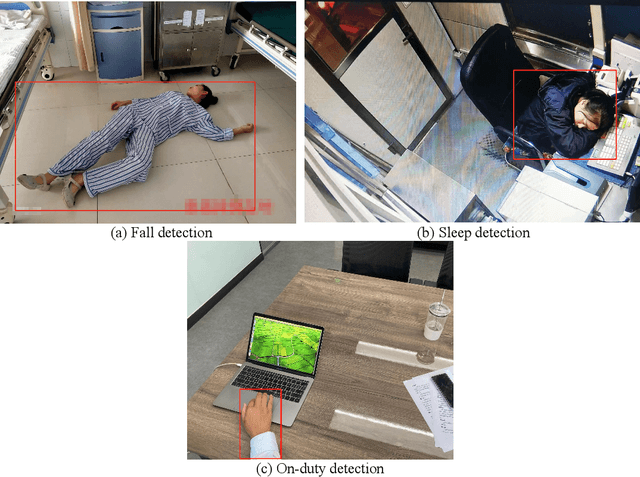

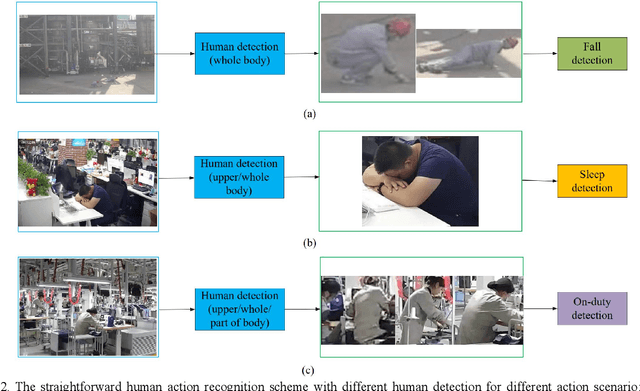

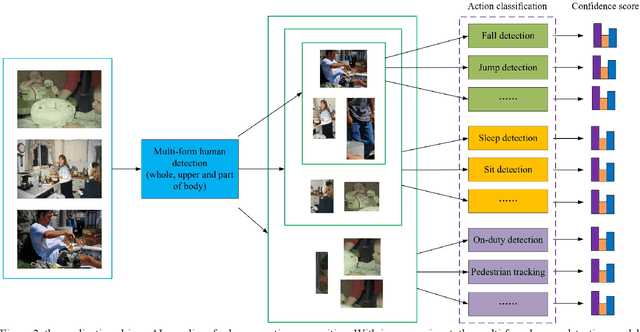

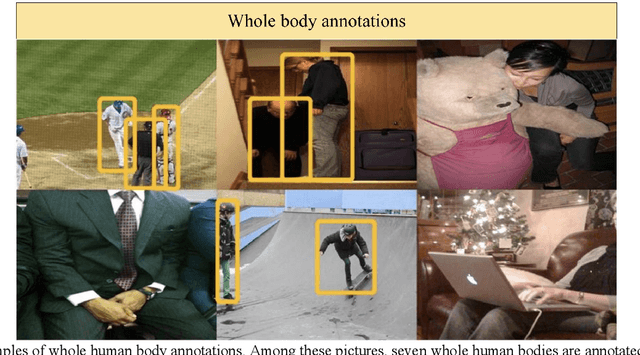

Abstract:Human action recognition in computer vision has been widely studied in recent years. However, most algorithms handle only one specific action, often at high computational cost. This is not suitable for practical applications, in which multiple actions must be identified at low computational cost. To meet various application scenarios, this paper presents a unified human action recognition framework composed of two modules, i.e., multi-form human detection and corresponding action classification. For the first module, an open-source dataset is constructed to train a multi-form human detection model that distinguishes a human being's whole body, upper body, or partial body; the subsequent action classification model is then used to recognize actions such as falling, sleeping, or being on duty. Experimental results show that the unified framework is effective for various application scenarios. It is expected to be a new application-driven AI paradigm for human action recognition.

Data-Centric AI Paradigm Based on Application-Driven Fine-grained Dataset Design

Sep 23, 2022

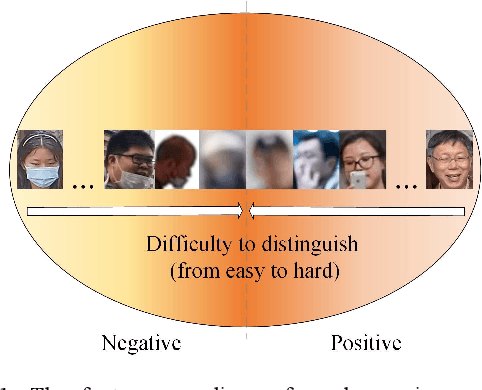

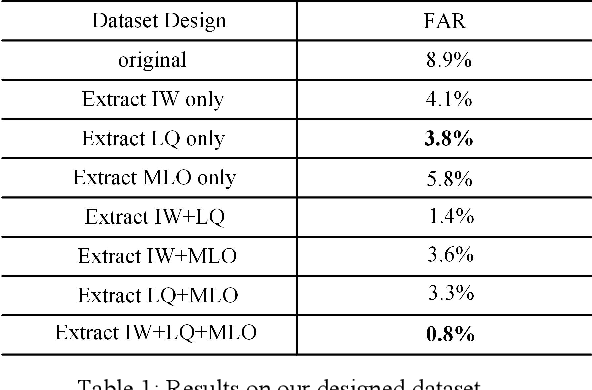

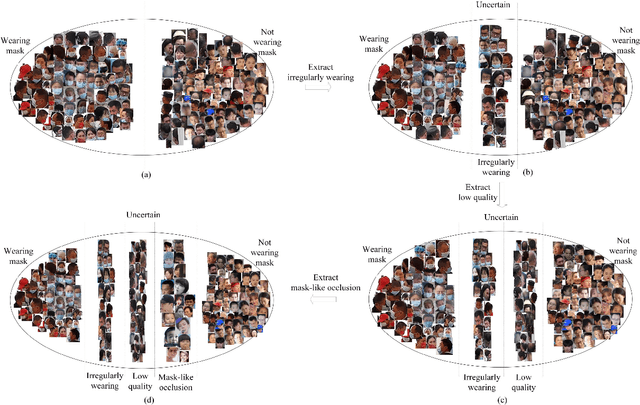

Abstract:Deep learning has a wide range of applications in industrial scenarios, but reducing false alarms (FA) remains a major difficulty. Academic work typically tackles this challenge by optimizing network architectures or network parameters while ignoring the essential characteristics of the data in application scenarios, which often results in increased FA in new scenarios. In this paper, we propose a novel paradigm for fine-grained design of datasets, driven by industrial applications. We flexibly select positive and negative sample sets according to the essential features of the data and the application requirements, and add the remaining samples to the training set as uncertainty classes. We collect more than 10,000 mask-wearing recognition samples covering various application scenarios as our experimental data. Compared with traditional data design methods, our method achieves better results and effectively reduces FA. We make all contributions available to the research community for broader use. The contributions will be available at https://github.com/huh30/OpenDatasets.
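The three-way split described in this abstract (positive set, negative set, and remaining samples kept as an uncertainty class) can be sketched as below. This is only a minimal illustration under stated assumptions: the fields `face_visible` and `mask_coverage`, the 0.9 threshold, and the helper names are hypothetical and are not taken from the paper or its dataset.

```python
def assign_class(sample, is_positive, is_negative):
    """Return 'positive', 'negative', or 'uncertain' for one sample.

    Samples matching neither rule are kept (not discarded) and
    labeled as an uncertainty class for training.
    """
    if is_positive(sample):
        return "positive"
    if is_negative(sample):
        return "negative"
    return "uncertain"


# Hypothetical selection rules for a mask-wearing task.
def clearly_masked(sample):
    return sample["face_visible"] and sample["mask_coverage"] >= 0.9


def clearly_unmasked(sample):
    return sample["face_visible"] and sample["mask_coverage"] == 0.0


# Hypothetical annotations; real data would come from a labeling tool.
samples = [
    {"id": "a", "face_visible": True, "mask_coverage": 1.0},
    {"id": "b", "face_visible": True, "mask_coverage": 0.0},
    {"id": "c", "face_visible": True, "mask_coverage": 0.4},
    {"id": "d", "face_visible": False, "mask_coverage": 0.0},
]

labels = {
    s["id"]: assign_class(s, clearly_masked, clearly_unmasked)
    for s in samples
}
```

In this toy data, the partially covered face (`c`) and the sample with no visible face (`d`) both fall into the uncertainty class rather than being forced into the positive or negative set.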

A Waste Copper Granules Rating System Based on Machine Vision

Jul 14, 2022

Abstract:In the field of waste copper granules recycling, engineers must identify all the different sorts of impurities in waste copper granules and estimate their mass proportions, relying on experience, before rating. This manual rating method is costly and lacks objectivity and comprehensiveness. To tackle this problem, we propose a waste copper granules rating system based on machine vision and deep learning. We first formulate the rating task as a 2D image recognition and purity regression task. Then we design a two-stage convolutional rating network to compute the mass purity and rating level of waste copper granules. Our rating network includes a segmentation network and a purity regression network, which respectively calculate the semantic segmentation heatmaps and purity results of the waste copper granules. After training the rating network on the augmented datasets, experiments on real waste copper granules demonstrate the effectiveness and superiority of the proposed network. Specifically, our system is superior to the manual method in terms of accuracy, effectiveness, robustness, and objectivity.

A Survey on Unsupervised Industrial Anomaly Detection Algorithms

Apr 28, 2022

Abstract:In line with the development of Industry 4.0, the field of surface defect detection is attracting more and more attention. Improving efficiency while saving labor costs has steadily become a matter of great concern in industry, where deep learning-based algorithms have performed better than traditional vision inspection methods in recent years. However, existing deep learning-based algorithms are biased towards supervised learning, which not only necessitates a huge amount of labeled data and a significant amount of labor, but is also inefficient and has certain limitations. In contrast, recent research shows that unsupervised learning has great potential for tackling the above disadvantages in visual anomaly detection. In this survey, we summarize current challenges and provide a thorough overview of recently proposed unsupervised algorithms for visual anomaly detection covering five categories, whose innovation points and frameworks are described in detail. Meanwhile, information on publicly available datasets containing surface image samples is provided. By comparing different classes of methods, the advantages and disadvantages of anomaly detection algorithms are summarized. It is expected to assist both the research community and industry in developing a broader and cross-domain perspective.

Deep Partial Multi-View Learning

Nov 12, 2020

Abstract:Although multi-view learning has made significant progress over the past few decades, it is still challenging due to the difficulty in modeling complex correlations among different views, especially under the context of view missing. To address the challenge, we propose a novel framework termed Cross Partial Multi-View Networks (CPM-Nets), which aims to fully and flexibly take advantage of multiple partial views. We first provide a formal definition of completeness and versatility for multi-view representation and then theoretically prove the versatility of the learned latent representations. For completeness, the task of learning latent multi-view representation is specifically translated to a degradation process by mimicking data transmission, such that the optimal tradeoff between consistency and complementarity across different views can be implicitly achieved. Equipped with an adversarial strategy, our model stably imputes missing views, so that information from all views of each sample is encoded into the latent representation to further enhance completeness. Furthermore, a nonparametric classification loss is introduced to produce structured representations and prevent overfitting, which endows the algorithm with promising generalization under view-missing cases. Extensive experimental results validate the effectiveness of our algorithm over existing state-of-the-art methods for classification, representation learning, and data imputation.
