Xutian Deng

Learning Autonomous Ultrasound via Latent Task Representation and Robotic Skills Adaptation

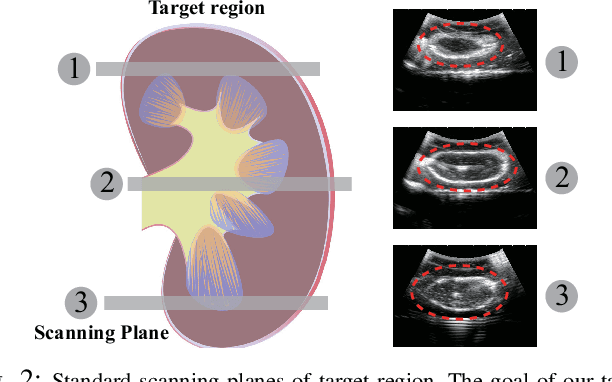

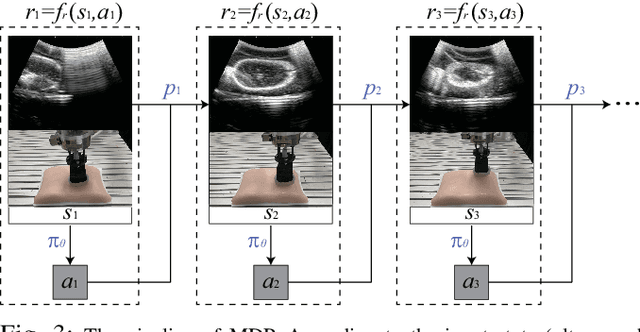

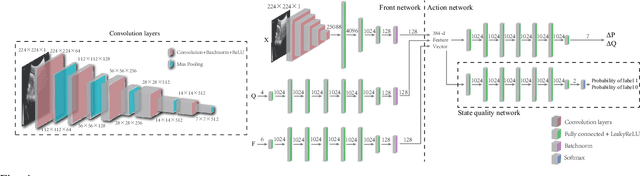

Jul 25, 2023

Abstract: As medical ultrasound becomes a prevailing examination approach, robotic ultrasound systems can facilitate the scanning process and relieve professional sonographers of repetitive and tedious work. Despite recent progress, enabling robots to accomplish the ultrasound examination autonomously remains a challenge, largely because of the lack of a proper task representation method and of an adaptation approach that generalizes learned skills across different patients. To solve these problems, we propose a latent task representation and a robotic skills adaptation for autonomous ultrasound. During the offline stage, the multimodal ultrasound skills are merged and encapsulated into a low-dimensional probability model through a fully self-supervised framework, which takes clinically demonstrated ultrasound images, probe orientations, and contact forces into account. During the online stage, the probability model selects and evaluates the optimal prediction. For unstable singularities, the adaptive optimizer fine-tunes them to nearby stable predictions in high-confidence regions. Experimental results show that the proposed approach can generate complex ultrasound strategies for diverse populations and achieve significantly better quantitative results than our previous method.

Learning Ultrasound Scanning Skills from Human Demonstrations

Nov 09, 2021

Abstract: Recently, the robotic ultrasound system has become an emerging topic owing to the widespread use of medical ultrasound. However, it is still challenging to model the ultrasound skill of an ultrasound physician and to transfer it. In this paper, we propose a learning-based framework to acquire ultrasound scanning skills from human demonstrations. First, the ultrasound scanning skills are encapsulated into a high-dimensional multi-modal model in terms of interactions among ultrasound images, the probe pose, and the contact force. The parameters of the model are learned using data collected from skilled sonographers' demonstrations. Second, a sampling-based strategy that uses the learned model is proposed to adjust the extracorporeal ultrasound scanning process and thereby guide a novice sonographer or a robot arm. Finally, the robustness of the proposed framework is validated with experiments on real data from sonographers.

Learning Robotic Ultrasound Scanning Skills via Human Demonstrations and Guided Explorations

Nov 02, 2021

Abstract: Medical ultrasound has become a routine examination approach and is widely adopted for different medical applications, so a robotic ultrasound system that performs the scanning autonomously is desirable. However, the ultrasound scanning skill is considerably complex and depends heavily on the experience of the ultrasound physician. In this paper, we propose a learning-based approach to learn robotic ultrasound scanning skills from human demonstrations. First, the robotic ultrasound scanning skill is encapsulated into a high-dimensional multi-modal model, which takes the ultrasound images, the pose/position of the probe, and the contact force into account. Second, we use imitation learning to train the multi-modal model with data collected from the demonstrations of experienced ultrasound physicians. Finally, a post-optimization procedure with guided explorations is proposed to further improve the performance of the learned model. Robotic experiments are conducted to validate the advantages of our proposed framework and the learned models.

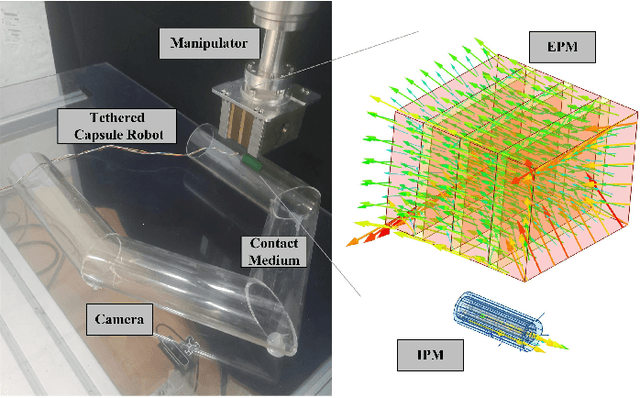

Learning Friction Model for Magnet-actuated Tethered Capsule Robot

Oct 01, 2021

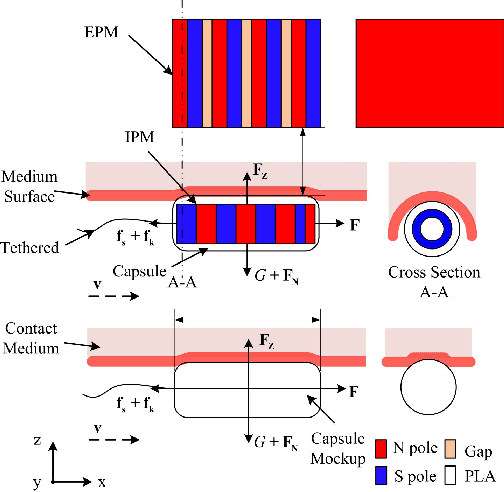

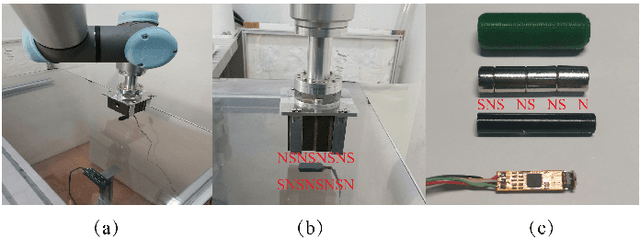

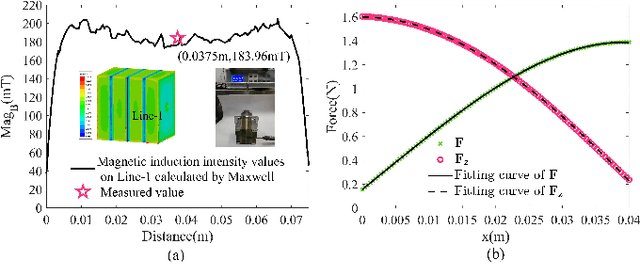

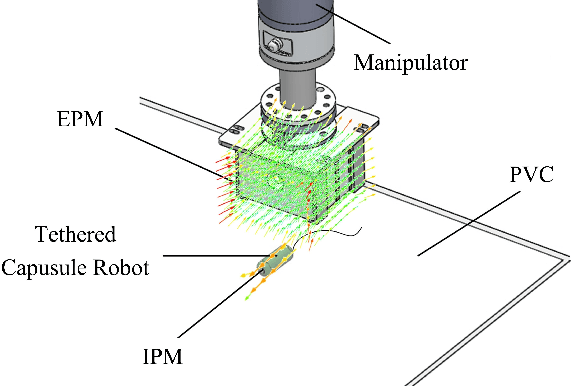

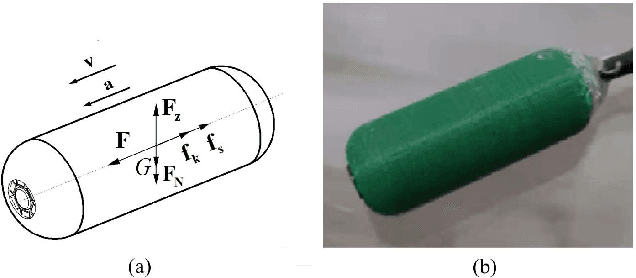

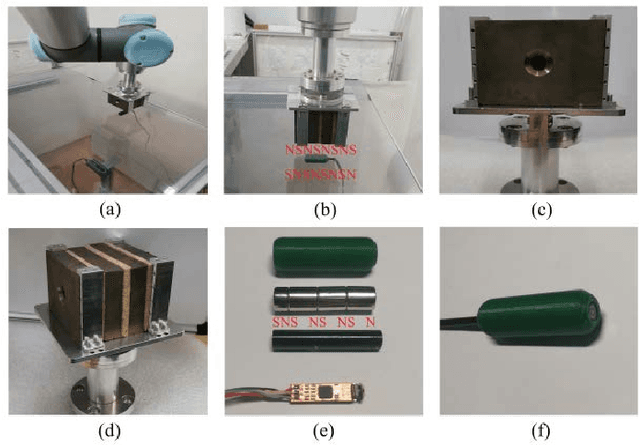

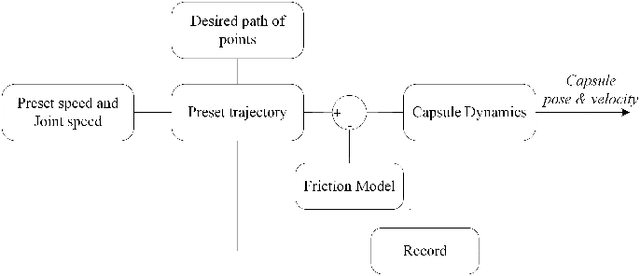

Abstract: The potential diagnostic applications of magnet-actuated capsules have greatly increased in recent years. Most of these applications demand accurate position control of the capsule. However, the friction between the robot and the environment, as well as the drag force from the tether, plays a significant role in the motion control of the capsule. Moreover, these forces, especially the friction force, are typically hard to model beforehand. In this paper, we first design a magnet-actuated tethered capsule robot in which the driving magnet is mounted on the end of a robotic arm. Then, we propose a learning-based approach to model the friction force between the capsule and the environment, with the goal of increasing the control accuracy of the whole system. Finally, several real-robot experiments demonstrate the effectiveness of our proposed approach.

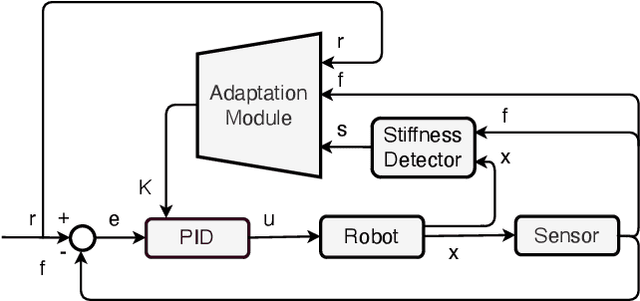

Learning Based Adaptive Force Control of Robotic Manipulation Based on Real-Time Object Stiffness Detection

Sep 14, 2021

Abstract: Force control is essential for medical robots when they touch and contact the patient's body. To increase the stability and efficiency of force control, an Adaption Module can be used to adjust the parameters for different contact situations. We propose an adaptive controller with an Adaption Module that produces control parameters based on force feedback and real-time stiffness detection. We develop methods for learning the optimal policies by value iteration and use the data generated from those policies to train the Adaption Module. We test this controller on different zones of a person's arm. All the parameters used in practice are learned from data. The experiments show that the proposed adaptive controller can exert various target forces on different zones of the arm with fast convergence and good stability.

Learning Friction Model for Tethered Capsule Robot

Aug 16, 2021

Abstract: With the potential applications of capsule robots in medical endoscopy, accurate dynamic control of the capsule robot is becoming more and more important. At the scale of a capsule robot, the friction between the capsule and the environment plays an essential role in the dynamic model and is usually difficult to model beforehand. In this paper, a tethered capsule robot system driven by a robot manipulator is built, in which a strong magnetic Halbach array is mounted on the robot's end-effector to adjust the state of the capsule. To increase the control accuracy, the friction between the capsule and the environment is learned from demonstrated trajectories. With the learned friction model, experimental results demonstrate an improvement of 5.6% in terms of tracking error.