Xinzheng Zhang

Progressive Multi-Level Alignments for Semi-Supervised Domain Adaptation SAR Target Recognition Using Simulated Data

Nov 07, 2024

Abstract:Recently, an intriguing research trend for automatic target recognition (ATR) from synthetic aperture radar (SAR) imagery has arisen: using simulated data to train ATR models is a feasible solution to the issue of inadequate measured data. To close the domain gap that exists between the real and simulated data, unsupervised domain adaptation (UDA) techniques are frequently exploited to construct ATR models. However, for UDA, the target domain lacks labeled data to direct the model training, posing a great challenge to ATR performance. To address this problem, a semi-supervised domain adaptation (SSDA) framework is proposed that adopts progressive multi-level alignments for simulated data-aided SAR ATR. First, a progressive wavelet transform data augmentation (PWTDA) is presented by analyzing the discrepancies between the wavelet decomposition sub-bands of images from the two domains, obtaining the domain-level alignment. Specifically, the domain gap is narrowed by mixing the wavelet transform high-frequency sub-band components. Second, we develop an asymptotic instance-prototype alignment (AIPA) strategy to push the source domain instances close to the corresponding target prototypes, aiming to achieve category-level alignment. Moreover, the consistency alignment is implemented by exploiting the strong-weak augmentation consistency of both individual samples and the multi-sample relationship, enhancing the generalization capability of the model. Extensive experiments on the Synthetic and Measured Paired Labeled Experiment (SAMPLE) dataset indicate that our approach obtains recognition accuracies of 99.63% and 98.91% in two common experimental settings with only one labeled sample per class of the target domain, outperforming the most advanced SSDA techniques.
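
The idea of mixing high-frequency wavelet sub-bands can be illustrated with a minimal sketch. It is not the authors' PWTDA implementation: it assumes a one-level Haar transform, assumes the source (simulated) image keeps its low-frequency band, and uses a hypothetical mixing weight `lam`. The function names are illustrative only, and sub-band naming conventions (LH versus HL) vary between libraries.

```python
def haar2d(img):
    """One-level orthonormal 2D Haar transform of an even-sized image (list of lists).

    Returns the sub-bands (LL, LH, HL, HH), each half the input size.
    """
    ll, lh, hl, hh = [], [], [], []
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2.0)  # low-frequency average
            rows[1].append((a - b + d - e) / 2.0)  # detail across columns
            rows[2].append((a + b - d - e) / 2.0)  # detail across rows
            rows[3].append((a - b - d + e) / 2.0)  # diagonal detail
        for band, row in zip((ll, lh, hl, hh), rows):
            band.append(row)
    return ll, lh, hl, hh


def inverse_haar2d(ll, lh, hl, hh):
    """Invert haar2d, rebuilding each 2x2 pixel block from its four coefficients."""
    img = []
    for r in range(len(ll)):
        top, bottom = [], []
        for c in range(len(ll[0])):
            s, h, v, x = ll[r][c], lh[r][c], hl[r][c], hh[r][c]
            top += [(s + h + v + x) / 2.0, (s - h + v - x) / 2.0]
            bottom += [(s + h - v - x) / 2.0, (s - h - v + x) / 2.0]
        img += [top, bottom]
    return img


def mix_high_frequency(source, target, lam):
    """Blend the three high-frequency sub-bands of two same-sized images.

    Assumption: the source image keeps its LL band; lam in [0, 1] is a
    hypothetical mixing weight (0 returns the source image unchanged).
    """
    s_ll, *s_high = haar2d(source)
    _, *t_high = haar2d(target)
    mixed = [
        [[(1.0 - lam) * s + lam * t for s, t in zip(s_row, t_row)]
         for s_row, t_row in zip(s_band, t_band)]
        for s_band, t_band in zip(s_high, t_high)
    ]
    return inverse_haar2d(s_ll, *mixed)
```

A "progressive" variant could, for example, vary `lam` over the course of training; that schedule is an assumption here and is not specified in the abstract.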

Energy Score-based Pseudo-Label Filtering and Adaptive Loss for Imbalanced Semi-supervised SAR target recognition

Nov 06, 2024

Abstract:Automatic target recognition (ATR) is an important use case for synthetic aperture radar (SAR) image interpretation. Recent years have seen significant advancements in SAR ATR technology based on semi-supervised learning. However, existing semi-supervised SAR ATR algorithms show low recognition accuracy in the case of class imbalance. This work offers an imbalanced semi-supervised SAR target recognition approach using dynamic energy scores and adaptive loss. First, an energy score-based method is developed to dynamically select unlabeled samples close to the training distribution as pseudo-labeled samples during training, ensuring pseudo-label reliability under long-tailed distributions. Second, loss functions suitable for class imbalance are proposed, including an adaptive margin perception loss and an adaptive hard triplet loss. The former offsets inter-class confusion of classifiers, alleviating the imbalance issue inherent in pseudo-label generation. The latter tackles the model's preference for the majority class by focusing on difficult samples during training. Experimental results on extremely imbalanced SAR datasets demonstrate that the proposed method performs well under the dual constraints of scarce labels and data imbalance, effectively overcoming the model bias caused by data imbalance and achieving high-precision target recognition.
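
As a rough illustration of energy score-based pseudo-label filtering, the sketch below uses the common free-energy definition E(x) = -T log Σ_k exp(z_k / T) over classifier logits, where lower energy suggests a sample closer to the training distribution. The abstract does not give the paper's exact score or its dynamic selection rule, so the fixed `threshold` and the function names are assumptions made for illustration.

```python
import math


def energy_score(logits, temperature=1.0):
    """Free-energy score -T * log(sum_k exp(z_k / T)); lower means more in-distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * log_sum_exp


def filter_pseudo_labels(batch_logits, threshold, temperature=1.0):
    """Return (sample_index, predicted_class) for samples with energy below threshold.

    Assumption: a fixed threshold stands in for the paper's dynamic selection.
    """
    selected = []
    for i, logits in enumerate(batch_logits):
        if energy_score(logits, temperature) < threshold:
            predicted = max(range(len(logits)), key=logits.__getitem__)
            selected.append((i, predicted))
    return selected
```

For example, `filter_pseudo_labels([[8.0, 0.1, 0.2], [0.3, 0.2, 0.1]], threshold=-5.0)` keeps only the first, confidently classified sample.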

Robust Unsupervised Small Area Change Detection from SAR Imagery Using Deep Learning

Nov 22, 2020

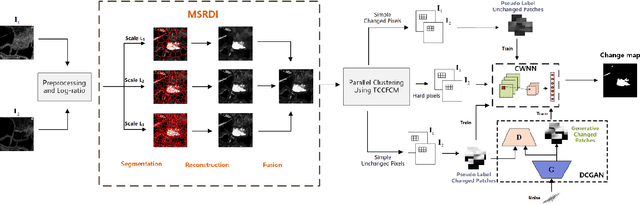

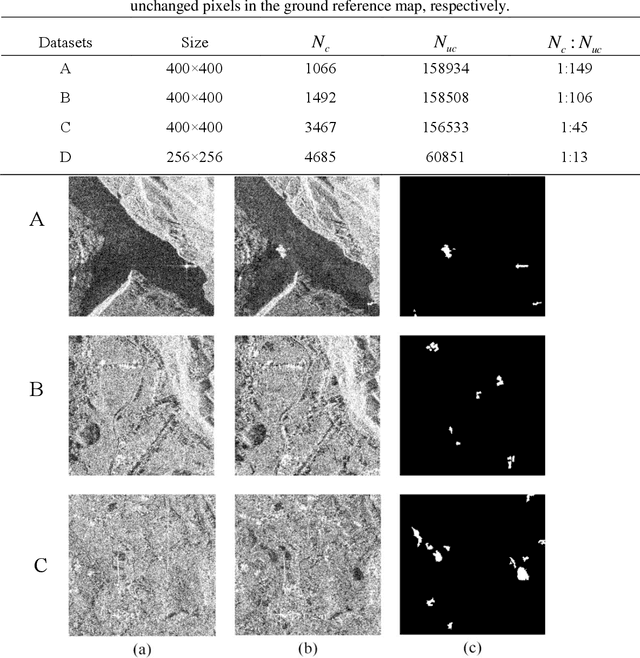

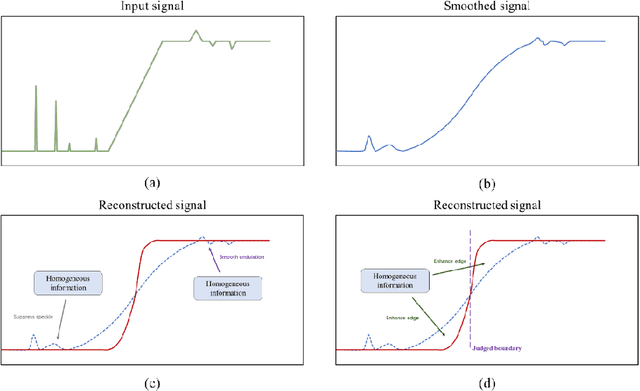

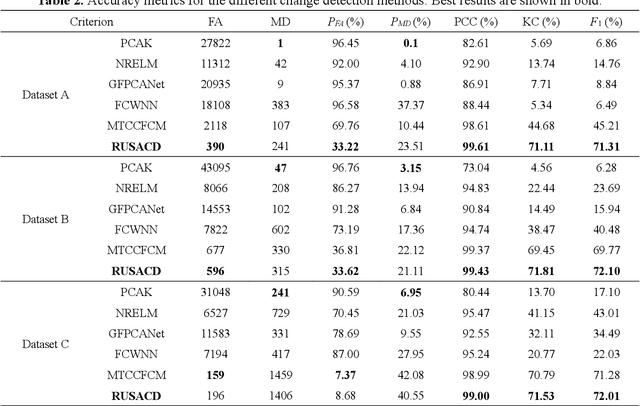

Abstract:Small area change detection from synthetic aperture radar (SAR) imagery is a highly challenging task. In this paper, a robust unsupervised approach is proposed for small area change detection from multi-temporal SAR images using deep learning. First, a multi-scale superpixel reconstruction method is developed to generate a difference image (DI), which can suppress the speckle noise effectively and enhance edges by exploiting local, spatially homogeneous information. Second, a two-stage centre-constrained fuzzy c-means clustering algorithm is proposed to divide the pixels of the DI into changed, unchanged and intermediate classes with a parallel clustering strategy. Image patches belonging to the first two classes are then constructed as pseudo-label training samples, and image patches of the intermediate class are treated as testing samples. Finally, a convolutional wavelet neural network (CWNN) is designed and trained to classify testing samples into changed or unchanged classes, coupled with a deep convolutional generative adversarial network (DCGAN) to increase the number of changed-class samples within the pseudo-label training set. Numerical experiments on four real SAR datasets demonstrate the validity and robustness of the proposed approach, achieving up to 99.61% accuracy for small area change detection.
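
The clustering step can be pictured with plain fuzzy c-means on difference-image intensities. The sketch below is standard FCM with three clusters, not the paper's two-stage centre-constrained variant; the evenly spaced initial centres, the fuzzifier `m = 2`, and the function name are assumptions made for illustration.

```python
def fuzzy_c_means_1d(values, n_clusters=3, m=2.0, n_iter=50):
    """Standard fuzzy c-means on scalar values (requires n_clusters >= 2).

    Returns (centres, labels), where labels[i] is the index of the cluster
    with the highest membership for values[i].
    """
    lo, hi = min(values), max(values)
    # Deterministic initialisation: centres evenly spaced over the value range.
    centres = [lo + (hi - lo) * k / (n_clusters - 1) for k in range(n_clusters)]
    eps = 1e-12  # avoids division by zero when a value coincides with a centre
    memberships = []
    for _ in range(n_iter):
        # Membership update: u_ik is proportional to d_ik^(-2 / (m - 1)).
        memberships = []
        for v in values:
            inv = [(abs(v - c) + eps) ** (-2.0 / (m - 1.0)) for c in centres]
            total = sum(inv)
            memberships.append([w / total for w in inv])
        # Centre update: mean of the values weighted by u_ik^m.
        centres = [
            sum((u[k] ** m) * v for u, v in zip(memberships, values))
            / sum(u[k] ** m for u in memberships)
            for k in range(n_clusters)
        ]
    labels = [max(range(n_clusters), key=u.__getitem__) for u in memberships]
    return centres, labels
```

With the centres initialised in ascending order, cluster 0 would typically collect low-difference (unchanged) pixels, cluster 2 high-difference (changed) pixels, and cluster 1 the intermediate pixels left for the classifier.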

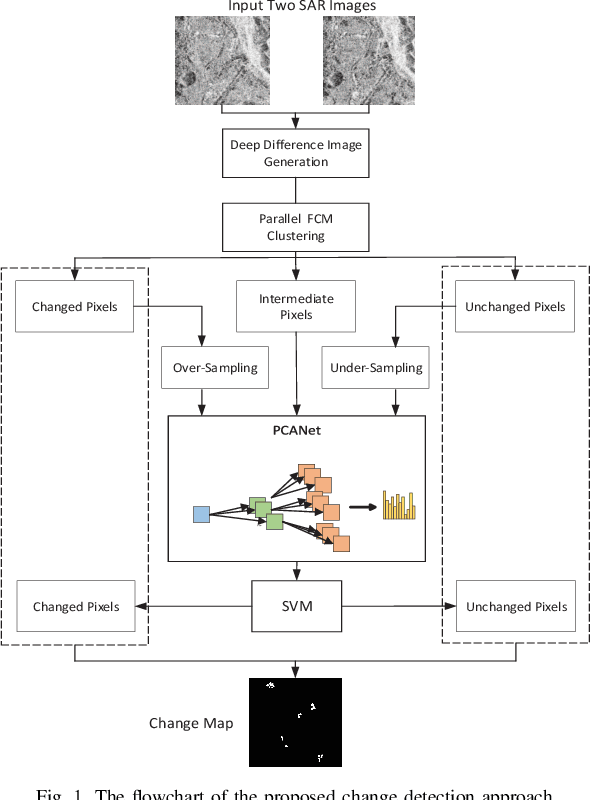

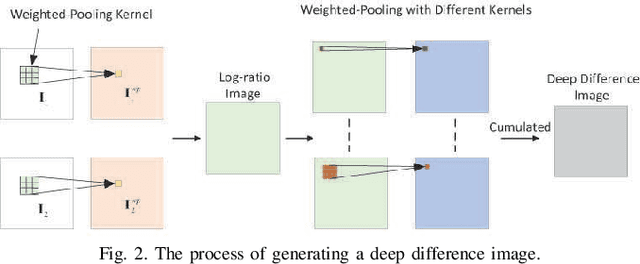

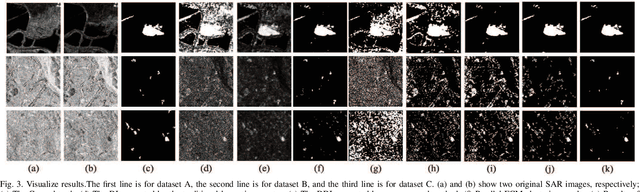

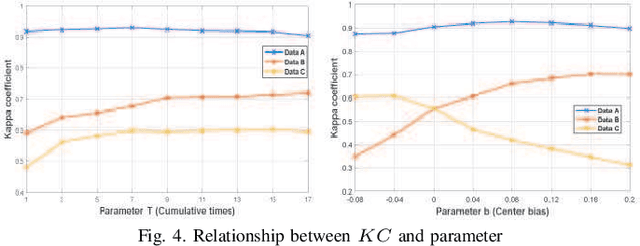

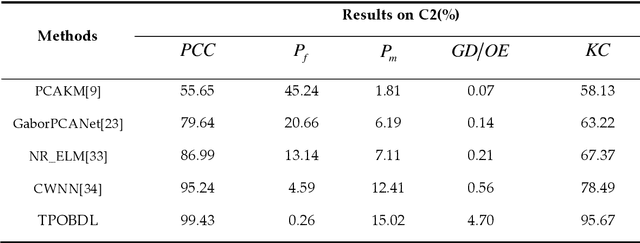

A Robust Imbalanced SAR Image Change Detection Approach Based on Deep Difference Image and PCANet

Mar 03, 2020

Abstract:In this research, a novel robust change detection approach is presented for imbalanced multi-temporal synthetic aperture radar (SAR) images based on deep learning. Our main contribution is a novel method for generating the difference image and a parallel fuzzy c-means (FCM) clustering method. The main steps of the proposed approach are as follows: 1) Inspired by convolution and pooling in deep learning, a deep difference image (DDI) is obtained based on parameterized pooling, leading to better speckle suppression and feature enhancement than traditional difference images. 2) Two sigmoid nonlinear mappings with different parameters are applied to the DDI to obtain two mapped DDIs. Parallel FCM is applied to these two mapped DDIs to obtain three types of pseudo-label pixels, namely changed pixels, unchanged pixels, and intermediate pixels. 3) A PCANet with a support vector machine (SVM) is trained to classify intermediate pixels as changed or unchanged. Three imbalanced multi-temporal SAR image sets are used for change detection experiments. The experimental results demonstrate that the proposed approach is effective and robust for imbalanced SAR data, and achieves up to 99.52% change detection accuracy, superior to most state-of-the-art methods.
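
Step 2 can be sketched as follows. This is a simplified illustration, not the paper's method: a fixed cut at 0.5 replaces the parallel FCM clustering, the rule that a pixel is "intermediate" when the two maps disagree is an assumption, and the sigmoid parameters (`gain`, `midpoint`) are hypothetical values for a DDI scaled to [0, 1].

```python
import math


def sigmoid_map(ddi, gain, midpoint):
    """Apply a sigmoid with the given gain and midpoint to every pixel of a 2D image."""
    return [[1.0 / (1.0 + math.exp(-gain * (p - midpoint))) for p in row] for row in ddi]


def three_class_labels(ddi, params_a=(10.0, 0.4), params_b=(10.0, 0.6), cut=0.5):
    """Label pixels from the agreement of two differently parameterised sigmoid maps.

    Assumptions: a fixed cut stands in for FCM clustering of each mapped image,
    and pixels on which the two maps disagree are labelled "intermediate".
    """
    map_a = sigmoid_map(ddi, *params_a)
    map_b = sigmoid_map(ddi, *params_b)
    labels = []
    for row_a, row_b in zip(map_a, map_b):
        out = []
        for a, b in zip(row_a, row_b):
            if a > cut and b > cut:
                out.append("changed")
            elif a <= cut and b <= cut:
                out.append("unchanged")
            else:
                out.append("intermediate")
        labels.append(out)
    return labels
```

Under these assumptions, only the pixels where the two mappings disagree would be passed on to the trained classifier.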

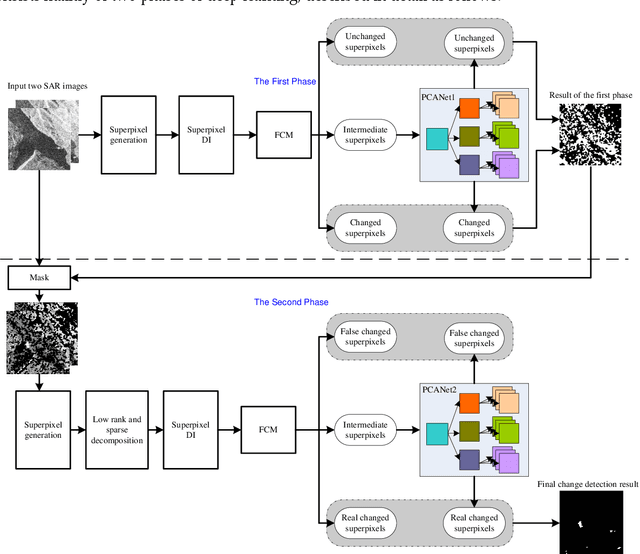

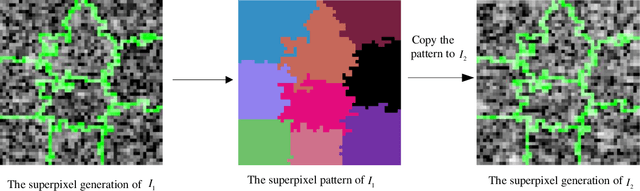

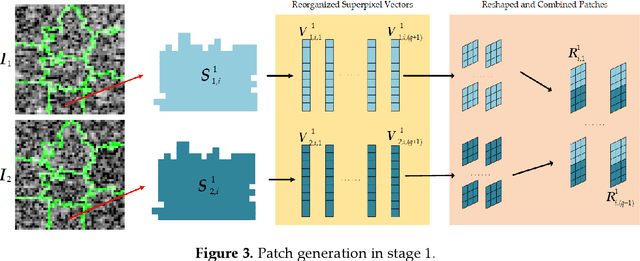

Two-Phase Object-Based Deep Learning for Multi-temporal SAR Image Change Detection

Jan 17, 2020

Abstract:Change detection is one of the fundamental applications of synthetic aperture radar (SAR) images. However, the speckle noise present in SAR images has a strongly negative effect on change detection. In this research, a novel two-phase object-based deep learning approach is proposed for multi-temporal SAR image change detection. Compared with traditional methods, the proposed approach brings two main innovations. One is to classify all pixels into three categories rather than two: unchanged pixels, changed pixels caused by strong speckle (false changes), and changed pixels formed by real terrain variation (real changes). The other is to group neighboring pixels into superpixel objects so as to exploit local spatial context. Two phases are designed in the methodology: 1) Generate objects based on the simple linear iterative clustering (SLIC) algorithm, and discriminate these objects into changed and unchanged classes using fuzzy c-means (FCM) clustering and a deep PCANet. The output of this phase is the set of changed and unchanged superpixels. 2) Apply deep learning to the pixel sets within the changed superpixels only, obtained in the first phase, to discriminate real changes from false changes. SLIC is employed again to generate new superpixels in the second phase. Low-rank and sparse decomposition is applied to these new superpixels to suppress speckle noise significantly. A further clustering step is applied to these new superpixels via FCM. A new PCANet is then trained to classify the two kinds of changed superpixels to produce the final change maps. Numerical experiments demonstrate that, compared with benchmark methods, the proposed approach can distinguish real changes from false changes effectively with significantly reduced false alarm rates, and achieves up to 99.71% change detection accuracy using multi-temporal SAR imagery.

AFA-PredNet: The action modulation within predictive coding

Apr 11, 2018

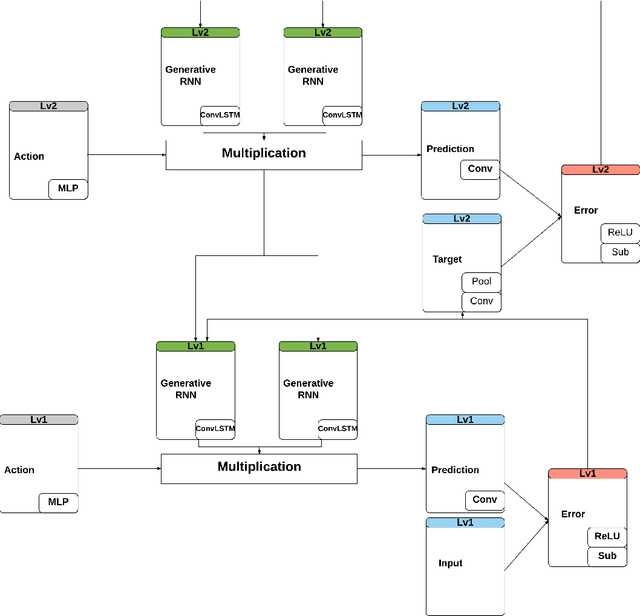

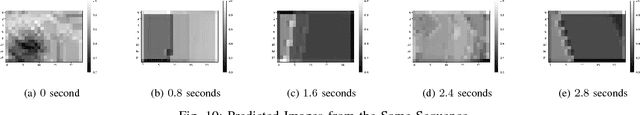

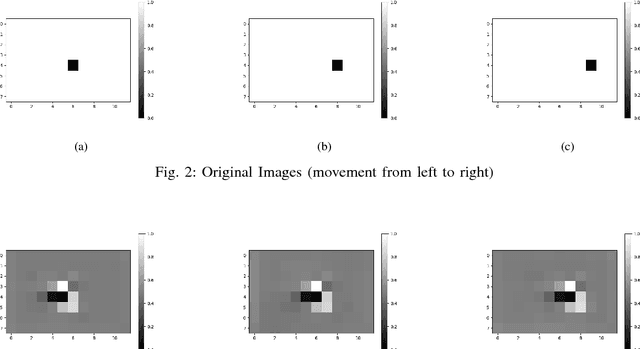

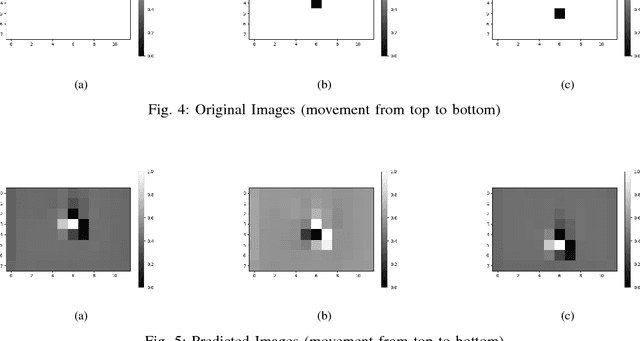

Abstract:Predictive processing (PP) hypothesizes that the predictive inference of our sensorimotor system is encoded implicitly in the regularities between perception and action. We propose a neural architecture in which such regularities of active inference are encoded hierarchically. We further suggest that this encoding emerges during the embodied learning process when the appropriate action is selected to minimize the prediction error in perception. Therefore, this predictive stream in the sensorimotor loop is generated in a top-down manner. Specifically, it is constantly modulated by the motor actions and is updated by the bottom-up prediction error signals. In this way, the top-down prediction originally comes from the prior experience of both perception and action, representing the higher levels of this hierarchical cognition. In our proposed embodied model, we extend PredNet, a hierarchical predictive coding network, with motor action units implemented by a multi-layer perceptron (MLP) to modulate the network's top-down prediction. Two experiments, a minimalistic world experiment and a mobile robot experiment, are conducted to evaluate the proposed model qualitatively. In the neural representation, the causal inference of the predictive percept from motor actions can also be observed while the agent is interacting with the environment.

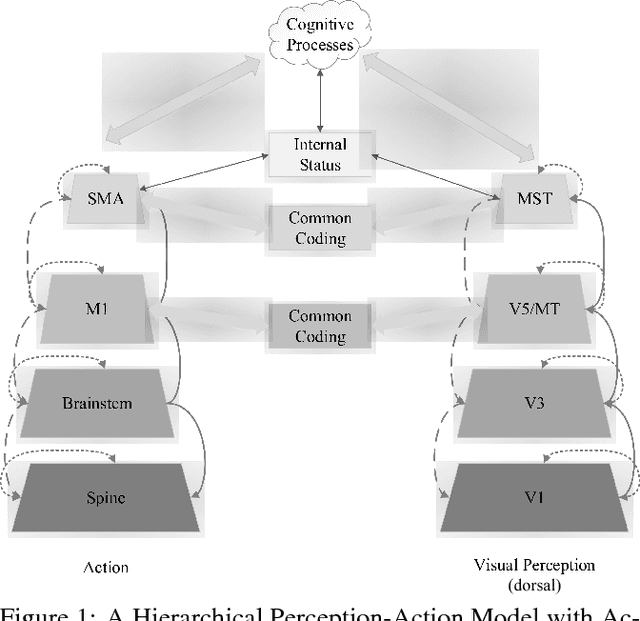

A Hierarchical Emotion Regulated Sensorimotor Model: Case Studies

May 11, 2016

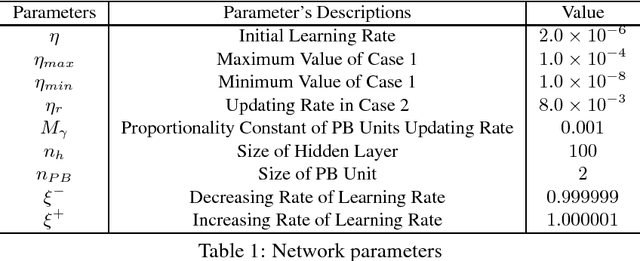

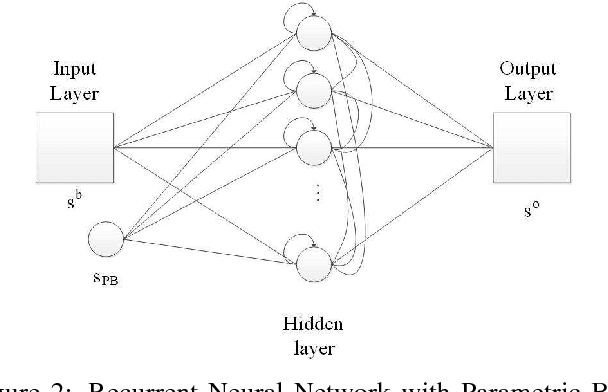

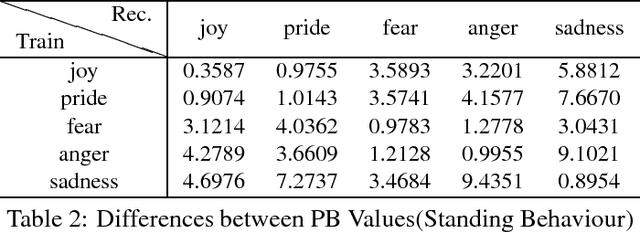

Abstract:Inspired by the hierarchical cognitive architecture and the perception-action model (PAM), we propose that the internal status acts as a kind of common-coding representation which affects, mediates and even regulates sensorimotor behaviours. This regulation can be described in a Bayesian framework, which is why cognitive agents are able to generate behaviours with subtle differences according to their emotion, or to recognize emotion through perception. A novel recurrent neural network, called the recurrent neural network with parametric bias units (RNNPB), which runs in three modes and constructs a two-level emotion-regulated learning model, was further applied to test this theory in two different cases.
