Xiayue Fan

Variable-frame CNNLSTM for Breast Nodule Classification using Ultrasound Videos

Feb 17, 2025

Abstract: The intersection of medical imaging and artificial intelligence has become an important research direction in intelligent medical treatment, particularly the use of deep learning to analyze medical images for clinical diagnosis. Despite these advances, existing keyframe classification methods do not extract time-series features, while ultrasound video classification based on three-dimensional convolution requires the same number of frames for every patient, resulting in poor feature extraction efficiency and classification performance. This study proposes a novel video classification method based on CNN and LSTM, introducing the scheme NLP uses to process long and short sentences into video classification for the first time. The method reduces each CNN-extracted image feature to a 1x512 vector, then sorts and compresses the feature vectors for LSTM training. Specifically, feature vectors are sorted by the number of frames in each patient's video and padded with the value 0 to form variable batches; the invalid padding values are then compressed out before LSTM training to conserve computing resources. Experimental results demonstrate that the variable-frame CNNLSTM method outperforms the other approaches across all metrics, with improvements of 3-6% in F1 score and 1.5% in specificity over keyframe methods. The variable-frame CNNLSTM also achieves better accuracy and precision than the equal-frame CNNLSTM. These findings validate the effectiveness of the approach in classifying variable-frame ultrasound videos and suggest potential applications in other medical imaging modalities.
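The sort, pad, and compress steps described in this abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names `sort_and_pad` and `pack` are hypothetical, and the toy features are 2-dimensional rather than 1x512. The packed layout (time-major frames plus a per-step batch size) mirrors what PyTorch's `pad_sequence` and `pack_padded_sequence` produce, which is one plausible way to realize the scheme.

```python
from typing import List, Tuple

Frame = List[float]   # one per-frame feature vector (1x512 in the paper, 2-D here)
Video = List[Frame]   # one patient's video: a variable number of frames


def sort_and_pad(videos: List[Video], pad_value: float = 0.0
                 ) -> Tuple[List[Video], List[int], List[int]]:
    """Sort videos by frame count (longest first) and pad them to equal length."""
    order = sorted(range(len(videos)), key=lambda i: len(videos[i]), reverse=True)
    lengths = [len(videos[i]) for i in order]
    max_len = lengths[0] if lengths else 0
    dim = len(videos[order[0]][0]) if max_len else 0
    padded = []
    for i in order:
        missing = max_len - len(videos[i])
        # Append all-zero frames so every video has max_len frames.
        padded.append(list(videos[i]) + [[pad_value] * dim for _ in range(missing)])
    return padded, lengths, order


def pack(padded: List[Video], lengths: List[int]) -> Tuple[List[Frame], List[int]]:
    """Drop padding frames: return time-major real frames and batch size per step."""
    data: List[Frame] = []
    batch_sizes: List[int] = []
    for t in range(lengths[0] if lengths else 0):
        # Lengths are sorted descending, so the first `active` videos are still running.
        active = sum(1 for n in lengths if n > t)
        batch_sizes.append(active)
        for b in range(active):
            data.append(padded[b][t])
    return data, batch_sizes


# Three hypothetical patients with 2, 4, and 1 frames.
videos = [
    [[1.0, 1.0] for _ in range(2)],
    [[2.0, 2.0] for _ in range(4)],
    [[3.0, 3.0] for _ in range(1)],
]
padded, lengths, order = sort_and_pad(videos)
data, batch_sizes = pack(padded, lengths)
print(lengths)      # [4, 2, 1]
print(batch_sizes)  # [3, 2, 1, 1]
print(len(data))    # 7 real frames, versus 12 in the padded batch
```

In this toy batch the recurrent layer would process 7 frames instead of 12, which is the saving in computing resources that the abstract attributes to compressing the padding.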

CRT-Net: A Generalized and Scalable Framework for the Computer-Aided Diagnosis of Electrocardiogram Signals

May 28, 2021

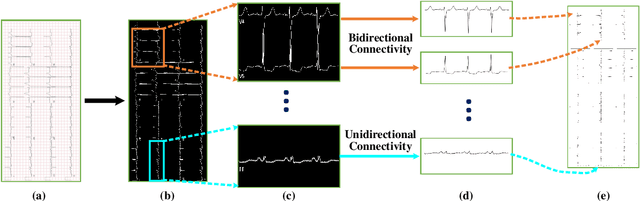

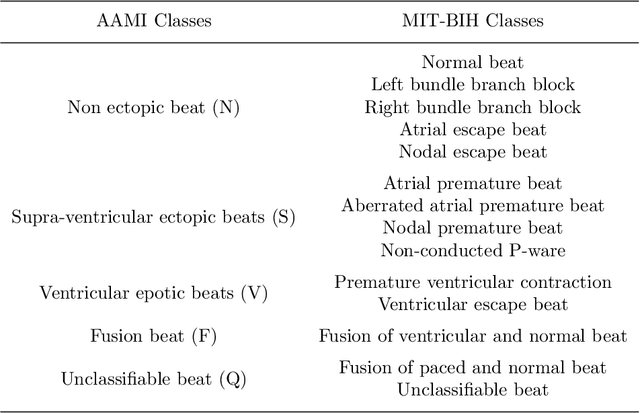

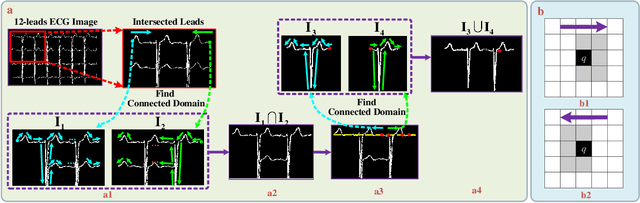

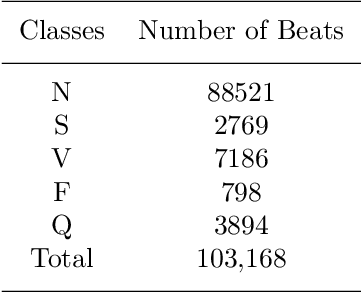

Abstract: Electrocardiogram (ECG) signals play critical roles in the clinical screening and diagnosis of many types of cardiovascular diseases. Although deep neural networks have greatly facilitated computer-aided diagnosis (CAD) in many clinical tasks, the variability and complexity of ECG in the clinic still pose significant challenges for both diagnostic performance and clinical application. In this paper, we develop a robust and scalable framework for the clinical recognition of ECG. Because hospitals generally record ECG signals as graphic waves in 2-D images, we first convert the graphic waves of 12-lead images into numerical 1-D ECG signals using a proposed bi-directional connectivity method. Subsequently, a novel deep neural network, CRT-Net, is designed for the fine-grained and comprehensive representation and recognition of 1-D ECG signals. CRT-Net explores the waveform features, morphological characteristics, and time-domain features of ECG by embedding a convolutional neural network (CNN), a recurrent neural network (RNN), and a transformer module in a scalable deep model, which is especially suitable for clinical scenarios where ECG signals of different lengths are captured from different devices. The proposed framework is first evaluated on two widely investigated public repositories, where it demonstrates superior ECG recognition performance compared with state-of-the-art methods. Moreover, we validate the effectiveness of the proposed bi-directional connectivity method and CRT-Net on clinical ECG images collected from a local hospital, including 258 patients with chronic kidney disease (CKD), 351 patients with Type-2 Diabetes (T2DM), and around 300 patients in the control group. In these experiments, our methods achieve excellent performance in recognizing both diseases.
