Xiaoxiong Zhong

Multimodal Online Federated Learning with Modality Missing in Internet of Things

May 22, 2025

Abstract:The Internet of Things (IoT) ecosystem generates vast amounts of multimodal data from heterogeneous sources such as sensors, cameras, and microphones. As edge intelligence continues to evolve, IoT devices have progressed from simple data collection units to nodes capable of executing complex computational tasks. This evolution necessitates the adoption of distributed learning strategies to effectively handle multimodal data in an IoT environment. Furthermore, the real-time nature of data collection and limited local storage on edge devices in IoT call for an online learning paradigm. To address these challenges, we introduce the concept of Multimodal Online Federated Learning (MMO-FL), a novel framework designed for dynamic and decentralized multimodal learning in IoT environments. Building on this framework, we further account for the inherent instability of edge devices, which frequently results in missing modalities during the learning process. We conduct a comprehensive theoretical analysis under both complete and missing modality scenarios, providing insights into the performance degradation caused by missing modalities. To mitigate the impact of modality missing, we propose the Prototypical Modality Mitigation (PMM) algorithm, which leverages prototype learning to effectively compensate for missing modalities. Experimental results on two multimodal datasets further demonstrate the superior performance of PMM compared to benchmarks.
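The abstract does not spell out how PMM builds or uses its prototypes, so the following is only a minimal sketch of the general idea of prototype-based compensation, not the paper's algorithm. It assumes that each modality has already been encoded into a fixed-length feature vector, that a class label is available during training, and that a prototype is simply the running per-class mean of observed features. The names `PrototypeBank` and `fill_missing` are hypothetical.

```python
from collections import defaultdict


class PrototypeBank:
    """Running per-class mean of one modality's feature vectors (assumed design)."""

    def __init__(self, dim):
        self.dim = dim
        self.sums = defaultdict(lambda: [0.0] * dim)
        self.counts = defaultdict(int)

    def update(self, label, feature):
        # Accumulate the observed feature into the running sum for its class.
        sums = self.sums[label]
        for i, value in enumerate(feature):
            sums[i] += value
        self.counts[label] += 1

    def prototype(self, label):
        # Mean feature for the class; zeros if the class was never observed.
        n = self.counts.get(label, 0)
        if n == 0:
            return [0.0] * self.dim
        return [value / n for value in self.sums[label]]


def fill_missing(sample, label, banks):
    """Replace modalities whose feature is None with the class prototype.

    Observed modalities also update their bank, so prototypes track the stream.
    """
    filled = {}
    for name, feature in sample.items():
        if feature is None:
            filled[name] = banks[name].prototype(label)
        else:
            banks[name].update(label, feature)
            filled[name] = feature
    return filled


banks = {"audio": PrototypeBank(2), "video": PrototypeBank(2)}
fill_missing({"audio": [1.0, 3.0], "video": [0.0, 2.0]}, 0, banks)
fill_missing({"audio": [3.0, 5.0], "video": [2.0, 2.0]}, 0, banks)
# The audio feature is missing here, so the class-0 audio prototype is used.
result = fill_missing({"audio": None, "video": [1.0, 1.0]}, 0, banks)
```

In this sketch the substituted audio feature is the mean of the two earlier class-0 audio vectors. How prototypes are shared across clients and the server in a federated setting is left out, since the abstract gives no detail on it.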

Denoising and Adaptive Online Vertical Federated Learning for Sequential Multi-Sensor Data in Industrial Internet of Things

Jan 03, 2025

Abstract:With the continuous improvement in the computational capabilities of edge devices such as intelligent sensors in the Industrial Internet of Things, these sensors are no longer limited to mere data collection but are increasingly capable of performing complex computational tasks. This advancement provides both the motivation and the foundation for adopting distributed learning approaches. This study focuses on an industrial assembly line scenario where multiple sensors, distributed across various locations, sequentially collect real-time data characterized by distinct feature spaces. To leverage the computational potential of these sensors while addressing the challenges of communication overhead and privacy concerns inherent in centralized learning, we propose the Denoising and Adaptive Online Vertical Federated Learning (DAO-VFL) algorithm. Tailored to the industrial assembly line scenario, DAO-VFL effectively manages continuous data streams and adapts to shifting learning objectives. Furthermore, it can address critical challenges prevalent in industrial environments, such as communication noise and heterogeneity of sensor capabilities. To support the proposed algorithm, we provide a comprehensive theoretical analysis, highlighting the effects of noise reduction and adaptive local iteration decisions on the regret bound. Experimental results on two real-world datasets further demonstrate the superior performance of DAO-VFL compared to benchmark algorithms.

Asynchronous Federated Learning with Incentive Mechanism Based on Contract Theory

Oct 10, 2023

Abstract:To address the challenges posed by the heterogeneity inherent in federated learning (FL) and to attract high-quality clients, various incentive mechanisms have been employed. However, existing incentive mechanisms are typically utilized in conventional synchronous aggregation, resulting in significant straggler issues. In this study, we propose a novel asynchronous FL framework that integrates an incentive mechanism based on contract theory. Within the incentive mechanism, we strive to maximize the utility of the task publisher by adaptively adjusting clients' local model training epochs, taking into account factors such as time delay and test accuracy. In the asynchronous scheme, considering client quality, we devise aggregation weights and an access control algorithm to facilitate asynchronous aggregation. Through experiments conducted on the MNIST dataset, the simulation results demonstrate that the test accuracy achieved by our framework is 3.12% and 5.84% higher than that achieved by FedAvg and FedProx without any attacks, respectively. The framework exhibits a 1.35% accuracy improvement over the ideal Local SGD under attacks. Furthermore, aiming for the same target accuracy, our framework demands notably less computation time than both FedAvg and FedProx.
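To make the idea of quality-aware asynchronous aggregation concrete, here is a minimal sketch of a generic staleness-discounted update. It is not the weighting derived in the paper, whose aggregation weights and access control are not specified in the abstract. The function names, the polynomial decay form, and the `quality` score in [0, 1] are all assumptions made for illustration.

```python
def staleness_weight(staleness, quality, decay=0.5):
    """Hypothetical mixing weight: a quality score discounted by staleness.

    staleness: number of global rounds since the client pulled the model.
    quality:   assumed client quality score in [0, 1].
    """
    return quality / (1.0 + staleness) ** decay


def async_aggregate(global_model, client_model, weight):
    """Blend one client's update into the global model as soon as it arrives."""
    alpha = max(0.0, min(1.0, weight))  # clamp so the blend stays a convex mix
    return [(1.0 - alpha) * g + alpha * c
            for g, c in zip(global_model, client_model)]


# A fresh, high-quality update moves the global model more than a stale one.
fresh = async_aggregate([0.0, 2.0], [4.0, 2.0], staleness_weight(0, 0.5))
stale = async_aggregate([0.0, 2.0], [4.0, 2.0], staleness_weight(3, 0.5))
```

Because each update is merged on arrival, no client waits for stragglers. The discount keeps outdated updates from dominating the global model.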

Semi-Asynchronous Federated Edge Learning Mechanism via Over-the-air Computation

May 06, 2023

Abstract:Over-the-air Computation (AirComp) has been demonstrated as an effective transmission scheme to boost the efficiency of federated edge learning (FEEL). However, existing FEEL systems with an AirComp scheme often employ traditional synchronous aggregation mechanisms for local model aggregation in each global round, which suffer from straggler issues. In this paper, we propose a semi-asynchronous aggregation FEEL mechanism with an AirComp scheme (PAOTA) to improve the training efficiency of the FEEL system in the case of significant heterogeneity in data and devices. Taking the staleness and divergence of model updates from edge devices into consideration, we minimize the convergence upper bound of the FEEL global model by adjusting the uplink transmit power of edge devices at each aggregation period. The simulation results demonstrate that our proposed algorithm achieves convergence performance close to that of the ideal Local SGD. Furthermore, for the same target accuracy, PAOTA requires less training time than both the ideal Local SGD and the synchronous FEEL algorithm via AirComp.

Auction Based Clustered Federated Learning in Mobile Edge Computing System

Mar 12, 2021

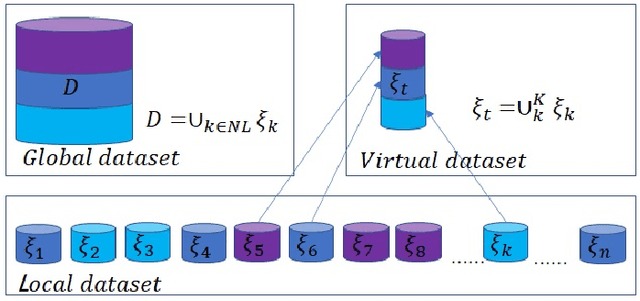

Abstract:In recent years, the computing ability and storage capacity of mobile clients have greatly improved, allowing them to handle some applications efficiently on-device. Federated learning is a promising distributed machine learning solution that uses local computing and local data to train an Artificial Intelligence (AI) model. Combining local computing and federated learning can train a powerful AI model while preserving local data privacy and making full use of mobile clients' resources. However, the heterogeneity of local data, that is, non-independent and identically distributed (Non-IID) data and imbalanced local data sizes, may become a bottleneck hindering the application of federated learning in mobile edge computing (MEC) systems. Motivated by this, we propose a cluster-based client selection method that generates a federated virtual dataset satisfying the global distribution to offset the impact of data heterogeneity, and we prove that the proposed scheme converges to an approximately optimal solution. Based on the clustering method, we propose an auction-based client selection scheme within each cluster that fully considers the system's energy heterogeneity, and we give the Nash equilibrium solution of the proposed scheme to balance energy consumption and improve the convergence rate. The simulation results show that the proposed selection methods and auction-based federated learning achieve better performance with a Convolutional Neural Network (CNN) model under different data distributions.
