Heqiang Wang

Multimodal Online Federated Learning with Modality Missing in Internet of Things

May 22, 2025

Abstract: The Internet of Things (IoT) ecosystem generates vast amounts of multimodal data from heterogeneous sources such as sensors, cameras, and microphones. As edge intelligence continues to evolve, IoT devices have progressed from simple data collection units to nodes capable of executing complex computational tasks. This evolution necessitates the adoption of distributed learning strategies to effectively handle multimodal data in an IoT environment. Furthermore, the real-time nature of data collection and the limited local storage on IoT edge devices call for an online learning paradigm. To address these challenges, we introduce the concept of Multimodal Online Federated Learning (MMO-FL), a novel framework designed for dynamic and decentralized multimodal learning in IoT environments. Building on this framework, we further account for the inherent instability of edge devices, which frequently results in missing modalities during the learning process. We conduct a comprehensive theoretical analysis under both complete and missing modality scenarios, providing insights into the performance degradation caused by missing modalities. To mitigate the impact of missing modalities, we propose the Prototypical Modality Mitigation (PMM) algorithm, which leverages prototype learning to effectively compensate for missing modalities. Experimental results on two multimodal datasets further demonstrate the superior performance of PMM compared to benchmarks.

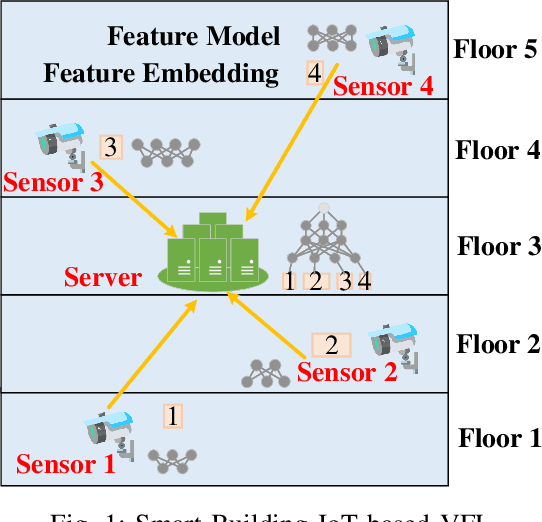

Denoising and Adaptive Online Vertical Federated Learning for Sequential Multi-Sensor Data in Industrial Internet of Things

Jan 03, 2025

Abstract: With the continuous improvement in the computational capabilities of edge devices such as intelligent sensors in the Industrial Internet of Things, these sensors are no longer limited to mere data collection but are increasingly capable of performing complex computational tasks. This advancement provides both the motivation and the foundation for adopting distributed learning approaches. This study focuses on an industrial assembly line scenario where multiple sensors, distributed across various locations, sequentially collect real-time data characterized by distinct feature spaces. To leverage the computational potential of these sensors while addressing the challenges of communication overhead and privacy concerns inherent in centralized learning, we propose the Denoising and Adaptive Online Vertical Federated Learning (DAO-VFL) algorithm. Tailored to the industrial assembly line scenario, DAO-VFL effectively manages continuous data streams and adapts to shifting learning objectives. Furthermore, it can address critical challenges prevalent in industrial environments, such as communication noise and heterogeneity of sensor capabilities. To support the proposed algorithm, we provide a comprehensive theoretical analysis, highlighting the effects of noise reduction and adaptive local iteration decisions on the regret bound. Experimental results on two real-world datasets further demonstrate the superior performance of DAO-VFL compared to benchmark algorithms.

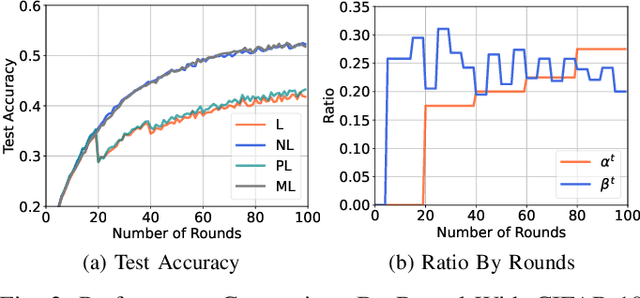

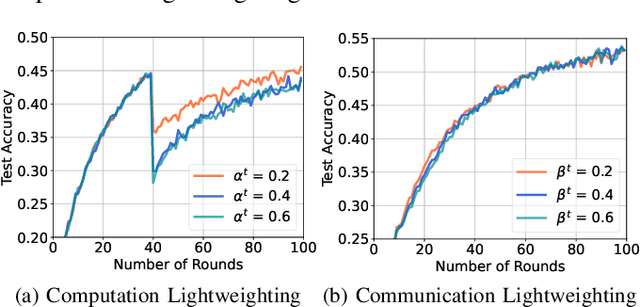

Computation and Communication Efficient Lightweighting Vertical Federated Learning

Mar 30, 2024

Abstract: The exploration of computational and communication efficiency within Federated Learning (FL) has emerged as a prominent and crucial field of study. While most existing efforts to enhance these efficiencies have focused on Horizontal FL, the distinct processes and model structures of Vertical FL preclude the direct application of Horizontal FL-based techniques. In response, we introduce the concept of Lightweight Vertical Federated Learning (LVFL), targeting both computational and communication efficiencies. This approach involves separate lightweighting strategies for the feature model, to improve computational efficiency, and for feature embedding, to enhance communication efficiency. Moreover, we establish a convergence bound for our LVFL algorithm, which accounts for both communication and computational lightweighting ratios. Our evaluation of the algorithm on an image classification dataset reveals that LVFL significantly alleviates computational and communication demands while preserving robust learning performance. This work effectively addresses the gaps in communication and computational efficiency within Vertical FL.

Online Vertical Federated Learning for Cooperative Spectrum Sensing

Dec 18, 2023

Abstract: The increasing demand for wireless communication underscores the need to optimize radio frequency spectrum utilization. An effective strategy for leveraging underutilized licensed frequency bands is cooperative spectrum sensing (CSS), which enables multiple secondary users (SUs) to collaboratively detect the spectrum usage of primary users (PUs) prior to accessing the licensed spectrum. The increasing popularity of machine learning has led to a shift from traditional CSS methods to those based on deep learning. However, deep learning-based CSS methods often rely on centralized learning, posing challenges like communication overhead and data privacy risks. Recent research suggests vertical federated learning (VFL) as a potential solution, with its core concept centered on partitioning the deep neural network into distinct segments, each of which is trained separately. However, existing VFL-based CSS works do not fully address the practical challenges arising from streaming data and objective shift. In this work, we introduce online vertical federated learning (OVFL), a robust framework designed to address the challenges of ongoing data streams and shifting learning goals. Our theoretical analysis reveals that OVFL achieves a sublinear regret bound, thereby evidencing its efficiency. Empirical results from our experiments show that OVFL outperforms benchmarks in CSS tasks. We also explore the impact of various parameters on the learning performance.
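For readers unfamiliar with the term, the standard online-learning notion of regret can be written as follows. The notation is an assumption made here for illustration and is not taken from the paper: $f_t$ is the loss revealed at round $t$, $w_t$ is the model played at round $t$, and $T$ is the number of rounds.

```latex
\mathrm{Reg}_T \;=\; \sum_{t=1}^{T} f_t(w_t) \;-\; \min_{w} \sum_{t=1}^{T} f_t(w),
\qquad
\text{sublinear regret:}\quad \lim_{T \to \infty} \frac{\mathrm{Reg}_T}{T} = 0 .
```

A bound of order $\sqrt{T}$, used here only as an example rate, would give an average per-round regret of order $1/\sqrt{T}$, which vanishes as the stream grows.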

On the Local Cache Update Rules in Streaming Federated Learning

Mar 28, 2023

Abstract: In this study, we address the emerging field of Streaming Federated Learning (SFL) and propose local cache update rules to manage dynamic data distributions and limited cache capacity. Traditional federated learning relies on fixed data sets, whereas in SFL, data is streamed, and its distribution changes over time, leading to discrepancies between the local training dataset and long-term distribution. To mitigate this problem, we propose three local cache update rules - First-In-First-Out (FIFO), Static Ratio Selective Replacement (SRSR), and Dynamic Ratio Selective Replacement (DRSR) - that update the local cache of each client while considering the limited cache capacity. Furthermore, we derive a convergence bound for our proposed SFL algorithm as a function of the distribution discrepancy between the long-term data distribution and the client's local training dataset. We then evaluate our proposed algorithm on two datasets: a network traffic classification dataset and an image classification dataset. Our experimental results demonstrate that our proposed local cache update rules significantly reduce the distribution discrepancy and outperform the baseline methods. Our study advances the field of SFL and provides practical cache management solutions in federated learning.
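As a rough illustration of the simplest of the three rules, the sketch below shows a generic FIFO cache with a fixed capacity. It is not the paper's implementation: the class name `FIFOCache` and its methods are hypothetical. The SRSR and DRSR rules are not sketched because the abstract does not specify them.

```python
from collections import deque


class FIFOCache:
    """Fixed-capacity local cache that evicts the oldest samples first.

    Hypothetical helper for illustration; not the paper's code.
    """

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        # A deque with maxlen drops items from the left once it is full.
        self._buffer = deque(maxlen=capacity)

    def update(self, new_samples):
        """Append newly streamed samples, evicting the oldest on overflow."""
        self._buffer.extend(new_samples)

    def samples(self):
        """Return the current cache contents, oldest first."""
        return list(self._buffer)


cache = FIFOCache(capacity=3)
for batch in ([1, 2], [3, 4], [5]):
    cache.update(batch)
print(cache.samples())  # [3, 4, 5]
```

With a capacity of three, only the three most recent samples survive, so under FIFO the local training set tracks the recent stream and not the long-term distribution.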

Friends to Help: Saving Federated Learning from Client Dropout

May 26, 2022

Abstract: Federated learning (FL) is an outstanding distributed machine learning framework due to its benefits for data privacy and communication efficiency. Since full client participation in many cases is infeasible due to constrained resources, partial participation FL algorithms have been investigated that proactively select/sample a subset of clients, aiming to achieve learning performance close to the full participation case. This paper studies a passive partial client participation scenario that is much less well understood, where partial participation is a result of external events, namely client dropout, rather than a decision of the FL algorithm. We cast FL with client dropout as a special case of a larger class of FL problems where clients can submit substitute (possibly inaccurate) local model updates. Based on our convergence analysis, we develop a new algorithm, FL-FDMS, that discovers friends of clients (i.e., clients whose data distributions are similar) on-the-fly and uses friends' local updates as substitutes for the dropout clients, thereby reducing the substitution error and improving the convergence performance. A complexity reduction mechanism is also incorporated into FL-FDMS, making it both theoretically sound and practically useful. Experiments on MNIST and CIFAR-10 confirmed the superior performance of FL-FDMS in handling client dropout in FL.
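The substitution idea can be sketched as below. This is a simplified illustration and not FL-FDMS itself: it assumes a pairwise similarity matrix is already available, whereas the paper discovers friends on-the-fly. The function name `aggregate_with_friends` and its arguments are hypothetical.

```python
import numpy as np


def aggregate_with_friends(updates, similarity, active):
    """Average local updates, substituting a friend's update for each dropout.

    updates:    dict mapping active client index -> update vector (np.ndarray)
    similarity: (n, n) array of pairwise client similarities (assumed given)
    active:     list of n booleans, False for clients that dropped out
    """
    n = len(active)
    active_ids = [i for i in range(n) if active[i]]
    if not active_ids:
        raise ValueError("at least one client must be active")

    used = []
    for i in range(n):
        if active[i]:
            used.append(updates[i])
        else:
            # Substitute the update of the most similar active client.
            friend = max(active_ids, key=lambda j: similarity[i, j])
            used.append(updates[friend])
    return np.mean(used, axis=0)


# Client 2 drops out; its most similar active client is client 1.
similarity = np.array([[1.0, 0.2, 0.1],
                       [0.2, 1.0, 0.9],
                       [0.1, 0.9, 1.0]])
updates = {0: np.array([1.0, 1.0]), 1: np.array([3.0, 3.0])}
result = aggregate_with_friends(updates, similarity, [True, True, False])
```

Here the aggregate averages the updates of clients 0, 1, and 1 again (standing in for client 2), so the dropped client's contribution is approximated by its nearest friend, not silently omitted.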

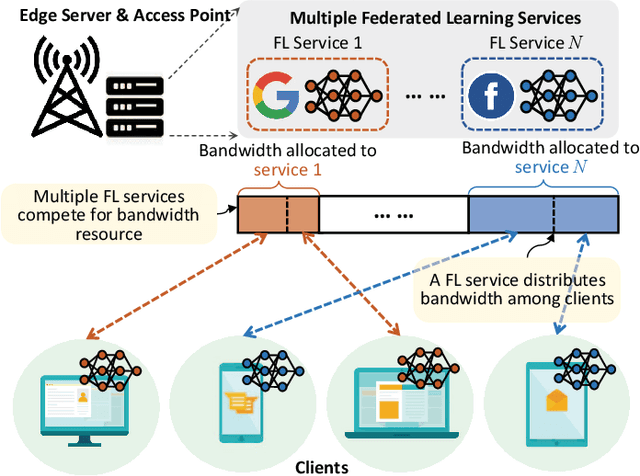

Bandwidth Allocation for Multiple Federated Learning Services in Wireless Edge Networks

Jan 10, 2021

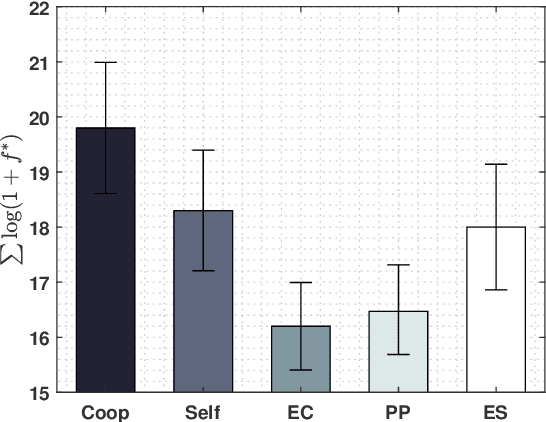

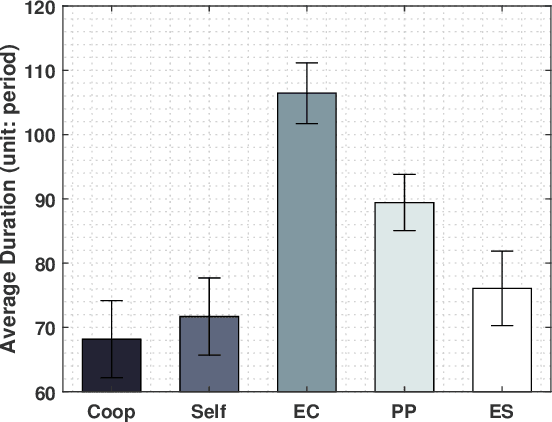

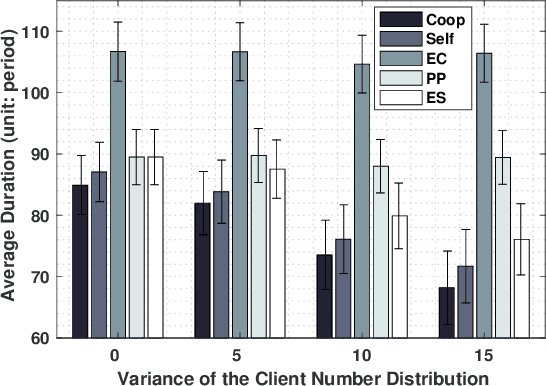

Abstract: This paper studies a federated learning (FL) system, where *multiple* FL services co-exist in a wireless network and share common wireless resources. It fills the void of wireless resource allocation for multiple simultaneous FL services in the existing literature. Our method designs a two-level resource allocation framework comprising *intra-service* resource allocation and *inter-service* resource allocation. The intra-service resource allocation problem aims to minimize the length of FL rounds by optimizing the bandwidth allocation among the clients of each FL service. Based on this, an inter-service resource allocation problem is further considered, which distributes bandwidth resources among multiple simultaneous FL services. We consider both cooperative and selfish providers of the FL services. For cooperative FL service providers, we design a distributed bandwidth allocation algorithm to optimize the overall performance of multiple FL services, while also catering to fairness among FL services and the privacy of clients. For selfish FL service providers, a new auction scheme is designed with the FL service owners as the bidders and the network provider as the auctioneer. The designed auction scheme strikes a balance between the overall FL performance and fairness. Our simulation results show that the proposed algorithms outperform other benchmarks under various network conditions.

Client Selection and Bandwidth Allocation in Wireless Federated Learning Networks: A Long-Term Perspective

Apr 09, 2020

Abstract: This paper studies federated learning (FL) in a classic wireless network, where learning clients share a common wireless link to a coordinating server to perform federated model training using their local data. In such wireless federated learning networks (WFLNs), optimizing the learning performance depends crucially on how clients are selected and how bandwidth is allocated among the selected clients in every learning round, as both radio and client energy resources are limited. While existing works have made some attempts to allocate the limited wireless resources to optimize FL, they focus on the problem in individual learning rounds, overlooking an inherent yet critical feature of federated learning. This paper brings a new long-term perspective to resource allocation in WFLNs, realizing that learning rounds are not only temporally interdependent but also have varying significance towards the final learning outcome. To this end, we first design data-driven experiments to show that different temporal client selection patterns lead to considerably different learning performance. With the obtained insights, we formulate a stochastic optimization problem for joint client selection and bandwidth allocation under long-term client energy constraints, and develop a new algorithm that utilizes only currently available wireless channel information but can achieve a long-term performance guarantee. Further experiments show that our algorithm results in the desired temporal client selection pattern, is adaptive to changing network environments, and far outperforms benchmarks that ignore the long-term effect of FL.
