Xiaojun Zeng

PULSAR at MEDIQA-Sum 2023: Large Language Models Augmented by Synthetic Dialogue Convert Patient Dialogues to Medical Records

Jul 05, 2023

Abstract: This paper describes PULSAR, our system submission to the ImageCLEF 2023 MEDIQA-Sum task on summarising patient-doctor dialogues into clinical records. The proposed framework relies on domain-specific pre-training to produce a specialised language model, which is trained on task-specific natural data augmented by synthetic data generated by a black-box LLM. We find limited evidence for the efficacy of domain-specific pre-training and data augmentation, while scaling up the language model yields the largest performance gains. Our approach was ranked second and third among 13 submissions on Task B of the challenge. Our code is available at https://github.com/yuping-wu/PULSAR.

PULSAR: Pre-training with Extracted Healthcare Terms for Summarising Patients' Problems and Data Augmentation with Black-box Large Language Models

Jun 05, 2023

Abstract: Medical progress notes play a crucial role in documenting a patient's hospital journey, including his or her condition, treatment plan, and any updates for healthcare providers. Automatic summarisation of a patient's problems in the form of a problem list can help stakeholders understand a patient's condition, reducing workload and cognitive bias. BioNLP 2023 Shared Task 1A focuses on generating a list of diagnoses and problems from the provider's progress notes during hospitalisation. In this paper, we introduce our approach to this task, which integrates two complementary components. One component employs large language models (LLMs) for data augmentation; the other is an abstractive summarisation LLM with a novel pre-training objective for generating the patient's problems summarised as a list. Our approach was ranked second among all submissions to the shared task. The performance of our model on the development and test datasets shows that our approach is more robust on unseen data, with an improvement of up to 3.1 points over the same size of the larger model.

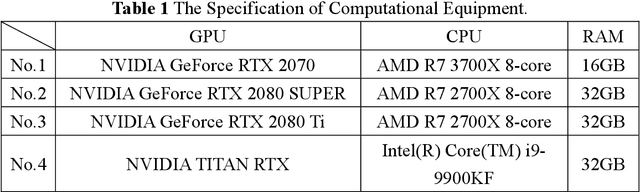

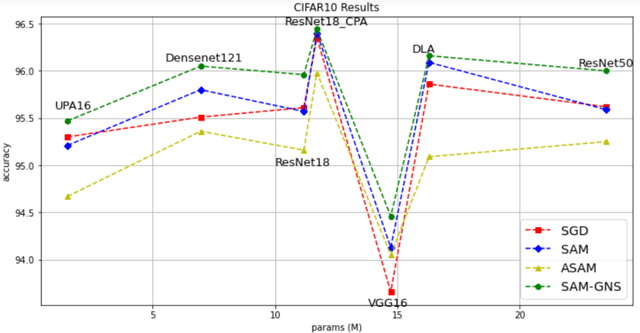

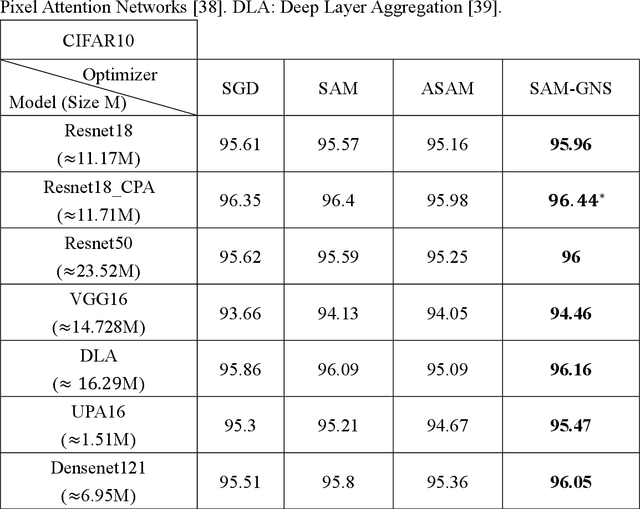

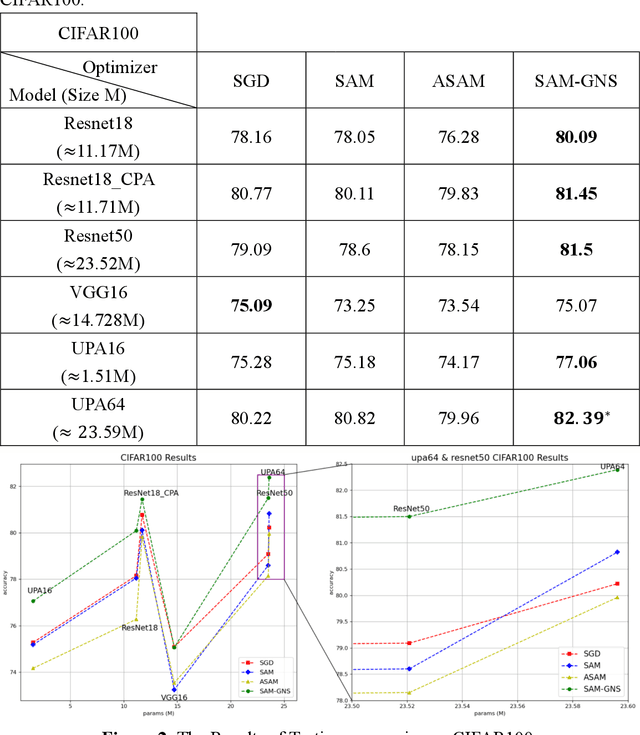

Update in Unit Gradient

Oct 01, 2021

Abstract: In machine learning, optimization has mostly been done using gradient descent to find the minimum of the loss. However, especially in deep learning, finding a global minimum of a non-convex loss function in a high-dimensional space is an extraordinarily difficult task. Recently, a generalization-oriented learning algorithm, Sharpness-Aware Minimization (SAM), has achieved great success in image classification tasks. Despite its strong performance in creating a convex space, the proper direction taken by SAM remains unclear. We therefore propose creating a unit vector space in SAM, which is grounded in mathematical intuition from linear algebra while retaining the advantages of adaptive gradient algorithms. Moreover, applying SAM with a unit gradient gives models competitive performance on image classification datasets such as CIFAR-10 and CIFAR-100. Experiments showed that it performed better and more robustly than SAM.
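As background for the abstract above, here is a minimal NumPy sketch of one standard SAM step on a toy quadratic. It is not the unit-gradient variant proposed in the paper, whose exact update rule the abstract does not give. The toy loss, the function names (`loss`, `grad`, `sam_step`) and the values of `lr` and `rho` are illustrative assumptions. Note that standard SAM already scales its ascent perturbation by the unit-normalised gradient direction.

```python
import numpy as np


def loss(w: np.ndarray) -> float:
    # Hypothetical toy ill-conditioned quadratic: L(w) = 0.5 * (10 * w0^2 + w1^2)
    return 0.5 * (10.0 * w[0] ** 2 + w[1] ** 2)


def grad(w: np.ndarray) -> np.ndarray:
    # Analytic gradient of the toy quadratic above.
    return np.array([10.0 * w[0], w[1]])


def sam_step(w: np.ndarray, lr: float = 0.05, rho: float = 0.05,
             eps: float = 1e-12) -> np.ndarray:
    """One standard SAM update (illustrative values for lr and rho)."""
    g = grad(w)
    # Ascent step: move a distance rho along the unit-normalised gradient,
    # approximating the worst-case point in a rho-ball around w.
    e = rho * g / (np.linalg.norm(g) + eps)
    # Descent step: take the gradient at the perturbed point w + e,
    # but apply the update to the original weights w.
    g_sam = grad(w + e)
    return w - lr * g_sam


w = np.array([1.0, 1.0])
for _ in range(200):
    w = sam_step(w)
print(loss(w))
```

Because the descent gradient is evaluated at a perturbed point, SAM does not settle exactly at the minimum. In this sketch it hovers within roughly `rho` of it, so the final loss is small but generally nonzero.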
