Update in Unit Gradient


Oct 01, 2021

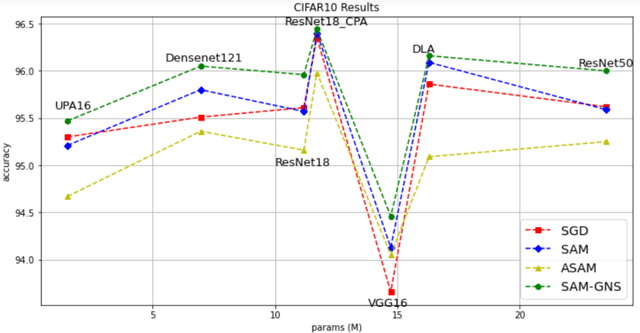

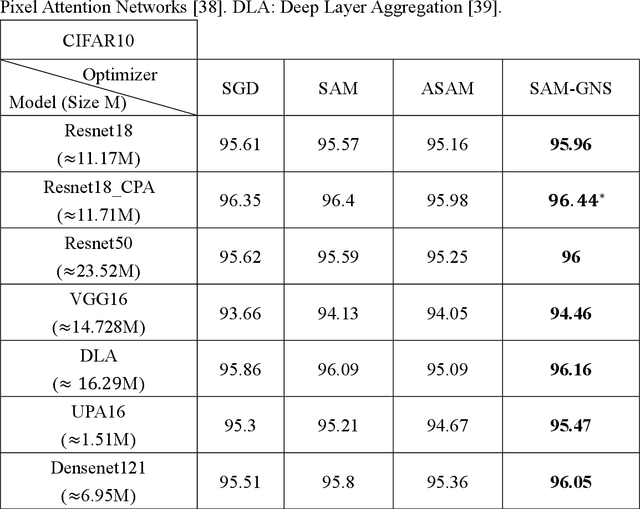

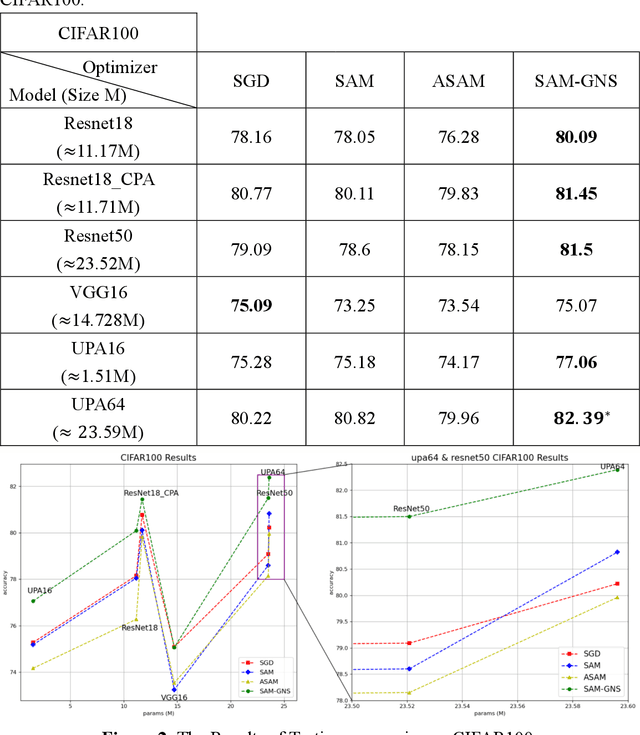

In machine learning, optimization is mostly done with gradient descent methods that search for the minimum of the loss. However, finding a global minimum of a nonconvex loss function in a high-dimensional space is extraordinarily difficult, especially in deep learning. Recently, Sharpness-Aware Minimization (SAM), a learning algorithm that targets generalization, has achieved great success in image classification tasks. Despite its strong performance in creating a convex space, it remains unclear whether SAM leads the optimization in the proper direction. We therefore propose creating a unit vector space in SAM. This approach is grounded in mathematical intuition from linear algebra and also keeps the advantages of adaptive gradient algorithms. Moreover, applying SAM with unit gradients gives models competitive performance on image classification datasets such as CIFAR-10 and CIFAR-100. Experiments showed that it performed even better than SAM and was more robust.
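The abstract does not spell out the update rule, so the following is only a minimal sketch under stated assumptions. It implements the usual two-stage SAM step in pure Python. First it perturbs the weights by `rho` along the normalized gradient. Then it descends from the original weights using the gradient taken at the perturbed point. It also adds a hypothetical `unit_update` flag that rescales that second gradient to unit length. That flag is one plausible reading of "unit gradient", not the paper's confirmed method. The names `sam_step`, `quad_loss`, and `quad_grad` and the toy quadratic loss are likewise illustrative assumptions.

```python
import math
from typing import Callable, List

Vector = List[float]


def norm(v: Vector) -> float:
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(x * x for x in v))


def sam_step(
    w: Vector,
    grad_fn: Callable[[Vector], Vector],
    lr: float = 0.1,
    rho: float = 0.05,
    unit_update: bool = False,
    eps: float = 1e-12,
) -> Vector:
    """One Sharpness-Aware Minimization (SAM) step on weights w.

    unit_update is a HYPOTHETICAL option (an assumption, not the paper's
    confirmed rule): when True, the descent gradient is rescaled to unit
    length, so every step moves the weights by roughly lr.
    """
    g = grad_fn(w)
    g_norm = norm(g) + eps  # eps guards against division by zero
    # Ascent: move rho along the normalized gradient, a first-order
    # approximation of the worst-case point within a ball of radius rho.
    w_adv = [wi + rho * gi / g_norm for wi, gi in zip(w, g)]
    # Descent direction: the gradient evaluated at the perturbed point.
    g_adv = grad_fn(w_adv)
    if unit_update:
        adv_norm = norm(g_adv) + eps
        g_adv = [gi / adv_norm for gi in g_adv]
    # Apply the update to the ORIGINAL weights, not the perturbed ones.
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


# Toy example (illustrative only): an ill-conditioned quadratic
# L(w) = 0.5 * (w[0]**2 + 10 * w[1]**2) with its minimum at [0, 0].
def quad_loss(w: Vector) -> float:
    return 0.5 * (w[0] ** 2 + 10.0 * w[1] ** 2)


def quad_grad(w: Vector) -> Vector:
    return [w[0], 10.0 * w[1]]


w = [1.0, 1.0]
print("initial loss:", quad_loss(w))  # 0.5 * (1 + 10) = 5.5
for _ in range(50):
    w = sam_step(w, quad_grad, lr=0.05, rho=0.05)
print("loss after 50 SAM steps:", quad_loss(w))
```

With `unit_update=True`, every step has length `lr` (up to the small `eps` guard), regardless of the gradient's magnitude. That scale independence is the main practical effect of normalizing. With a fixed `lr`, however, such a step does not shrink near the minimum, so it would normally be paired with a decaying learning rate.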
