Xiaogang Li

Domain adaption and physical constrains transfer learning for shale gas production

Dec 18, 2023

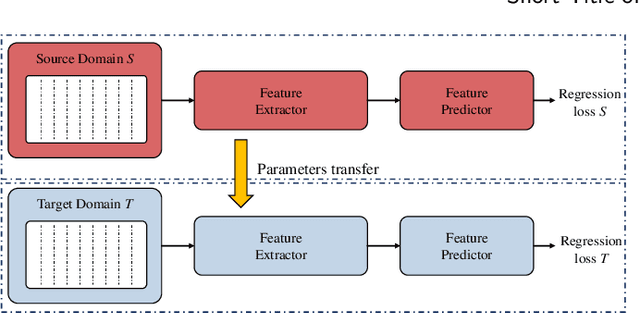

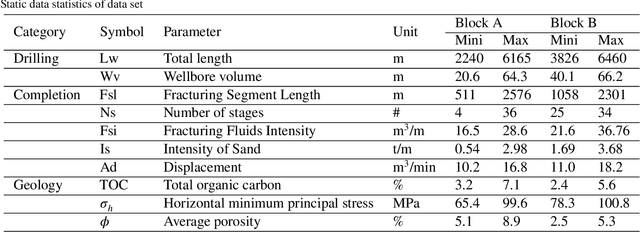

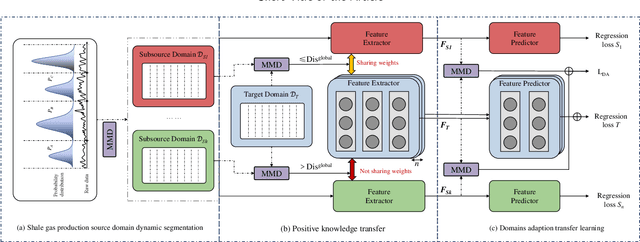

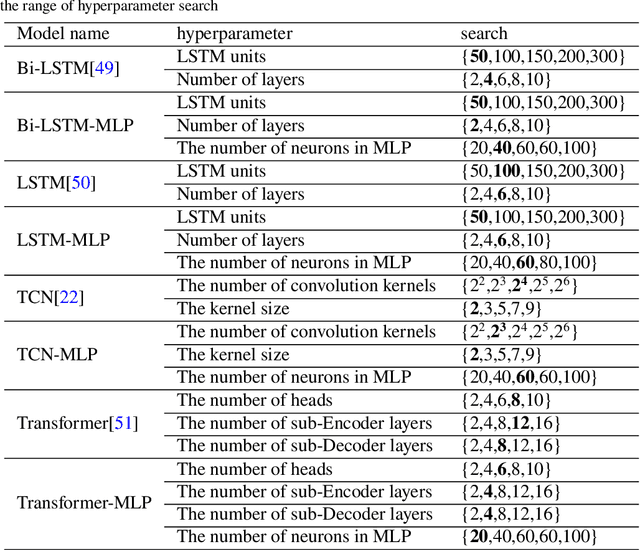

Abstract: Effective prediction of shale gas production is crucial for strategic reservoir development. However, new shale gas blocks pose two main challenges: (1) negative transfer caused by insufficient data, and (2) the limited interpretability of deep learning (DL) models. To tackle these problems, we propose a novel transfer learning methodology that combines domain adaptation with physical constraints. The methodology uses historical data from the source domain to reduce negative transfer from the data distribution perspective, and uses physical constraints to build a robust and reliable prediction model that integrates various types of data. It starts by dividing the production data from the source domain into multiple subdomains, thereby enhancing data diversity. It then uses Maximum Mean Discrepancy (MMD) and global average distance measures to decide whether transfer is feasible. Through domain adaptation, we integrate all transferable knowledge, resulting in a more comprehensive target model. Lastly, by incorporating drilling, completion, and geological data as physical constraints, we develop a hybrid model. This model, a combination of a multi-layer perceptron (MLP) and a Transformer (Transformer-MLP), is designed to maximize interpretability. Experimental validation in China's southwestern region confirms the method's effectiveness.
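As a rough illustration of the MMD step mentioned in the abstract, the following sketch computes a biased estimate of the squared MMD with an RBF kernel and uses it to compare two candidate source subdomains against a target sample. The synthetic Gaussian data, the kernel choice, the `gamma` value, and all function and variable names are assumptions made for this example; the abstract does not specify the kernel, the features, or any transfer threshold.

```python
import math
import random


def rbf_kernel(x, y, gamma=1.0):
    """RBF kernel between two feature vectors (kernel choice is an assumption)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mmd2(xs, ys, gamma=1.0):
    """Biased estimate of the squared MMD between samples xs and ys."""
    def mean_kernel(a, b):
        total = sum(rbf_kernel(u, v, gamma) for u in a for v in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


def sample(mean, n, rng):
    """Synthetic 2-D Gaussian features standing in for real well data."""
    return [(rng.gauss(mean, 1.0), rng.gauss(mean, 1.0)) for _ in range(n)]


rng = random.Random(0)
target = sample(0.0, 60, rng)          # hypothetical target block
near_subdomain = sample(0.2, 60, rng)  # source subdomain close to the target
far_subdomain = sample(3.0, 60, rng)   # source subdomain far from the target

mmd_near = mmd2(near_subdomain, target)
mmd_far = mmd2(far_subdomain, target)

# A smaller value suggests the subdomain is a safer candidate for transfer.
print(f"near: {mmd_near:.4f}  far: {mmd_far:.4f}")
```

In a setting like the one described, a subdomain with a small discrepancy to the target would be kept for transfer and one with a large discrepancy would be excluded; where to draw that line is not stated in the abstract.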

Interpretability and causal discovery of the machine learning models to predict the production of CBM wells after hydraulic fracturing

Dec 21, 2022

Abstract: Machine learning approaches are widely studied for predicting the production of CBM wells after hydraulic fracturing, but they are rarely used in practice because of their low generalization ability and lack of interpretability. This article proposes a novel methodology for discovering latent causality from observed data, with the aim of finding an indirect way to interpret machine learning results. Based on the theory of causal discovery, a causal graph is derived with explicit input, output, treatment, and confounding variables. SHAP is then employed to analyze the influence of the factors on production capability, which indirectly interprets the machine learning models. The proposed method can capture the underlying nonlinear relationship between the factors and the output, which remedies a limitation of traditional machine learning routines based on correlation analysis of the factors. Experiments on CBM data show that the relationship the method detects between production and the geological/engineering factors is consistent with the actual physical mechanism. Meanwhile, compared with traditional methods, the interpretable machine learning models perform better in forecasting production capability, with an average 20% improvement in accuracy.
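SHAP attributions are based on Shapley values, so a small exact computation may help show what is being estimated. The sketch below enumerates all feature orderings for a toy model; it does not use the SHAP library and is not the paper's pipeline. The model `toy_production`, its three feature names, and the all-zero baseline are hypothetical.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values by averaging marginal contributions over all orderings."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous_output = model(current)
        for i in order:
            current[i] = x[i]  # switch feature i from baseline to its actual value
            new_output = model(current)
            phi[i] += new_output - previous_output
            previous_output = new_output
    return [p / len(orders) for p in phi]


def toy_production(features):
    """Hypothetical production model with one interaction term and one inert feature."""
    permeability, fluid_volume, depth = features
    return 2.0 * permeability + permeability * fluid_volume + 0.0 * depth


x = [1.0, 2.0, 5.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_production, x, baseline)
print(phi)
```

The attributions sum to the difference between the model output at `x` and at the baseline, the interaction term is split equally between the two features involved, and the inert feature receives zero. Exact enumeration grows factorially with the number of features, which is why practical tools rely on approximations.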

RGB Image Classification with Quantum Convolutional Ansaetze

Jul 23, 2021

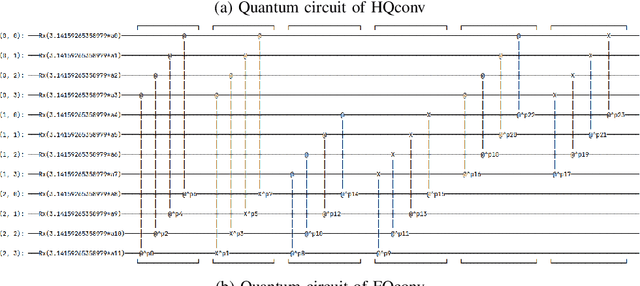

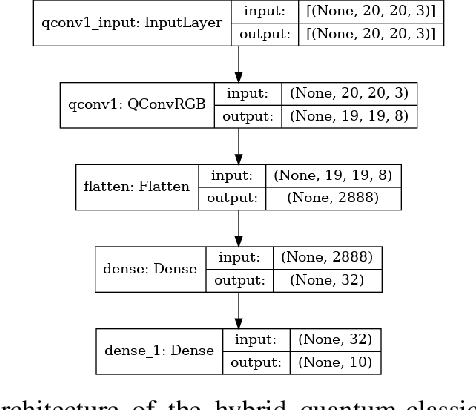

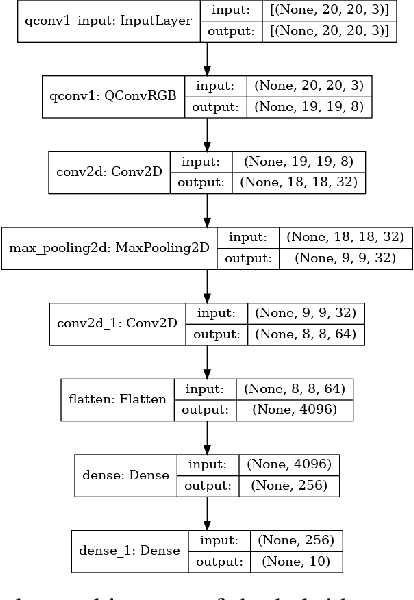

Abstract: With the rapid growth of qubit numbers and coherence times in quantum hardware, implementing shallow neural networks on so-called Noisy Intermediate-Scale Quantum (NISQ) devices has attracted a lot of interest. Many quantum (convolutional) circuit ansaetze have been proposed for grayscale image classification tasks, with promising empirical results. However, when these ansaetze are applied to RGB images, the intra-channel information that is useful for vision tasks is not extracted effectively. In this paper, we propose two types of quantum circuit ansaetze to simulate convolution operations on RGB images, which differ in how inter-channel and intra-channel information are extracted. To the best of our knowledge, this is the first work on a quantum convolutional circuit that deals with RGB images effectively, with a higher test accuracy than purely classical CNNs. We also investigate the relationship between the size of the quantum circuit ansatz and the learnability of the hybrid quantum-classical convolutional neural network. Through experiments on the CIFAR-10 and MNIST datasets, we demonstrate that a larger quantum circuit ansatz improves predictive performance in multiclass classification tasks, providing useful insights for near-term quantum algorithm development.

Geodesic Clustering in Deep Generative Models

Sep 13, 2018

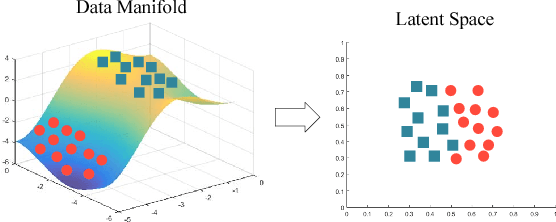

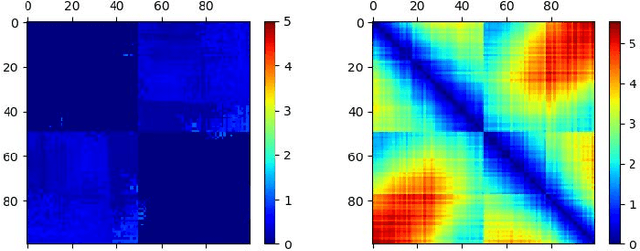

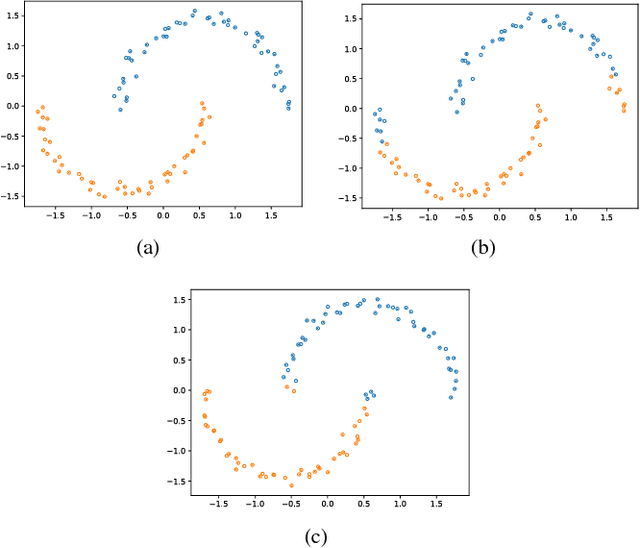

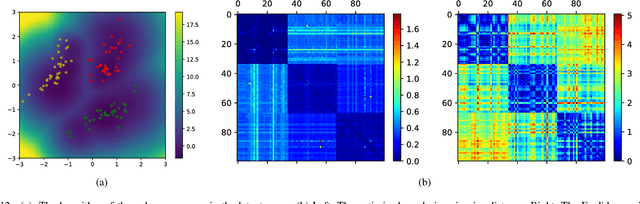

Abstract: Deep generative models are tremendously successful in learning low-dimensional latent representations that describe the data well. These representations, however, tend to strongly distort relationships between points, i.e. pairwise distances tend not to reflect semantic similarities well. This makes unsupervised tasks, such as clustering, difficult when working with the latent representations. We demonstrate that taking the geometry of the generative model into account is sufficient to make simple clustering algorithms work well over latent representations. Building on the recent finding that deep generative models constitute stochastically immersed Riemannian manifolds, we propose an efficient algorithm for computing geodesics (shortest paths) and distances in the latent space while taking its distortion into account. We further propose a new architecture for modeling uncertainty in variational autoencoders, which is essential for understanding the geometry of deep generative models. Experiments show that the geodesic distance is very likely to reflect the internal structure of the data.
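To make the idea of distortion concrete, the sketch below measures the length of a latent path after decoding rather than in the latent space itself. It is a deterministic toy, not the paper's algorithm: the `decoder` function (a surface with a sharp bump at the latent origin), the two candidate paths, and the step counts are all assumptions, and the stochastic decoder and Riemannian metric described in the abstract are not modeled.

```python
import math


def decoder(z):
    """Hypothetical decoder: 2-D latent code to a 3-D point with a bump near the origin."""
    z0, z1 = z
    height = 3.0 * math.exp(-(z0 * z0 + z1 * z1) / 0.1)
    return (z0, z1, height)


def interpolate(a, b, steps):
    """Evenly spaced latent points on the straight segment from a to b (inclusive)."""
    return [tuple(p + (q - p) * t / steps for p, q in zip(a, b))
            for t in range(steps + 1)]


def decoded_length(path):
    """Length of a latent path measured after decoding, as a polyline approximation."""
    points = [decoder(z) for z in path]
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


start, end = (-1.0, 0.0), (1.0, 0.0)
waypoint = (0.0, 1.0)

# Shortest path in latent coordinates, which crosses the bump.
straight = interpolate(start, end, 200)
# Longer path in latent coordinates, which goes around the bump.
detour = interpolate(start, waypoint, 100) + interpolate(waypoint, end, 100)[1:]

straight_len = decoded_length(straight)
detour_len = decoded_length(detour)
print(f"straight: {straight_len:.3f}  detour: {detour_len:.3f}")
```

Here the path that is shortest in latent coordinates is the longer one once the decoder's geometry is taken into account, which is the kind of mismatch that makes plain Euclidean latent distances unreliable for clustering.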
