Xianke Chen

Partially Relevant Video Retrieval

Aug 26, 2022

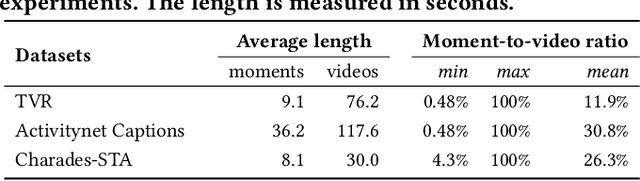

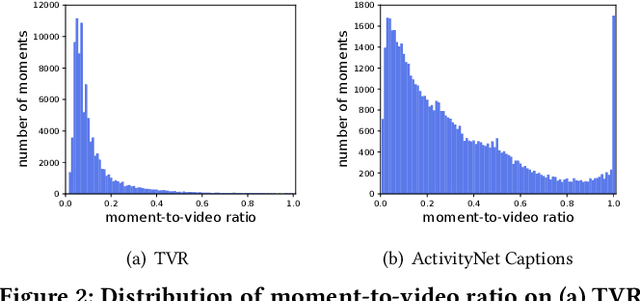

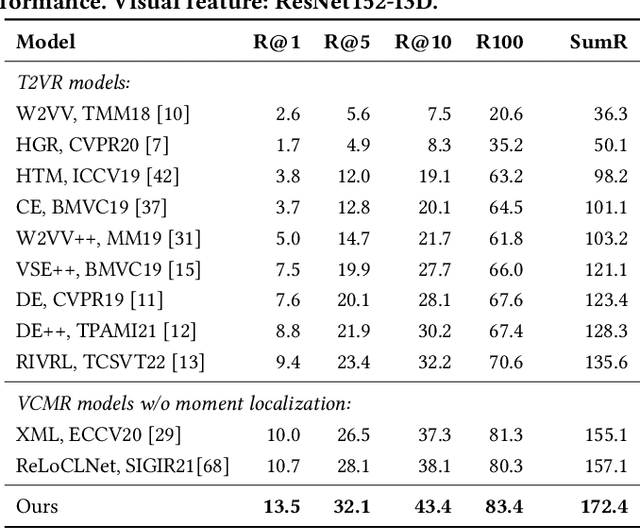

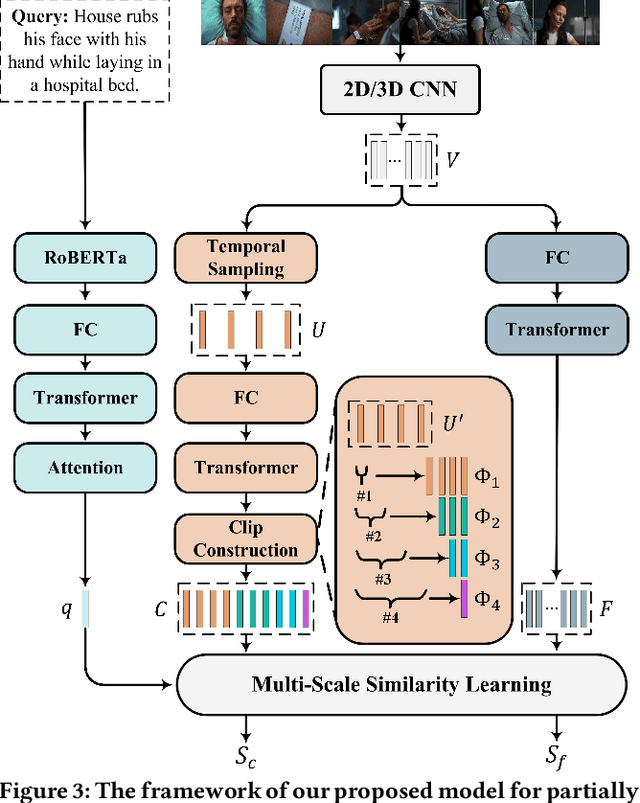

Abstract: Current methods for text-to-video retrieval (T2VR) are trained and tested on video-captioning oriented datasets such as MSVD, MSR-VTT and VATEX. A key property of these datasets is that videos are assumed to be temporally pre-trimmed and short in duration, whilst the provided captions describe the gist of the video content well. Consequently, for a given paired video and caption, the video is supposed to be fully relevant to the caption. In reality, however, as queries are not known a priori, pre-trimmed video clips may not contain sufficient content to fully meet the query. This suggests a gap between the literature and the real world. To fill the gap, we propose in this paper a novel T2VR subtask termed Partially Relevant Video Retrieval (PRVR). An untrimmed video is considered to be partially relevant w.r.t. a given textual query if it contains a moment relevant to the query. PRVR aims to retrieve such partially relevant videos from a large collection of untrimmed videos. PRVR differs from single video moment retrieval and video corpus moment retrieval, as the latter two retrieve moments rather than untrimmed videos. We formulate PRVR as a multiple instance learning (MIL) problem, where a video is simultaneously viewed as a bag of video clips and a bag of video frames. Clips and frames represent video content at different time scales. We propose a Multi-Scale Similarity Learning (MS-SL) network that jointly learns clip-scale and frame-scale similarities for PRVR. Extensive experiments on three datasets (TVR, ActivityNet Captions, and Charades-STA) demonstrate the viability of the proposed method. We also show that our method can be used for improving video corpus moment retrieval.
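
The MIL view described in the abstract can be illustrated with a toy sketch. This is not the MS-SL network: the abstract does not say how clip-scale and frame-scale similarities are computed or combined, so the max-over-instances scoring, the cosine similarity, the `clip_weight` parameter, and all function names below are assumptions chosen only to show the idea of scoring an untrimmed video by its best-matching part at two time scales.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def bag_similarity(query, instances):
    """MIL-style score (assumed): a bag is as relevant as its best instance."""
    return max(cosine(query, x) for x in instances)

def video_score(query, clips, frames, clip_weight=0.5):
    """Combine clip-scale and frame-scale scores (weighted sum is an assumption)."""
    clip_sim = bag_similarity(query, clips)
    frame_sim = bag_similarity(query, frames)
    return clip_weight * clip_sim + (1 - clip_weight) * frame_sim

def rank_videos(query, videos):
    """Return (video_id, score) pairs sorted from most to least relevant."""
    scored = [
        (vid, video_score(query, v["clips"], v["frames"]))
        for vid, v in videos.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical 2-D embeddings; real features would come from trained encoders.
query = [1.0, 0.0]
videos = {
    "cooking": {
        "clips": [[0.0, 1.0], [1.0, 0.1]],
        "frames": [[0.0, 1.0], [0.9, 0.0], [0.1, 1.0]],
    },
    "hiking": {
        "clips": [[0.0, 1.0], [0.1, 1.0]],
        "frames": [[0.0, 1.0], [0.2, 1.0]],
    },
}
ranking = rank_videos(query, videos)
```

In this toy data only one clip and one frame of the "cooking" video match the query, yet the video still ranks first, which is the behaviour partial relevance calls for: most of an untrimmed video may be unrelated to the query.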

Reading-strategy Inspired Visual Representation Learning for Text-to-Video Retrieval

Jan 23, 2022

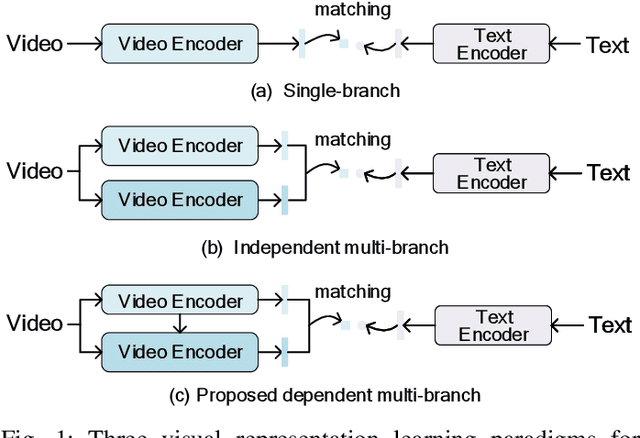

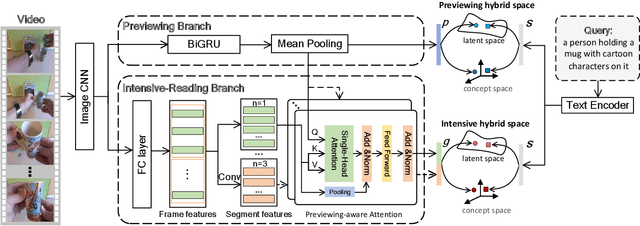

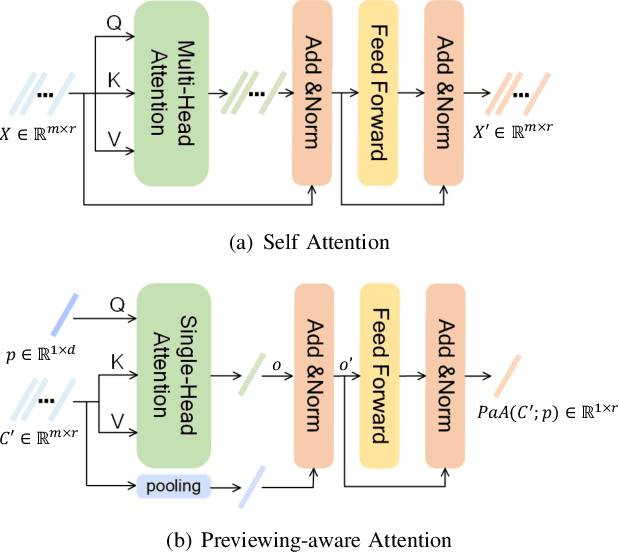

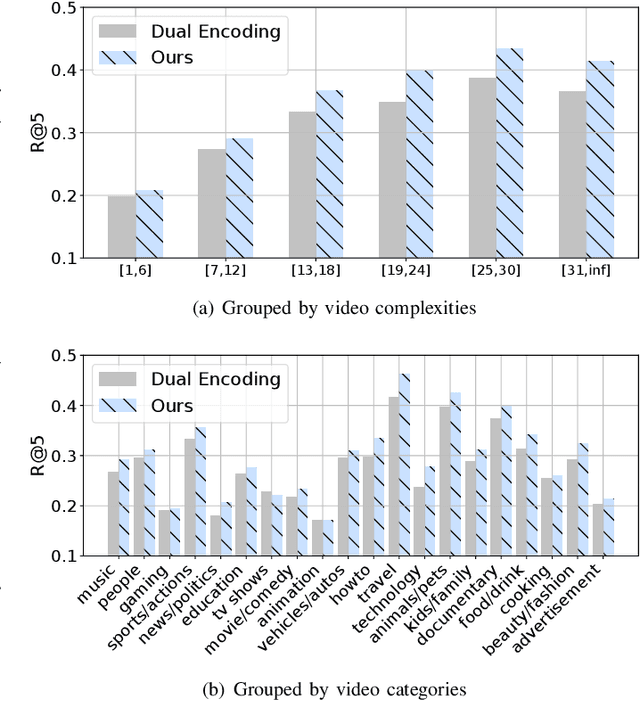

Abstract: This paper addresses the task of text-to-video retrieval: given a query in the form of a natural-language sentence, the goal is to retrieve videos that are semantically relevant to the query from a large collection of unlabeled videos. The success of this task depends on cross-modal representation learning that projects both videos and sentences into common spaces for semantic similarity computation. In this work, we concentrate on video representation learning, an essential component for text-to-video retrieval. Inspired by the reading strategy of humans, we propose Reading-strategy Inspired Visual Representation Learning (RIVRL) to represent videos, which consists of two branches: a previewing branch and an intensive-reading branch. The previewing branch is designed to briefly capture the overview information of videos, while the intensive-reading branch is designed to obtain more in-depth information. Moreover, the intensive-reading branch is aware of the video overview captured by the previewing branch. Such holistic information is found to be useful for the intensive-reading branch to extract more fine-grained features. Extensive experiments on three datasets are conducted, where our model RIVRL achieves a new state-of-the-art on TGIF and VATEX. Moreover, on MSR-VTT, our model using two video features shows comparable performance to the state-of-the-art using seven video features and even outperforms models pre-trained on the large-scale HowTo100M dataset.
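
The two-branch idea can be sketched in a few lines. This is not the RIVRL architecture: the abstract does not specify either branch, so the mean pooling used for the preview, the overview-conditioned softmax attention used for intensive reading, the concatenation of the two outputs, and every function name below are assumptions meant only to show how a second branch can be made aware of an overview produced by the first.

```python
import math

def mean_pool(frames):
    """Average frame features into a single overview vector."""
    n = len(frames)
    return [sum(f[i] for f in frames) / n for i in range(len(frames[0]))]

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def preview_branch(frames):
    """Cheap overview of the whole video (assumed: mean pooling)."""
    return mean_pool(frames)

def intensive_branch(frames, overview):
    """Re-read frames with attention weights conditioned on the overview."""
    scores = [sum(a * b for a, b in zip(f, overview)) for f in frames]
    weights = softmax(scores)
    dim = len(frames[0])
    return [sum(w * f[i] for w, f in zip(weights, frames)) for i in range(dim)]

def encode_video(frames):
    """Concatenate overview and overview-aware features (an assumption)."""
    overview = preview_branch(frames)
    detail = intensive_branch(frames, overview)
    return overview + detail

# Hypothetical 2-D frame features: two similar frames and one outlier.
frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
embedding = encode_video(frames)
```

Here the overview pulls attention toward the two frames that agree with it, so the second half of `embedding` emphasises the dominant content more strongly than the plain average does.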
