Xiangning Xie

Terrain Point Cloud Inpainting via Signal Decomposition

Apr 04, 2024

Abstract: The rapid development of 3D acquisition technology has made it possible to obtain point clouds of real-world terrains. However, due to limitations in sensor acquisition technology or specific requirements, point clouds often contain defects such as holes with missing data. Inpainting algorithms are widely used to patch these holes. However, traditional inpainting algorithms rely on precise hole boundaries, which limits their ability to handle cases where the boundaries are not well defined. Learning-based completion methods, on the other hand, often prioritize reconstructing the entire point cloud rather than focusing solely on hole filling. Based on the fact that real-world terrain exhibits both global smoothness and rich local detail, we propose a novel representation for terrain point clouds that helps repair holes without clear boundaries. Specifically, it decomposes terrains into low-frequency and high-frequency components, which are represented by B-spline surfaces and relative height maps, respectively. In this way, the terrain point cloud inpainting problem is transformed into a B-spline surface fitting problem and a 2D image inpainting problem. By solving these two problems, highly complex and irregular holes in terrain point clouds can be filled well, in a way that both follows the global terrain undulation and exhibits rich geometric detail. The experimental results demonstrate the effectiveness of our method.
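
The decomposition idea can be illustrated with a minimal sketch. This is not the paper's implementation: it assumes the point cloud has already been rasterized onto a regular height grid with holes marked as `None`, it swaps in a box-filter average for the B-spline surface fit, and it swaps in a neighbor average for the 2D image inpainting step. The names `box_mean` and `inpaint_height_map` are hypothetical.

```python
def box_mean(grid, radius):
    """Mean of the known (non-None) cells in a square window around each cell.

    Assumes every window contains at least one known cell.
    """
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            vals = [grid[i][j]
                    for i in range(max(0, r - radius), min(rows, r + radius + 1))
                    for j in range(max(0, c - radius), min(cols, c + radius + 1))
                    if grid[i][j] is not None]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def inpaint_height_map(height, low_radius=2, detail_radius=1):
    # Low-frequency component (stand-in for the B-spline surface fit);
    # it is defined everywhere, including inside holes.
    low = box_mean(height, low_radius)
    # High-frequency component: height relative to the smooth surface,
    # only available where the original height is known.
    detail = [[None if h is None else h - l for h, l in zip(h_row, l_row)]
              for h_row, l_row in zip(height, low)]
    # Fill the relative height map (stand-in for 2D image inpainting).
    detail_filled = box_mean(detail, detail_radius)
    # Recombine both components inside holes; keep known cells untouched.
    return [[l + d if h is None else h
             for h, l, d in zip(h_row, l_row, d_row)]
            for h_row, l_row, d_row in zip(height, low, detail_filled)]


# Toy terrain: a tilted plane (global trend) plus a checkerboard (local detail).
terrain = [[0.5 * r + 0.2 * c + 0.1 * ((r + c) % 2) for c in range(7)]
           for r in range(7)]
true_value = terrain[3][3]
terrain[3][3] = None  # punch a one-cell hole
repaired = inpaint_height_map(terrain)
```

In this sketch the hole never needs an explicit boundary: the smooth component is estimated from whatever known cells fall in the window, and only the residual detail is inpainted in 2D.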

Efficient Evaluation Methods for Neural Architecture Search: A Survey

Jan 14, 2023

Abstract: Neural Architecture Search (NAS) has received increasing attention because of its exceptional merits in automating the design of Deep Neural Network (DNN) architectures. However, the performance evaluation process, a key part of NAS, often requires training a large number of DNNs, which inevitably makes NAS computationally expensive. In past years, many Efficient Evaluation Methods (EEMs) have been proposed to address this critical issue. In this paper, we comprehensively survey the EEMs published to date and provide a detailed analysis to motivate the further development of this research direction. Specifically, we divide the existing EEMs into four categories based on the number of DNNs trained for constructing these EEMs. This categorization reflects the degree of efficiency in principle, which in turn helps readers quickly grasp the methodological features. In surveying each category, we further discuss the design principles and analyze the strengths and weaknesses to clarify the landscape of existing EEMs, making the research trends of EEMs easier to understand. Furthermore, we discuss the current challenges and issues to identify future research directions in this emerging topic. To the best of our knowledge, this is the first work that extensively and systematically surveys the EEMs of NAS.

Differentiable Search of Accurate and Robust Architectures

Jan 02, 2023

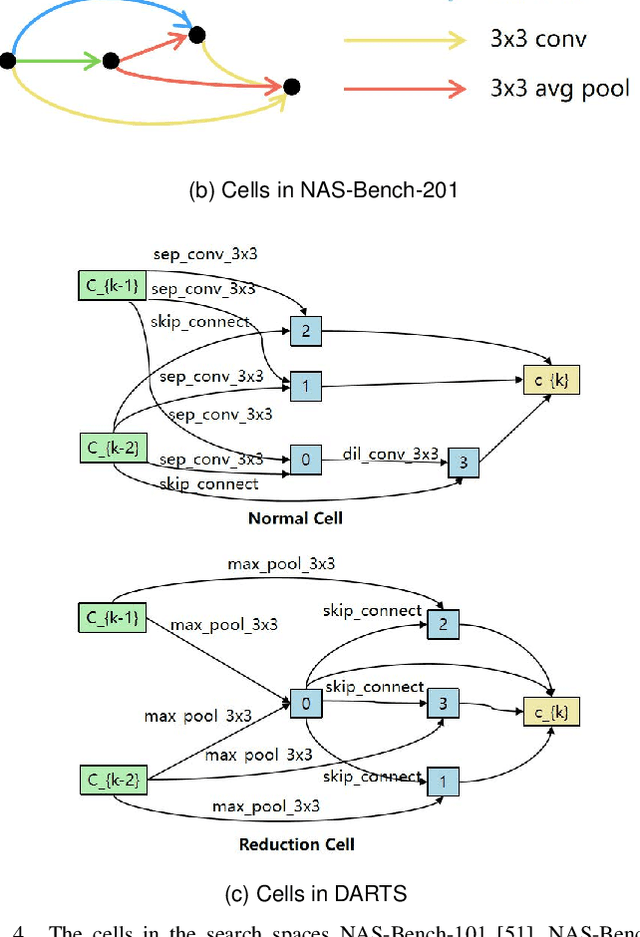

Abstract: Deep neural networks (DNNs) are found to be vulnerable to adversarial attacks, and various methods have been proposed for defense. Among these methods, adversarial training has been drawing increasing attention because of its simplicity and effectiveness. However, the performance of adversarial training is greatly limited by the architectures of the target DNNs, which often leaves the resulting DNNs with poor accuracy and unsatisfactory robustness. To address this problem, we propose DSARA to automatically search for neural architectures that are accurate and robust after adversarial training. In particular, we design a novel cell-based search space specifically for adversarial training, which improves the accuracy and the robustness upper bound of the searched architectures by carefully designing the placement of the cells and the proportional relationship of the filter numbers. We then propose a two-stage search strategy to search for both accurate and robust neural architectures. In the first stage, the architecture parameters are optimized to minimize the adversarial loss, which makes full use of the effectiveness of adversarial training in enhancing robustness. In the second stage, the architecture parameters are optimized to minimize both the natural loss and the adversarial loss using the proposed multi-objective adversarial training method, so that the searched neural architectures are both accurate and robust. We evaluate the proposed algorithm on natural data and under various adversarial attacks, which reveals the superiority of the proposed method in terms of both accuracy and robustness. We also conclude that accurate and robust neural architectures tend to deploy very different structures near the input and the output, which has great practical significance for both hand-crafting and automatically designing accurate and robust neural architectures.
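
The two-stage schedule can be sketched on a toy problem. This is only an illustration under loud assumptions: the architecture parameters are reduced to a single scalar `alpha`, both losses are hypothetical quadratics, and the second stage uses a plain weighted sum of gradients, since the abstract does not describe how the multi-objective adversarial training method combines the two losses. The function name `two_stage_search` and its parameters are invented for this sketch.

```python
def two_stage_search(alpha, grad_adv, grad_nat,
                     steps_stage1, steps_stage2, lr=0.1, weight=0.5):
    """Gradient descent on a scalar architecture parameter in two stages."""
    # Stage 1: minimize the adversarial loss only.
    for _ in range(steps_stage1):
        alpha -= lr * grad_adv(alpha)
    # Stage 2: minimize natural and adversarial loss together.
    # ASSUMPTION: a fixed weighted sum stands in for the paper's
    # multi-objective method, which the abstract does not specify.
    for _ in range(steps_stage2):
        alpha -= lr * (weight * grad_nat(alpha) + (1 - weight) * grad_adv(alpha))
    return alpha


# Hypothetical toy losses: L_adv = (alpha - 2)^2 and L_nat = (alpha + 1)^2.
def grad_adv(a):
    return 2 * (a - 2)


def grad_nat(a):
    return 2 * (a + 1)


after_stage1 = two_stage_search(0.0, grad_adv, grad_nat, 200, 0)
after_stage2 = two_stage_search(0.0, grad_adv, grad_nat, 200, 200)
```

On these toy losses the first stage settles at the adversarial optimum and the second stage moves to a compromise between the two optima, which is the qualitative behavior the two-stage strategy is meant to produce.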

Architecture Augmentation for Performance Predictor Based on Graph Isomorphism

Jul 03, 2022

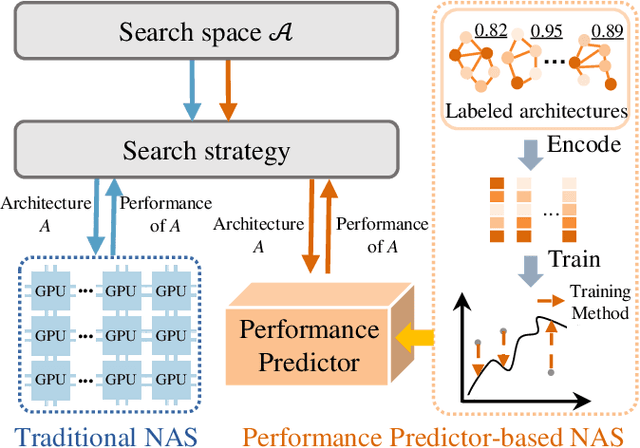

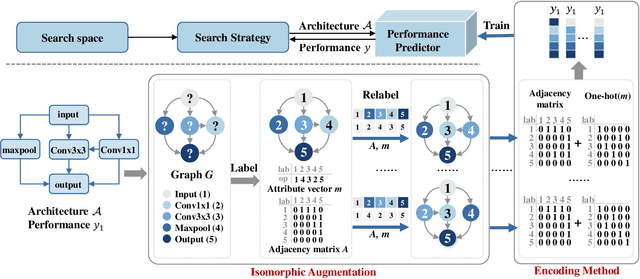

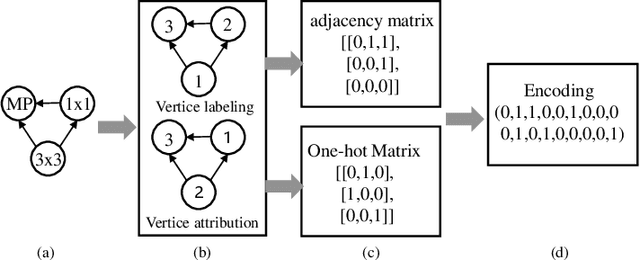

Abstract: Neural Architecture Search (NAS) can automatically design architectures for deep neural networks (DNNs) and has become one of the hottest research topics in the current machine learning community. However, NAS is often computationally expensive because a large number of DNNs need to be trained to obtain their performance during the search process. Performance predictors can greatly alleviate the prohibitive cost of NAS by directly predicting the performance of DNNs. However, building satisfactory performance predictors depends heavily on having enough trained DNN architectures, which are difficult to obtain in most scenarios. To solve this critical issue, we propose an effective DNN architecture augmentation method named GIAug in this paper. Specifically, we first propose a mechanism based on graph isomorphism, which has the merit of efficiently generating a factorial of $\boldsymbol n$ (i.e., $\boldsymbol n!$) diverse annotated architectures from a single architecture having $\boldsymbol n$ nodes. In addition, we design a generic method to encode the architectures into a form suitable for most prediction models. As a result, GIAug can be flexibly utilized by various existing performance-predictor-based NAS algorithms. We perform extensive experiments on the CIFAR-10 and ImageNet benchmark datasets on small-, medium-, and large-scale search spaces. The experiments show that GIAug can significantly enhance the performance of most state-of-the-art peer predictors. In addition, GIAug can save up to three orders of magnitude of computation cost on ImageNet while achieving similar performance when compared with state-of-the-art NAS algorithms.
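
The graph-isomorphism idea can be sketched as follows. This is a minimal illustration, not GIAug's actual encoding: it assumes an architecture is given as an adjacency matrix plus a list of per-node operations, and it relabels the nodes with every permutation so that one trained architecture yields $n!$ encodings sharing the same performance label. The function name `isomorphic_encodings` and the operation names are hypothetical.

```python
from itertools import permutations


def isomorphic_encodings(adj, ops):
    """Return every node relabeling of a DAG given as (adjacency, ops)."""
    n = len(ops)
    encodings = []
    for perm in permutations(range(n)):
        # New node i takes the role of old node perm[i]; edges and
        # operations are moved together, so the graph itself is unchanged.
        new_adj = [[adj[perm[i]][perm[j]] for j in range(n)] for i in range(n)]
        new_ops = [ops[perm[i]] for i in range(n)]
        encodings.append((new_adj, new_ops))
    return encodings


# A three-node architecture: input -> conv3x3 -> output, plus a skip edge.
adj = [[0, 1, 1],
       [0, 0, 1],
       [0, 0, 0]]
ops = ["input", "conv3x3", "output"]

encodings = isomorphic_encodings(adj, ops)  # 3! = 6 encodings
```

Each tuple in `encodings` describes the same network, so a predictor can be trained on all of them with the accuracy measured once for the original architecture.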

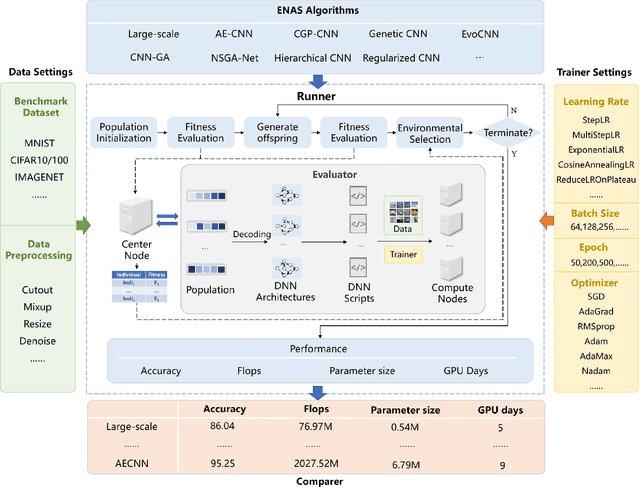

BenchENAS: A Benchmarking Platform for Evolutionary Neural Architecture Search

Aug 14, 2021

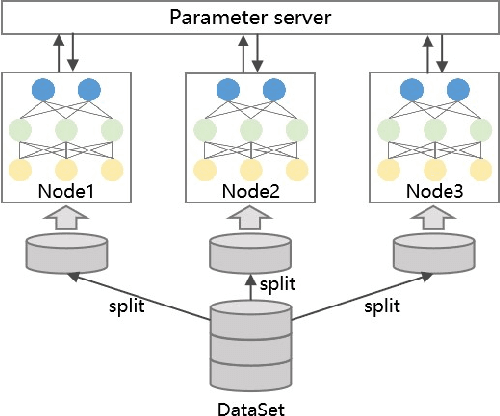

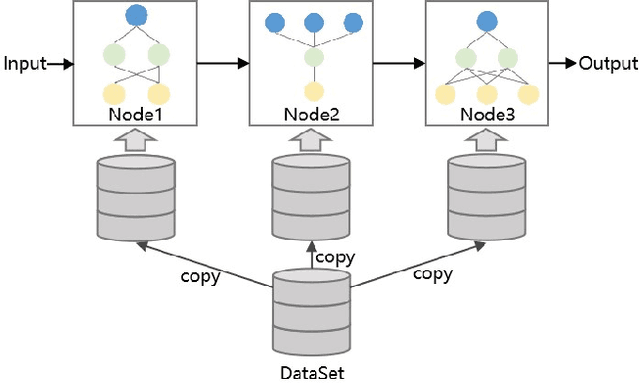

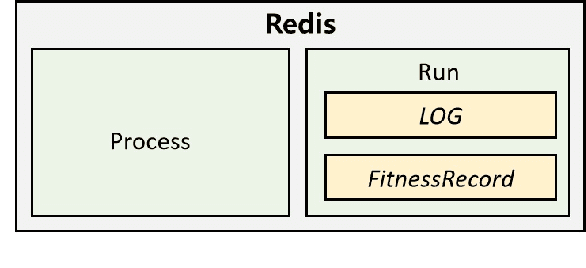

Abstract: Neural architecture search (NAS), which automatically designs the architectures of deep neural networks, has achieved breakthrough success in many applications over the past few years. Among the different classes of NAS methods, evolutionary-computation-based NAS (ENAS) methods have recently gained much attention. Unfortunately, the issues of fair comparison and efficient evaluation have hindered the development of ENAS. The current benchmark architecture datasets designed for fair comparisons provide only the datasets, not the ENAS algorithms or a platform to run the algorithms. The existing efficient evaluation methods are either not suitable for population-based ENAS algorithms or too complex to use. This paper develops a platform named BenchENAS to address these issues. BenchENAS aims to achieve fair comparisons by running different algorithms in the same environment and with the same settings. To achieve efficient evaluation in a common lab environment, BenchENAS provides a parallel component and a cache component with high maintainability. Furthermore, BenchENAS is easy to install, highly configurable, and modular, which gives it good usability and easy extensibility. The paper conducts efficient comparison experiments on eight ENAS algorithms with high GPU utilization on this platform. The experiments validate that the fair comparison issue does exist and that BenchENAS can alleviate it. A website has been built to promote BenchENAS at https://benchenas.com, where interested researchers can obtain the source code and documentation of BenchENAS for free.
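
The role of a cache component in population-based search can be sketched as below. This is not BenchENAS's API: the class `FitnessCache`, its methods, and the dictionary-based architecture encoding are all hypothetical, and the expensive training run is replaced by a cheap stand-in function. The point is only that identical individuals, which recur often across generations, are evaluated once.

```python
import hashlib
import json


class FitnessCache:
    """Memoize fitness values keyed by a hash of the architecture encoding."""

    def __init__(self, evaluate):
        self._evaluate = evaluate  # expensive function: architecture -> fitness
        self._store = {}
        self.hits = 0

    def key(self, arch):
        # Canonical JSON so that equal encodings always hash identically.
        text = json.dumps(arch, sort_keys=True)
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def fitness(self, arch):
        k = self.key(arch)
        if k in self._store:
            self.hits += 1
        else:
            self._store[k] = self._evaluate(arch)
        return self._store[k]


calls = []


def fake_train(arch):
    # Stand-in for training a DNN; records how often it is invoked.
    calls.append(arch)
    return sum(arch["filters"]) / 1000.0


cache = FitnessCache(fake_train)
population = [
    {"filters": [32, 64], "depth": 2},
    {"depth": 2, "filters": [32, 64]},  # same individual, different key order
    {"filters": [64, 128], "depth": 2},
]
scores = [cache.fitness(ind) for ind in population]
```

Here the second individual is served from the cache, so only two of the three evaluations actually run.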
