Yuwei Ou

Towards Accurate and Robust Architectures via Neural Architecture Search

May 09, 2024

Abstract: To defend deep neural networks against adversarial attacks, adversarial training has drawn increasing attention for its effectiveness. However, the accuracy and robustness that adversarial training can achieve are limited by the architecture, because adversarial training improves accuracy and robustness only by adjusting the connection weights of that architecture. In this work, we propose ARNAS to search for accurate and robust architectures for adversarial training. First, we design an accurate and robust search space, in which the placement of the cells and the proportional relationship of the filter numbers are carefully determined. With this design, architectures can obtain both accuracy and robustness by deploying accurate structures and robust structures at the positions sensitive to each, respectively. Then we propose a differentiable multi-objective search strategy that performs gradient descent in directions that benefit both the natural loss and the adversarial loss, so that accuracy and robustness can be guaranteed at the same time. We conduct comprehensive experiments in terms of white-box attacks, black-box attacks, and transferability. Experimental results show that the searched architecture has the strongest robustness with competitive accuracy, and breaks the traditional idea that NAS-based architectures cannot transfer well to complex tasks in robustness scenarios. By analyzing the outstanding architectures found by the search, we also conclude that accurate and robust neural architectures tend to deploy different structures near the input and the output, which has great practical significance for both the hand-crafted and the automated design of accurate and robust architectures.
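The idea of descending in a direction that benefits both the natural loss and the adversarial loss can be illustrated with a small sketch. The abstract does not give the ARNAS update rule, so the code below assumes a standard stand-in: the minimum-norm convex combination of two gradients, which has a closed form in the two-objective case. The names `common_descent_direction`, `g_nat`, and `g_adv` are hypothetical and chosen only for illustration.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def common_descent_direction(g_nat, g_adv):
    """Min-norm point on the segment between two gradients.

    Assumption: this is a generic two-objective rule, not the
    ARNAS search strategy itself.
    """
    diff = [a - b for a, b in zip(g_nat, g_adv)]
    denom = dot(diff, diff)
    if denom == 0.0:
        # Identical gradients: either one is already a common direction.
        return list(g_nat)
    # Minimizer of ||gamma * g_nat + (1 - gamma) * g_adv||^2, clipped to [0, 1].
    gamma = dot([b - a for a, b in zip(g_nat, g_adv)], g_adv) / denom
    gamma = min(1.0, max(0.0, gamma))
    return [gamma * a + (1.0 - gamma) * b for a, b in zip(g_nat, g_adv)]


# Two partially conflicting gradients (toy values).
g_nat = [1.0, 0.0]
g_adv = [-0.5, 1.0]
d = common_descent_direction(g_nat, g_adv)
# A step along -d does not increase either loss to first order,
# because d has a non-negative inner product with both gradients.
print(dot(d, g_nat) >= 0.0, dot(d, g_adv) >= 0.0)
```

When the two gradients conflict, a plain sum can still increase one of the losses; the min-norm combination avoids this to first order, which is why it is a common choice for illustrating multi-objective gradient descent.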

Differentiable Genetic Programming for High-dimensional Symbolic Regression

Apr 18, 2023

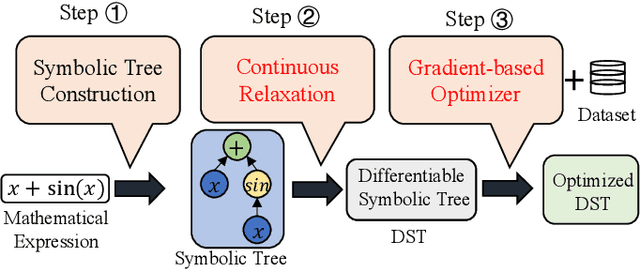

Abstract: Symbolic regression (SR) is the process of discovering hidden relationships in data in the form of mathematical expressions, and is considered an effective route to interpretable machine learning (ML). Genetic programming (GP) has been the dominant approach to solving SR problems. However, as the scale of SR problems increases, GP often performs poorly and cannot effectively address real-world high-dimensional problems. This limitation is mainly caused by the stochastic evolutionary nature of traditional GP in constructing the trees. In this paper, we propose a differentiable approach named DGP to construct GP trees for high-dimensional SR for the first time. Specifically, a new data structure called the differentiable symbolic tree is proposed to relax the discrete structure into a continuous one, so that a gradient-based optimizer can be used for efficient optimization. In addition, a sampling method is proposed to eliminate the discrepancy caused by this relaxation and to yield valid symbolic expressions. Furthermore, a diversification mechanism is introduced to help the optimizer escape from local optima and reach globally better solutions. With these designs, the proposed DGP method can efficiently search for GP trees with higher performance, and is therefore capable of dealing with high-dimensional SR. To demonstrate the effectiveness of DGP, we conducted various experiments against state-of-the-art methods based on both GP and deep neural networks. The experimental results reveal that DGP can outperform these peer competitors on high-dimensional regression benchmarks with dimensions varying from tens to thousands. In addition, on synthetic SR problems, the proposed DGP method also achieves the best recovery rate across different noise levels. It is believed that this work can help SR become a powerful alternative for interpretable ML on a broader range of real-world problems.
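The relaxation of a discrete tree into a continuous one can be pictured on a single node. The sketch below is an assumption-laden illustration, not the DGP data structure: it treats the choice of operator at one node as a softmax-weighted mixture over a small, hypothetical operator set (`OPS`), and it uses a plain argmax as a stand-in for the sampling method mentioned in the abstract.

```python
import math

# Hypothetical candidate operators for one internal tree node.
OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def relaxed_node(logits, a, b):
    """Continuous node output: a weighted mixture of all operators.

    The output is differentiable in the logits, so a gradient-based
    optimizer can adjust which operator dominates.
    """
    weights = softmax(logits)
    return sum(w * op(a, b) for w, op in zip(weights, OPS.values()))


def discretize(logits):
    """Recover one valid operator (argmax; a stand-in for sampling)."""
    names = list(OPS)
    return names[max(range(len(logits)), key=logits.__getitem__)]


print(relaxed_node([0.0, 0.0, 0.0], 2.0, 3.0))  # equal mixture of 5, -1 and 6
print(discretize([0.1, 2.0, -1.0]))
```

As the logits become peaked, the mixture approaches the output of a single operator, which is the gap between the relaxed tree and a valid discrete expression that a sampling or discretization step has to close.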

Differentiable Search of Accurate and Robust Architectures

Jan 02, 2023

Abstract: Deep neural networks (DNNs) are known to be vulnerable to adversarial attacks, and various defense methods have been proposed. Among these methods, adversarial training has drawn increasing attention because of its simplicity and effectiveness. However, the performance of adversarial training is greatly limited by the architecture of the target DNN, which often leaves the resulting DNN with poor accuracy and unsatisfactory robustness. To address this problem, we propose DSARA to automatically search for neural architectures that are accurate and robust after adversarial training. In particular, we design a novel cell-based search space specifically for adversarial training, which raises the upper bound on the accuracy and robustness of the searched architectures by carefully designing the placement of the cells and the proportional relationship of the filter numbers. Then we propose a two-stage search strategy to search for neural architectures that are both accurate and robust. In the first stage, the architecture parameters are optimized to minimize the adversarial loss, which makes full use of the effectiveness of adversarial training in enhancing robustness. In the second stage, the architecture parameters are optimized to minimize both the natural loss and the adversarial loss using the proposed multi-objective adversarial training method, so that the searched neural architectures are both accurate and robust. We evaluate the proposed algorithm on natural data and under various adversarial attacks, and the results reveal the superiority of the proposed method in finding architectures that are both accurate and robust. We also conclude that accurate and robust neural architectures tend to deploy very different structures near the input and the output, which has great practical significance for both the hand-crafted and the automated design of accurate and robust neural architectures.
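The difference between the two stages can be made concrete on a toy model. The sketch below is illustrative only and makes several assumptions that are not in the abstract: a linear classifier with logistic loss stands in for the network, a single FGSM step stands in for the attack, and a fixed weighted sum (`nat_weight`, a hypothetical parameter) stands in for the multi-objective adversarial training method.

```python
import math


def logistic_loss(w, x, y):
    """Logistic loss of a linear model for a label y in {-1, +1}."""
    margin = y * sum(wi * xi for wi, xi in zip(w, x))
    return math.log1p(math.exp(-margin))


def fgsm(w, x, y, eps):
    """One FGSM step: move each input coordinate by eps along the sign
    of the loss gradient, which for this model is sign(-y * w_i)."""
    return [
        xi + eps * math.copysign(1.0, -y * wi) if wi != 0 else xi
        for xi, wi in zip(x, w)
    ]


def search_objective(stage, w, x, y, eps=0.1, nat_weight=0.5):
    """Stage 1: adversarial loss only. Stage 2: natural and adversarial
    loss combined (a weighted sum is assumed here for simplicity)."""
    adv = logistic_loss(w, fgsm(w, x, y, eps), y)
    if stage == 1:
        return adv
    nat = logistic_loss(w, x, y)
    return nat_weight * nat + (1.0 - nat_weight) * adv


w, x, y = [1.0, -2.0], [0.5, -0.5], 1
print(search_objective(1, w, x, y))  # adversarial loss only
print(search_objective(2, w, x, y))  # blend of natural and adversarial loss
```

In this toy setting the adversarial loss is never smaller than the natural loss, so the stage-two objective lies between the two, which mirrors the trade-off between accuracy and robustness that the second stage is meant to balance.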
