William R. Norris

L-Lipschitz Gershgorin ResNet Network

Feb 28, 2025

Abstract:Deep residual networks (ResNets) have demonstrated outstanding success in computer vision tasks, attributed to their ability to maintain gradient flow through deep architectures. Simultaneously, controlling the Lipschitz bound in neural networks has emerged as an essential area of research for enhancing adversarial robustness and network certifiability. This paper uses a rigorous approach to design $\mathcal{L}$-Lipschitz deep residual networks using a Linear Matrix Inequality (LMI) framework. The ResNet architecture was reformulated as a pseudo-tri-diagonal LMI with off-diagonal elements, and closed-form constraints on network parameters were derived to ensure $\mathcal{L}$-Lipschitz continuity. To address the lack of explicit eigenvalue computations for such matrix structures, the Gershgorin circle theorem was employed to approximate eigenvalue locations, guaranteeing the LMI's negative semi-definiteness. Our contributions include a provable parameterization methodology for constructing Lipschitz-constrained networks and a compositional framework for managing recursive systems within hierarchical architectures. These findings enable robust network designs applicable to adversarial robustness, certified training, and control systems. However, a limitation was identified in the Gershgorin-based approximations, which over-constrain the system, suppressing non-linear dynamics and diminishing the network's expressive capacity.
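As a rough illustration of the Gershgorin argument described in the abstract above, the sketch below bounds the eigenvalues of a real symmetric matrix from its rows and uses that bound as a sufficient (not necessary) test for negative semi-definiteness. The function names and the two small matrices are hypothetical and are not taken from the paper; the second matrix is chosen only to show how the test can be conservative.

```python
import numpy as np

def gershgorin_upper_bound(M):
    """Largest right endpoint of the Gershgorin discs of a real symmetric matrix."""
    M = np.asarray(M, dtype=float)
    diag = np.diag(M)
    # Disc radius for each row: sum of absolute off-diagonal entries.
    radii = np.sum(np.abs(M), axis=1) - np.abs(diag)
    return float(np.max(diag + radii))

def certified_negative_semidefinite(M):
    """Sufficient test: every disc lies in the closed left half-line."""
    return gershgorin_upper_bound(M) <= 0.0

# Hypothetical tridiagonal matrix: every disc lies at or below -1.
M = np.array([[-2.0, 1.0, 0.0],
              [1.0, -3.0, 1.0],
              [0.0, 1.0, -2.0]])

# Hypothetical matrix that is negative definite but fails the disc test.
B = np.array([[-1.0, 2.0],
              [2.0, -5.0]])

print(certified_negative_semidefinite(M))   # disc test passes
print(certified_negative_semidefinite(B))   # disc test fails
print(np.max(np.linalg.eigvalsh(B)) < 0.0)  # yet all eigenvalues are negative
```

The matrix `B` has only negative eigenvalues but is rejected by the disc test, which gives a small-scale picture of the over-constraining effect that the abstract reports as a limitation.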

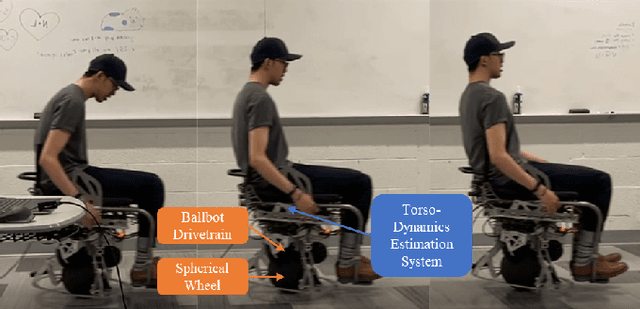

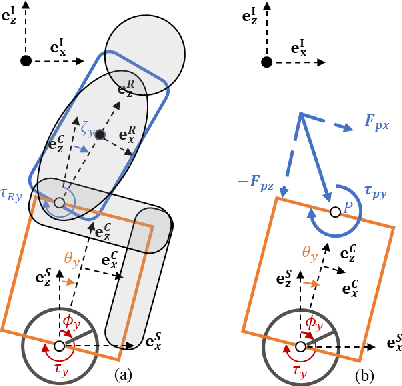

Exploiting Physical Human-Robot Interaction to Provide a Unique Rolling Experience with a Riding Ballbot

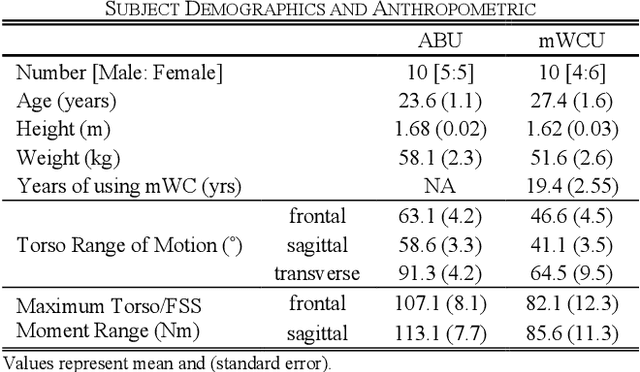

Sep 27, 2024

Abstract:This study introduces the development of hands-free control schemes for a riding ballbot, designed to allow riders, including manual wheelchair users, to control its movement through torso leaning and twisting. The hardware platform, Personal Unique Rolling Experience (PURE), utilizes a ballbot drivetrain, a dynamically stable mobile robot that uses a ball as its wheel to provide omnidirectional maneuverability. To accommodate users with varying torso motion functions, the hands-free control scheme should be adjustable based on the rider's torso function and personal preferences. Therefore, concepts of (a) impedance control and (b) admittance control were integrated into the control scheme. A duo-agent optimization framework was utilized to assess the efficiency of this rider-ballbot system for a safety-critical task: braking from 1.4 m/s. The candidate control schemes were further implemented in the physical robot hardware and validated with two experienced users, demonstrating the efficiency and robustness of the hands-free admittance control scheme (HACS). This interface, which utilized physical human-robot interaction (pHRI) as the input, resulted in lower braking effort and shorter braking distance and time. Subsequently, 12 novice participants (six able-bodied users and six manual wheelchair users) with different levels of torso motion capability were recruited to benchmark the braking performance with HACS. The indoor navigation capability of PURE was further demonstrated with these participants in courses simulating narrow hallways, tight turns, and navigation through static and dynamic obstacles. By exploiting pHRI, the proposed admittance-style control scheme provided effective control of the ballbot via torso motions. This interface enables PURE to provide a personal unique rolling experience to manual wheelchair users for safe and agile indoor navigation.
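For readers unfamiliar with admittance control, the sketch below shows the generic textbook form: a measured interaction input drives a virtual mass-damper, whose velocity becomes the command sent to the robot. This is not the HACS implementation from the paper; the function name, the gains, and the constant input are all hypothetical values chosen for illustration.

```python
def admittance_step(v_cmd, interaction_input, dt, virtual_mass, virtual_damping):
    """One explicit-Euler step of  m * dv/dt + b * v = input  (generic admittance law)."""
    dv = (interaction_input - virtual_damping * v_cmd) / virtual_mass
    return v_cmd + dt * dv

# Hypothetical parameters: a constant rider input held for 20 s.
v = 0.0
for _ in range(2000):
    v = admittance_step(v, interaction_input=10.0, dt=0.01,
                        virtual_mass=5.0, virtual_damping=20.0)

# At steady state the commanded speed approaches input / damping.
print(round(v, 6))
```

In this form, a larger virtual damping lowers the speed reached for a given rider input, and a larger virtual mass slows the response, which is one way such a scheme could be adjusted per rider.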

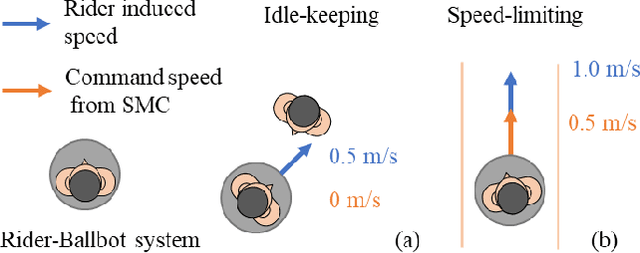

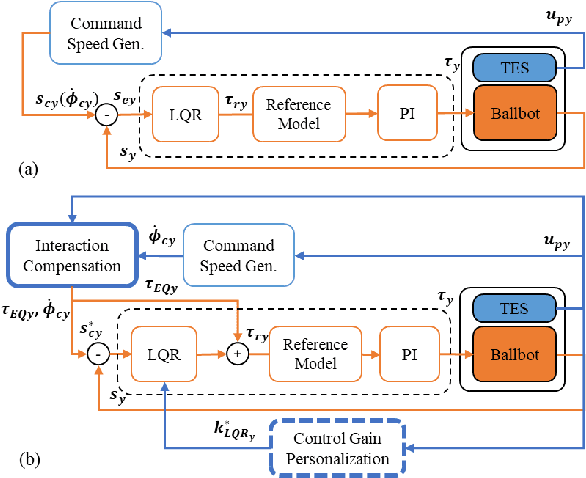

An Interactive Hands-Free Controller for a Riding Ballbot to Enable Simple Shared Control Tasks

Sep 27, 2024

Abstract:Our team developed a riding ballbot (called PURE) that is dynamically stable, omnidirectional, and driven by lean-to-steer control. A hands-free admittance control scheme (HACS) was previously integrated to allow riders with different torso functions to control the robot's movements via torso leaning and twisting. Such an interface requires motor coordination skills and could result in collisions with obstacles due to low proficiency. Hence, a shared controller (SC) that limits the speed of PURE could be helpful to ensure the safety of riders. However, the self-balancing dynamics of PURE could result in a weak control authority over its motion, in which the torso motion of the rider could easily result in poor tracking of the command speed dictated by the shared controller. Thus, we proposed an interactive hands-free admittance control scheme (iHACS), which added two modules to HACS to improve the speed-tracking performance of PURE: a control gain personalization module and an interaction compensation module. Human riding tests of two simple tasks, idle-keeping and speed-limiting, were conducted to compare the performance of HACS and iHACS. Two manual wheelchair users and two able-bodied individuals participated in this study. They were instructed to use "adversarial" torso motions that would tax the SC's ability to keep the ballbot idling or below a set speed. In the idle-keeping tasks, iHACS demonstrated minimal translational motion and low command speed tracking RMSE, even with significant torso lean angles. During the speed-limiting task with command speed saturated at 0.5 m/s, the system achieved an average maximum speed of 1.1 m/s with iHACS, compared with over 1.9 m/s with HACS. These results suggest that iHACS can enhance PURE's control authority over the rider, which enables PURE to provide physical interactions back to the rider and results in a collaborative rider-robot synergy.

Enabling Shared-Control for A Riding Ballbot System

Sep 11, 2024

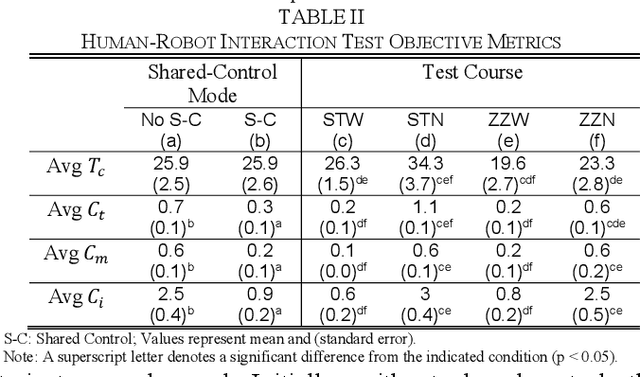

Abstract:This study introduces a shared-control approach for collision avoidance in a self-balancing riding ballbot, called PURE, marked by its dynamic stability, omnidirectional movement, and hands-free interface. Integrated with a sensor array and a novel Passive Artificial Potential Field (PAPF) method, PURE provides intuitive navigation with deceleration assistance and haptic/audio feedback, effectively mitigating collision risks. This approach addresses the limitations of traditional APF methods, such as control oscillations and unnecessary speed reduction in challenging scenarios. A human-robot interaction experiment, with 20 manual wheelchair users and able-bodied individuals, was conducted to evaluate the performance of indoor navigation and obstacle avoidance with the proposed shared-control algorithm. Results indicated that shared-control significantly reduced collisions and cognitive load without affecting travel speed, offering intuitive and safe operation. These findings highlight the shared-control system's suitability for enhancing collision avoidance in self-balancing mobility devices, a relatively unexplored area in assistive mobility research.

Redefining Aerial Innovation: Autonomous Tethered Drones as a Solution to Battery Life and Data Latency Challenges

Feb 28, 2024

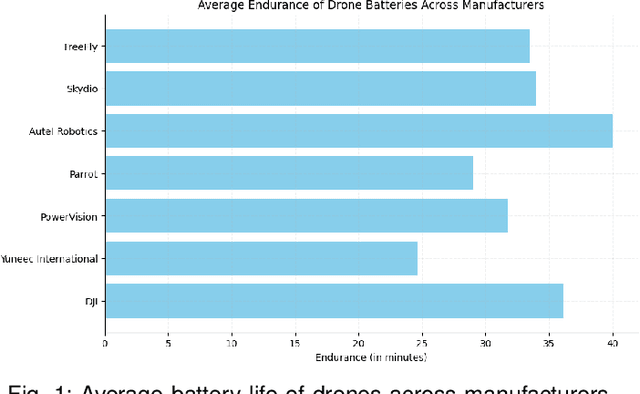

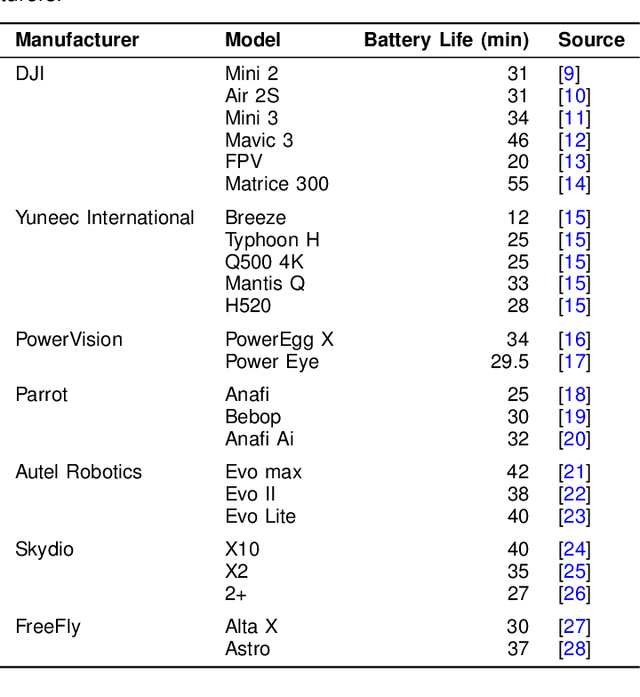

Abstract:The emergence of tethered drones represents a major advancement in unmanned aerial vehicles (UAVs), offering solutions to key limitations faced by traditional drones. This article explores the potential of tethered drones, with a particular focus on their ability to tackle issues related to battery life constraints and data latency commonly experienced by battery-operated drones. Through their connection to a ground station via a tether, autonomous tethered drones provide a continuous power supply and a secure direct data transmission link, facilitating prolonged operational durations and real-time data transfer. These attributes significantly enhance the effectiveness and dependability of drone missions in scenarios requiring extended surveillance, continuous monitoring, and immediate data processing. Examining the advancements, operational benefits, and potential future progressions associated with tethered drones, this article shows their increasing significance across various sectors and their pivotal role in pushing the boundaries of current UAV capabilities. The emergence of tethered drone technology not only addresses existing obstacles but also paves the way for new innovations within the UAV industry.

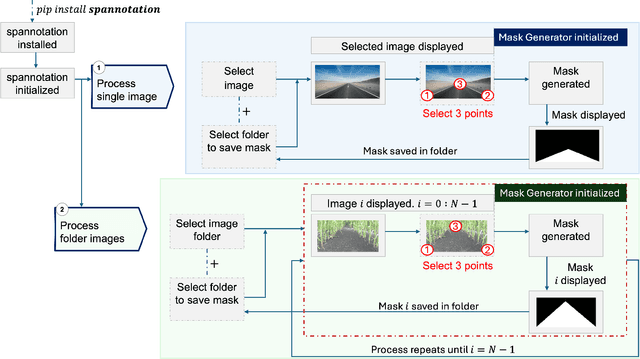

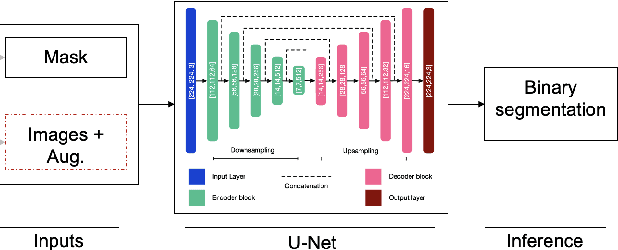

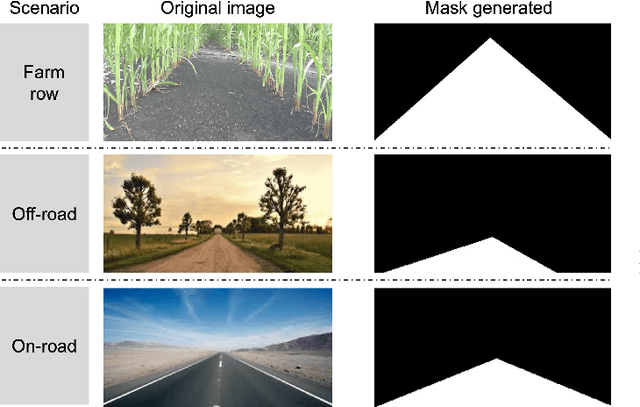

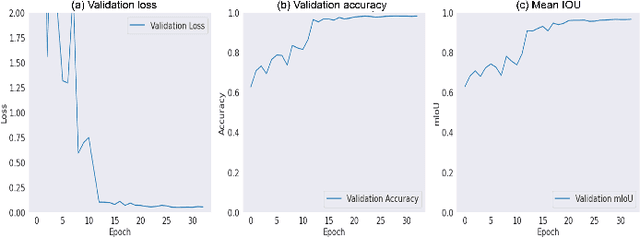

Spannotation: Enhancing Semantic Segmentation for Autonomous Navigation with Efficient Image Annotation

Feb 28, 2024

Abstract:Spannotation is an open-source, user-friendly tool developed for image annotation for semantic segmentation, specifically in autonomous navigation tasks. This study provides an evaluation of Spannotation, demonstrating its effectiveness in generating accurate segmentation masks for various environments such as agricultural crop rows, off-road terrains, and urban roads. Unlike other popular annotation tools that require about 40 seconds to annotate an image for semantic segmentation in a typical navigation task, Spannotation achieves a similar result in about 6.03 seconds. The tool's utility was validated by using its generated masks to train a U-Net model, which achieved a validation accuracy of 98.27% and a mean Intersection Over Union (mIOU) of 96.66%. The accessibility, simple annotation process, and no-cost features have all contributed to the adoption of Spannotation, evident from its download count of 2098 (as of February 25, 2024) since its launch. Future enhancements of Spannotation aim to broaden its application to complex navigation scenarios and incorporate additional automation functionalities. Given its increasing popularity and promising potential, Spannotation stands as a valuable resource in autonomous navigation and semantic segmentation. For detailed information and access to Spannotation, readers are encouraged to visit the project's GitHub repository at https://github.com/sof-danny/spannotation
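The abstract above reports both pixel accuracy and mIOU. The sketch below shows one common way to compute the two metrics from integer label masks, under the assumption that classes absent from both masks are skipped when averaging. It does not use Spannotation's API; the function names and the tiny 2x2 masks are hypothetical.

```python
import numpy as np

def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label matches the target label."""
    pred, target = np.asarray(pred), np.asarray(target)
    return float(np.mean(pred == target))

def mean_iou(pred, target, num_classes):
    """Mean over classes of |pred ∩ target| / |pred ∪ target|.

    Classes that appear in neither mask are skipped (an assumption of this sketch).
    """
    pred, target = np.asarray(pred), np.asarray(target)
    ious = []
    for c in range(num_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))

# Hypothetical 2x2 masks with classes 0 (background) and 1 (drivable area).
pred = np.array([[0, 1],
                 [1, 1]])
target = np.array([[0, 1],
                   [0, 1]])

print(pixel_accuracy(pred, target))   # 3 of 4 pixels agree
print(mean_iou(pred, target, 2))      # mean of 1/2 and 2/3
```

Because IoU penalizes each class by its union rather than by the total pixel count, mIOU is typically lower than pixel accuracy on the same prediction, as in this toy case.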

Improved Generalizability of CNN Based Lane Detection in Challenging Weather Using Adaptive Preprocessing Parameter Tuning

Feb 09, 2024

Abstract:Ensuring the robustness of lane detection systems is essential for the reliability of autonomous vehicles, particularly in the face of diverse weather conditions. While numerous algorithms have been proposed, addressing challenges posed by varying weather remains an ongoing issue. Geometric-based lane detection methods, rooted in the inherent properties of road geometry, provide enhanced generalizability. However, these methods often require manual parameter tuning to accommodate fluctuating illumination and weather conditions. Conversely, learning-based approaches, trained on pre-labeled datasets, excel in localizing intricate and curved lane configurations but grapple with the absence of diverse weather datasets. This paper introduces a promising hybrid approach that merges the strengths of both methodologies. A novel adaptive preprocessing method is proposed in this work. Utilizing a fuzzy inference system (FIS), the algorithm dynamically adjusts parameters in geometric-based image processing functions and enhances adaptability to diverse weather conditions. Notably, this preprocessing algorithm is designed to seamlessly integrate with all learning-based lane detection models. When implemented in conjunction with CNN-based models, the hybrid approach demonstrates commendable generalizability across weather conditions and adaptability to complex lane configurations. Rigorous testing on datasets featuring challenging weather conditions showcases the proposed method's significant improvements over existing models, underscoring its efficacy in addressing the persistent challenges associated with lane detection in adverse weather scenarios.

A Self-Supervised Miniature One-Shot Texture Segmentation Model for Real-Time Robot Navigation and Embedded Applications

Jun 15, 2023

Abstract:Determining the drivable area, or free space segmentation, is critical for mobile robots to navigate indoor environments safely. However, the lack of coherent markings and structures (e.g., lanes, curbs, etc.) in indoor spaces places the burden of traversability estimation heavily on the mobile robot. This paper explores the use of a self-supervised one-shot texture segmentation framework and an RGB-D camera to achieve robust drivable area segmentation. With a fast inference speed and compact size, the developed model, MOSTS, is ideal for real-time robot navigation and various embedded applications. A benchmark study was conducted to compare MOSTS's performance with that of existing one-shot texture segmentation models. Additionally, a validation dataset was built to assess MOSTS's ability to perform texture segmentation in the wild, where it effectively identified small low-lying objects that were previously undetectable by depth measurements. The study also compared MOSTS's performance with two State-Of-The-Art (SOTA) indoor semantic segmentation models, both quantitatively and qualitatively. The results showed that MOSTS offers comparable accuracy with up to eight times faster inference speed in indoor drivable area segmentation.

Comparison of Dynamic and Kinematic Model Driven Extended Kalman Filters (EKF) for the Localization of Autonomous Underwater Vehicles

May 26, 2021

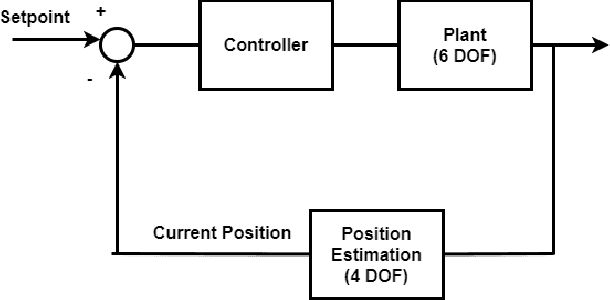

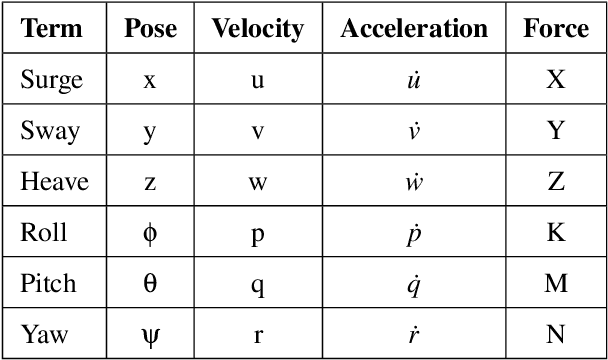

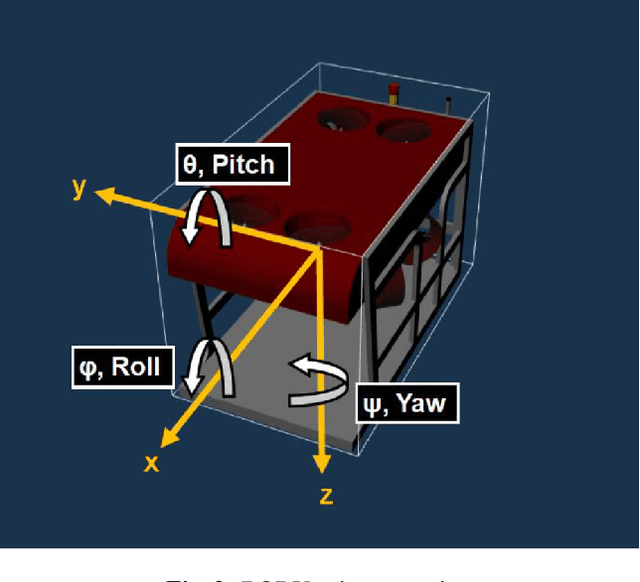

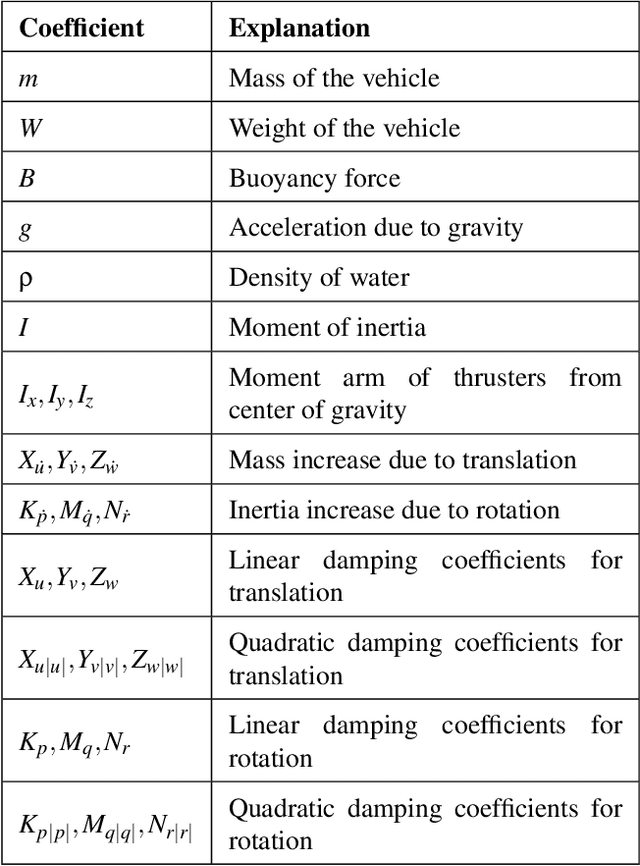

Abstract:Autonomous Underwater Vehicles (AUVs) and Remotely Operated Vehicles (ROVs) are used for a wide variety of missions related to exploration and scientific research. Successful navigation by these systems requires a good localization system. Kalman filter based localization techniques have been prevalent since the early 1960s and extensive research has been carried out using them, both in development and in design. It has been found that the use of a dynamic model (instead of a kinematic model) in the Kalman filter can lead to more accurate predictions, as the dynamic model takes the forces acting on the AUV into account. Presented in this paper is a motion-predictive extended Kalman filter (EKF) for AUVs using a simplified dynamic model. The dynamic model is first derived and then simplified for a RexROV, a type of submarine vehicle used in simple underwater exploration and in the inspection of subsea structures, pipelines, and shipwrecks. The filter was implemented with a simulated vehicle in an open-source marine vehicle simulator called UUV Simulator and the results were compared with the ground truth. The results show good prediction accuracy for the dynamic filter, though improvements are needed before the EKF can be used in real time. Some perspective and discussion on practical implementation is presented to show the next steps needed for this concept.
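To make the kinematic-versus-dynamic distinction in the abstract above concrete, the sketch below runs one EKF prediction step on a toy one-dimensional surge state `[position, velocity]`. The kinematic model assumes constant velocity, while the dynamic model adds thrust and quadratic drag. This is not the RexROV model from the paper; the function names, the drag law, and every numeric parameter are hypothetical.

```python
import numpy as np

def ekf_predict(x, P, f, jacobian, Q):
    """EKF prediction: mean through f, covariance through the Jacobian at x."""
    F = jacobian(x)
    return f(x), F @ P @ F.T + Q

def make_kinematic_model(dt):
    """Constant-velocity model: no forces are considered."""
    f = lambda x: np.array([x[0] + dt * x[1], x[1]])
    jac = lambda x: np.array([[1.0, dt], [0.0, 1.0]])
    return f, jac

def make_dynamic_model(dt, mass, drag, thrust):
    """Toy surge dynamics (assumed): mass * dv/dt = thrust - drag * v * |v|."""
    def f(x):
        p, v = x
        accel = (thrust - drag * v * abs(v)) / mass
        return np.array([p + dt * v, v + dt * accel])
    def jac(x):
        v = x[1]
        # d/dv of v*|v| is 2*|v|
        return np.array([[1.0, dt],
                         [0.0, 1.0 - dt * 2.0 * drag * abs(v) / mass]])
    return f, jac

x0 = np.array([0.0, 1.0])   # start at the origin, moving at 1 m/s
P0 = np.eye(2)
Q = 0.01 * np.eye(2)

x_kin, P_kin = ekf_predict(x0, P0, *make_kinematic_model(dt=0.1), Q)
x_dyn, P_dyn = ekf_predict(x0, P0, *make_dynamic_model(dt=0.1, mass=10.0,
                                                       drag=5.0, thrust=0.0), Q)
print(x_kin)  # velocity unchanged
print(x_dyn)  # velocity reduced by drag
```

With no thrust, the dynamic model predicts the vehicle slowing under drag while the kinematic model keeps the velocity fixed, which is the kind of difference in prediction the abstract attributes to accounting for forces.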

System-Level Development of a User-Integrated Semi-Autonomous Lawn Mowing System: Problem Overview, Basic Requirements, and Proposed Architecture

Jul 12, 2019

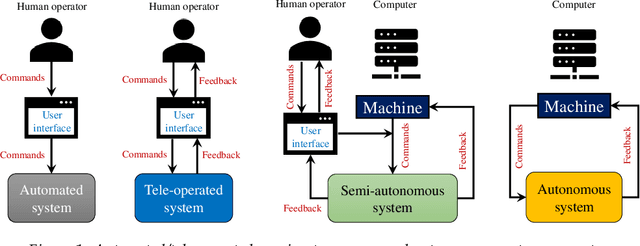

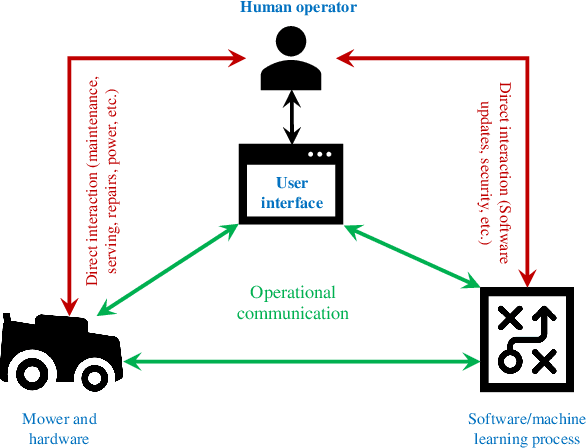

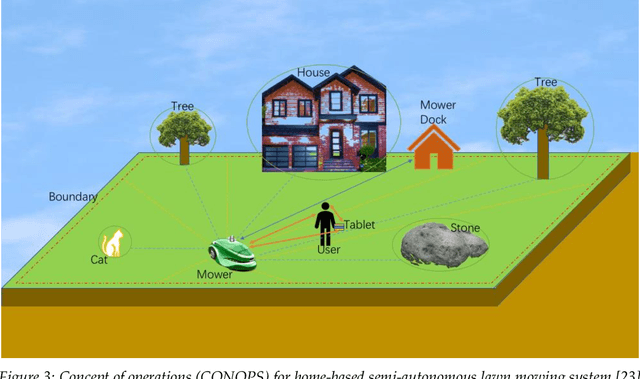

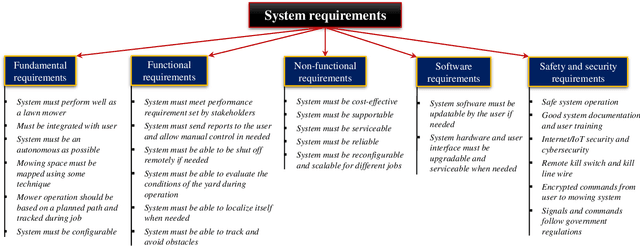

Abstract:This concept paper outlines some recent efforts toward the design and development of user-integrated semi-autonomous home-sized lawn mowing systems from a systems engineering perspective. This is an important and emerging field of study within the robotics and systems engineering communities. The work presented includes a review of current progress on this problem, a discussion of the problem from a systems engineering perspective, a general system architecture developed by the authors, and a preliminary set of design requirements. This work is meant to provide a baseline and motivation for the further development and refinement of these systems within the systems engineering and robotics communities and is relevant to both academic and commercial research.
