Wesley Cheung

Towards Fairness in Personalized Ads Using Impression Variance Aware Reinforcement Learning

Jun 08, 2023

Abstract: Variances in ad impression outcomes across demographic groups are increasingly considered to be potentially indicative of algorithmic bias in personalized ads systems. While there are many definitions of fairness that could be applicable in the context of personalized systems, we present a framework which we call the Variance Reduction System (VRS) for achieving more equitable outcomes in Meta's ads systems. VRS seeks to achieve a distribution of impressions with respect to selected protected class (PC) attributes that more closely aligns the demographics of an ad's eligible audience (a function of advertiser targeting criteria) with the audience who sees that ad, in a privacy-preserving manner. We first define metrics to quantify fairness gaps in terms of ad impression variances with respect to PC attributes including gender and estimated race. We then present the VRS for re-ranking ads in an impression variance-aware manner. We evaluate VRS via extensive simulations over different parameter choices and study the effect of the VRS on the chosen fairness metric. We finally present online A/B testing results from applying VRS to Meta's ads systems, concluding with a discussion of future work. We have deployed the VRS to all users in the US for housing ads, resulting in significant improvement in our fairness metric. VRS is the first large-scale deployed framework for pursuing fairness for multiple PC attributes in online advertising.

Automated Storytelling via Causal, Commonsense Plot Ordering

Sep 02, 2020

Abstract: Automated story plot generation is the task of generating a coherent sequence of plot events. Causal relations between plot events are believed to increase the perception of story and plot coherence. In this work, we introduce the concept of soft causal relations as causal relations inferred from commonsense reasoning. We demonstrate C2PO, an approach to narrative generation that operationalizes this concept through Causal, Commonsense Plot Ordering. Using human-participant protocols, we evaluate our system against baseline systems with different commonsense reasoning and inductive biases to determine the role of soft causal relations in perceived story quality. Through these studies we also probe how changes in commonsense norms across storytelling genres affect perceptions of story quality.

Bringing Stories Alive: Generating Interactive Fiction Worlds

Jan 28, 2020

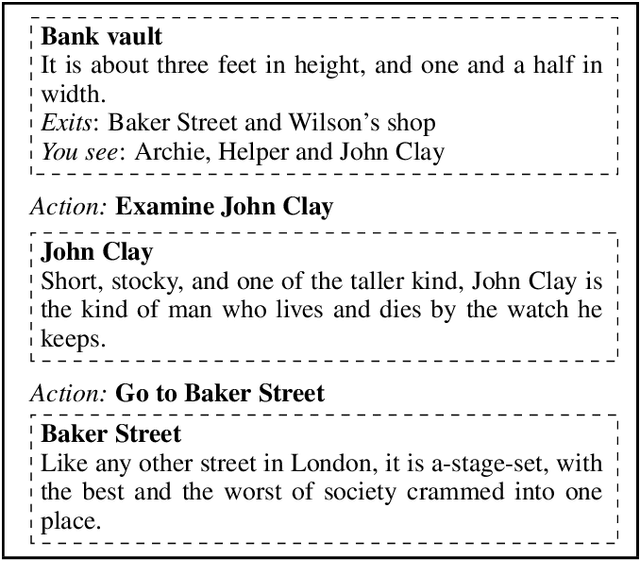

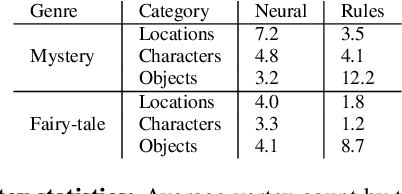

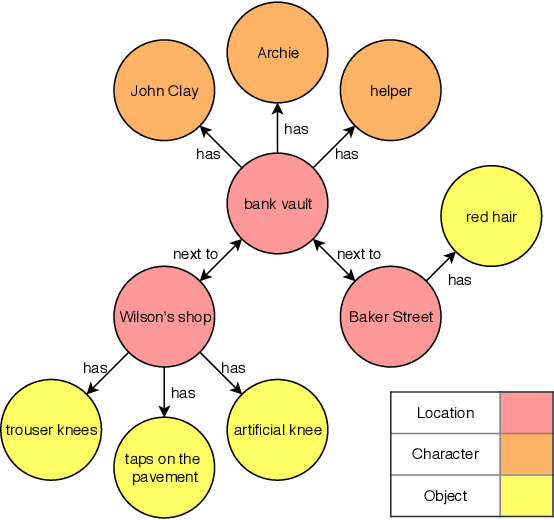

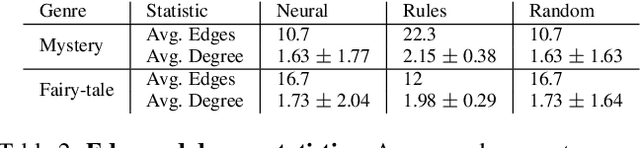

Abstract: World building forms the foundation of any task that requires narrative intelligence. In this work, we focus on procedurally generating interactive fiction worlds---text-based worlds that players "see" and "talk to" using natural language. Generating these worlds requires referencing everyday and thematic commonsense priors in addition to being semantically consistent, interesting, and coherent throughout. Using existing story plots as inspiration, we present a method that first extracts a partial knowledge graph encoding basic information regarding world structure such as locations and objects. This knowledge graph is then automatically completed utilizing thematic knowledge and used to guide a neural language generation model that fleshes out the rest of the world. We perform human participant-based evaluations, testing our neural model's ability to extract and fill in a knowledge graph and to generate language conditioned on it against rule-based and human-made baselines. Our code is available at https://github.com/rajammanabrolu/WorldGeneration.

UNO: Uncertainty-aware Noisy-Or Multimodal Fusion for Unanticipated Input Degradation

Nov 06, 2019

Abstract: The fusion of multiple sensor modalities, especially through deep learning architectures, has been an active area of study. However, an under-explored aspect of such work is whether the methods can be robust to degradations across their input modalities, especially when they must generalize to degradations not seen during training. In this work, we propose an uncertainty-aware fusion scheme to effectively fuse inputs that might suffer from a range of known and unknown degradations. Specifically, we analyze a number of uncertainty measures, each of which captures a different aspect of uncertainty, and we propose a novel way to fuse degraded inputs by scaling modality-specific output softmax probabilities. We additionally propose a novel data-dependent spatial temperature scaling method to complement these existing uncertainty measures. Finally, we integrate the uncertainty-scaled output from each modality using a probabilistic noisy-or fusion method. In a photo-realistic simulation environment (AirSim), we show that our method achieves significantly better results on a semantic segmentation task, compared to state-of-the-art fusion architectures, on a range of degradations (e.g. fog, snow, frost, and various other types of noise), some of which are unknown during training. We specifically improve upon the state of the art [1] by 28% in mean IoU on various degradations.

[1] Abhinav Valada, Rohit Mohan, and Wolfram Burgard. Self-Supervised Model Adaptation for Multimodal Semantic Segmentation. arXiv:1808.03833 [cs.CV], Aug. 2018.
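As a rough illustration of the two ingredients named in the abstract above (temperature-scaled softmax outputs and noisy-or fusion), here is a minimal sketch for a single pixel with two modalities. It is not the paper's implementation: the function names `softmax` and `noisy_or_fuse`, the fixed scalar temperatures, and the final renormalization step are all assumptions made for illustration, and the paper's data-dependent spatial temperature and uncertainty measures are not reproduced here.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Softmax over the last axis; a larger temperature flattens the distribution."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def noisy_or_fuse(prob_list):
    """Noisy-or over modalities: a class is 'on' unless every modality rejects it.

    prob_list: list of arrays with identical shape (..., num_classes).
    The result is renormalized over classes (an assumption of this sketch).
    """
    stacked = np.stack(prob_list, axis=0)
    fused = 1.0 - np.prod(1.0 - stacked, axis=0)
    return fused / fused.sum(axis=-1, keepdims=True)

# Hypothetical per-pixel logits for three classes from two modalities.
# The second modality is treated as degraded, so it gets a high temperature
# (assumed here as a fixed scalar), which pushes it toward a uniform output.
p_rgb = softmax([4.0, 1.0, 0.0], temperature=1.0)
p_depth = softmax([0.0, 1.0, 0.5], temperature=10.0)
fused = noisy_or_fuse([p_rgb, p_depth])
print(fused)
```

Because the degraded modality's output is nearly uniform after scaling, it contributes roughly the same amount to every class, so the confident modality dominates the fused prediction.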

Story Realization: Expanding Plot Events into Sentences

Sep 08, 2019

Abstract: Neural network based approaches to automated story plot generation attempt to learn how to generate novel plots from a corpus of natural language plot summaries. Prior work has shown that a semantic abstraction of sentences called events improves neural plot generation and allows one to decompose the problem into: (1) the generation of a sequence of events (event-to-event) and (2) the transformation of these events into natural language sentences (event-to-sentence). However, typical neural language generation approaches to event-to-sentence can ignore the event details and produce grammatically-correct but semantically-unrelated sentences. We present an ensemble-based model that generates natural language guided by events. We provide results---including a human subjects study---for a full end-to-end automated story generation system showing that our method generates more coherent and plausible stories than baseline approaches.
