Wenjun Shi

DAPLSR: Data Augmentation Partial Least Squares Regression Model via Manifold Optimization

Apr 23, 2025

Abstract:Traditional Partial Least Squares Regression (PLSR) models frequently underperform when handling data characterized by uneven categories. To address the issue, this paper proposes a Data Augmentation Partial Least Squares Regression (DAPLSR) model via manifold optimization. The DAPLSR model introduces the Synthetic Minority Over-sampling Technique (SMOTE) to increase the number of samples and utilizes the Value Difference Metric (VDM) to select the nearest neighbor samples that closely resemble the original samples for generating synthetic samples. In solving the model, in order to obtain a more accurate numerical solution for PLSR, this paper proposes a manifold optimization method that uses the geometric properties of the constraint space to improve model degradation and optimization. Comprehensive experiments show that the proposed DAPLSR model achieves superior classification performance and outstanding evaluation metrics on various datasets, significantly outperforming existing methods.
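The SMOTE step mentioned in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `smote_sample`, its parameters, and the toy data are hypothetical, and plain Euclidean distance stands in for the Value Difference Metric that the paper uses for neighbor selection. Each synthetic sample is placed at a random point on the segment between a minority sample and one of its nearest neighbors.

```python
import math
import random


def smote_sample(minority, k=3, n_new=5, seed=0):
    """Create n_new synthetic points by interpolating minority samples.

    Assumption: Euclidean distance is used for neighbor search here;
    the DAPLSR abstract describes VDM for this step instead.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank all other minority samples by distance to the base sample.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: math.dist(base, p))
        # Pick one of the k nearest neighbors at random.
        neighbor = rng.choice(others[:k])
        # Interpolate at a random position between base and neighbor.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Toy minority class with two features (made-up values).
minority = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.2], [1.2, 2.5]]
synthetic = smote_sample(minority)
```

Because every synthetic point is a convex combination of two real samples, it always stays inside the bounding box of the minority class.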

Unsupervised Monocular Depth Estimation Based on Hierarchical Feature-Guided Diffusion

Jun 14, 2024

Abstract:Unsupervised monocular depth estimation has received widespread attention because of its capability to train without ground truth. In real-world scenarios, the images may be blurry or noisy due to the influence of weather conditions and inherent limitations of the camera. Therefore, it is particularly important to develop a robust depth estimation model. Benefiting from the training strategies of generative networks, generative-based methods often exhibit enhanced robustness. In light of this, we employ a well-converging diffusion model among generative networks for unsupervised monocular depth estimation. Additionally, we propose a hierarchical feature-guided denoising module. This module significantly enriches the model's capacity for learning and interpreting depth distribution by fully leveraging image features to guide the denoising process. Furthermore, we explore the implicit depth within reprojection and design an implicit depth consistency loss. This loss function serves to enhance the performance of the model and ensure the scale consistency of depth within a video sequence. We conduct experiments on the KITTI, Make3D, and our self-collected SIMIT datasets. The results indicate that our approach stands out among generative-based models, while also showcasing remarkable robustness.

Real Time 3D Indoor Human Image Capturing Based on FMCW Radar

Dec 08, 2018

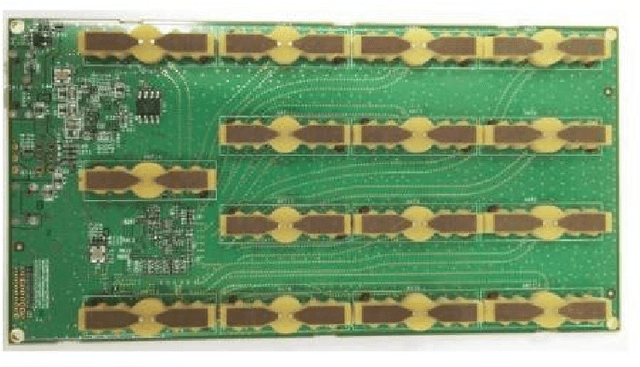

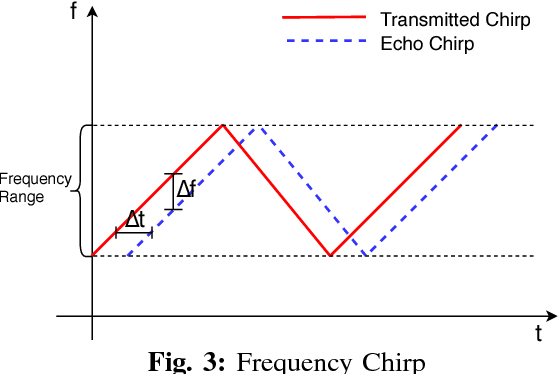

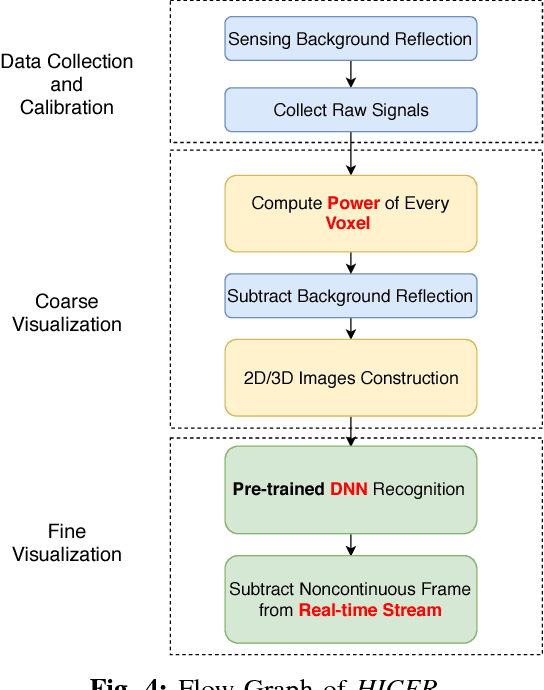

Abstract:Most smart systems, such as smart home and smart health, respond to humans' locations and activities. However, traditional solutions either require wearable sensors or risk leaking private information. This work proposes an ambient radar solution: a real-time, privacy-preserving system that also works in dark surroundings. In this solution, we use a low-power Frequency-Modulated Continuous Wave (FMCW) radar array to capture the reflected signals and then construct 3D image frames. The solution comprises 1) a data preprocessing mechanism to remove static background reflections, 2) a signal processing mechanism to transform the received complex radar signals into a matrix containing spatial information, and 3) a deep learning scheme to filter out broken frames caused by the rough surface of the human body. This solution has been extensively evaluated in a research area and captures real-time human images that are recognizable for specific activities. Our results show that the indoor captures are clear enough to be recognized frame by frame when compared with camera-recorded video.
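The abstract does not say how static background reflections are removed. One common approach, shown here only as a hedged sketch, is to subtract the per-bin average over several frames so that reflectors that never move cancel out. The function name `remove_static_background` and the toy frames are hypothetical, and real radar samples would be complex-valued rather than the real numbers used here.

```python
def remove_static_background(frames):
    """Subtract the per-bin mean across frames from every frame.

    Assumption: mean subtraction is a stand-in for the paper's
    preprocessing step, which the abstract does not specify.
    """
    n_frames = len(frames)
    n_bins = len(frames[0])
    # Average each range bin over time; static reflectors dominate this mean.
    mean = [sum(f[b] for f in frames) / n_frames for b in range(n_bins)]
    # What remains after subtraction is the time-varying (moving) component.
    return [[f[b] - mean[b] for b in range(n_bins)] for f in frames]


# Three toy frames with four range bins each (made-up values).
# Bins 0 and 1 hold static reflectors; a target moves from bin 2 to bin 3.
frames = [
    [5.0, 2.0, 0.0, 0.0],
    [5.0, 2.0, 3.0, 0.0],
    [5.0, 2.0, 0.0, 3.0],
]
cleaned = remove_static_background(frames)
```

In this toy input the two static bins become exactly zero in every frame, while the bins visited by the moving target keep a nonzero residual.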
