Wenda Jin

Planning-inspired Hierarchical Trajectory Prediction for Autonomous Driving

Apr 22, 2023

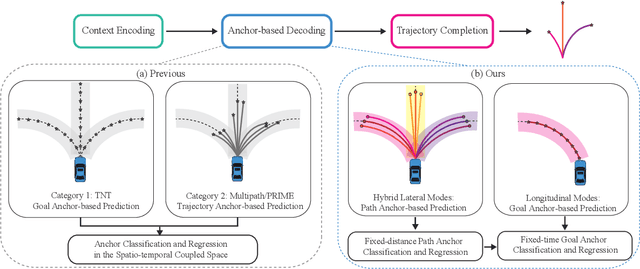

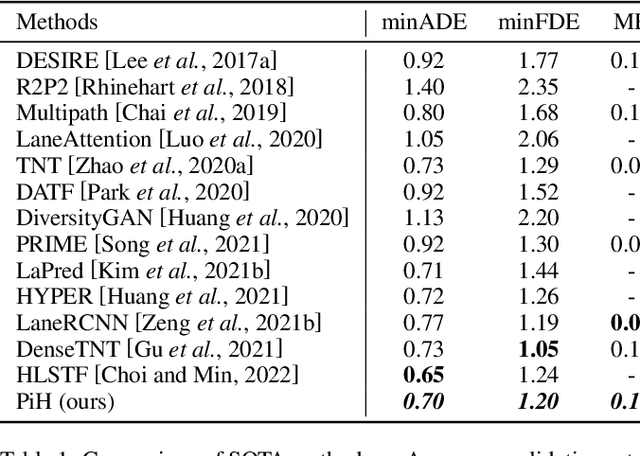

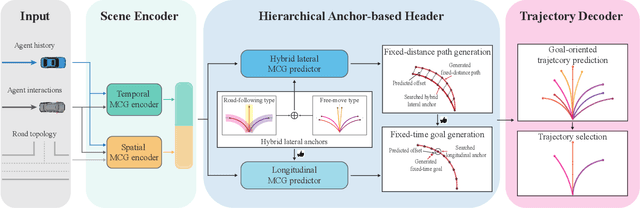

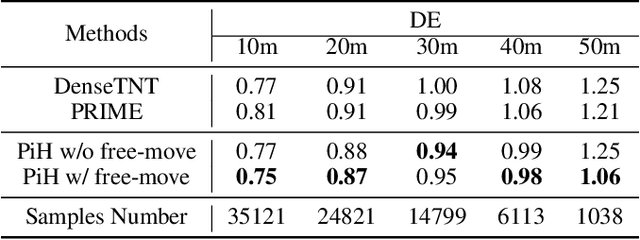

Abstract: Recently, anchor-based trajectory prediction methods have shown promising performance by directly selecting a final set of anchors as future intents in the spatio-temporal coupled space. However, such methods typically neglect a deeper semantic interpretation of path intents and perform worse when the High-Definition (HD) map is imperfect. To address this challenge, we propose a novel Planning-inspired Hierarchical (PiH) trajectory prediction framework that selects path and speed intents through a hierarchical lateral and longitudinal decomposition. Specifically, a hybrid lateral predictor selects a set of fixed-distance lateral paths from map-based road-following and cluster-based free-move path candidates. The subsequent longitudinal predictor then selects plausible goals sampled from the set of lateral paths as speed intents. Finally, a trajectory decoder generates future trajectories conditioned on a categorical distribution over lateral-longitudinal intents. Experiments demonstrate that PiH achieves competitive and more balanced results against state-of-the-art methods on the Argoverse motion forecasting benchmark and has the strongest robustness under an imperfect HD map.
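To make the lateral-longitudinal decomposition concrete, here is a minimal sketch of how a categorical distribution over joint intents could be assembled. It assumes, purely for illustration, that the joint probability factorizes as p(path) times p(goal | path); the abstract does not state the factorization, and the function names and path labels are hypothetical.

```python
from typing import Dict, List, Tuple

Intent = Tuple[str, int]  # (lateral path id, index of goal sampled on that path)


def joint_intent_distribution(
    path_probs: Dict[str, float],
    goal_probs_given_path: Dict[str, List[float]],
) -> Dict[Intent, float]:
    """Combine lateral and longitudinal scores into one categorical distribution.

    Assumption (not from the abstract): p(path, goal) = p(path) * p(goal | path).
    """
    joint: Dict[Intent, float] = {}
    for path_id, p_path in path_probs.items():
        for goal_idx, p_goal in enumerate(goal_probs_given_path[path_id]):
            joint[(path_id, goal_idx)] = p_path * p_goal
    return joint


def top_k_intents(joint: Dict[Intent, float], k: int) -> List[Tuple[Intent, float]]:
    """Return the k most probable lateral-longitudinal intents, best first."""
    return sorted(joint.items(), key=lambda kv: kv[1], reverse=True)[:k]


# Hypothetical candidates: one map-based path and one cluster-based path.
path_probs = {"road_following": 0.7, "free_move": 0.3}
goal_probs = {"road_following": [0.6, 0.4], "free_move": [0.9, 0.1]}
joint = joint_intent_distribution(path_probs, goal_probs)
best = top_k_intents(joint, k=2)
```

A trajectory decoder would then be conditioned on each selected intent. Under this factorization, a path with low lateral probability contributes little to the joint even when its goal score is high.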

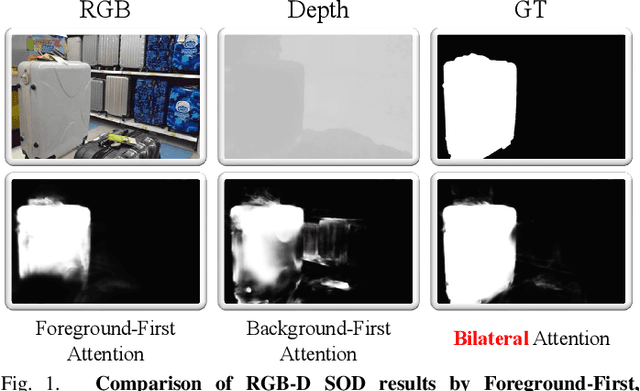

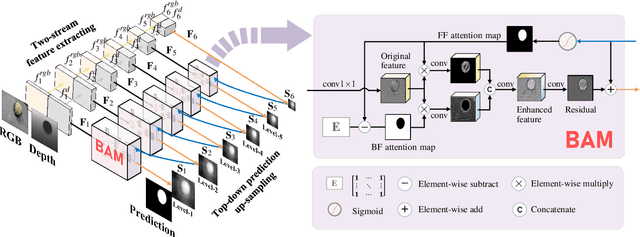

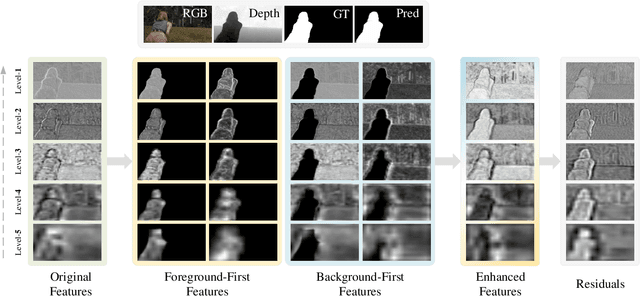

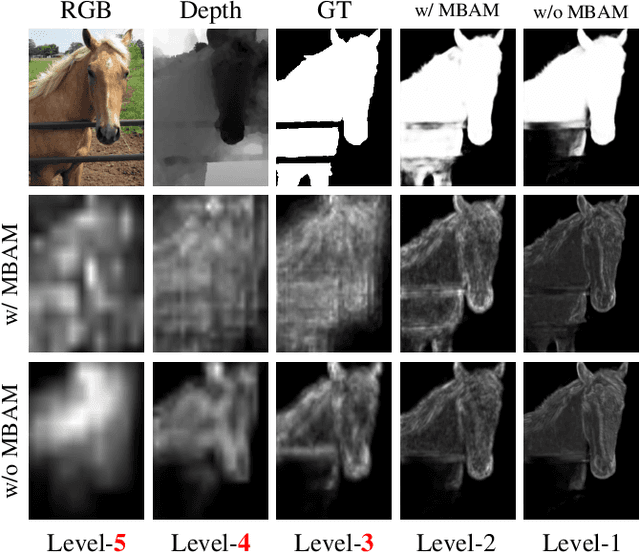

Bilateral Attention Network for RGB-D Salient Object Detection

Apr 30, 2020

Abstract: Most existing RGB-D salient object detection (SOD) methods focus on the foreground region when utilizing the depth images. However, traditional SOD methods show that the background also provides important information for good performance. To better explore salient information in both foreground and background regions, this paper proposes a Bilateral Attention Network (BiANet) for the RGB-D SOD task. Specifically, we introduce a Bilateral Attention Module (BAM) with a complementary attention mechanism: foreground-first (FF) attention and background-first (BF) attention. The FF attention focuses on the foreground region with a gradual refinement style, while the BF attention recovers potentially useful salient information in the background region. Benefiting from the proposed BAM, our BiANet can capture more meaningful foreground and background cues, and shift more attention to refining the uncertain details between foreground and background regions. Additionally, we extend our BAM by leveraging multi-scale techniques for better SOD performance. Extensive experiments on six benchmark datasets demonstrate that our BiANet outperforms other state-of-the-art RGB-D SOD methods in terms of objective metrics and subjective visual comparison. Our BiANet can run at up to 80 fps on $224\times224$ RGB-D images with an NVIDIA GeForce RTX 2080Ti GPU. Comprehensive ablation studies also validate our contributions.
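The complementary FF/BF attention can be illustrated with a small sketch. It assumes the FF map is the sigmoid of a coarse saliency prediction and the BF map is its complement (1 minus FF); the abstract only says the two attentions are complementary, so this construction and every name below are illustrative assumptions, not the paper's implementation.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def bilateral_attention(coarse_logits: Grid) -> Tuple[Grid, Grid]:
    """Build foreground-first and background-first maps from coarse logits.

    Assumption: FF = sigmoid(logit), BF = 1 - FF, so the maps sum to 1 per pixel.
    """
    ff = [[sigmoid(v) for v in row] for row in coarse_logits]
    bf = [[1.0 - a for a in row] for row in ff]
    return ff, bf


def apply_attention(features: Grid, attention: Grid) -> Grid:
    """Weight a single-channel feature map element-wise by an attention map."""
    return [
        [f * a for f, a in zip(f_row, a_row)]
        for f_row, a_row in zip(features, attention)
    ]


# Hypothetical 2x2 coarse prediction: positive logits lean toward foreground.
logits = [[0.0, 4.0], [-4.0, 0.0]]
ff, bf = bilateral_attention(logits)
features = [[2.0, 2.0], [2.0, 2.0]]
fg_branch = apply_attention(features, ff)  # emphasizes likely foreground
bg_branch = apply_attention(features, bf)  # emphasizes likely background
```

Pixels with logits near zero get similar weight in both branches, which matches the abstract's point about attending to uncertain details between foreground and background.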

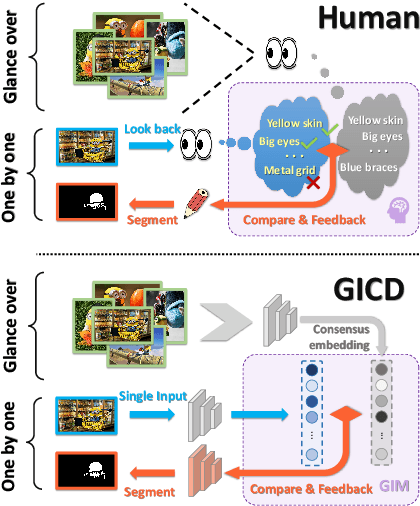

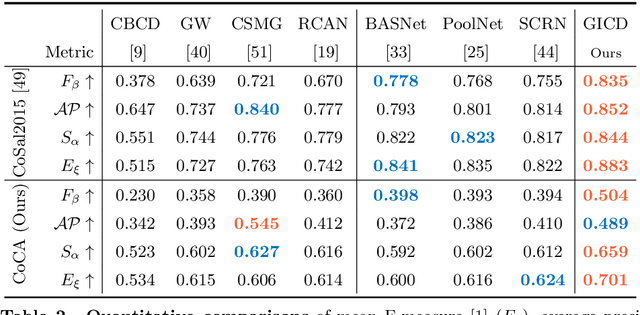

Gradient-Induced Co-Saliency Detection

Apr 28, 2020

Abstract: Co-saliency detection (Co-SOD) aims to segment the common salient foreground in a group of relevant images. In this paper, inspired by human behavior, we propose a gradient-induced co-saliency detection (GICD) method. We first abstract a consensus representation for the group of images in the embedding space; then, by comparing each single image with the consensus representation, we utilize the feedback gradient information to induce more attention to the discriminative co-salient features. In addition, due to the lack of Co-SOD training data, we design a jigsaw training strategy, with which Co-SOD networks can be trained on general saliency datasets without extra annotations. To evaluate the performance of Co-SOD methods on discovering the co-salient object among multiple foregrounds, we construct a challenging CoCA dataset, where each image contains at least one extraneous foreground along with the co-salient object. Experiments demonstrate that our GICD achieves state-of-the-art performance. The code, model, and dataset will be publicly released.
