Weijie Guo

DINeMo: Learning Neural Mesh Models with no 3D Annotations

Mar 26, 2025

Abstract: Category-level 3D/6D pose estimation is a crucial step towards comprehensive 3D scene understanding, which would enable a broad range of applications in robotics and embodied AI. Recent works explored neural mesh models that approach a range of 2D and 3D tasks from an analysis-by-synthesis perspective. Despite their largely enhanced robustness to partial occlusion and domain shifts, these methods depend heavily on 3D annotations for part-contrastive learning, which confines them to a narrow set of categories and hinders efficient scaling. In this work, we present DINeMo, a novel neural mesh model that is trained with no 3D annotations by leveraging pseudo-correspondence obtained from large visual foundation models. We adopt a bidirectional pseudo-correspondence generation method, which produces pseudo-correspondence by utilizing both local appearance features and global context information. Experimental results on car datasets demonstrate that our DINeMo outperforms previous zero- and few-shot 3D pose estimation methods by a wide margin, narrowing the gap with fully-supervised methods by 67.3%. Our DINeMo also scales effectively and efficiently when incorporating more unlabeled images during training, which demonstrates its advantages over supervised learning methods that rely on 3D annotations. Our project page is available at https://analysis-by-synthesis.github.io/DINeMo/.

Learning-based Optoelectronically Innervated Tactile Finger for Rigid-Soft Interactive Grasping

Jan 29, 2021

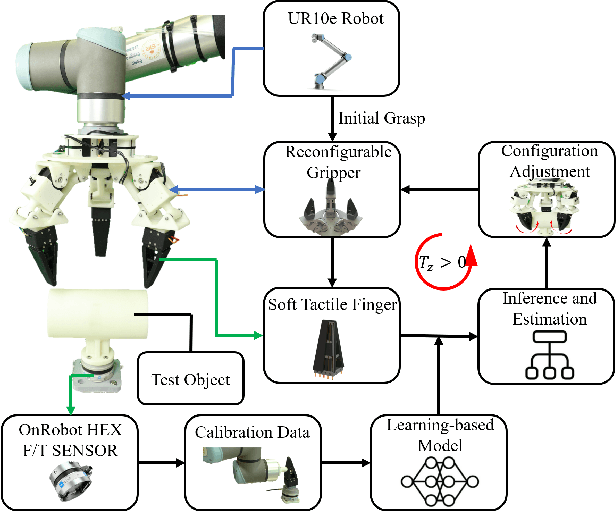

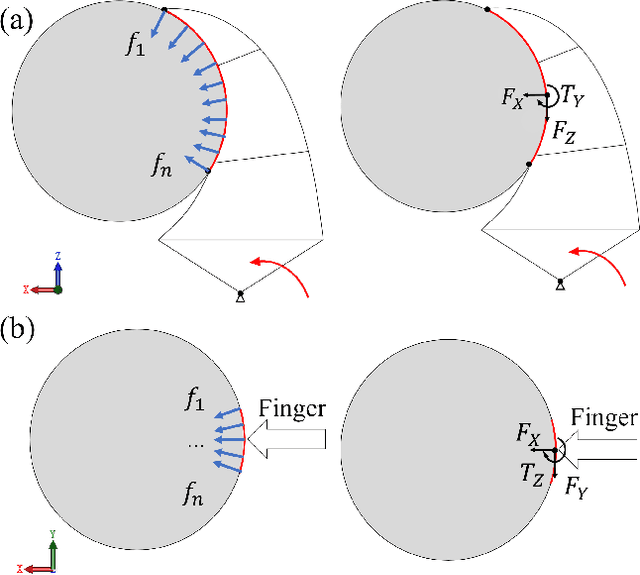

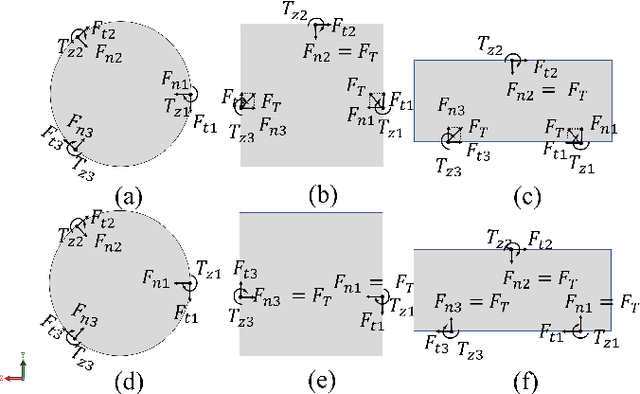

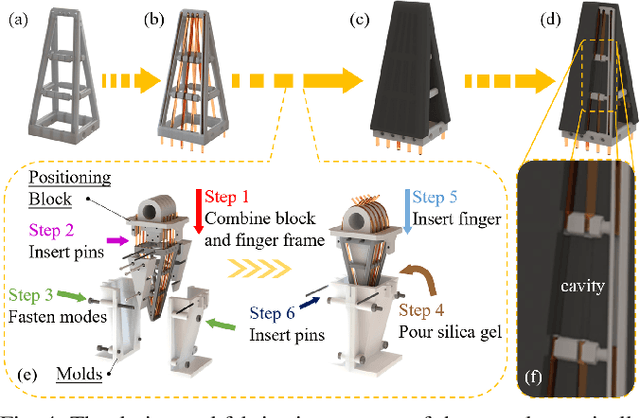

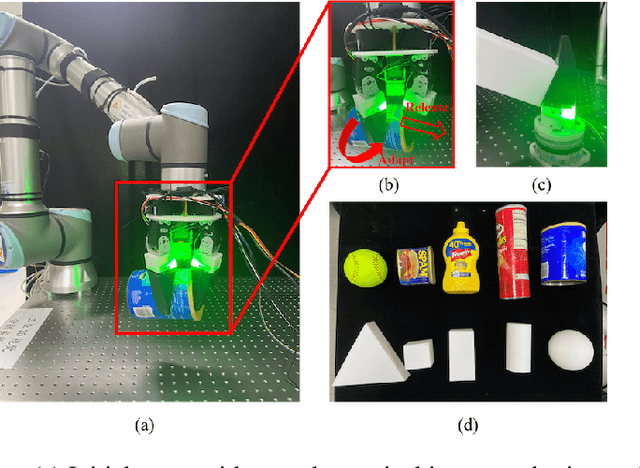

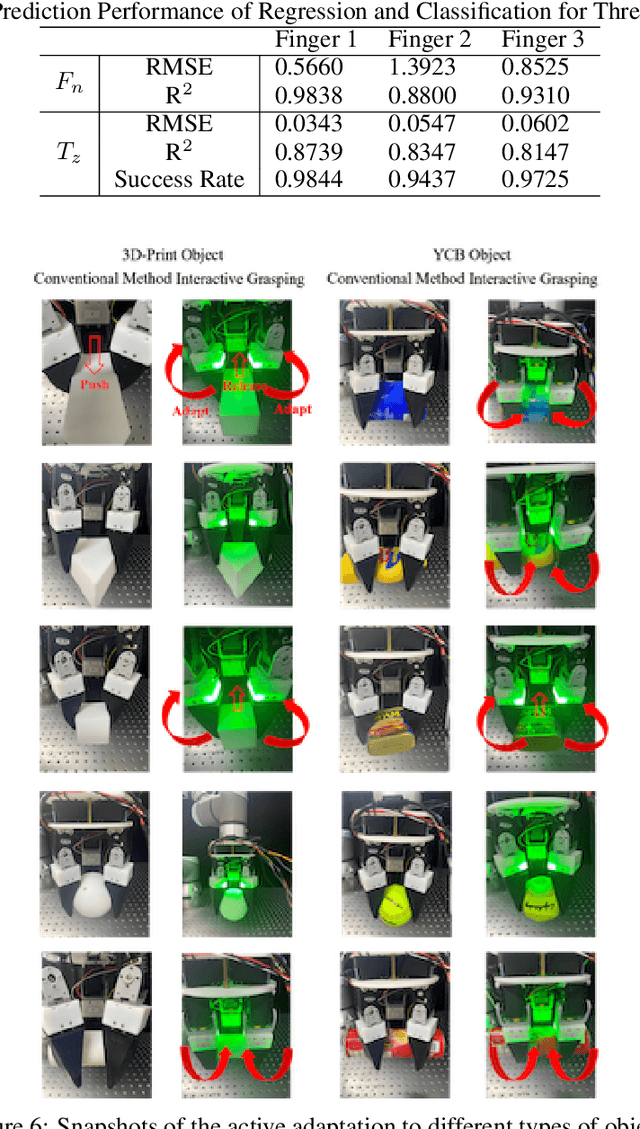

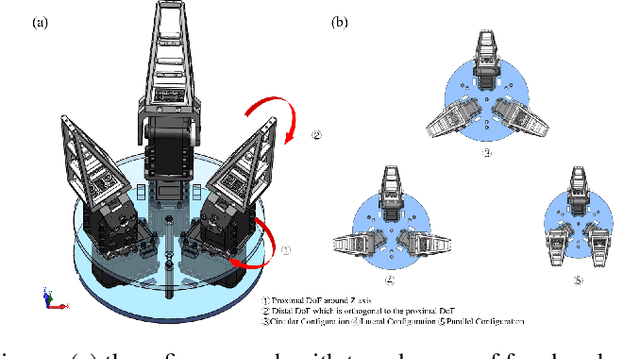

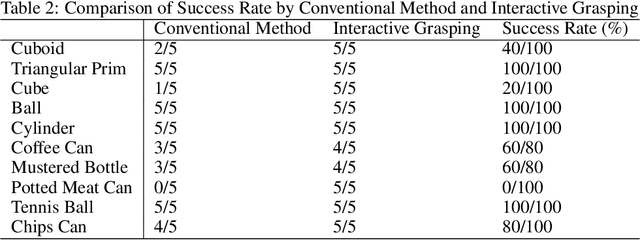

Abstract: This paper presents a novel design of a soft tactile finger with omni-directional adaptation using multi-channel optical fibers for rigid-soft interactive grasping. Machine learning methods are used to train a model for real-time prediction of force, torque, and contact using the collected tactile data. We further integrate such fingers into a reconfigurable three-finger gripper design so that the finger arrangement can be actively adjusted in real time based on the tactile data collected during grasping, achieving rigid-soft interactive grasping. Detailed sensor calibration and experimental results are also included to further validate the proposed design for enhanced grasping robustness.

Design of an Optoelectronically Innervated Gripper for Rigid-Soft Interactive Grasping

Dec 06, 2020

Abstract: Over the past few decades, efforts have been made towards robust robotic grasping and, in turn, dexterous manipulation. Soft grippers have shown their potential in robust grasping due to their inherent properties: low control complexity and high adaptability. However, the deformation of a soft gripper when interacting with objects introduces inaccuracy in the state of the grasped objects, which causes instability in grasping and further manipulation. In this paper, we present an omni-directional adaptive soft finger that can sense deformation based on embedded optical fibers and the application of machine learning methods to interpret transmitted light intensities. Furthermore, to use the tactile information provided by the soft finger, we design a low-cost, multi-degree-of-freedom gripper that actively conforms to the shape of objects and optimizes the grasping policy, which we call Rigid-Soft Interactive Grasping. This grasping policy offers two main advantages: first, more robust grasping can be achieved through active adaptation; second, the collected tactile information can be helpful for further manipulation.
