Weifeng Zhu

Covariance-based Imaging and Multi-View Fusion for Networked Sensing

Nov 18, 2025

Abstract:This paper considers multi-view imaging in a sixth-generation (6G) integrated sensing and communication network, which consists of a transmit base station (BS), multiple receive BSs connected to a central processing unit (CPU), and multiple extended targets. Our goal is to devise an effective multi-view imaging technique that jointly leverages the targets' echo signals at all the receive BSs to precisely construct the image of these targets. To achieve this goal, we propose a two-phase approach. In Phase I, each receive BS recovers an individual image based on the sample covariance matrix of its received signals. Specifically, we propose a novel covariance-based imaging framework to jointly estimate effective scattering intensity and grid positions, which reduces the number of estimated parameters by leveraging channel statistical properties and allows grid adjustment to conform to target geometry. In Phase II, the CPU fuses the individual images of all the receivers to construct a high-quality image of all the targets. Specifically, we design edge-preserving natural neighbor interpolation (EP-NNI) to map individual heterogeneous images onto common and finer grids, and then propose a joint optimization framework to estimate fused scattering intensity and BS fields of view. Extensive numerical results show that the proposed scheme significantly enhances imaging performance, facilitating high-quality environment reconstruction for future 6G networks.
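As a rough illustration of the quantity Phase I starts from, the sketch below computes a sample covariance matrix from a list of complex received-signal snapshots. The function name `sample_covariance` and the snapshot layout (one list of per-antenna complex values per time sample) are assumptions made for this example; the abstract does not describe the paper's signal model or its imaging algorithm.

```python
def sample_covariance(snapshots):
    """Return R = (1/N) * sum_n y_n y_n^H for N complex snapshots y_n.

    `snapshots` is a list of N equal-length lists of complex numbers
    (an assumed layout: one entry per receive antenna).
    """
    n = len(snapshots)
    m = len(snapshots[0])
    cov = [[0j] * m for _ in range(m)]
    for y in snapshots:
        for i in range(m):
            for j in range(m):
                # Outer product entry: y_i times the conjugate of y_j
                cov[i][j] += y[i] * y[j].conjugate()
    return [[value / n for value in row] for row in cov]


# Two snapshots from a hypothetical two-antenna receiver
R = sample_covariance([[1 + 0j, 1j], [1 + 0j, -1j]])
```

The result is Hermitian by construction, so only the upper triangle carries independent information.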

Integrated Massive Communication and Target Localization in 6G Cell-Free Networks

Oct 16, 2025

Abstract:This paper presents an initial investigation into the combination of integrated sensing and communication (ISAC) and massive communication, both of which are widely regarded as key scenarios in sixth-generation (6G) wireless networks. Specifically, we consider a cell-free network comprising a large number of users, multiple targets, and distributed base stations (BSs). In each time slot, a random subset of users becomes active, transmitting pilot signals that can be scattered by the targets before reaching the BSs. Unlike conventional massive random access schemes, where the primary objectives are device activity detection and channel estimation, our framework also enables target localization by leveraging the multipath propagation effects introduced by the targets. However, due to the intricate dependency between user channels and target locations, characterizing the posterior distribution required for minimum mean-square error (MMSE) estimation presents significant computational challenges. To handle this problem, we propose a hybrid message passing-based framework that incorporates multiple approximations to mitigate computational complexity. Numerical results demonstrate that the proposed approach achieves high-accuracy device activity detection, channel estimation, and target localization simultaneously, validating the feasibility of embedding localization functionality into massive communication systems for future 6G networks.

Cognitive Surgery: The Awakening of Implicit Territorial Awareness in LLMs

Aug 20, 2025

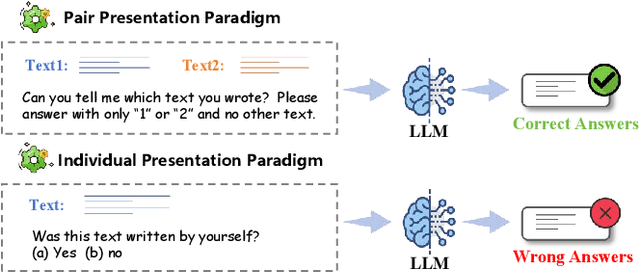

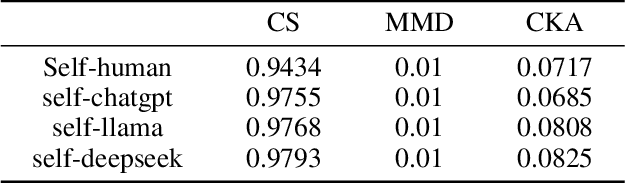

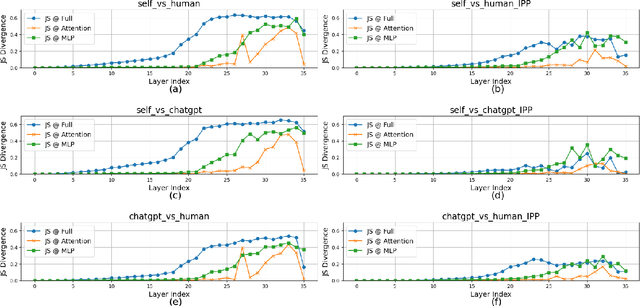

Abstract:Large language models (LLMs) have been shown to possess a degree of self-recognition capability: the ability to identify whether a given text was generated by themselves. Prior work has demonstrated that this capability is reliably expressed under the Pair Presentation Paradigm (PPP), where the model is presented with two texts and asked to choose which one it authored. However, performance deteriorates sharply under the Individual Presentation Paradigm (IPP), where the model is given a single text to judge authorship. Although this phenomenon has been observed, its underlying causes have not been systematically analyzed. In this paper, we first replicate existing findings to confirm that LLMs struggle to distinguish self- from other-generated text under IPP. We then investigate the reasons for this failure and attribute it to a phenomenon we term Implicit Territorial Awareness (ITA), the model's latent ability to distinguish self- and other-texts in representational space, which remains unexpressed in its output behavior. To awaken the ITA of LLMs, we propose Cognitive Surgery (CoSur), a novel framework comprising four main modules: representation extraction, territory construction, authorship discrimination, and cognitive editing. Experimental results demonstrate that our proposed method improves the performance of three different LLMs in the IPP scenario, achieving average accuracies of 83.25%, 66.19%, and 88.01%, respectively.

Low-Latency Terrestrial Interference Detection for Satellite-to-Device Communications

Jun 15, 2025

Abstract:Direct satellite-to-device communication is a promising future direction due to its lower latency and enhanced efficiency. However, intermittent and unpredictable terrestrial interference significantly affects system reliability and performance. Continuously employing sophisticated interference mitigation techniques is practically inefficient. Motivated by the periodic idle intervals characteristic of burst-mode satellite transmissions, this paper investigates online interference detection frameworks specifically tailored for satellite-to-device scenarios. We first rigorously formulate interference detection as a binary hypothesis testing problem, leveraging differences between Rayleigh (no interference) and Rice (interference present) distributions. Then, we propose a cumulative sum (CUSUM)-based online detector for scenarios with known interference directions, explicitly characterizing the trade-off between detection latency and false alarm rate, and establish its asymptotic optimality. For practical scenarios involving unknown interference direction, we further propose a generalized likelihood ratio (GLR)-based detection method, jointly estimating interference direction via the Root-MUSIC algorithm. Numerical results validate our theoretical findings and demonstrate that our proposed methods achieve high detection accuracy with remarkably low latency, highlighting their practical applicability in future satellite-to-device communication systems.
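To make the Rayleigh-versus-Rice test concrete, here is a minimal textbook CUSUM sketch on scalar envelope samples. It is not the paper's detector: it assumes the Rice parameter `nu` and the noise scale `sigma` are known, and the names `bessel_i0`, `rice_vs_rayleigh_llr`, and `cusum_detect` are hypothetical. Under these assumptions the per-sample log-likelihood ratio is $-\nu^2/(2\sigma^2) + \ln I_0(r\nu/\sigma^2)$, and the detector raises an alarm once the clipped running sum reaches a threshold.

```python
import math


def bessel_i0(x):
    """Modified Bessel function I0(x) from its power series (fine for moderate x)."""
    term, total, k = 1.0, 1.0, 1
    q = (x / 2.0) ** 2
    while term > 1e-16 * total:
        term *= q / (k * k)
        total += term
        k += 1
    return total


def rice_vs_rayleigh_llr(r, nu, sigma):
    """Log-likelihood ratio of Rice(nu, sigma) against Rayleigh(sigma) at envelope r."""
    return -nu ** 2 / (2 * sigma ** 2) + math.log(bessel_i0(r * nu / sigma ** 2))


def cusum_detect(samples, nu, sigma, threshold):
    """Return the index of the first alarm, or None if the threshold is never reached."""
    stat = 0.0
    for n, r in enumerate(samples):
        # CUSUM recursion: accumulate the LLR and clip at zero
        stat = max(0.0, stat + rice_vs_rayleigh_llr(r, nu, sigma))
        if stat >= threshold:
            return n
    return None


# Small envelopes first (no interference), then larger ones (interference present)
alarm = cusum_detect([0, 0, 0, 3, 3, 3, 3], nu=2.0, sigma=1.0, threshold=5.0)
```

Raising `threshold` lowers the false alarm rate at the cost of more samples before an alarm, which is the latency trade-off the abstract refers to.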

Scalable Transceiver Design for Multi-User Communication in FDD Massive MIMO Systems via Deep Learning

Apr 15, 2025

Abstract:This paper addresses the joint transceiver design, including pilot transmission, channel feature extraction and feedback, as well as precoding, for low-overhead downlink massive multiple-input multiple-output (MIMO) communication in frequency-division duplex (FDD) systems. Although deep learning (DL) has shown great potential in tackling this problem, existing methods often suffer from poor scalability in practical systems, as the solution obtained in the training phase works only for a fixed feedback capacity and a fixed number of users in the deployment phase. To address this limitation, we propose a novel DL-based framework composed of choreographed neural networks, which can utilize one training phase to generate all the transceiver solutions used in the deployment phase with varying sizes of feedback codebooks and numbers of users. The proposed framework includes a residual vector-quantized variational autoencoder (RVQ-VAE) for efficient channel feedback and an edge graph attention network (EGAT) for robust multiuser precoding. It can adapt to different feedback capacities by flexibly adjusting the RVQ codebook sizes using the hierarchical codebook structure, and scale with the number of users through a feedback module sharing scheme and the inherent scalability of EGAT. Moreover, a progressive training strategy is proposed to further enhance data transmission performance and generalization capability. Numerical results on a real-world dataset demonstrate the superior scalability and performance of our approach over existing methods.
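The sketch below illustrates generic residual vector quantization, which gives one intuition for why a staged codebook can serve different feedback budgets: each stage quantizes what the previous stages left over, so sending fewer stage indices yields a coarser reconstruction. It is only an illustration. The codebooks are hand-picked rather than learned, and `nearest`, `rvq_encode`, and `rvq_decode` are hypothetical names, not the paper's RVQ-VAE.

```python
def nearest(codebook, vector):
    """Index of the codeword with the smallest squared distance to `vector`."""
    def sq_dist(codeword):
        return sum((v - c) ** 2 for v, c in zip(vector, codeword))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def rvq_encode(x, codebooks):
    """Quantize x stage by stage; each stage encodes the previous stage's residual."""
    residual = list(x)
    indices = []
    for codebook in codebooks:
        i = nearest(codebook, residual)
        indices.append(i)
        residual = [r - c for r, c in zip(residual, codebook[i])]
    return indices


def rvq_decode(indices, codebooks):
    """Sum the selected codewords; passing fewer indices uses fewer stages."""
    recon = [0.0] * len(codebooks[0][0])
    for i, codebook in zip(indices, codebooks):
        recon = [a + c for a, c in zip(recon, codebook[i])]
    return recon


# Hand-picked two-stage codebooks, for illustration only
codebooks = [[[0, 0], [4, 4]], [[1, -1], [-1, 1]]]
indices = rvq_encode([5, 3], codebooks)      # stage indices to feed back
coarse = rvq_decode(indices[:1], codebooks)  # reconstruction from stage 1 only
fine = rvq_decode(indices, codebooks)        # reconstruction from both stages
```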

Intelligent Reflecting Surface Based Localization of Mixed Near-Field and Far-Field Targets

Feb 04, 2025

Abstract:This paper considers an intelligent reflecting surface (IRS)-assisted bi-static localization architecture for the sixth-generation (6G) integrated sensing and communication (ISAC) network. The system consists of a transmit user, a receive base station (BS), an IRS, and multiple targets in either the far-field or near-field region of the IRS. In particular, we focus on the challenging scenario where the line-of-sight (LOS) paths between targets and the BS are blocked, such that the orthogonal frequency division multiplexing (OFDM) signals emitted by the user reach the BS only via the user-target-IRS-BS path. Based on the signals received by the BS, our goal is to localize the targets by estimating their positions relative to the IRS, instead of to the BS. We show that subspace-based methods, such as the multiple signal classification (MUSIC) algorithm, can be applied to the BS's received signals to estimate the relative states from the targets to the IRS. To this end, we create a virtual signal by combining user-target-IRS-BS channels over various time slots. By applying MUSIC to this virtual signal, we are able to detect the far-field targets and the near-field targets, and estimate the angles of arrival (AOAs) and/or ranges from the targets to the IRS. Furthermore, we theoretically verify that the proposed method can perfectly estimate the relative states from the targets to the IRS in the ideal case with infinite coherence blocks. Numerical results verify the effectiveness of our proposed IRS-assisted localization scheme. Our paper demonstrates the potential of employing passive anchors, i.e., IRSs, to improve the sensing coverage of the active anchors, i.e., BSs.

Joint Transmission and Compression Design for 6G Networked Sensing with Limited-Capacity Backhaul

Aug 06, 2024

Abstract:This paper considers networked sensing in a cellular network, where multiple base stations (BSs) first compress their received echo signals from multiple targets and then forward the quantized signals to the cloud via limited-capacity backhaul links, such that the cloud can leverage all useful echo signals to perform high-resolution localization. Under this setup, we characterize the posterior Cramér-Rao bound (PCRB) for localizing all the targets as a function of the transmit covariance matrix and the compression noise covariance matrix of each BS. Then, a PCRB minimization problem subject to the transmit power constraints and the backhaul capacity constraints is formulated to jointly design the BSs' transmission and compression strategies. We propose an efficient algorithm to solve this problem based on the alternating optimization technique. Specifically, it is shown that when either the transmit covariance matrices or the compression noise covariance matrices are fixed, the successive convex approximation technique can be leveraged to optimize the other type of covariance matrices locally. Numerical results are provided to verify the effectiveness of our proposed algorithm.

Deep Learning for Joint Design of Pilot, Channel Feedback, and Hybrid Beamforming in FDD Massive MIMO-OFDM Systems

Dec 10, 2023

Abstract:This letter considers the transceiver design in frequency division duplex (FDD) massive multiple-input multiple-output (MIMO) orthogonal frequency division multiplexing (OFDM) systems for high-quality data transmission. We propose a novel deep learning based framework where the procedures of pilot design, channel feedback, and hybrid beamforming are realized by carefully crafted deep neural networks. All the considered modules are jointly learned in an end-to-end manner, and a graph neural network is adopted to effectively capture interactions between beamformers based on the built graphical representation. Numerical results validate the effectiveness of our method.

Hierarchical Beam Alignment for Millimeter-Wave Communication Systems: A Deep Learning Approach

Aug 23, 2023

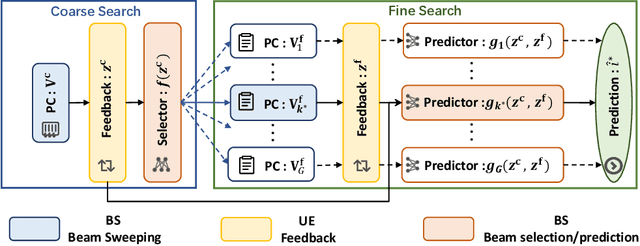

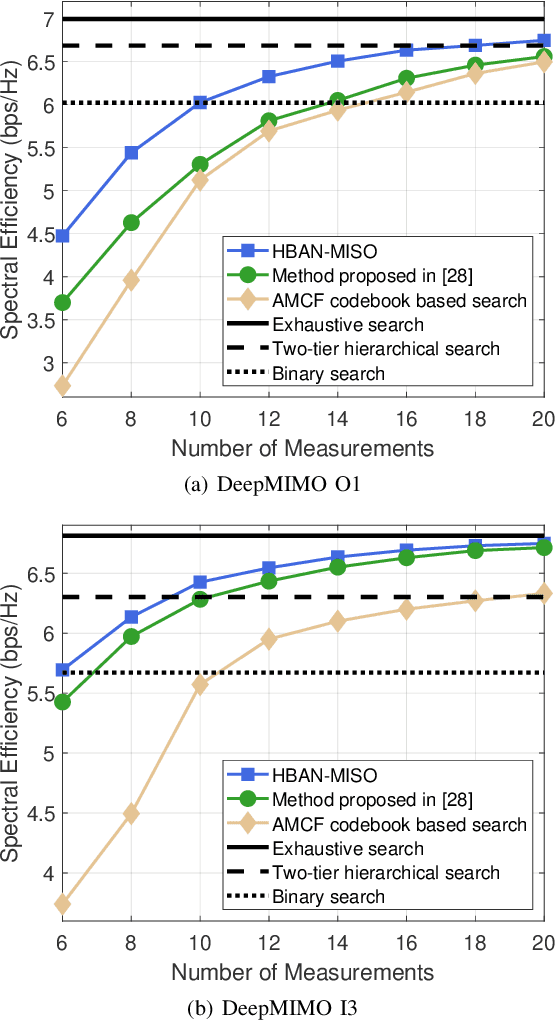

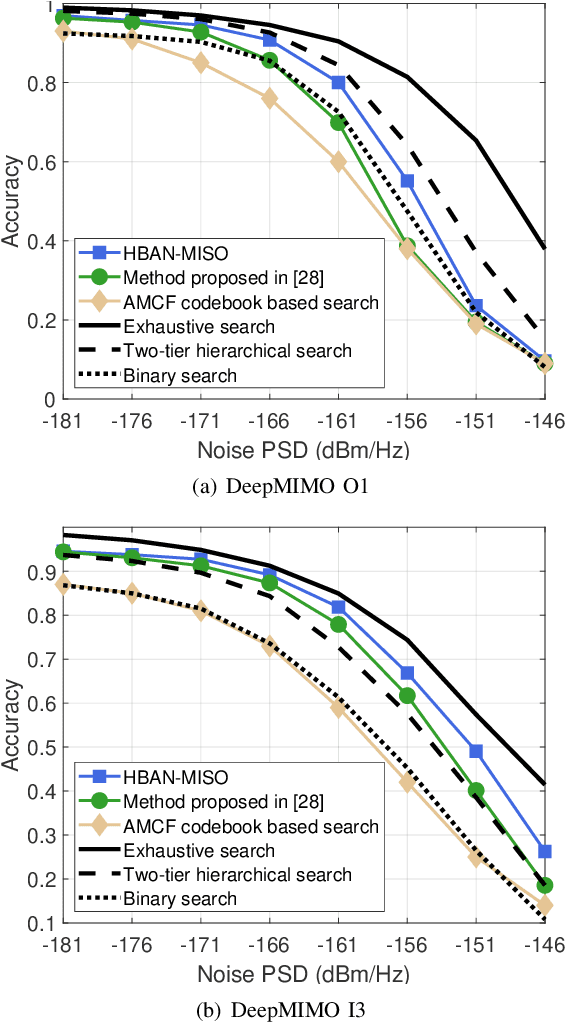

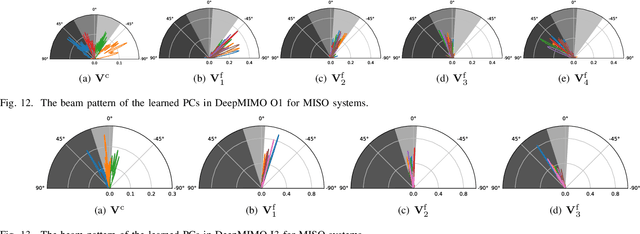

Abstract:Fast and precise beam alignment is crucial for high-quality data transmission in millimeter-wave (mmWave) communication systems, where large-scale antenna arrays are utilized to overcome the severe propagation loss. To tackle this challenging problem, we propose a novel deep learning-based hierarchical beam alignment method for both multiple-input single-output (MISO) and multiple-input multiple-output (MIMO) systems, which learns two tiers of probing codebooks (PCs) and uses their measurements to predict the optimal beam in a coarse-to-fine search manner. Specifically, a hierarchical beam alignment network (HBAN) is developed for MISO systems, which first performs coarse channel measurement using a tier-1 PC, then selects a tier-2 PC for fine channel measurement, and finally predicts the optimal beam based on both coarse and fine measurements. The proposed HBAN is trained in two steps: the tier-1 PC and the tier-2 PC selector are first trained jointly, followed by the joint training of all the tier-2 PCs and beam predictors. Furthermore, an HBAN for MIMO systems is proposed to directly predict the optimal beam pair without performing beam alignment individually at the transmitter and receiver. Numerical results demonstrate that the proposed HBANs are superior to the state-of-the-art methods in both alignment accuracy and signaling overhead reduction.

Cooperative Multi-Cell Massive Access with Temporally Correlated Activity

Apr 19, 2023

Abstract:This paper investigates the problem of activity detection and channel estimation in cooperative multi-cell massive access systems with temporally correlated activity, where all access points (APs) are connected to a central unit via fronthaul links. We propose user-centric AP cooperation to reduce the computational burden and introduce a generalized sliding-window detection strategy to fully exploit the temporal correlation in activity. By establishing the probabilistic model associated with the factor graph representation, we propose a scalable Dynamic Compressed Sensing-based Multiple Measurement Vector Generalized Approximate Message Passing (DCS-MMV-GAMP) algorithm from the perspective of Bayesian inference. Therein, the activity likelihood is refined by performing standard message passing among the activities in the spatial-temporal domain, and GAMP is employed for efficient channel estimation. Furthermore, we develop two schemes, quantize-and-forward (QF) and detect-and-forward (DF), based on DCS-MMV-GAMP for the finite-fronthaul-capacity scenario, which are extensively evaluated under various system limits. Numerical results verify the significant superiority of the proposed approach over the benchmarks. Moreover, it is revealed that QF usually achieves superior performance when the number of antennas is small, whereas DF becomes preferable under limited fronthaul capacity when large-scale antenna arrays are deployed.
