Junyi Yang

1024-Channel 0.8V 23.9-nW/Channel Event-based Compute In-memory Neural Spike Detector

Dec 09, 2025

Abstract: The increasing data rate has become a major issue confronting next-generation intracortical brain-machine interfaces (iBMIs). The growing number of recording sites requires complex analog wiring and leads to high digitization power consumption. Compressive event-based neural frontends have been used in high-density neural implants to support the simultaneous recording of more channels. Event-based frontends (EBFs) convert recorded signals into asynchronous digital events via delta modulation and can inherently achieve considerable compression. However, EBFs are prone to false events that do not correspond to neural spikes. Spike detection (SPD) is a key process in the iBMI pipeline to detect neural spikes and further reduce the data rate. Conventional digital SPD, however, suffers from growing buffer sizes and the power cost of frequent memory access, and conventional spike emphasizers are not compatible with EBFs. In this work, we introduce an event-based spike detection (Ev-SPD) algorithm for scalable compressive EBFs. To implement the algorithm effectively, we propose a novel low-power 10-T eDRAM-SRAM hybrid random-access memory in-memory computing (IMC) bitcell for event processing. We fabricated the proposed 1024-channel IMC SPD macro in a 65 nm process and tested it with both a synthetic dataset and Neuropixel recordings. The macro achieved a spike detection accuracy of 96.06% on the synthetic dataset, and 95.08% similarity and 0.05 firing pattern MAE on Neuropixel recordings. The event-based IMC SPD macro consumes 23.9 nW per channel for spike detection and occupies 375 um^2 per channel. Our work presents an SPD scheme compatible with compressive EBFs for high-density iBMIs, achieving ultra-low power consumption with an IMC architecture while maintaining considerable accuracy.
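
The delta modulation step mentioned in the abstract can be illustrated with a minimal sketch. This is a generic software model of delta modulation, not the paper's frontend circuit or its Ev-SPD algorithm; the function name `delta_modulate`, the `threshold` parameter, and the sample values are assumptions chosen for illustration.

```python
def delta_modulate(samples, threshold):
    """Convert a sampled signal into (index, polarity) events.

    An UP (+1) or DOWN (-1) event is emitted each time the signal moves
    at least `threshold` away from a tracked reference level, and the
    reference then steps by `threshold` in that direction.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events = []
    if not samples:
        return events
    reference = samples[0]  # start tracking from the first sample
    for i, x in enumerate(samples[1:], start=1):
        # A large jump can produce several events at the same index.
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events


if __name__ == "__main__":
    signal = [0.0, 0.2, 1.1, 2.5, 2.4, 0.3]
    print(delta_modulate(signal, threshold=1.0))
```

Samples that stay within one threshold of the reference produce no output at all, which is where the inherent compression comes from. Noise that crosses the threshold still produces events, which is why a spike detection stage is needed afterwards.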

Deep Learning for Joint Design of Pilot, Channel Feedback, and Hybrid Beamforming in FDD Massive MIMO-OFDM Systems

Dec 10, 2023

Abstract: This letter considers the transceiver design in frequency division duplex (FDD) massive multiple-input multiple-output (MIMO) orthogonal frequency division multiplexing (OFDM) systems for high-quality data transmission. We propose a novel deep learning-based framework in which pilot design, channel feedback, and hybrid beamforming are realized by carefully crafted deep neural networks. All the considered modules are jointly learned in an end-to-end manner, and a graph neural network is adopted to effectively capture interactions between beamformers based on the constructed graphical representation. Numerical results validate the effectiveness of our method.

Hierarchical Beam Alignment for Millimeter-Wave Communication Systems: A Deep Learning Approach

Aug 23, 2023

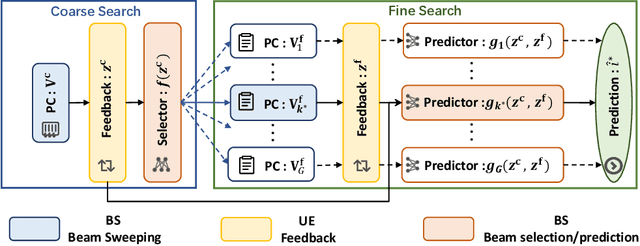

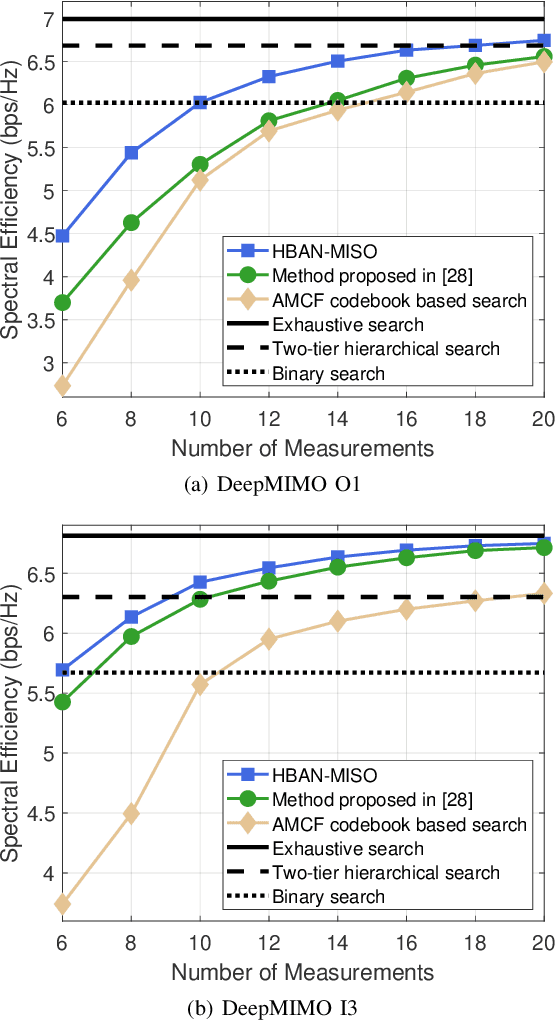

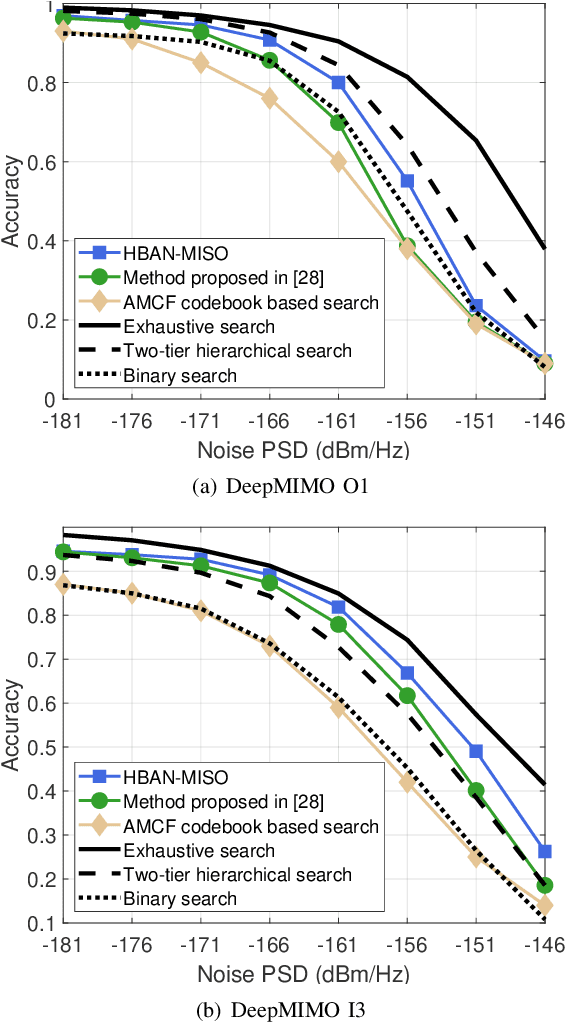

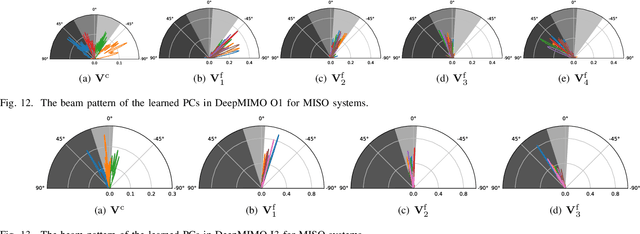

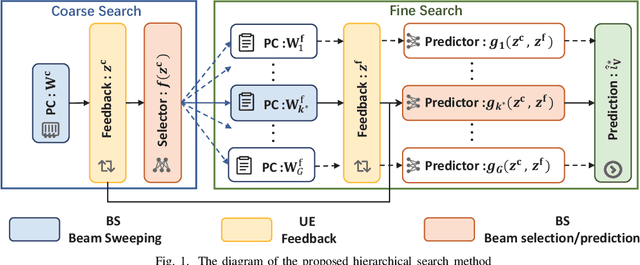

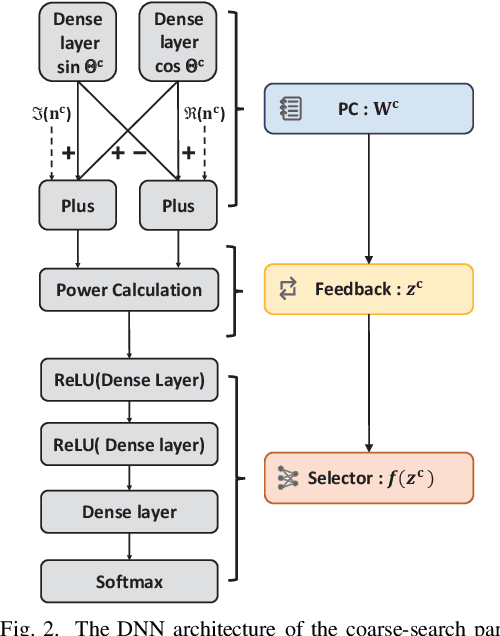

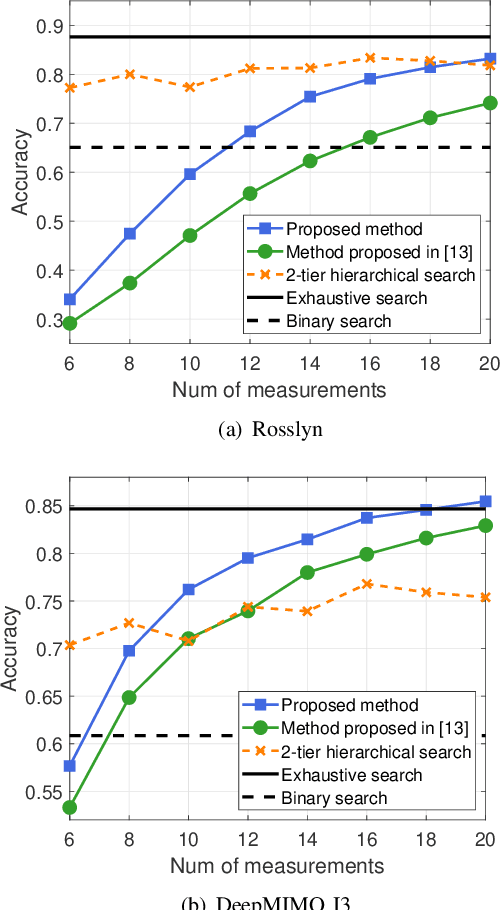

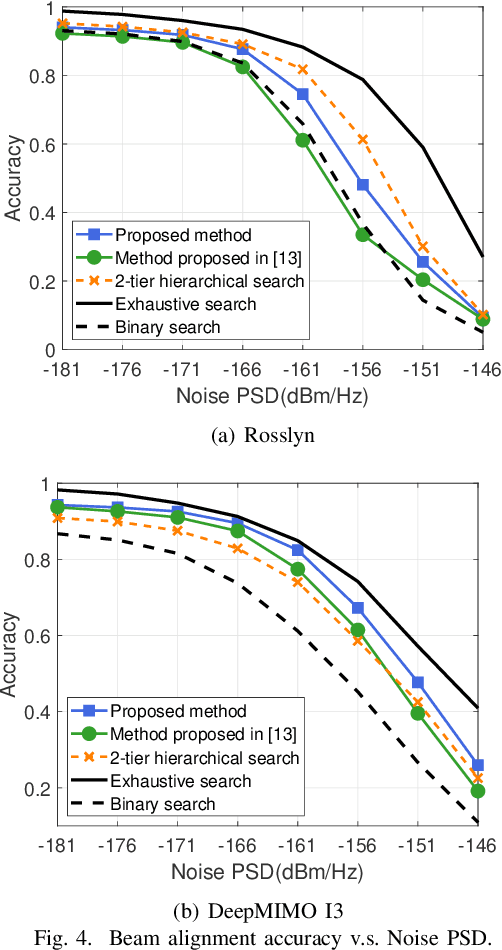

Abstract: Fast and precise beam alignment is crucial for high-quality data transmission in millimeter-wave (mmWave) communication systems, where large-scale antenna arrays are utilized to overcome the severe propagation loss. To tackle this challenging problem, we propose a novel deep learning-based hierarchical beam alignment method for both multiple-input single-output (MISO) and multiple-input multiple-output (MIMO) systems, which learns two tiers of probing codebooks (PCs) and uses their measurements to predict the optimal beam in a coarse-to-fine search manner. Specifically, a hierarchical beam alignment network (HBAN) is developed for MISO systems, which first performs coarse channel measurement using a tier-1 PC, then selects a tier-2 PC for fine channel measurement, and finally predicts the optimal beam based on both coarse and fine measurements. The proposed HBAN is trained in two steps: the tier-1 PC and the tier-2 PC selector are first trained jointly, followed by the joint training of all the tier-2 PCs and beam predictors. Furthermore, an HBAN for MIMO systems is proposed to directly predict the optimal beam pair without performing beam alignment individually at the transmitter and receiver. Numerical results demonstrate that the proposed HBANs are superior to the state-of-the-art methods in both alignment accuracy and signaling overhead reduction.

Deep Learning for Hierarchical Beam Alignment in mmWave Communication Systems

Sep 08, 2022

Abstract: Fast and precise beam alignment is crucial to support high-quality data transmission in millimeter-wave (mmWave) communication systems. In this work, we propose a novel deep learning-based hierarchical beam alignment method that learns two tiers of probing codebooks (PCs) and uses their measurements to predict the optimal beam in a coarse-to-fine search manner. Specifically, the proposed method first performs coarse channel measurement using the tier-1 PC, then selects a tier-2 PC for fine channel measurement, and finally predicts the optimal beam based on both coarse and fine measurements. The proposed deep neural network (DNN) architecture is trained in two steps. First, the tier-1 PC and the tier-2 PC selector are trained jointly. After that, all the tier-2 PCs together with the optimal beam predictors are trained jointly. The learned hierarchical PCs can capture the features of the propagation environment. Numerical results based on realistic ray-tracing datasets demonstrate that the proposed method is superior to the state-of-the-art beam alignment methods in both alignment accuracy and sweeping overhead.
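
The coarse-to-fine idea can be sketched with a classical, non-learned two-tier search. This is not the paper's method: the codebooks here are fixed DFT-style beams rather than learned PCs, and the final beam is picked by direct measurement rather than by a DNN predictor. The array size, group size, and all function names are assumptions for illustration.

```python
import cmath


def steering(n_ant, freq):
    """Steering vector of a uniform linear array; `freq` is the spatial
    frequency in cycles per antenna element."""
    return [cmath.exp(2j * cmath.pi * freq * n) for n in range(n_ant)]


def beam_power(channel, beam):
    """Received power when `beam` probes `channel`. A beam shorter than
    the array means only the first antennas are active (a wider beam)."""
    return abs(sum(b.conjugate() * h for b, h in zip(beam, channel))) ** 2


def exhaustive_search(channel, n_ant=16):
    """Baseline: measure every narrow beam and return the best index."""
    powers = [beam_power(channel, steering(n_ant, m / n_ant)) for m in range(n_ant)]
    return max(range(n_ant), key=powers.__getitem__)


def hierarchical_search(channel, n_ant=16, group=4):
    """Two-tier search: wide beams pick a group, narrow beams refine it.

    Returns (best narrow-beam index, number of measurements used).
    """
    n_wide = n_ant // group
    # Tier 1: one wide beam per group, pointed at the centre of the group
    # and formed with a short sub-array so that it covers the whole group.
    tier1 = []
    for k in range(n_wide):
        centre = (k * group + (group - 1) / 2) / n_ant
        tier1.append(beam_power(channel, steering(n_wide, centre)))
    best_group = max(range(n_wide), key=tier1.__getitem__)
    # Tier 2: measure only the narrow beams inside the selected group.
    candidates = range(best_group * group, (best_group + 1) * group)
    best = max(candidates,
               key=lambda m: beam_power(channel, steering(n_ant, m / n_ant)))
    return best, n_wide + group


if __name__ == "__main__":
    # Single-path channel aligned with narrow beam 5 (an idealised case).
    channel = steering(16, 5 / 16)
    print(hierarchical_search(channel), exhaustive_search(channel))
```

In this idealised single-path setting, the two-tier search uses 8 measurements instead of 16 and finds the same beam as the exhaustive sweep. With noise or multipath, a fixed tier-1 codebook can select the wrong group, which is the kind of weakness that learned codebooks and predictors aim to address.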

LEO Satellite Access Network (LEO-SAN) Towards 6G: Challenges and Approaches

Jul 25, 2022

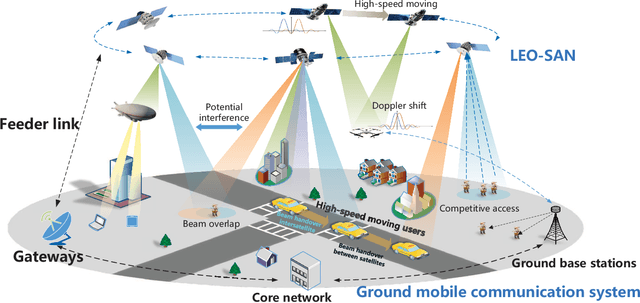

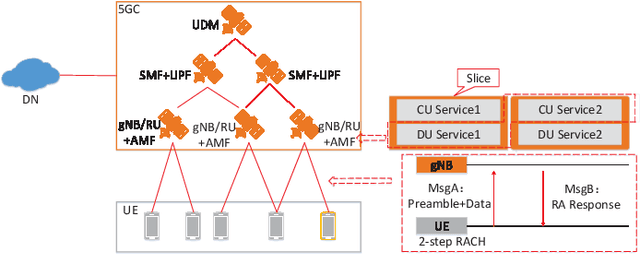

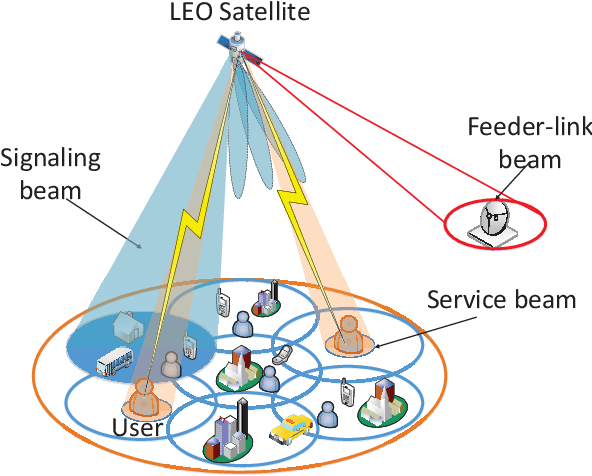

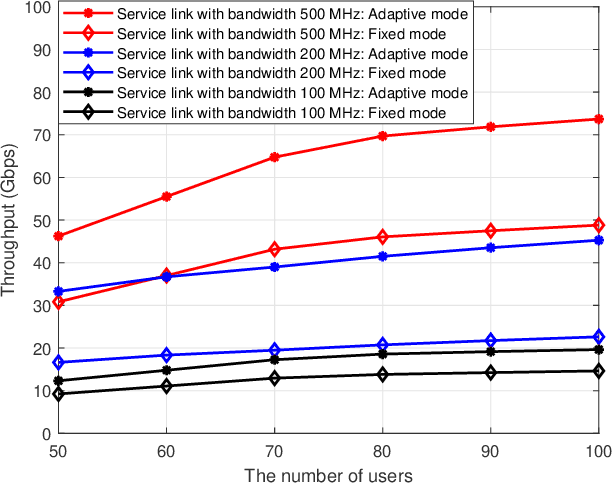

Abstract: With the rapid development of satellite communication technologies, the space-based access network has been envisioned as a promising complementary part of the future 6G network. Aside from terrestrial base stations, satellite nodes, especially low-earth-orbit (LEO) satellites, can also serve as base stations for Internet access and constitute the LEO-satellite-based access network (LEO-SAN). LEO-SAN is expected to provide seamless massive access and extended coverage with high signal quality. However, its practical implementation still faces significant technical challenges, e.g., the high mobility and limited communication payload budget of LEO satellite nodes. This paper aims to reveal the main technical issues that have not been fully addressed by existing LEO-SAN designs, from three aspects: random access, beam management, and Doppler-resistant transmission technologies. More specifically, the critical issues of random access in LEO-SAN are discussed regarding low flexibility, long transmission delay, and inefficient handshakes. Then the beam management for LEO-SAN is investigated in complex propagation environments under the constraints of high mobility and limited payload budget. Furthermore, the influence of Doppler shifts on LEO-SAN is explored. Promising technologies to address each of these challenges are also discussed. Finally, future research directions are envisioned.
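
To give a sense of scale for the Doppler issue, the standard first-order Doppler formula can be evaluated directly. The satellite speed (7.5 km/s) and carrier frequency (2 GHz) below are illustrative assumptions, not values from the paper, and `doppler_shift_hz` is a hypothetical helper name.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift_hz(speed_mps, carrier_hz, angle_rad):
    """First-order Doppler shift f_d = (v / c) * f_c * cos(angle), where
    `angle_rad` is the angle between the velocity vector and the line of
    sight to the receiver."""
    return speed_mps / SPEED_OF_LIGHT * carrier_hz * math.cos(angle_rad)


if __name__ == "__main__":
    # Assumed values: 7.5 km/s orbital speed, 2 GHz carrier, worst-case geometry.
    print(f"{doppler_shift_hz(7_500.0, 2e9, 0.0):.0f} Hz")
```

Under these assumptions, the worst-case shift is roughly 50 kHz, and it sweeps through zero as the satellite passes overhead, so the receiver must track a fast-changing offset.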

Image Restoration Using Deep Regulated Convolutional Networks

Oct 19, 2019

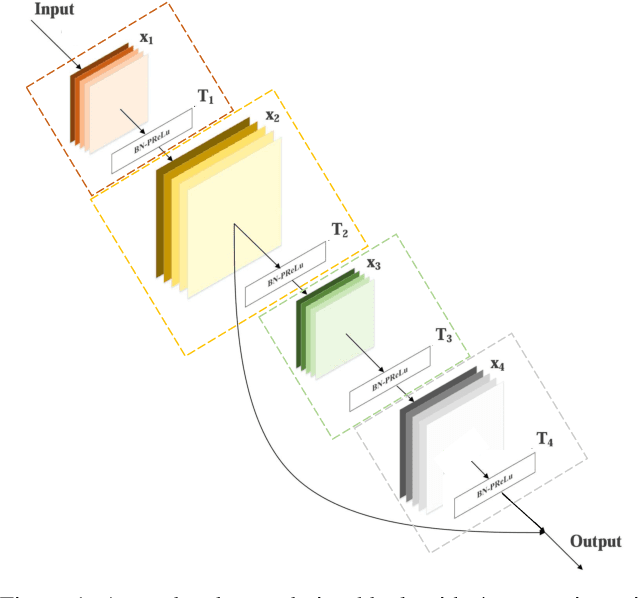

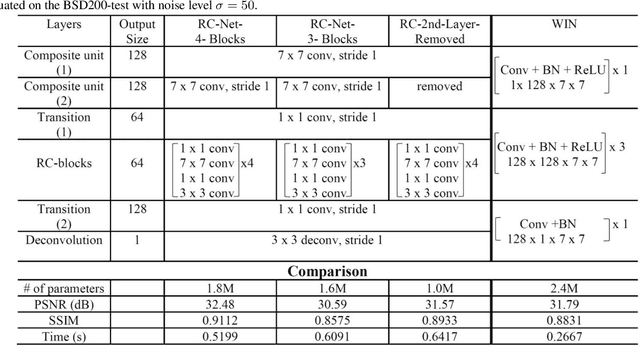

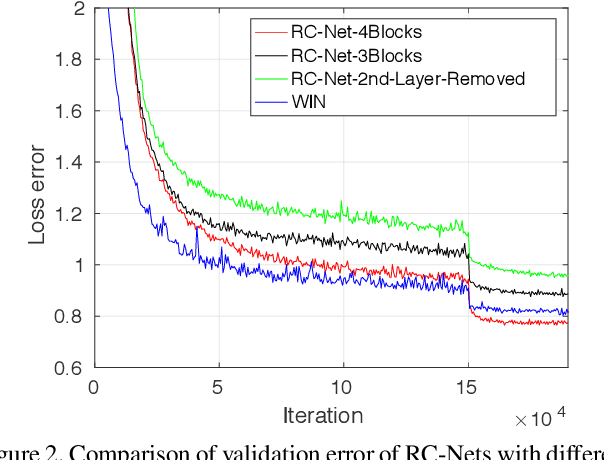

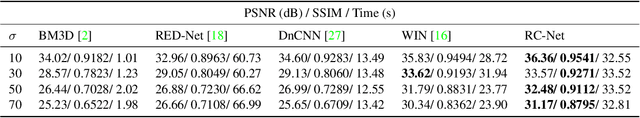

Abstract: While the depth of convolutional neural networks has attracted substantial attention in deep learning research, the width of these networks has recently received greater interest. The width of networks, defined as the size of the receptive fields and the density of the channels, has proven crucial in low-level vision tasks such as image denoising and restoration. However, the limited generalization ability caused by increased network width creates a bottleneck in designing wider networks. In this paper, we propose the Deep Regulated Convolutional Network (RC-Net), a deep network composed of regulated sub-network blocks cascaded by skip-connections, to overcome this bottleneck. Specifically, the Regulated Convolution block (RC-block), which combines large and small convolution filters, balances the effectiveness of prominent feature extraction and the generalization ability of the network. RC-Nets have several compelling advantages: they embrace diversified features through large-small filter combinations, alleviate hazy boundaries and blurred details in image denoising and super-resolution problems, and stabilize the learning process. Our proposed RC-Nets outperform state-of-the-art approaches with significant performance gains in various image restoration tasks while demonstrating promising generalization ability. The code is available at https://github.com/cswin/RC-Nets.
