Wei-Te Chen

RakutenAI-7B: Extending Large Language Models for Japanese

Mar 21, 2024

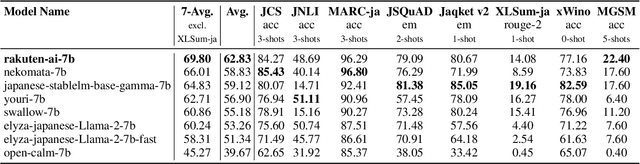

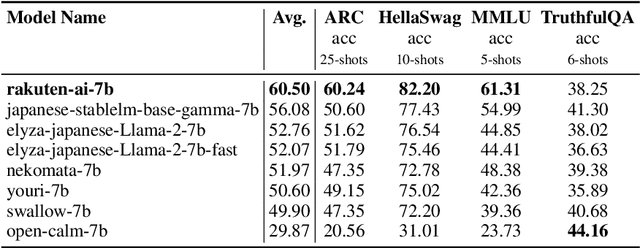

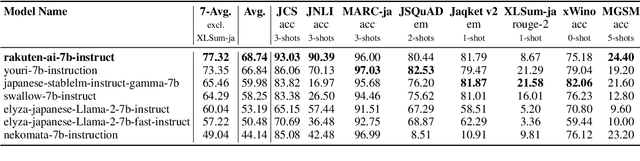

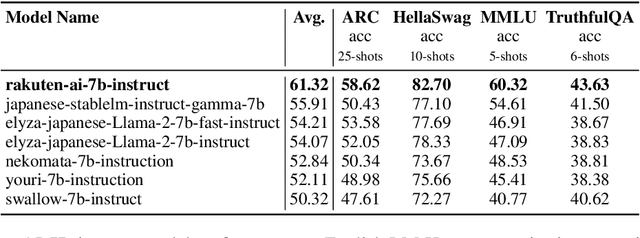

Abstract:We introduce RakutenAI-7B, a suite of Japanese-oriented large language models that achieve the best performance on the Japanese LM Harness benchmarks among the open 7B models. Along with the foundation model, we release instruction- and chat-tuned models, RakutenAI-7B-instruct and RakutenAI-7B-chat respectively, under the Apache 2.0 license.

AE-smnsMLC: Multi-Label Classification with Semantic Matching and Negative Label Sampling for Product Attribute Value Extraction

Oct 11, 2023

Abstract:Product attribute value extraction plays an important role for many real-world applications in e-Commerce such as product search and recommendation. Previous methods treat it as a sequence labeling task that needs more annotation for position of values in the product text. This limits their application to real-world scenario in which only attribute values are weakly-annotated for each product without their position. Moreover, these methods only use product text (i.e., product title and description) and do not consider the semantic connection between the multiple attribute values of a given product and its text, which can help attribute value extraction. In this paper, we reformulate this task as a multi-label classification task that can be applied for real-world scenario in which only annotation of attribute values is available to train models (i.e., annotation of positional information of attribute values is not available). We propose a classification model with semantic matching and negative label sampling for attribute value extraction. Semantic matching aims to capture semantic interactions between attribute values of a given product and its text. Negative label sampling aims to enhance the model's ability of distinguishing similar values belonging to the same attribute. Experimental results on three subsets of a large real-world e-Commerce dataset demonstrate the effectiveness and superiority of our proposed model.

Knowledge-Enhanced Multi-Label Few-Shot Product Attribute-Value Extraction

Aug 16, 2023Abstract:Existing attribute-value extraction (AVE) models require large quantities of labeled data for training. However, new products with new attribute-value pairs enter the market every day in real-world e-Commerce. Thus, we formulate AVE in multi-label few-shot learning (FSL), aiming to extract unseen attribute value pairs based on a small number of training examples. We propose a Knowledge-Enhanced Attentive Framework (KEAF) based on prototypical networks, leveraging the generated label description and category information to learn more discriminative prototypes. Besides, KEAF integrates with hybrid attention to reduce noise and capture more informative semantics for each class by calculating the label-relevant and query-related weights. To achieve multi-label inference, KEAF further learns a dynamic threshold by integrating the semantic information from both the support set and the query set. Extensive experiments with ablation studies conducted on two datasets demonstrate that KEAF outperforms other SOTA models for information extraction in FSL. The code can be found at: https://github.com/gjiaying/KEAF

A Unified Generative Approach to Product Attribute-Value Identification

Jun 09, 2023Abstract:Product attribute-value identification (PAVI) has been studied to link products on e-commerce sites with their attribute values (e.g., <Material, Cotton>) using product text as clues. Technical demands from real-world e-commerce platforms require PAVI methods to handle unseen values, multi-attribute values, and canonicalized values, which are only partly addressed in existing extraction- and classification-based approaches. Motivated by this, we explore a generative approach to the PAVI task. We finetune a pre-trained generative model, T5, to decode a set of attribute-value pairs as a target sequence from the given product text. Since the attribute value pairs are unordered set elements, how to linearize them will matter; we, thus, explore methods of composing an attribute-value pair and ordering the pairs for the task. Experimental results confirm that our generation-based approach outperforms the existing extraction and classification-based methods on large-scale real-world datasets meant for those methods.

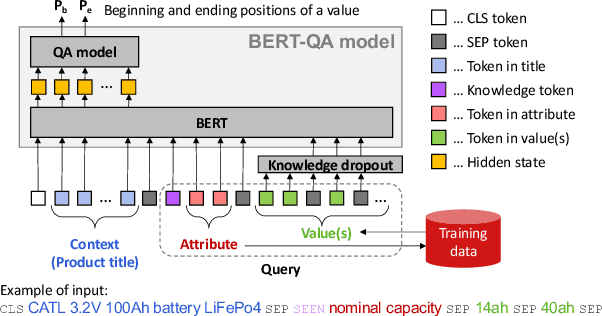

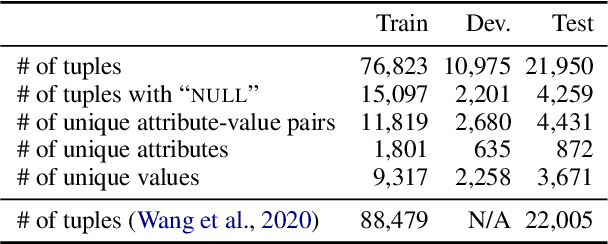

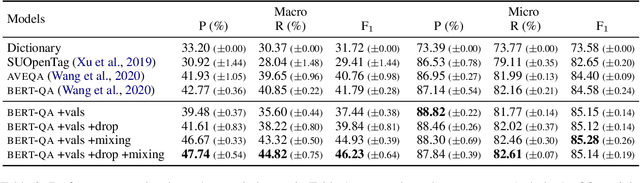

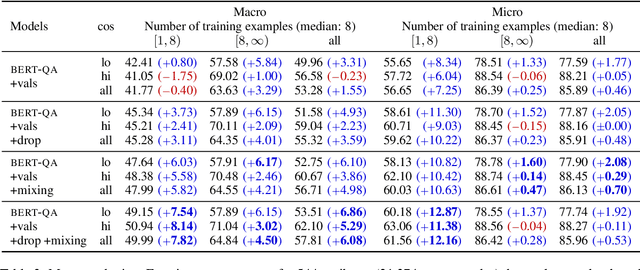

Simple and Effective Knowledge-Driven Query Expansion for QA-Based Product Attribute Extraction

Jun 28, 2022

Abstract:A key challenge in attribute value extraction (AVE) from e-commerce sites is how to handle a large number of attributes for diverse products. Although this challenge is partially addressed by a question answering (QA) approach which finds a value in product data for a given query (attribute), it does not work effectively for rare and ambiguous queries. We thus propose simple knowledge-driven query expansion based on possible answers (values) of a query (attribute) for QA-based AVE. We retrieve values of a query (attribute) from the training data to expand the query. We train a model with two tricks, knowledge dropout and knowledge token mixing, which mimic the imperfection of the value knowledge in testing. Experimental results on our cleaned version of AliExpress dataset show that our method improves the performance of AVE (+6.08 macro F1), especially for rare and ambiguous attributes (+7.82 and +6.86 macro F1, respectively).

* Published at ACL 2022

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge