Vladimir Ivanovic

REFINE: Reachability-based Trajectory Design using Robust Feedback Linearization and Zonotopes

Nov 22, 2022Abstract:Performing real-time receding horizon motion planning for autonomous vehicles while providing safety guarantees remains difficult. This is because existing methods to accurately predict ego vehicle behavior under a chosen controller use online numerical integration that requires a fine time discretization and thereby adversely affects real-time performance. To address this limitation, several recent papers have proposed to apply offline reachability analysis to conservatively predict the behavior of the ego vehicle. This reachable set can be constructed by utilizing a simplified model whose behavior is assumed a priori to conservatively bound the dynamics of a full-order model. However, guaranteeing that one satisfies this assumption is challenging. This paper proposes a framework named REFINE to overcome the limitations of these existing approaches. REFINE utilizes a parameterized robust controller that partially linearizes the vehicle dynamics even in the presence of modeling error. Zonotope-based reachability analysis is then performed on the closed-loop, full-order vehicle dynamics to compute the corresponding control-parameterized, over-approximate Forward Reachable Sets (FRS). Because reachability analysis is applied to the full-order model, the potential conservativeness introduced by using a simplified model is avoided. The pre-computed, control-parameterized FRS is then used online in an optimization framework to ensure safety. The proposed method is compared to several state of the art methods during a simulation-based evaluation on a full-size vehicle model and is evaluated on a 1/10th race car robot in real hardware testing. In contrast to existing methods, REFINE is shown to enable the vehicle to safely navigate itself through complex environments.

Robust AI Driving Strategy for Autonomous Vehicles

Jul 16, 2022

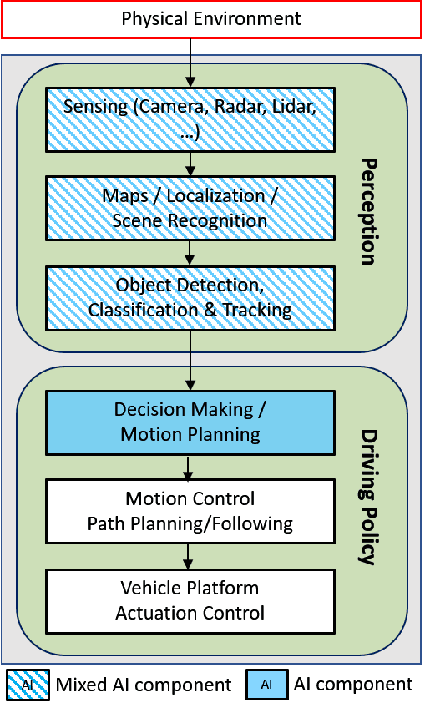

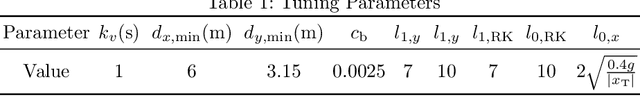

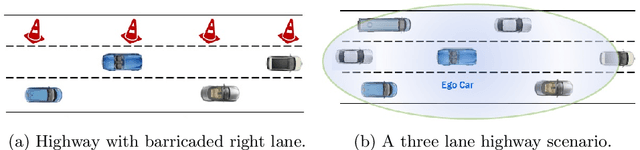

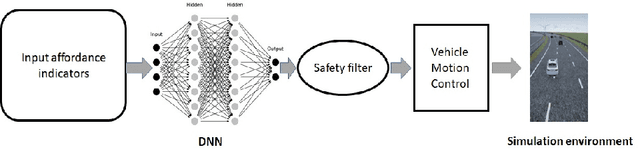

Abstract:There has been significant progress in sensing, perception, and localization for automated driving, However, due to the wide spectrum of traffic/road structure scenarios and the long tail distribution of human driver behavior, it has remained an open challenge for an intelligent vehicle to always know how to make and execute the best decision on road given available sensing / perception / localization information. In this chapter, we talk about how artificial intelligence and more specifically, reinforcement learning, can take advantage of operational knowledge and safety reflex to make strategical and tactical decisions. We discuss some challenging problems related to the robustness of reinforcement learning solutions and their implications to the practical design of driving strategies for autonomous vehicles. We focus on automated driving on highway and the integration of reinforcement learning, vehicle motion control, and control barrier function, leading to a robust AI driving strategy that can learn and adapt safely.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge