Eric Tseng

Robust AI Driving Strategy for Autonomous Vehicles

Jul 16, 2022

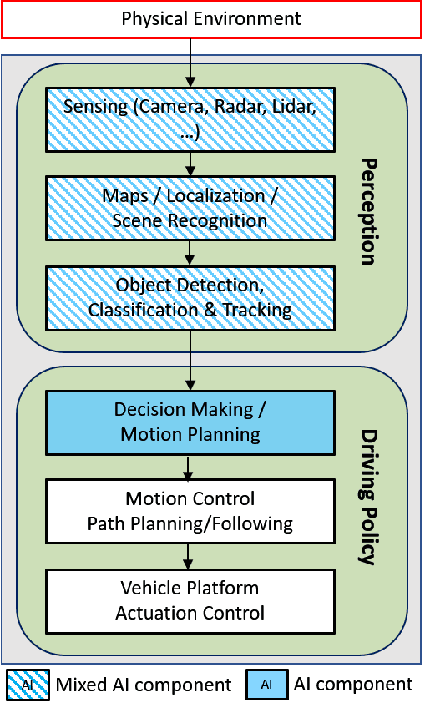

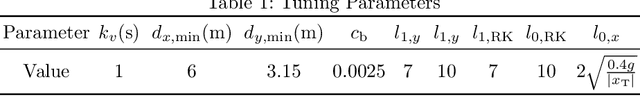

Abstract:There has been significant progress in sensing, perception, and localization for automated driving, However, due to the wide spectrum of traffic/road structure scenarios and the long tail distribution of human driver behavior, it has remained an open challenge for an intelligent vehicle to always know how to make and execute the best decision on road given available sensing / perception / localization information. In this chapter, we talk about how artificial intelligence and more specifically, reinforcement learning, can take advantage of operational knowledge and safety reflex to make strategical and tactical decisions. We discuss some challenging problems related to the robustness of reinforcement learning solutions and their implications to the practical design of driving strategies for autonomous vehicles. We focus on automated driving on highway and the integration of reinforcement learning, vehicle motion control, and control barrier function, leading to a robust AI driving strategy that can learn and adapt safely.

Autonomous Highway Driving using Deep Reinforcement Learning

Mar 29, 2019

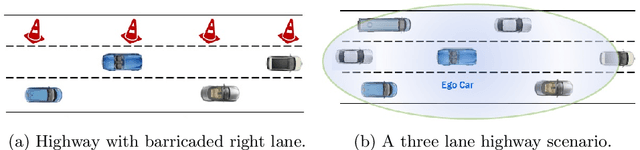

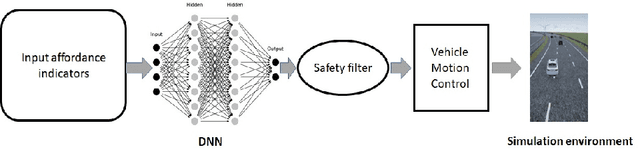

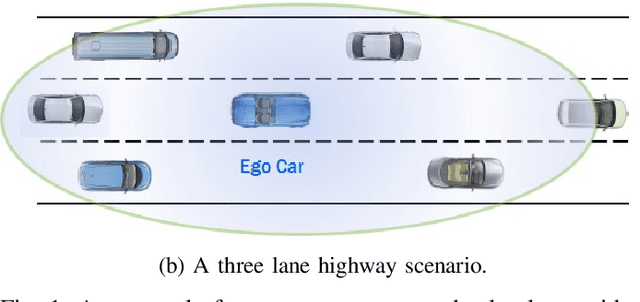

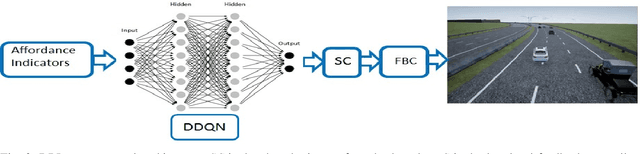

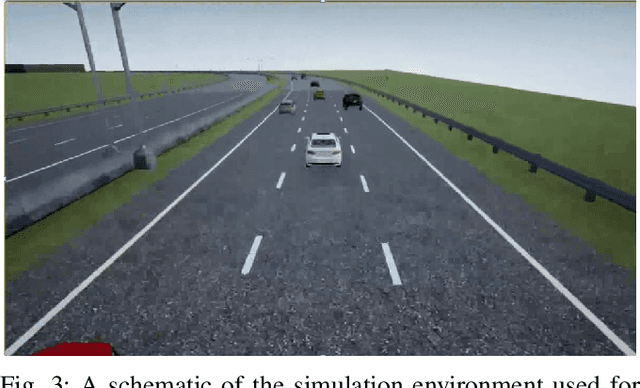

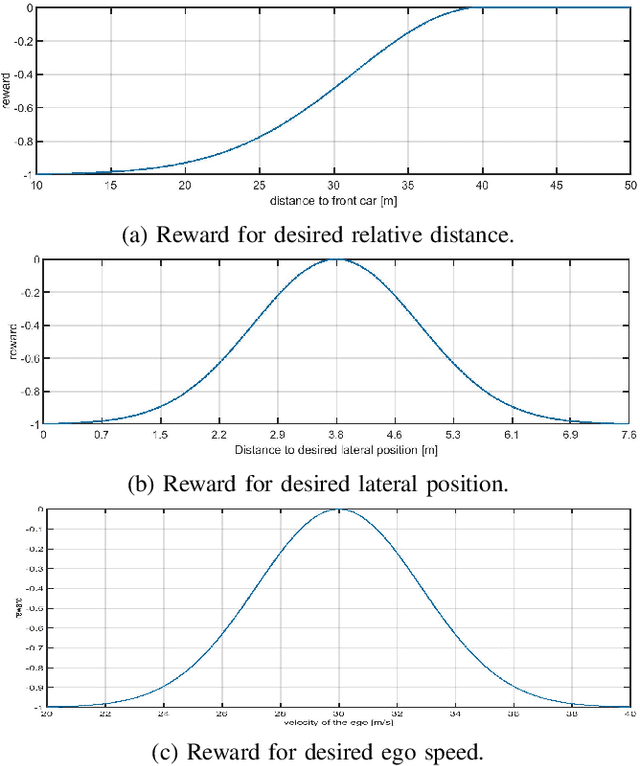

Abstract:The operational space of an autonomous vehicle (AV) can be diverse and vary significantly. This may lead to a scenario that was not postulated in the design phase. Due to this, formulating a rule based decision maker for selecting maneuvers may not be ideal. Similarly, it may not be effective to design an a-priori cost function and then solve the optimal control problem in real-time. In order to address these issues and to avoid peculiar behaviors when encountering unforeseen scenario, we propose a reinforcement learning (RL) based method, where the ego car, i.e., an autonomous vehicle, learns to make decisions by directly interacting with simulated traffic. The decision maker for AV is implemented as a deep neural network providing an action choice for a given system state. In a critical application such as driving, an RL agent without explicit notion of safety may not converge or it may need extremely large number of samples before finding a reliable policy. To best address the issue, this paper incorporates reinforcement learning with an additional short horizon safety check (SC). In a critical scenario, the safety check will also provide an alternate safe action to the agent provided if it exists. This leads to two novel contributions. First, it generalizes the states that could lead to undesirable "near-misses" or "collisions ". Second, inclusion of safety check can provide a safe and stable training environment. This significantly enhances learning efficiency without inhibiting meaningful exploration to ensure safe and optimal learned behavior. We demonstrate the performance of the developed algorithm in highway driving scenario where the trained AV encounters varying traffic density in a highway setting.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge