Vladimir Berman

The Morphemic Origin of Zipf's Law: A Factorized Combinatorial Framework

Dec 13, 2025

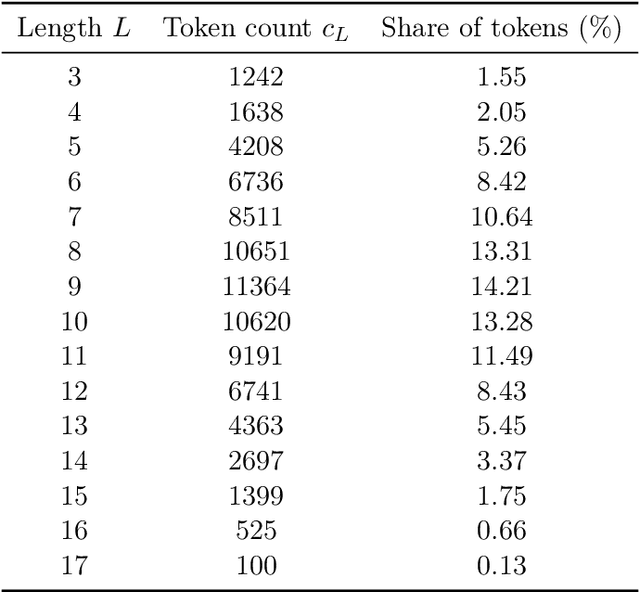

Abstract: We present a simple structure-based model of how words are formed from morphemes. The model explains two major empirical facts: the typical distribution of word lengths and the appearance of Zipf-like rank-frequency curves. In contrast to classical explanations based on random text or communication efficiency, our approach uses only the combinatorial organization of prefixes, roots, suffixes and inflections. In this Morphemic Combinatorial Word Model, a word is created by activating several positional slots. Each slot turns on with a certain probability and selects one morpheme from its inventory. Morphemes are treated as stable building blocks that regularly appear in word formation and have characteristic positions. This mechanism produces realistic word-length patterns with a concentrated middle zone and a thin long tail, closely matching real languages. Simulations with synthetic morpheme inventories also generate rank-frequency curves with Zipf-like exponents around 1.1-1.4, similar to English, Russian and Romance languages. The key result is that Zipf-like behavior can emerge without meaning, communication pressure or optimization principles. The internal structure of morphology alone, combined with probabilistic activation of slots, is sufficient to create the robust statistical patterns observed across languages.
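The slot mechanism described in the abstract can be illustrated with a minimal Python sketch. It is not the author's implementation: the slot order, the activation probabilities, the inventory sizes, the 1-4 letter morpheme lengths, and the uniform choice within each inventory are all assumptions made for illustration, and the function names (`build_inventories`, `generate_word`, `simulate`, `rank_frequency`, `length_distribution`) are hypothetical.

```python
import random
import string
from collections import Counter

# Hypothetical slots in positional order (prefix, root, suffix, inflection).
# Each entry is (activation probability, inventory size); values are illustrative only.
SLOT_SPECS = [(0.3, 20), (1.0, 200), (0.5, 30), (0.6, 10)]


def build_inventories(rng):
    """Create a synthetic inventory of random 1-4 letter morphemes for each slot."""
    inventories = []
    for prob, size in SLOT_SPECS:
        morphemes = [
            "".join(rng.choice(string.ascii_lowercase) for _ in range(rng.randint(1, 4)))
            for _ in range(size)
        ]
        inventories.append((prob, morphemes))
    return inventories


def generate_word(inventories, rng):
    """Activate each slot with its probability and pick one morpheme uniformly (an assumption)."""
    parts = []
    for prob, morphemes in inventories:
        if rng.random() < prob:  # the root slot has prob 1.0, so it always fires
            parts.append(rng.choice(morphemes))
    return "".join(parts)


def simulate(n_words, seed=0):
    """Generate n_words words from freshly built synthetic inventories."""
    rng = random.Random(seed)
    inventories = build_inventories(rng)
    return [generate_word(inventories, rng) for _ in range(n_words)]


def rank_frequency(words):
    """Return word counts sorted from most to least frequent."""
    return [count for _, count in Counter(words).most_common()]


def length_distribution(words):
    """Return a Counter mapping word length (in letters) to number of words."""
    return Counter(len(word) for word in words)


if __name__ == "__main__":
    words = simulate(10_000)
    print("top counts:", rank_frequency(words)[:10])
    print("length histogram:", sorted(length_distribution(words).items()))
```

Plotting the output of `rank_frequency` on log-log axes is the usual way to inspect whether a curve looks Zipf-like. This sketch makes no claim to reproduce the exponents reported in the abstract, since those depend on inventory and probability choices not given here.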

MissionGPT: Mission Planner for Mobile Robot based on Robotics Transformer Model

Nov 07, 2024

Abstract: This paper presents a novel approach to building mission planners based on neural networks with Transformer architecture and Large Language Models (LLMs). This approach demonstrates that a task can be set for a mobile robot and executed successfully without the use of perception algorithms, based only on the data coming from the camera. In this work, a success rate of more than 50% was obtained for one of the basic actions for mobile robots. The proposed approach is of practical importance in the field of warehouse logistics robots, as in the future it may eliminate the need for markings, LiDARs, beacons and other tools for robot orientation in space. In conclusion, this approach can be scaled for any type of robot and for any number of robots.

DogSurf: Quadruped Robot Capable of GRU-based Surface Recognition for Blind Person Navigation

Feb 05, 2024

Abstract: This paper introduces DogSurf, a new approach to using quadruped robots to help visually impaired people navigate in the real world. The presented method allows the quadruped robot to detect slippery surfaces, and to use audio and haptic feedback to inform the user when to stop. A state-of-the-art GRU-based neural network architecture with a mean accuracy of 99.925% was proposed for the task of multiclass surface classification for quadruped robots. A dataset was collected on a Unitree Go1 Edu robot. The dataset and code have been posted to the public domain.

CognitiveDog: Large Multimodal Model Based System to Translate Vision and Language into Action of Quadruped Robot

Jan 17, 2024

Abstract: This paper introduces CognitiveDog, a pioneering quadruped robot with a Large Multi-modal Model (LMM) that is capable of not only communicating with humans verbally but also physically interacting with the environment through object manipulation. The system was realized on a Unitree Go1 robot dog equipped with a custom gripper and demonstrated autonomous decision-making capabilities, independently determining the most appropriate actions and interactions with various objects to fulfill user-defined tasks. These tasks do not necessarily include direct instructions, challenging the robot to comprehend and execute them based on natural language input and environmental cues. The paper describes the system, the dataset characteristics, and the software architecture. Key to this development is the robot's proficiency in navigating space using Visual-SLAM, effectively manipulating and transporting objects, and providing insightful natural language commentary during task execution. Experimental results highlight the robot's advanced task comprehension and adaptability, underscoring its potential in real-world applications. The dataset used to fine-tune the robot-dog behavior generation model is provided at the following link: huggingface.co/datasets/ArtemLykov/CognitiveDog_dataset

LLM-MARS: Large Language Model for Behavior Tree Generation and NLP-enhanced Dialogue in Multi-Agent Robot Systems

Dec 14, 2023

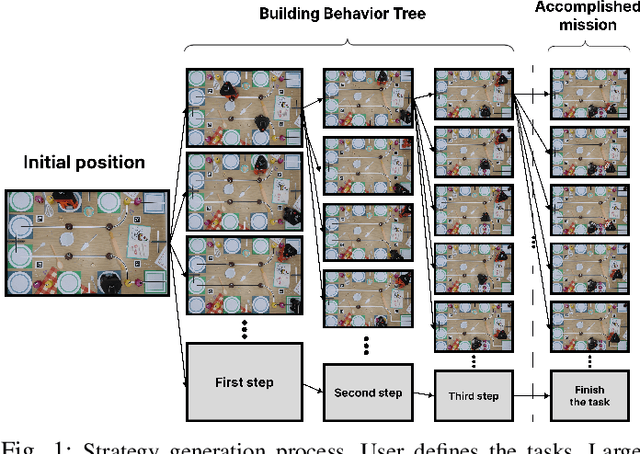

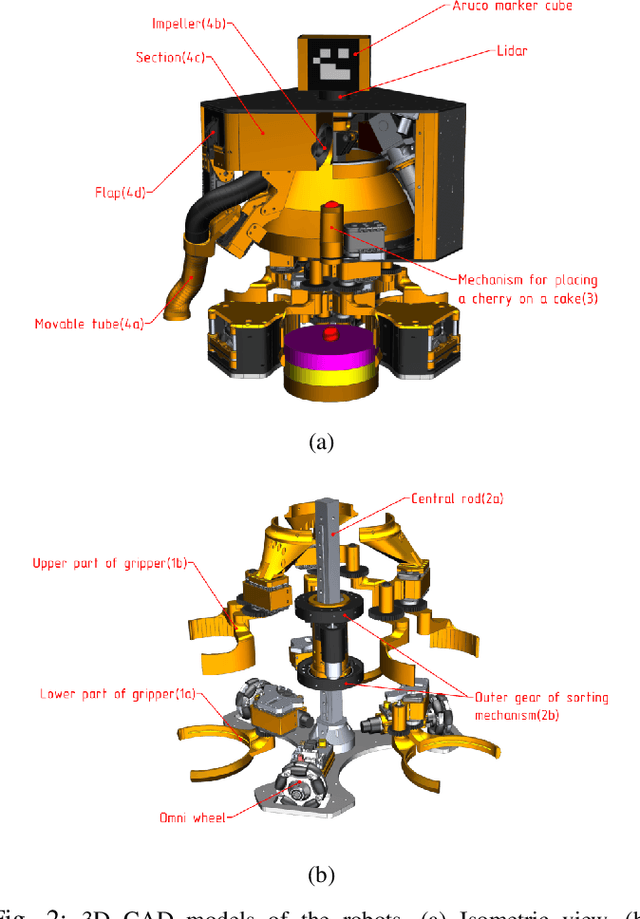

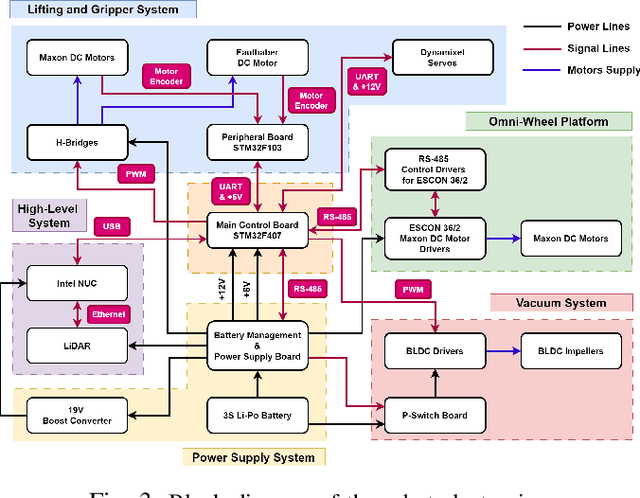

Abstract: This paper introduces LLM-MARS, the first technology that utilizes a Large Language Model based Artificial Intelligence for Multi-Agent Robot Systems. LLM-MARS enables dynamic dialogues between humans and robots, allowing the latter to generate behavior based on operator commands and provide informative answers to questions about their actions. LLM-MARS is built on a transformer-based Large Language Model, fine-tuned from the Falcon 7B model. We employ a multimodal approach using LoRA adapters for different tasks. The first LoRA adapter was developed by fine-tuning the base model on examples of Behavior Trees and their corresponding commands. The second LoRA adapter was developed by fine-tuning on question-answering examples. Practical trials on a multi-agent system of two robots within the Eurobot 2023 game rules demonstrate promising results. The robots achieve an average task execution accuracy of 79.28% in compound commands. With commands containing up to two tasks, accuracy exceeded 90%. Evaluation confirms that the system's answers to operators' questions exhibit high accuracy, relevance, and informativeness. LLM-MARS and similar multi-agent robotic systems hold significant potential to revolutionize logistics, enabling autonomous exploration missions and advancing Industry 5.0.
