Virginia Morini

Y Social: an LLM-powered Social Media Digital Twin

Aug 01, 2024

Abstract: In this paper we introduce Y, a new-generation digital twin designed to replicate an online social media platform. Digital twins are virtual replicas of physical systems that allow for advanced analyses and experimentation. In the case of social media, a digital twin such as Y provides a powerful tool for researchers to simulate and understand complex online interactions. Y leverages state-of-the-art Large Language Models (LLMs) to replicate sophisticated agent behaviors, enabling accurate simulations of user interactions, content dissemination, and network dynamics. By integrating these aspects, Y offers valuable insights into user engagement, information spread, and the impact of platform policies. Moreover, the integration of LLMs allows Y to generate nuanced textual content and predict user responses, facilitating the study of emergent phenomena in online environments. To better characterize the proposed digital twin, in this paper we describe the rationale behind its implementation, provide examples of the analyses that can be performed on the data it generates, and discuss its relevance for multidisciplinary research.

A survey on the impact of AI-based recommenders on human behaviours: methodologies, outcomes and future directions

Jun 29, 2024

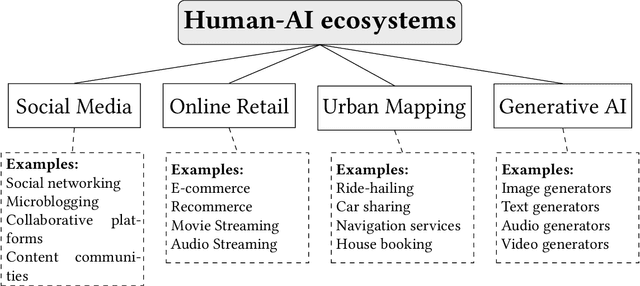

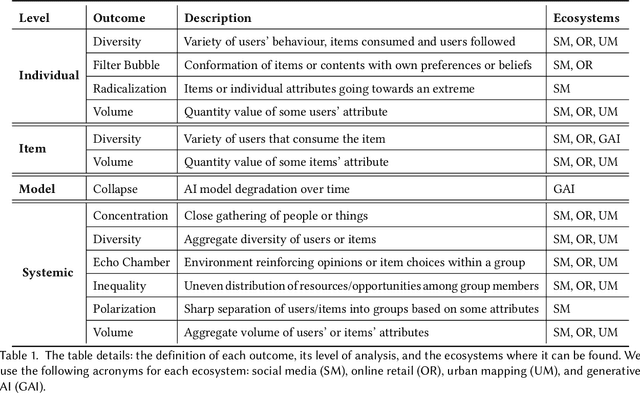

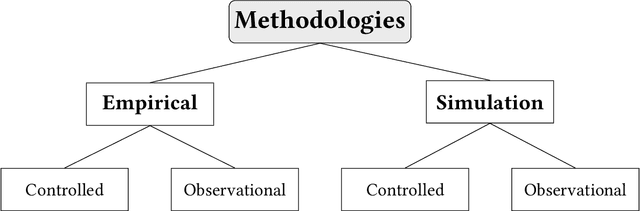

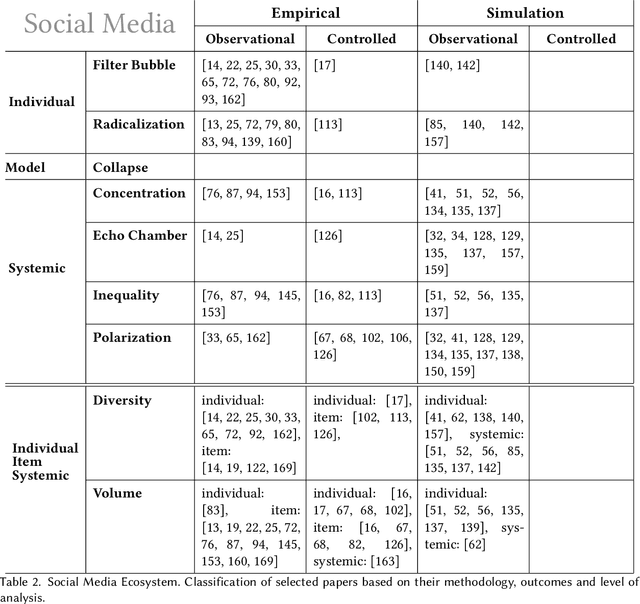

Abstract: Recommendation systems and assistants (in short, recommenders) are ubiquitous in online platforms and influence most actions of our day-to-day lives, suggesting items or providing solutions based on users' preferences or requests. This survey analyses the impact of recommenders in four human-AI ecosystems: social media, online retail, urban mapping and generative AI ecosystems. Its scope is to systematise a fast-growing field in which terminologies employed to classify methodologies and outcomes are fragmented and unsystematic. We follow the customary steps of qualitative systematic review, gathering 144 articles from different disciplines to develop a parsimonious taxonomy of: methodologies employed (empirical, simulation, observational, controlled), outcomes observed (concentration, model collapse, diversity, echo chamber, filter bubble, inequality, polarisation, radicalisation, volume), and their level of analysis (individual, item, model, and systemic). We systematically discuss all findings of our survey substantively and methodologically, also highlighting potential avenues for future research. This survey is addressed to scholars and practitioners interested in different human-AI ecosystems, policymakers and institutional stakeholders who want to understand better the measurable outcomes of recommenders, and tech companies who wish to obtain a systematic view of the impact of their recommenders.

From Perils to Possibilities: Understanding how Human (and AI) Biases affect Online Fora

Mar 21, 2024

Abstract: Social media platforms are online fora where users engage in discussions, share content, and build connections. This review explores the dynamics of social interactions, user-generated content, and biases within the context of social media analysis (analyzing works that use the tools offered by complex network analysis and natural language processing) through the lens of three key points of view: online debates, online support, and human-AI interactions. On the one hand, we delineate the phenomenon of online debates, where polarization, misinformation, and echo chamber formation often proliferate, driven by algorithmic biases and extreme mechanisms of homophily. On the other hand, we explore the emergence of online support groups through users' self-disclosure and social support mechanisms. Online debates and support mechanisms present a duality of both perils and possibilities within social media: perils of segregated communities and polarized debates, and possibilities of empathy narratives and self-help groups. This dichotomy also extends to a third perspective: users' reliance on AI-generated content, such as that produced by Large Language Models, which can manifest both human biases hidden in training sets and non-human biases that emerge from their artificial neural architectures. Analyzing interdisciplinary approaches, we aim to deepen the understanding of the complex interplay between social interactions, user-generated content, and biases within the realm of social media ecosystems.

Cognitive network science quantifies feelings expressed in suicide letters and Reddit mental health communities

Oct 29, 2021

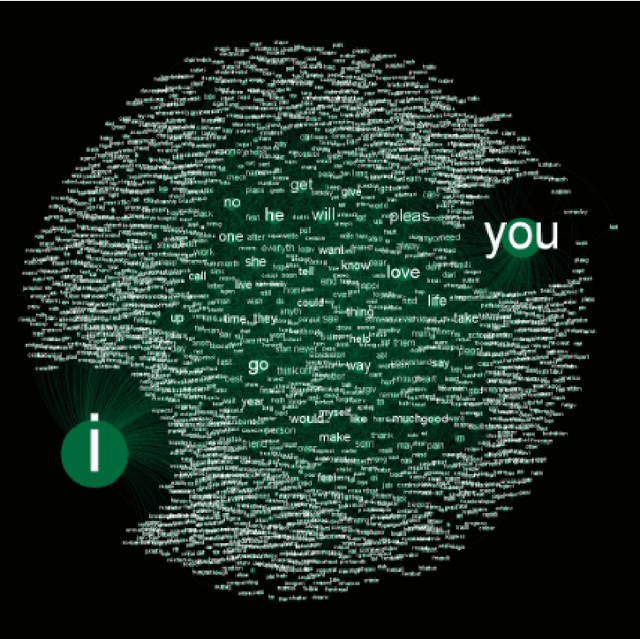

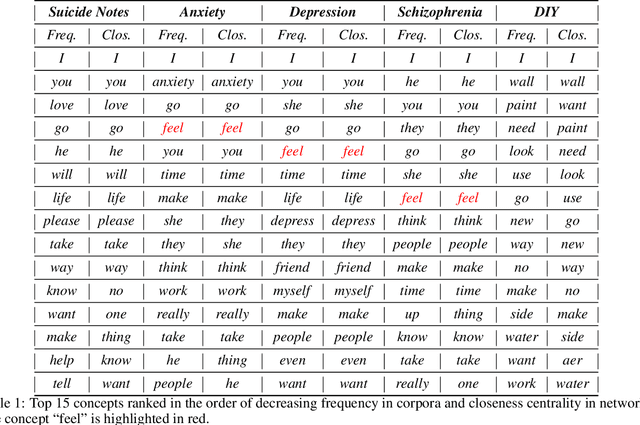

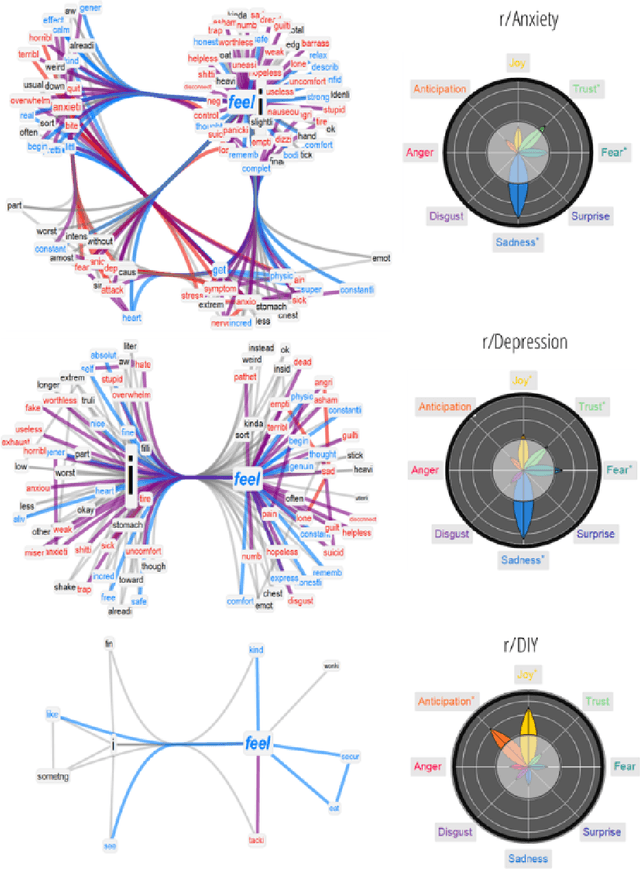

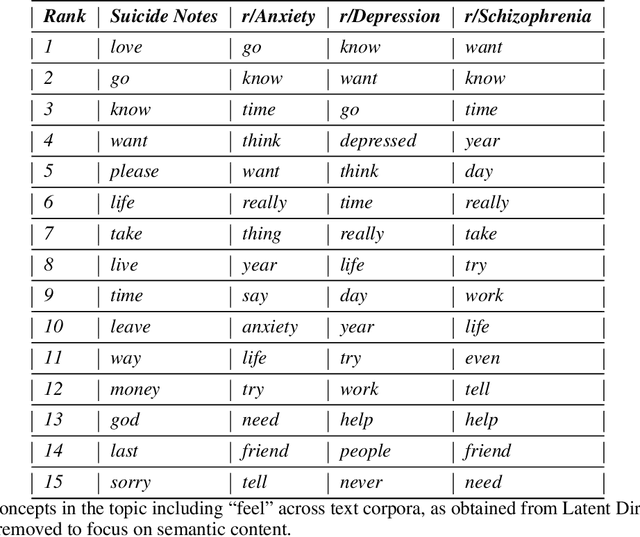

Abstract: Writing messages is key to expressing feelings. This study adopts cognitive network science to reconstruct how individuals report their feelings in clinical narratives like suicide notes or mental health posts. We achieve this by reconstructing syntactic/semantic associations between concepts in texts as co-occurrences enriched with affective data. We transform 142 suicide notes and 77,000 Reddit posts from the r/anxiety, r/depression, r/schizophrenia, and r/do-it-your-own (r/DIY) forums into 5 cognitive networks, each one expressing meanings and emotions as reported by authors. These networks reconstruct the semantic frames surrounding 'feel', enabling a quantification of prominent associations and emotions focused around feelings. We find strong feelings of sadness across all clinical Reddit boards, added to fear in r/depression, and replaced by joy/anticipation in r/DIY. Semantic communities and topic modelling both highlight key narrative topics of 'regret', 'unhealthy lifestyle' and 'low mental well-being'. Importantly, negative associations and emotions co-existed with trustful/positive language, focused on 'getting better'. This emotional polarisation provides quantitative evidence that online clinical boards possess a complex structure, where users mix both positive and negative outlooks. This dichotomy is absent in the r/DIY reference board and in suicide notes, where negative emotional associations about regret and pain persist but are overwhelmed by positive jargon addressing loved ones. Our quantitative comparisons provide strong evidence that suicide notes encapsulate different ways of expressing feelings compared to online Reddit boards, the latter acting more like personal diaries and relief valves. Our findings provide an interpretable, quantitative aid for supporting psychological inquiries of human feelings in digital and clinical settings.
