Vidyasagar Sadhu

Real-Time Navigation for Autonomous Aerial Vehicles Using Video

Apr 01, 2025

Abstract: Most applications in autonomous navigation using mounted cameras rely on the construction and processing of geometric 3D point clouds, which is an expensive process. There is, however, a simpler way to make a space navigable quickly: using semantic information (e.g., traffic signs) to guide the agent. Detecting and acting on semantic information nonetheless involves Computer Vision (CV) algorithms such as object detection, which are themselves demanding for agents such as aerial drones with limited onboard resources. To solve this problem, we introduce a novel Markov Decision Process (MDP) framework to reduce the workload of these CV approaches. We apply our proposed framework to both feature-based and neural-network-based object-detection tasks, using open-loop and closed-loop simulations as well as hardware-in-the-loop emulations. These holistic tests show significant benefits in energy consumption and speed with only a limited loss in accuracy compared to models based on static features and neural networks.

ToMCAT: Theory-of-Mind for Cooperative Agents in Teams via Multiagent Diffusion Policies

Feb 25, 2025

Abstract: In this paper we present ToMCAT (Theory-of-Mind for Cooperative Agents in Teams), a new framework for generating ToM-conditioned trajectories. It combines a meta-learning mechanism that performs ToM reasoning over teammates' underlying goals and future behavior with a multiagent denoising-diffusion model that generates plans for an agent and its teammates, conditioned on both the agent's goals and its teammates' characteristics as computed via ToM. We implemented an online planning system that dynamically samples new trajectories (replans) from the diffusion model whenever it detects a divergence between a previously generated plan and the current state of the world. We conducted several experiments using ToMCAT in a simulated cooking domain. Our results highlight the importance of the dynamic replanning mechanism in reducing the usage of resources without sacrificing team performance. We also show that recent observations about the world and teammates' behavior, collected by an agent over the course of an episode and combined with ToM inferences, are crucial for generating team-aware plans that adapt dynamically to teammates, especially when no prior information is provided about them.

Automatic Measures for Evaluating Generative Design Methods for Architects

Mar 20, 2023

Abstract: The recent explosion of high-quality image-to-image methods has prompted interest in applying them to artistic and design tasks. Of interest to architects is the use of these methods to generate design proposals from conceptual sketches, usually hand-drawn sketches that are quickly developed and can embody a design intent. More specifically, instantiating a sketch into a visual that can be used to elicit client feedback is typically a time-consuming task, and being able to speed up this iteration time is important. While the body of work in generative methods has been impressive, there has been a mismatch between the quality measures used to evaluate the outputs of these systems and the actual expectations of architects. In particular, most recent image-based works place an emphasis on the realism of generated images. While important, this is only one of several criteria architects look for. In this work, we describe the expectations architects have for design proposals from conceptual sketches, and identify corresponding automated metrics from the literature. We then evaluate several image-to-image generative methods that may address these criteria and examine their performance across these metrics. From these results, we identify certain challenges with hand-drawn conceptual sketches and describe possible future avenues of investigation to address them.

Sensor Control for Information Gain in Dynamic, Sparse and Partially Observed Environments

Nov 03, 2022

Abstract: We present an approach for autonomous sensor control for information gathering in partially observable, dynamic and sparsely sampled environments. We consider the problem of controlling a sensor that makes partial observations in some space of interest such that it maximizes information about entities present in that space. We describe our approach for the task of Radio-Frequency (RF) spectrum monitoring, where the goal is to search for and track unknown, dynamic signals in the environment. To this end, we develop and demonstrate enhancements of the Deep Anticipatory Network (DAN) Reinforcement Learning (RL) framework that uses prediction and information-gain rewards to learn information-maximization policies in reward-sparse environments. We also extend this problem to situations in which taking samples from the actual RF spectrum/field is limited and expensive, and propose a model-based version of the original RL algorithm that fine-tunes the controller using a model of the environment that is iteratively improved from limited samples taken from the RF field. Our approach was thoroughly validated by testing against baseline expert-designed controllers in simulated RF environments of different complexity, using different reward schemes and evaluation metrics. The results show that our system outperforms the standard DAN architecture and is more flexible and robust than several hand-coded agents. We also show that our approach is adaptable to non-stationary environments where the agent has to learn to adapt to changes from the emitting sources.
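The notion of an information-gain reward mentioned in the abstract above can be illustrated with a small, generic sketch. It is not the DAN framework or the authors' reward. It assumes a single emitter hidden in one of several frequency bands, a sensor that inspects one band per step, no false alarms, and a hypothetical detection probability `p_detect`. The function names are illustrative only. The reward for sensing a band is taken to be the expected reduction in Shannon entropy of the belief over bands.

```python
import math


def entropy(p):
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def posterior_after_miss(belief, band, p_detect):
    """Bayes update of the belief after sensing `band` and detecting nothing."""
    unnorm = [b * ((1.0 - p_detect) if i == band else 1.0)
              for i, b in enumerate(belief)]
    z = sum(unnorm)
    return [u / z for u in unnorm]


def expected_information_gain(belief, band, p_detect=1.0):
    """Expected entropy reduction from sensing `band` (assumes no false alarms)."""
    p_hit = belief[band] * p_detect
    p_miss = 1.0 - p_hit
    # A hit pins the emitter to `band`, so the posterior entropy is zero.
    expected_posterior_entropy = 0.0
    if p_miss > 0:
        miss_posterior = posterior_after_miss(belief, band, p_detect)
        expected_posterior_entropy = p_miss * entropy(miss_posterior)
    return entropy(belief) - expected_posterior_entropy


def best_band(belief, p_detect=1.0):
    """Greedy choice: the band with the largest expected information gain."""
    gains = [expected_information_gain(belief, k, p_detect)
             for k in range(len(belief))]
    return max(range(len(belief)), key=gains.__getitem__)
```

A greedy controller would call `best_band` on its current belief at each step. A learned policy, as in the abstract, would instead be trained with such a quantity as part of its reward.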

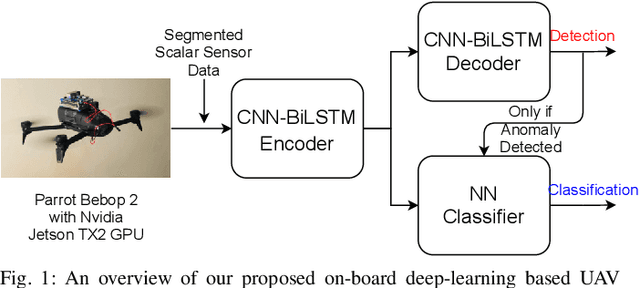

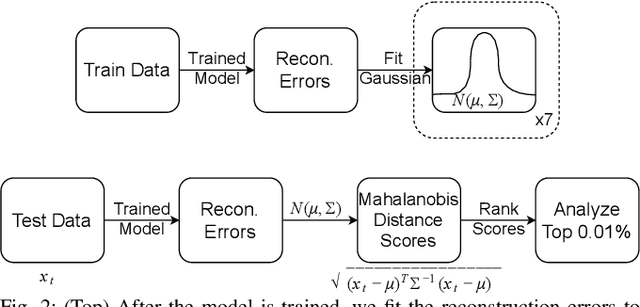

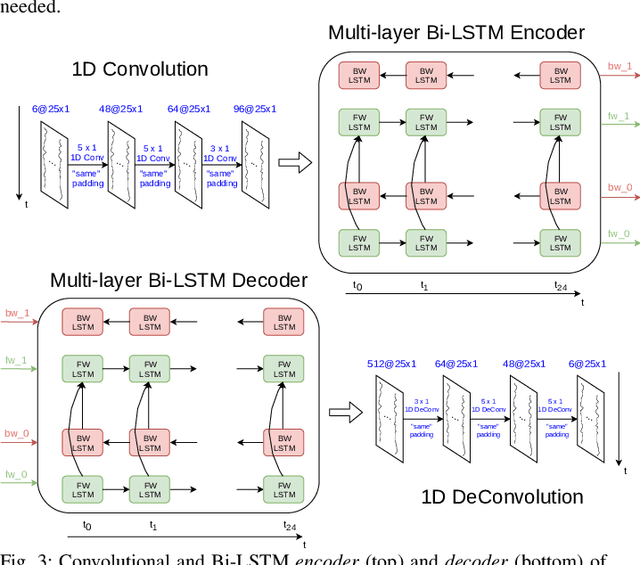

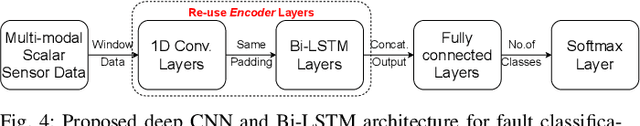

On-board Deep-learning-based Unmanned Aerial Vehicle Fault Cause Detection and Identification

Apr 03, 2020

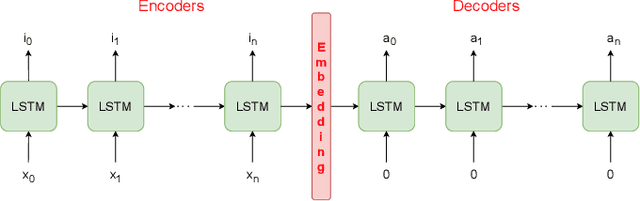

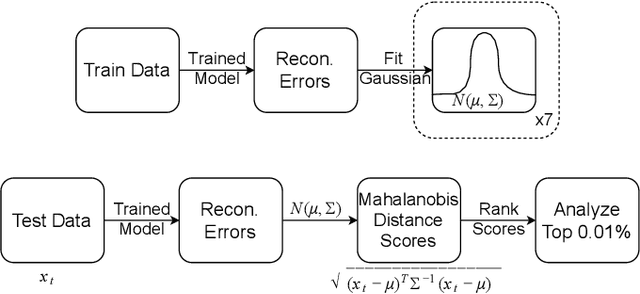

Abstract: With the increase in use of Unmanned Aerial Vehicles (UAVs)/drones, it is important to detect and identify causes of failure in real time, both for proper recovery from a potential crash-like scenario and for post-incident forensic analysis. The cause of a crash could be a fault in the sensor/actuator system, physical damage or attack, or a cyber attack on the drone's software. In this paper, we propose novel architectures based on deep Convolutional and Long Short-Term Memory Neural Networks (CNNs and LSTMs) to detect (via an Autoencoder) and classify drone mis-operations based on sensor data. The proposed architectures are able to learn high-level features automatically from the raw sensor data and to learn the spatial and temporal dynamics in the sensor data. We validate the proposed deep-learning architectures via simulations and experiments on a real drone. Empirical results show that our solution is able to detect drone mis-operations with over 90% accuracy and to classify various types of mis-operations with about 99% accuracy on simulation data and up to 88% accuracy on experimental data.

Deep Multi-Task Learning for Anomalous Driving Detection Using CAN Bus Scalar Sensor Data

Jun 28, 2019

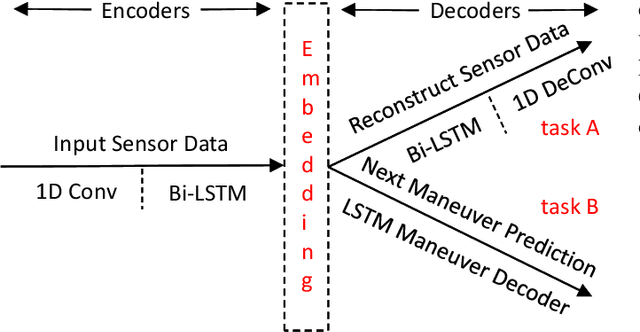

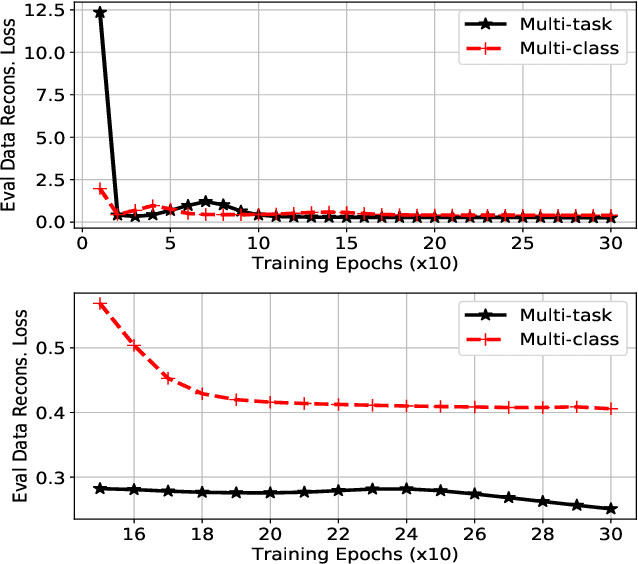

Abstract: Corner cases are the main bottlenecks when applying Artificial Intelligence (AI) systems to safety-critical applications. An AI system should be intelligent enough to detect such situations so that system developers can prepare for subsequent planning. In this paper, we propose semi-supervised anomaly detection considering the imbalance of normal situations. In particular, driving data consists of multiple positive/normal situations (e.g., right turn, going straight), some of which (e.g., U-turn) could be as rare as anomalous situations. Existing machine-learning-based anomaly detection approaches do not fare sufficiently well when applied to such imbalanced data. We therefore present a novel multi-task-learning-based approach that leverages domain knowledge (maneuver labels) for anomaly detection in driving data. We evaluate the proposed approach both quantitatively and qualitatively on 150 hours of real-world driving data and show improved performance over baseline approaches.

* IROS 2019, 8 pages

HCFContext: Smartphone Context Inference via Sequential History-based Collaborative Filtering

Apr 29, 2019

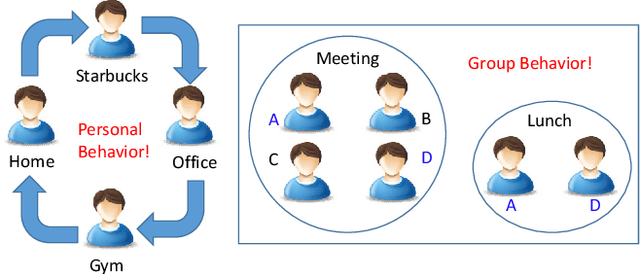

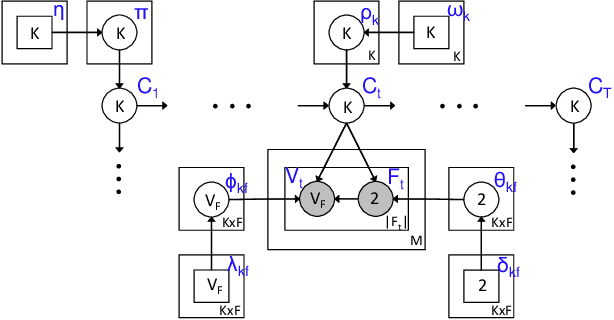

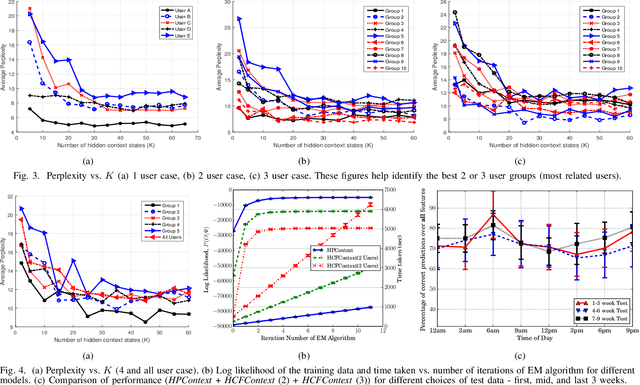

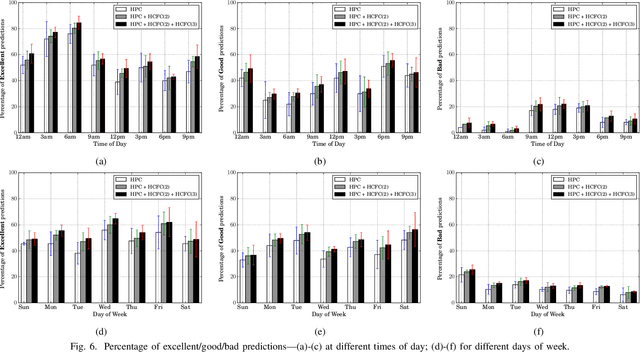

Abstract: Mobile context determination is an important step for many context-aware services such as location-based services, enterprise policy enforcement, and building or room occupancy detection for power or HVAC operation. Especially in enterprise scenarios where policies (e.g., attending a confidential meeting only when the user is in "Location X") are defined based on mobile context, it is paramount to verify the accuracy of the mobile context. To this end, two stochastic models based on the theory of Hidden Markov Models (HMMs) are proposed to obtain mobile context: a personalized model (HPContext) and a collaborative filtering model (HCFContext). The former predicts the current context using the sequential history of the user's past context observations. The latter enhances HPContext with collaborative filtering features, which enable it to predict the current context of the primary user based on the context observations of users related to the primary user, e.g., colleagues on the same team, gym friends, or family members. Each of the proposed models can also be used to enhance or complement the context obtained from sensors. Furthermore, since privacy is a concern in collaborative filtering, a privacy-preserving method based on the concepts of homomorphic encryption is proposed to derive the HCFContext model parameters. Finally, these models are thoroughly validated on a real-life dataset.

* Mobile context, collaborative filtering, privacy-preserving, personalized model, sensors, location, prediction, hidden markov models, google now, apple siri, cortana, alexa
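To make the idea of predicting the current context from a sequential history of observations concrete, here is a minimal sketch of the standard HMM forward (filtering) recursion. It is a textbook illustration, not the HPContext or HCFContext model itself. The function name and the transition and emission matrices a caller would pass in are assumptions for illustration, not parameters from the paper.

```python
def forward_filter(prior, transition, emission, observations):
    """Return P(current hidden context | all observations so far).

    prior[i]          : probability of starting in context i
    transition[i][j]  : probability of moving from context i to context j
    emission[j][o]    : probability of observing symbol o in context j
    observations      : sequence of observed symbol indices
    """
    n = len(prior)
    belief = list(prior)
    for t, obs in enumerate(observations):
        if t > 0:
            # Predict step: propagate the belief through the transition model.
            belief = [sum(belief[i] * transition[i][j] for i in range(n))
                      for j in range(n)]
        # Update step: weight by the likelihood of the new observation.
        belief = [belief[j] * emission[j][obs] for j in range(n)]
        z = sum(belief)
        belief = [b / z for b in belief]
    return belief
```

With two hypothetical contexts (say "office" and "home") and noisy observations of each, calling `forward_filter` on the observation history yields a posterior over the current context. A collaborative variant would additionally condition on related users' observations, which this sketch does not attempt.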

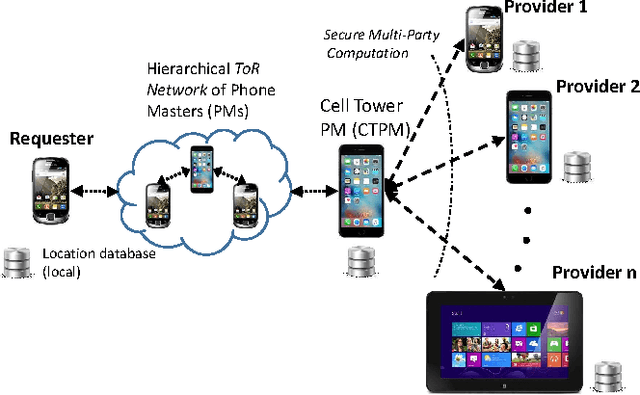

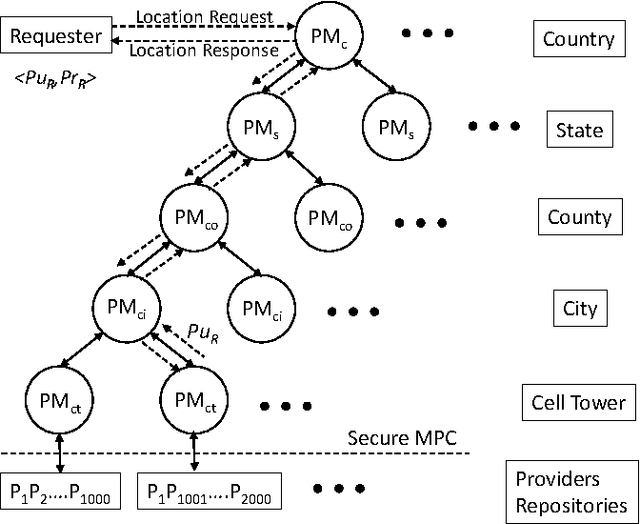

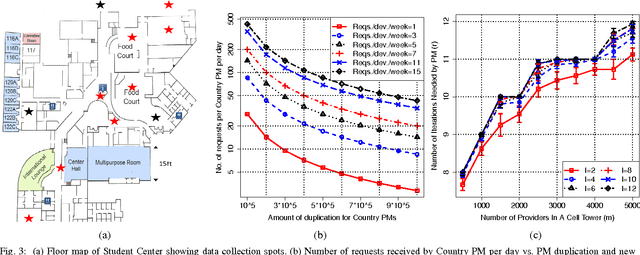

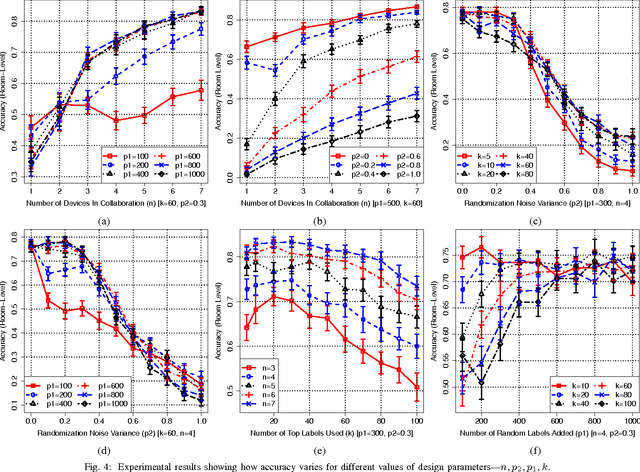

CollabLoc: Privacy-Preserving Multi-Modal Localization via Collaborative Information Fusion

Sep 29, 2017

Abstract: Mobile phones provide an excellent opportunity for building context-aware applications. In particular, location-based services are important context-aware services that are increasingly used for enforcing security policies, for supporting indoor room navigation, and for providing personalized assistance. However, a major problem remains unaddressed: the lack of solutions that work across buildings without additional infrastructure while also accounting for privacy and reliability needs. In this paper, a privacy-preserving, multi-modal, cross-building, collaborative localization platform is proposed based on Wi-Fi RSSI (existing infrastructure), cellular RSSI, and sound and light levels. Its main application is room-level localization, though sub-room-level granularity is possible. Privacy is inherently built into the solution through onion routing and perturbation/randomization techniques, and the solution exploits the idea of weighted collaboration to increase reliability and to limit the effect of noisy devices (due to sensor noise or privacy perturbation). The proposed solution has been analyzed in terms of privacy, accuracy, optimum parameters, and other overheads on location data collected at multiple indoor and outdoor locations using an Android app.
