Vera Chung

FinCast: A Foundation Model for Financial Time-Series Forecasting

Aug 27, 2025

Abstract:Financial time-series forecasting is critical for maintaining economic stability, guiding informed policymaking, and promoting sustainable investment practices. However, it remains challenging due to various underlying pattern shifts. These shifts arise primarily from three sources: temporal non-stationarity (distribution changes over time), multi-domain diversity (distinct patterns across financial domains such as stocks, commodities, and futures), and varying temporal resolutions (patterns differing across per-second, hourly, daily, or weekly indicators). While recent deep learning methods attempt to address these complexities, they frequently suffer from overfitting and typically require extensive domain-specific fine-tuning. To overcome these limitations, we introduce FinCast, the first foundation model specifically designed for financial time-series forecasting, trained on large-scale financial datasets. Remarkably, FinCast exhibits robust zero-shot performance, effectively capturing diverse patterns without domain-specific fine-tuning. Comprehensive empirical and qualitative evaluations demonstrate that FinCast surpasses existing state-of-the-art methods, highlighting its strong generalization capabilities.

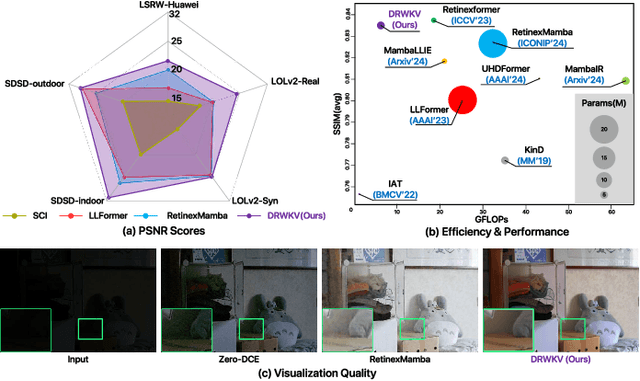

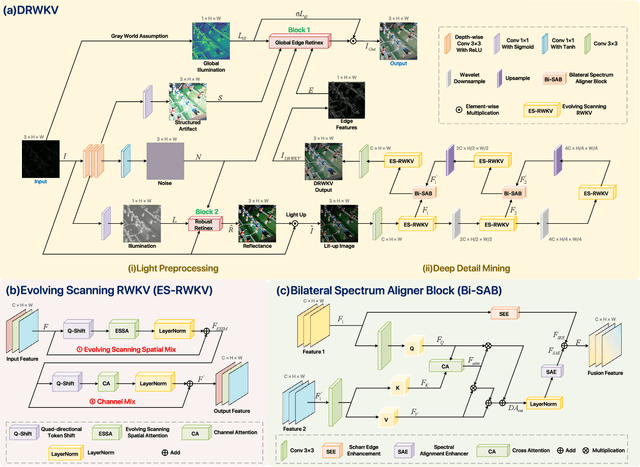

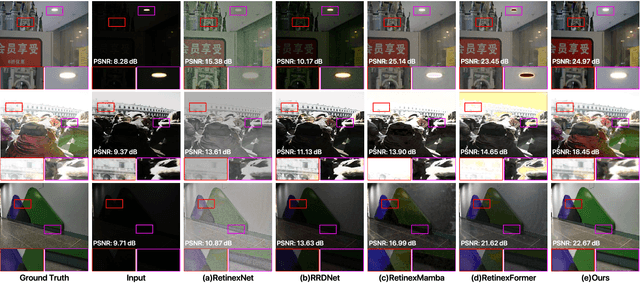

DRWKV: Focusing on Object Edges for Low-Light Image Enhancement

Jul 24, 2025

Abstract:Low-light image enhancement remains a challenging task, particularly in preserving object edge continuity and fine structural details under extreme illumination degradation. In this paper, we propose a novel model, DRWKV (Detailed Receptance Weighted Key Value), which integrates our proposed Global Edge Retinex (GER) theory, enabling effective decoupling of illumination and edge structures for enhanced edge fidelity. Secondly, we introduce Evolving WKV Attention, a spiral-scanning mechanism that captures spatial edge continuity and models irregular structures more effectively. Thirdly, we design the Bilateral Spectrum Aligner (Bi-SAB) and a tailored MS2-Loss to jointly align luminance and chrominance features, improving visual naturalness and mitigating artifacts. Extensive experiments on five LLIE benchmarks demonstrate that DRWKV achieves leading performance in PSNR, SSIM, and NIQE while maintaining low computational complexity. Furthermore, DRWKV enhances downstream performance in low-light multi-object tracking tasks, validating its generalization capabilities.

MGDFIS: Multi-scale Global-detail Feature Integration Strategy for Small Object Detection

Jun 15, 2025

Abstract:Small object detection in UAV imagery is crucial for applications such as search-and-rescue, traffic monitoring, and environmental surveillance, but it is hampered by tiny object size, low signal-to-noise ratios, and limited feature extraction. Existing multi-scale fusion methods help, but add computational burden and blur fine details, making small object detection in cluttered scenes difficult. To overcome these challenges, we propose the Multi-scale Global-detail Feature Integration Strategy (MGDFIS), a unified fusion framework that tightly couples global context with local detail to boost detection performance while maintaining efficiency. MGDFIS comprises three synergistic modules: the FusionLock-TSS Attention Module, which marries token-statistics self-attention with DynamicTanh normalization to highlight spectral and spatial cues at minimal cost; the Global-detail Integration Module, which fuses multi-scale context via directional convolution and parallel attention while preserving subtle shape and texture variations; and the Dynamic Pixel Attention Module, which generates pixel-wise weighting maps to rebalance uneven foreground and background distributions and sharpen responses to true object regions. Extensive experiments on the VisDrone benchmark demonstrate that MGDFIS consistently outperforms state-of-the-art methods across diverse backbone architectures and detection frameworks, achieving superior precision and recall with low inference time. By striking an optimal balance between accuracy and resource usage, MGDFIS provides a practical solution for small-object detection on resource-constrained UAV platforms.

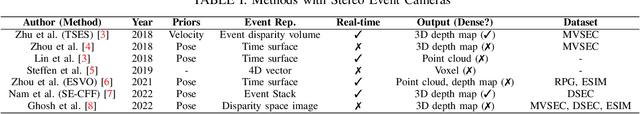

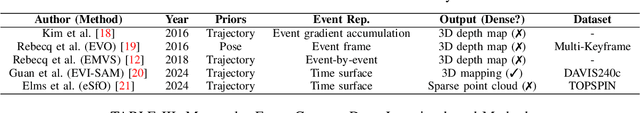

A Survey of 3D Reconstruction with Event Cameras: From Event-based Geometry to Neural 3D Rendering

May 13, 2025

Abstract:Event cameras have emerged as promising sensors for 3D reconstruction due to their ability to capture per-pixel brightness changes asynchronously. Unlike conventional frame-based cameras, they produce sparse and temporally rich data streams, which enable more accurate 3D reconstruction and open up the possibility of performing reconstruction in extreme environments such as high-speed motion, low light, or high dynamic range scenes. In this survey, we provide the first comprehensive review focused exclusively on 3D reconstruction using event cameras. The survey categorises existing works into three major types based on input modality - stereo, monocular, and multimodal systems, and further classifies them by reconstruction approach, including geometry-based, deep learning-based, and recent neural rendering techniques such as Neural Radiance Fields and 3D Gaussian Splatting. Methods with a similar research focus were organised chronologically into the most subdivided groups. We also summarise public datasets relevant to event-based 3D reconstruction. Finally, we highlight current research limitations in data availability, evaluation, representation, and dynamic scene handling, and outline promising future research directions. This survey aims to serve as a comprehensive reference and a roadmap for future developments in event-driven 3D reconstruction.

A Survey on Event-driven 3D Reconstruction: Development under Different Categories

Mar 26, 2025

Abstract:Event cameras have gained increasing attention for 3D reconstruction due to their high temporal resolution, low latency, and high dynamic range. They capture per-pixel brightness changes asynchronously, allowing accurate reconstruction under fast motion and challenging lighting conditions. In this survey, we provide a comprehensive review of event-driven 3D reconstruction methods, including stereo, monocular, and multimodal systems. We further categorize recent developments based on geometric, learning-based, and hybrid approaches. Emerging trends, such as neural radiance fields and 3D Gaussian splatting with event data, are also covered. The related works are structured chronologically to illustrate the innovations and progression within the field. To support future research, we also highlight key research gaps and future research directions in dataset, experiment, evaluation, event representation, etc.

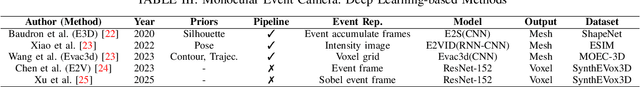

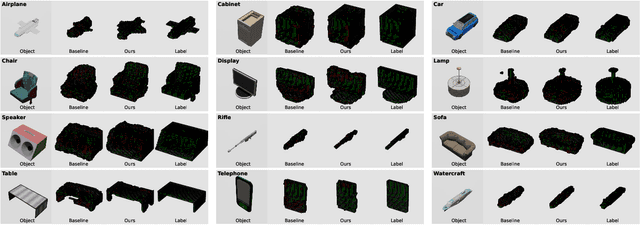

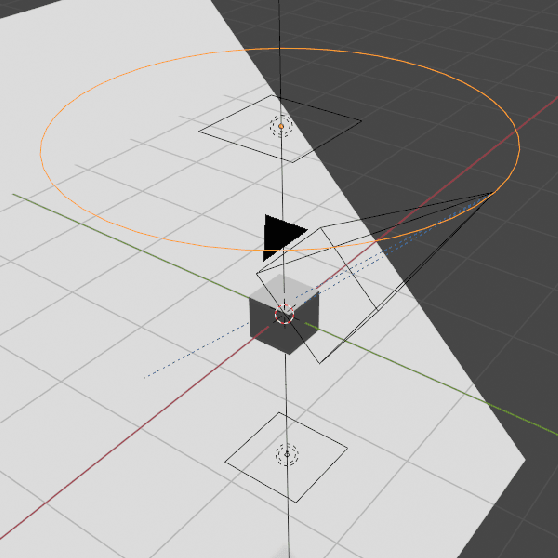

Towards End-to-End Neuromorphic Voxel-based 3D Object Reconstruction Without Physical Priors

Jan 01, 2025

Abstract:Neuromorphic cameras, also known as event cameras, are asynchronous brightness-change sensors that can capture extremely fast motion without suffering from motion blur, making them particularly promising for 3D reconstruction in extreme environments. However, existing research on 3D reconstruction using monocular neuromorphic cameras is limited, and most of the methods rely on estimating physical priors and employ complex multi-step pipelines. In this work, we propose an end-to-end method for dense voxel 3D reconstruction using neuromorphic cameras that eliminates the need to estimate physical priors. Our method incorporates a novel event representation to enhance edge features, enabling the proposed feature-enhancement model to learn more effectively. Additionally, we introduce the Optimal Binarization Threshold Selection Principle as a guideline for future related work, using the optimal reconstruction results achieved with threshold optimization as the benchmark. Our method achieves a 54.6% improvement in reconstruction accuracy compared to the baseline method.

Light Field Image Quality Assessment With Auxiliary Learning Based on Depthwise and Anglewise Separable Convolutions

Dec 10, 2024

Abstract:In multimedia broadcasting, no-reference image quality assessment (NR-IQA) is used to indicate the user-perceived quality of experience (QoE) and to support intelligent data transmission while optimizing user experience. This paper proposes an improved no-reference light field image quality assessment (NR-LFIQA) metric for future immersive media broadcasting services. First, we extend the concept of depthwise separable convolution (DSC) to the spatial domain of light field image (LFI) and introduce "light field depthwise separable convolution (LF-DSC)", which can extract the LFI's spatial features efficiently. Second, we further theoretically extend the LF-DSC to the angular space of LFI and introduce the novel concept of "light field anglewise separable convolution (LF-ASC)", which is capable of extracting both the spatial and angular features for comprehensive quality assessment with low complexity. Third, we define the spatial and angular feature estimations as auxiliary tasks in aiding the primary NR-LFIQA task by providing spatial and angular quality features as hints. To the best of our knowledge, this work is the first exploration of deep auxiliary learning with spatial-angular hints on NR-LFIQA. Experiments were conducted in mainstream LFI datasets such as Win5-LID and SMART with comparisons to the mainstream full reference IQA metrics as well as the state-of-the-art NR-LFIQA methods. The experimental results show that the proposed metric yields overall 42.86% and 45.95% smaller prediction errors than the second-best benchmarking metric in Win5-LID and SMART, respectively. In some challenging cases with particular distortion types, the proposed metric can reduce the errors significantly by more than 60%.
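As a rough illustration of why separable convolutions are described as low-complexity, the sketch below counts weights for a standard convolution and for an ordinary depthwise separable convolution (a depthwise k x k filter per channel followed by a 1 x 1 pointwise convolution). It covers only the generic DSC idea, not the LF-DSC or LF-ASC operators proposed in the paper, and the layer sizes are hypothetical values chosen for the example.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only.
k, c_in, c_out = 3, 64, 128
std = standard_conv_params(k, c_in, c_out)
dsc = depthwise_separable_params(k, c_in, c_out)
print(std, dsc, round(dsc / std, 3))  # 73728 8768 0.119
```

For these sizes the separable form needs roughly 12% of the weights of the standard convolution; the ratio works out to 1/c_out + 1/k² in general.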

Adaptively Augmented Consistency Learning: A Semi-supervised Segmentation Framework for Remote Sensing

Nov 14, 2024

Abstract:Remote sensing (RS) involves the acquisition of data about objects or areas from a distance, primarily to monitor environmental changes, manage resources, and support planning and disaster response. A significant challenge in RS segmentation is the scarcity of high-quality labeled images due to the diversity and complexity of RS images, which makes pixel-level annotation difficult and hinders the development of effective supervised segmentation algorithms. To solve this problem, we propose Adaptively Augmented Consistency Learning (AACL), a semi-supervised segmentation framework designed to enhance RS segmentation accuracy under conditions of limited labeled data. AACL extracts additional information embedded in unlabeled images through the use of Uniform Strength Augmentation (USAug) and Adaptive Cut-Mix (AdaCM). Evaluations across various RS datasets demonstrate that AACL achieves competitive performance in semi-supervised segmentation, showing up to a 20% improvement in specific categories and a 2% increase in overall performance compared to state-of-the-art frameworks.

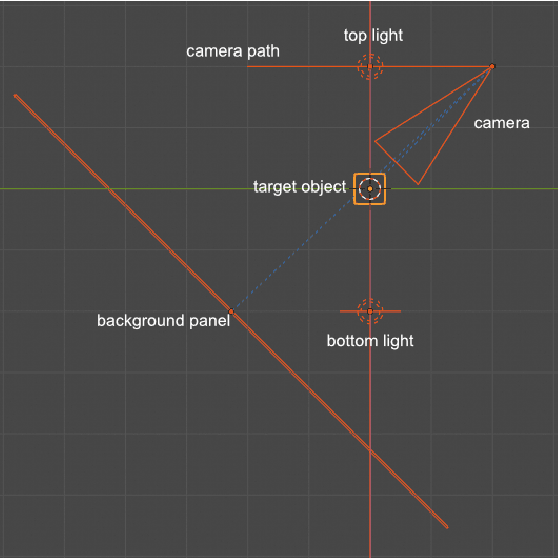

Dense Voxel 3D Reconstruction Using a Monocular Event Camera

Sep 01, 2023

Abstract:Event cameras are sensors inspired by biological systems that specialize in capturing changes in brightness. These emerging cameras offer many advantages over conventional frame-based cameras, including high dynamic range, high frame rates, and extremely low power consumption. Due to these advantages, event cameras have increasingly been adopted in various fields, such as frame interpolation, semantic segmentation, odometry, and SLAM. However, their application in 3D reconstruction for VR applications is underexplored. Previous methods in this field mainly focused on 3D reconstruction through depth map estimation. Methods that produce dense 3D reconstruction generally require multiple cameras, while methods that utilize a single event camera can only produce a semi-dense result. Other single-camera methods that can produce dense 3D reconstruction rely on creating a pipeline that either incorporates the aforementioned methods or other existing Structure from Motion (SfM) or Multi-view Stereo (MVS) methods. In this paper, we propose a novel approach for solving dense 3D reconstruction using only a single event camera. To the best of our knowledge, our work is the first attempt in this regard. Our preliminary results demonstrate that the proposed method can produce visually distinguishable dense 3D reconstructions directly without requiring pipelines like those used by existing methods. Additionally, we have created a synthetic dataset with 39,739 object scans using an event camera simulator. This dataset will help accelerate other relevant research in this field.

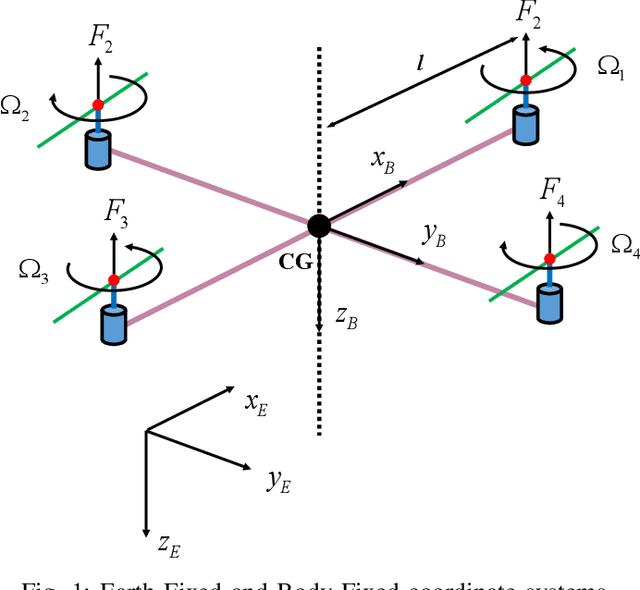

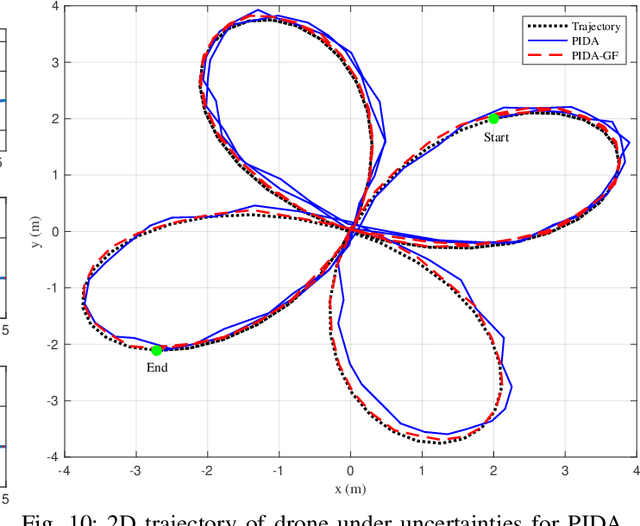

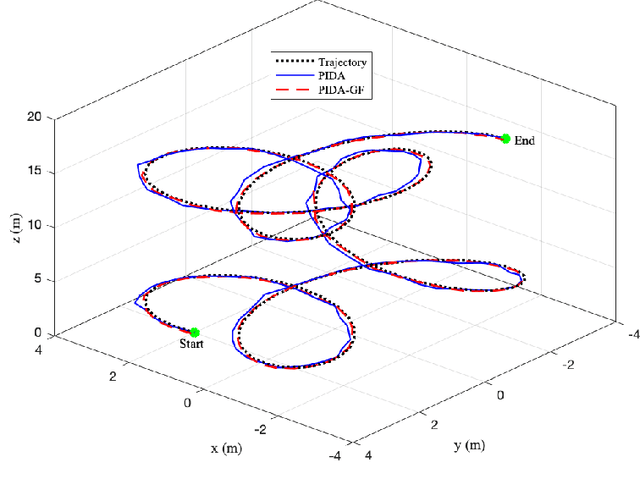

PIDA: Smooth and Stable Flight Using Stochastic Dual Simplex Algorithm and Genetic Filter

Jun 17, 2020

Abstract:This paper presents a new Proportional-Integral-Derivative-Accelerated (PIDA) control with a derivative filter to improve quadcopter flight stability in a noisy environment. The mathematical model is derived to achieve a high level of fidelity by addressing the problems of non-linearity, uncertainties, and coupling. These uncertainties and measurement noises cause instability in flight and automatic hovering. The proposed controller, combined with a heuristic Genetic Filter (GF), addresses these challenges. The proposed PIDA controller is tuned for the control objective by the Stochastic Dual Simplex Algorithm (SDSA). GF is applied to the PIDA control to estimate the observed states and parameters of quadcopters in both attitude and altitude. The simulation results show that the proposed control combined with GF has a strong ability to track the desired point in the presence of disturbances.
