Vanessa Gómez-Verdejo

Interpretable Generative and Discriminative Learning for Multimodal and Incomplete Clinical Data

Oct 10, 2025

Abstract:Real-world clinical problems are often characterized by multimodal data, usually associated with incomplete views and limited sample sizes in their cohorts, posing significant limitations for machine learning algorithms. In this work, we propose a Bayesian approach designed to efficiently handle these challenges while providing interpretable solutions. Our approach integrates (1) a generative formulation to capture cross-view relationships with a semi-supervised strategy, and (2) a discriminative task-oriented formulation to identify relevant information for specific downstream objectives. This dual generative-discriminative formulation offers both general understanding and task-specific insights; thus, it provides automatic imputation of the missing views while enabling robust inference across different data sources. The potential of this approach becomes evident when it is applied to multimodal clinical data, where our algorithm is able to capture and disentangle the complex interactions among biological, psychological, and sociodemographic modalities.

Unified Bayesian representation for high-dimensional multi-modal biomedical data for small-sample classification

Nov 11, 2024

Abstract:We present BALDUR, a novel Bayesian algorithm designed to deal with multi-modal datasets and small sample sizes in high-dimensional settings while providing explainable solutions. To do so, the proposed model combines the different data views within a common latent space to extract the information relevant to the classification task and prune out irrelevant or redundant features and data views. Furthermore, to provide generalizable solutions in small sample size scenarios, BALDUR efficiently integrates dual kernels over the views with a small sample-to-feature ratio. Finally, its linear nature ensures the explainability of the model outcomes, allowing its use for biomarker identification. This model was tested on two different neurodegeneration datasets, outperforming the state-of-the-art models and detecting features aligned with markers already described in the scientific literature.

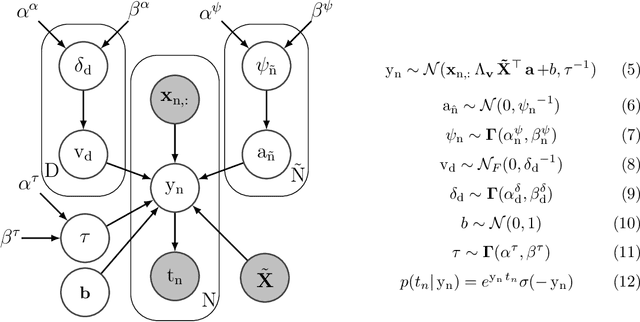

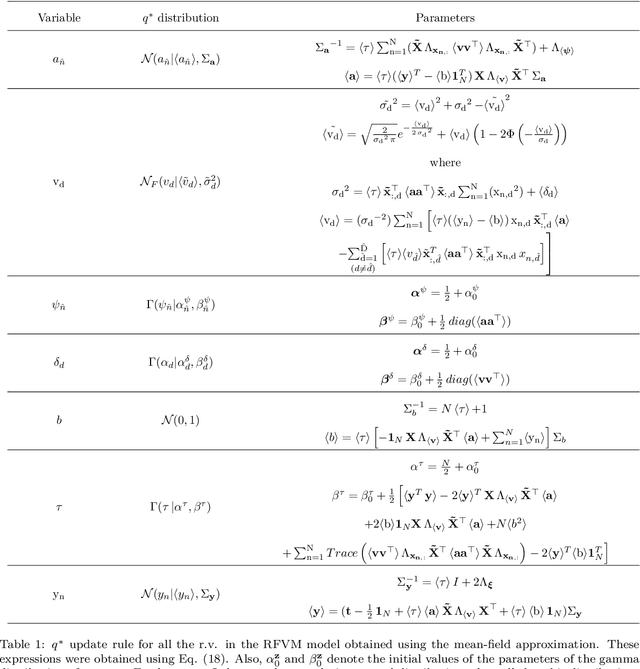

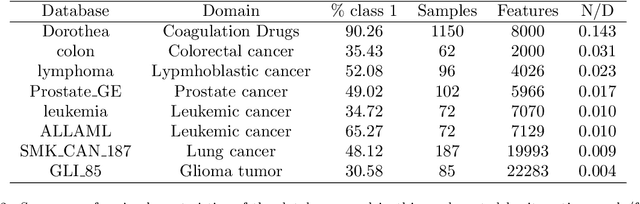

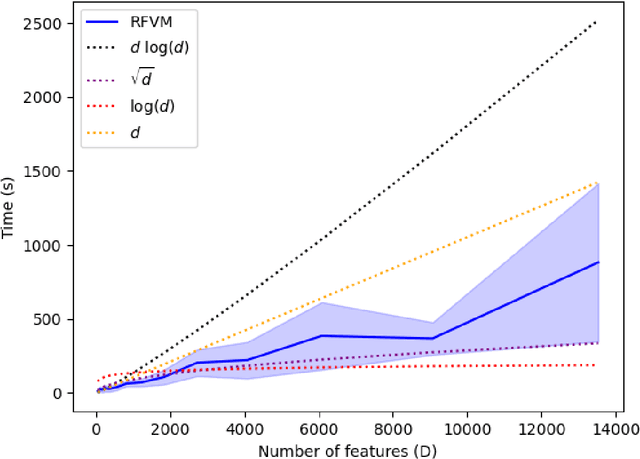

The Relevance Feature and Vector Machine for health applications

Feb 11, 2024

Abstract:This paper presents the Relevance Feature and Vector Machine (RFVM), a novel model that addresses the challenges of the fat-data problem when dealing with clinical prospective studies. The fat-data problem refers to the limitations of Machine Learning (ML) algorithms when working with databases in which the number of features is much larger than the number of samples (a common scenario in certain medical fields). To overcome such limitations, the RFVM incorporates different characteristics: (1) a Bayesian formulation which enables the model to infer its parameters without overfitting thanks to Bayesian model averaging; (2) a joint optimisation that overcomes the limitations arising from the fat-data characteristic by simultaneously including the variables that define the primal space (features) and those that define the dual space (observations); (3) an integrated pruning that removes the irrelevant features and samples during the iterative training optimisation. This last point is crucial when performing medical prospective studies: it enables researchers to exclude unnecessary medical tests, reducing costs and inconvenience for patients, and to identify the critical patients/subjects that characterize the disorder, which in turn helps optimize the patient recruitment process that leads to a balanced cohort. The model capabilities are tested against state-of-the-art models on several medical datasets with fat-data problems. These experiments show that RFVM is capable of achieving competitive classification accuracies while providing the most compact subset of data (in terms of both features and samples). Moreover, the selected features (medical tests) seem to be aligned with the existing medical literature.

Adaptive Sparse Gaussian Process

Feb 20, 2023

Abstract:Adaptive learning is necessary for non-stationary environments where the learning machine needs to forget past data distributions. Efficient algorithms require a compact model update whose computational burden does not grow with the incoming data, together with the lowest possible computational cost for online parameter updating. Existing solutions only partially cover these needs. Here, we propose the first adaptive sparse Gaussian Process (GP) able to address all these issues. We first reformulate a variational sparse GP algorithm to make it adaptive through a forgetting factor. Next, to make the model inference as simple as possible, we propose updating a single inducing point of the sparse GP model together with the remaining model parameters every time a new sample arrives. As a result, the algorithm presents a fast convergence of the inference process, which allows an efficient model update (with a single inference iteration) even in highly non-stationary environments. Experimental results demonstrate the capabilities of the proposed algorithm and its good performance in modeling the predictive posterior in mean and confidence interval estimation compared to state-of-the-art approaches.
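To make the role of a forgetting factor concrete, the following is a minimal generic sketch, not the adaptive sparse GP described in the abstract. It assumes the common exponential form in which a sample of age `a` receives weight `lam ** a`; the function names and the toy `stream` are hypothetical and chosen only for illustration.

```python
def forgetting_weights(n_samples, lam):
    """Weights for samples ordered oldest first; the newest sample has weight 1."""
    return [lam ** (n_samples - 1 - i) for i in range(n_samples)]


def weighted_mean(values, lam):
    """Mean of `values` where older samples are discounted by the factor `lam`."""
    weights = forgetting_weights(len(values), lam)
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Toy non-stationary stream (hypothetical data): the mean jumps from 0 to 1.
stream = [0.0] * 50 + [1.0] * 50

plain = weighted_mean(stream, lam=1.0)     # no forgetting: all samples count equally
adaptive = weighted_mean(stream, lam=0.9)  # forgetting: recent samples dominate
```

With `lam=1.0` the estimate averages both regimes, whereas with `lam=0.9` it stays close to the most recent regime. A smaller `lam` forgets faster but yields noisier estimates on stationary data.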

Bayesian learning of feature spaces for multitasks problems

Sep 07, 2022

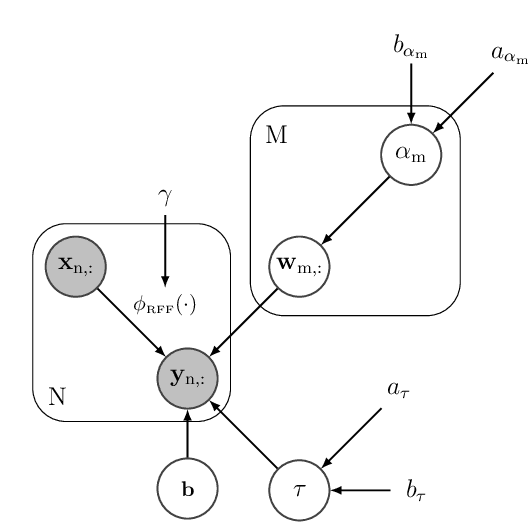

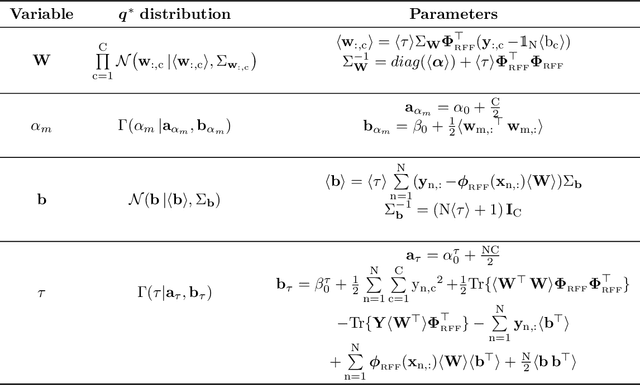

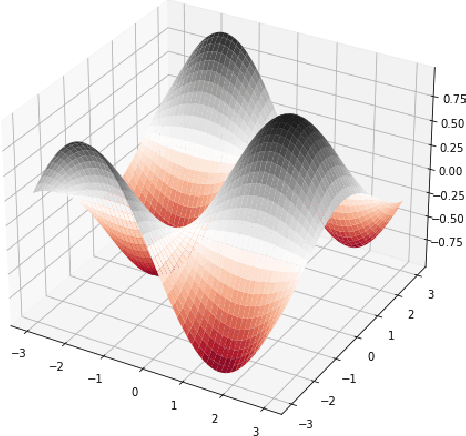

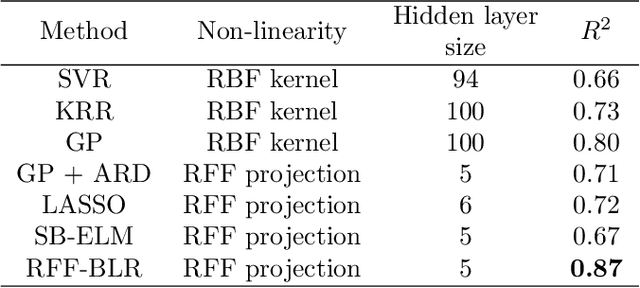

Abstract:This paper presents a Bayesian framework to construct non-linear, parsimonious, shallow models for multitask regression. The proposed framework relies on the fact that Random Fourier Features (RFFs) enable the approximation of an RBF kernel by an extreme learning machine whose hidden layer is formed by RFFs. The main idea is to combine both dual views of the same model under a single Bayesian formulation that extends the Sparse Bayesian Extreme Learning Machines to multitask problems. From the kernel methods point of view, the proposed formulation facilitates the introduction of prior domain knowledge through the RBF kernel parameter. From the extreme learning machines perspective, the new formulation helps control overfitting and enables a parsimonious overall model (the models that serve each task share the same set of RFFs selected within the joint Bayesian optimisation). The experimental results show that combining advantages from kernel methods and extreme learning machines within the same framework can lead to significant improvements in the performance achieved by each of these two paradigms independently.
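The kernel approximation that this abstract relies on can be sketched as follows. This is only an illustration of standard Random Fourier Features for the RBF kernel, not the Bayesian multitask model itself. It assumes the parameterization k(x, y) = exp(-gamma * ||x - y||^2); the helper names (`rbf_kernel`, `make_rff_map`) and the example vectors are hypothetical.

```python
import math
import random


def rbf_kernel(x, y, gamma):
    """Exact RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def make_rff_map(dim, n_features, gamma, seed=0):
    """Build a random feature map z such that z(x) . z(y) approximates k(x, y)."""
    rng = random.Random(seed)
    # Frequencies are drawn from the kernel's spectral density, N(0, 2 * gamma * I).
    std = math.sqrt(2.0 * gamma)
    freqs = [[rng.gauss(0.0, std) for _ in range(dim)] for _ in range(n_features)]
    # Random phases are uniform on [0, 2 * pi).
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    scale = math.sqrt(2.0 / n_features)

    def z(x):
        # Each output plays the role of one hidden unit with a cosine activation.
        return [
            scale * math.cos(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
            for w, b in zip(freqs, phases)
        ]

    return z


x = [0.3, -0.1, 0.8]
y = [0.5, 0.2, 0.4]
gamma = 0.5

z = make_rff_map(dim=3, n_features=5000, gamma=gamma)
approx = sum(a * b for a, b in zip(z(x), z(y)))  # inner product in feature space
exact = rbf_kernel(x, y, gamma)                  # value being approximated
```

The inner product `approx` converges to `exact` as `n_features` grows, which is why a single hidden layer of random cosine units followed by a linear model can be read either as a shallow network or as an approximate kernel machine.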

Multi-view hierarchical Variational AutoEncoders with Factor Analysis latent space

Jul 19, 2022

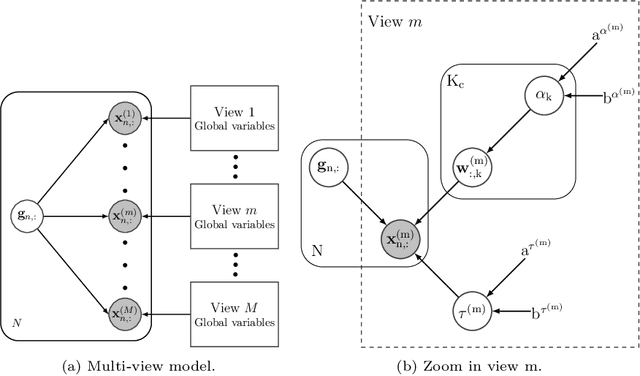

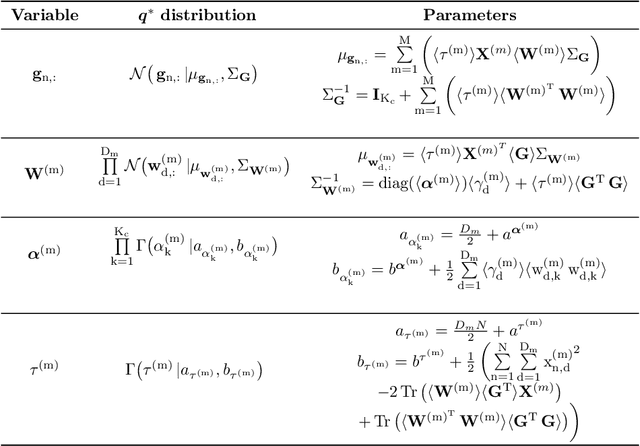

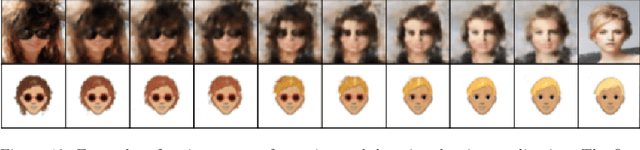

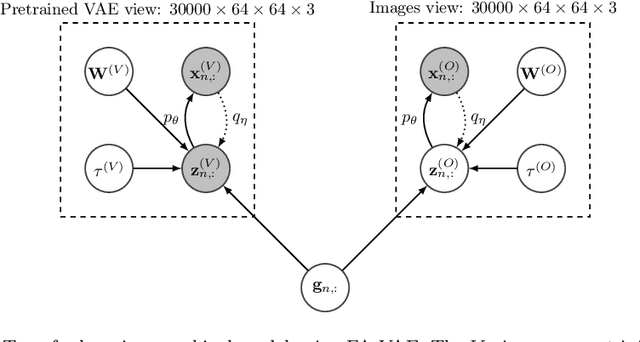

Abstract:Real-world databases are complex; they usually present redundancy and shared correlations between heterogeneous and multiple representations of the same data. Thus, exploiting and disentangling shared information between views is critical. For this purpose, recent studies often fuse all views into a shared, complex nonlinear latent space, but in doing so they lose interpretability. To overcome this limitation, here we propose a novel method to combine multiple Variational AutoEncoder (VAE) architectures with a Factor Analysis latent space (FA-VAE). Concretely, we use a VAE to learn a private representation of each heterogeneous view in a continuous latent space. Then, we model the shared latent space by projecting every private variable to a low-dimensional latent space using a linear projection matrix. Thus, we create an interpretable hierarchical dependency between private and shared information. This way, the novel model is able to simultaneously (i) learn from multiple heterogeneous views, (ii) obtain an interpretable hierarchical shared space, and (iii) perform transfer learning between generative models.

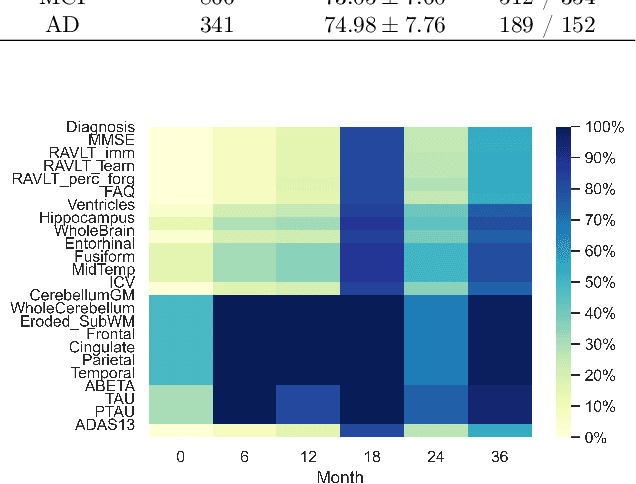

Multi-task longitudinal forecasting with missing values on Alzheimer's Disease

Jan 13, 2022

Abstract:Machine learning techniques typically applied to dementia forecasting lack the capability to jointly learn several tasks and to handle time-dependent heterogeneous data and missing values. In this paper, we propose a framework using the recently presented SSHIBA model for jointly learning different tasks on longitudinal data with missing values. The method uses Bayesian variational inference to impute missing values and combine information from several views. This way, we can combine different data views from different time points in a common latent space and learn the relations between time points while simultaneously modelling and predicting several output variables. We apply this model to jointly predict diagnosis, ventricle volume, and clinical scores in dementia. The results demonstrate that SSHIBA is capable of learning a good imputation of the missing values and outperforming the baselines while simultaneously predicting three different tasks.

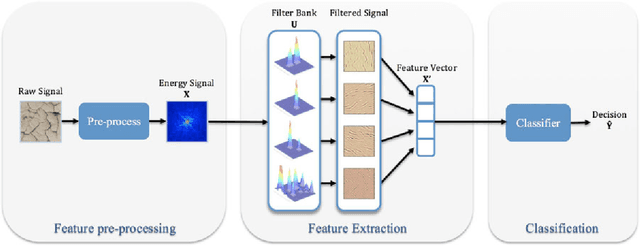

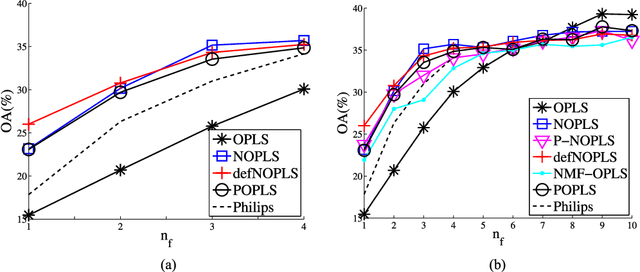

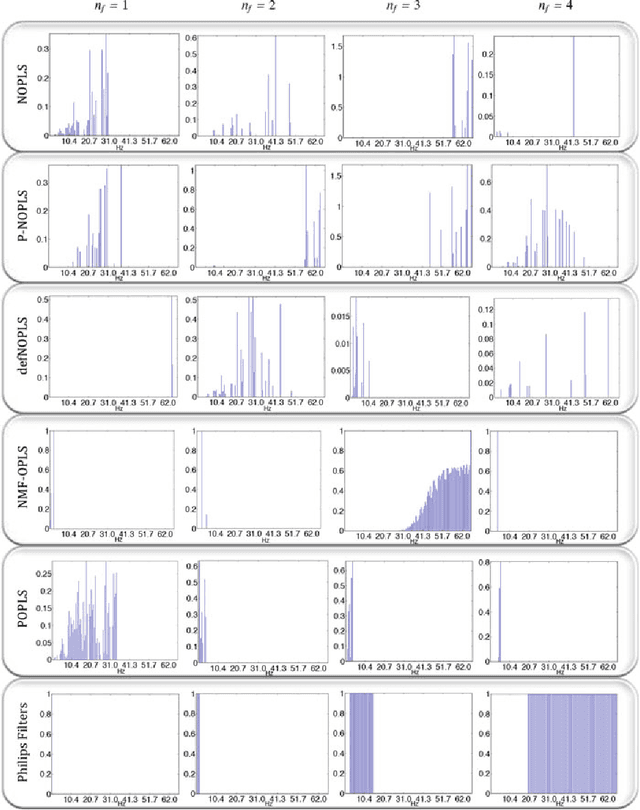

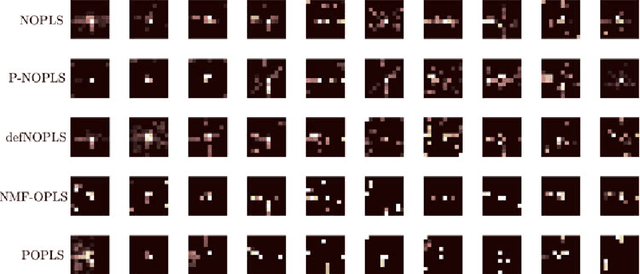

Nonnegative OPLS for Supervised Design of Filter Banks: Application to Image and Audio Feature Extraction

Dec 22, 2021

Abstract:Audio or visual data analysis tasks usually have to deal with high-dimensional and nonnegative signals. However, most data analysis methods suffer from overfitting and numerical problems when data have more than a few dimensions, and therefore require dimensionality reduction as a preprocessing step. Moreover, interpretability of how and why filters work for audio or visual applications is a desired property, especially when energy or spectral signals are involved. In these cases, due to the nature of these signals, the nonnegativity of the filter weights is a desired property to better understand how the filters work. Because of these two needs, we propose different methods to reduce the dimensionality of data while ensuring the nonnegativity and interpretability of the solution. In particular, we propose a generalized methodology to design filter banks in a supervised way for applications dealing with nonnegative data, and we explore different ways of solving the proposed objective function, which consists of a nonnegative version of the orthonormalized partial least-squares method. We analyze the discriminative power of the features obtained with the proposed methods for two different and widely studied applications: texture and music genre classification. Furthermore, we compare the filter banks achieved by our methods with other state-of-the-art methods specifically designed for feature extraction.

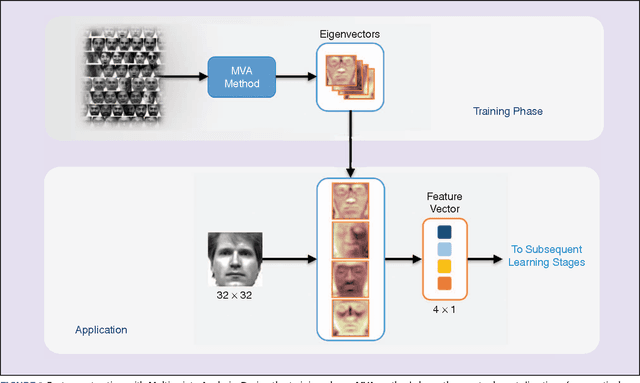

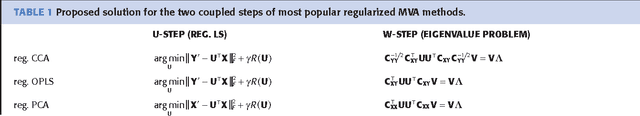

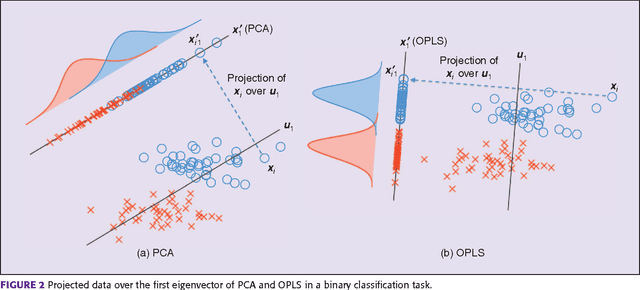

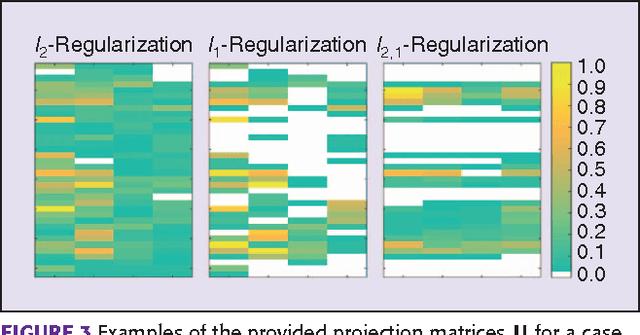

Regularized Multivariate Analysis Framework for Interpretable High-Dimensional Variable Selection

Dec 22, 2021

Abstract:Multivariate Analysis (MVA) comprises a family of well-known methods for feature extraction which exploit correlations among input variables representing the data. One important property that is enjoyed by most such methods is uncorrelation among the extracted features. Recently, regularized versions of MVA methods have appeared in the literature, mainly with the goal of gaining interpretability of the solution. In these cases, the solutions can no longer be obtained in closed form, and more complex optimization methods that rely on the iteration of two steps are frequently used. This paper resorts to an alternative approach to solve this iterative problem efficiently. The main novelty of this approach lies in preserving several properties of the original methods, most notably the uncorrelation of the extracted features. Under this framework, we propose a novel method that takes advantage of the ℓ2,1 norm to perform variable selection during the feature extraction process. Experimental results over different problems corroborate the advantages of the proposed formulation in comparison to state-of-the-art formulations.
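As a small illustration of why this norm suits variable selection, the sketch below computes it under the common convention of summing the Euclidean norms of the rows of a matrix. It assumes that each row of the projection matrix corresponds to one input variable and each column to one extracted feature; this layout, the function names, and the toy matrix `W` are assumptions made for the example, not details taken from the paper.

```python
import math


def row_norms(W):
    """Euclidean norm of every row of W (one row per input variable, by assumption)."""
    return [math.sqrt(sum(v * v for v in row)) for row in W]


def l21_norm(W):
    """Sum of the row-wise Euclidean norms of W."""
    return sum(row_norms(W))


def selected_variables(W, tol=1e-8):
    """Indices of the input variables whose entire row is not numerically zero."""
    return [i for i, norm in enumerate(row_norms(W)) if norm > tol]


# Toy projection matrix (hypothetical values): 3 input variables, 2 extracted features.
W = [
    [3.0, 4.0],  # variable 0 contributes to both features
    [0.0, 0.0],  # variable 1 is discarded by every feature
    [0.0, 1.0],  # variable 2 contributes to the second feature only
]

penalty = l21_norm(W)
kept = selected_variables(W)
```

Because the penalty acts on whole rows, minimizing it tends to drive all the weights of a variable to zero at once, so a discarded variable is unused by every extracted feature rather than by only some of them.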

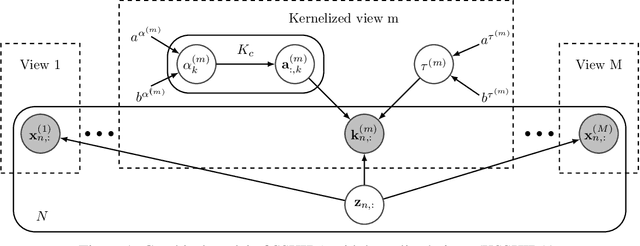

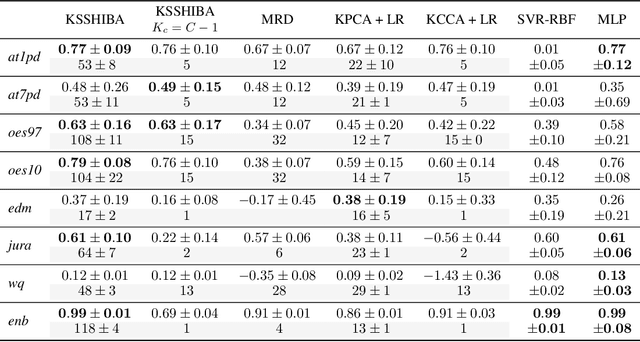

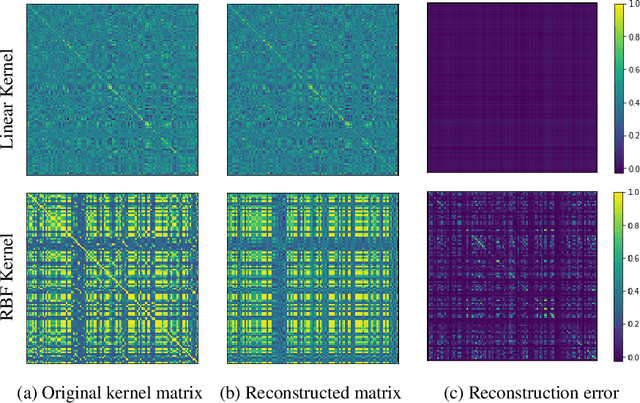

Bayesian Sparse Factor Analysis with Kernelized Observations

Jun 10, 2020

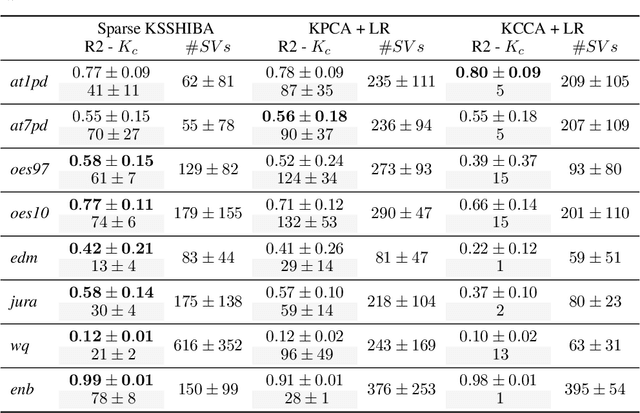

Abstract:Latent variable models for multi-view learning attempt to find low-dimensional projections that fairly capture the correlations among multiple views that characterise each datum. High-dimensional views in medium-sized datasets and non-linear problems are traditionally handled by kernel methods, inducing a (non)-linear function between the latent projection and the data itself. However, they usually come with scalability issues and exposure to overfitting. To overcome these limitations, instead of imposing a kernel function, here we propose an alternative method. In particular, we combine probabilistic factor analysis with what we refer to as kernelized observations, in which the model focuses on reconstructing not the data itself, but its correlation with other data points measured by a kernel function. This model can combine several types of views (kernelized or not), can handle heterogeneous data, and can work in semi-supervised settings. Additionally, by including adequate priors, it can provide compact solutions for the kernelized observations (based on an automatic selection of Bayesian support vectors) and can include feature selection capabilities. Using several public databases, we demonstrate the potential of our approach (and its extensions) w.r.t. common multi-view learning models such as kernel canonical correlation analysis or manifold relevance determination Gaussian process latent variable models.
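The idea of a kernelized observation can be illustrated with a minimal sketch: each sample is represented by its kernel similarities to the samples of the dataset rather than by its raw features. The sketch covers only this change of representation, not the factor analysis model or its priors. The RBF kernel, the function names, and the toy matrix `X` are assumptions chosen for the example.

```python
import math


def rbf(x, y, gamma=1.0):
    """RBF similarity between two feature vectors."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def kernelized_observations(X, kernel=rbf):
    """Replace the N x D data matrix by the N x N matrix of pairwise kernel values."""
    return [[kernel(xi, xj) for xj in X] for xi in X]


# Toy view (hypothetical values) with fewer samples than features: N = 3, D = 5.
X = [
    [0.1, 0.0, 0.3, 0.2, 0.5],
    [0.0, 0.4, 0.1, 0.2, 0.1],
    [0.3, 0.3, 0.0, 0.1, 0.2],
]

K = kernelized_observations(X)  # row i is the kernelized observation of sample i
```

Here the view to be reconstructed has one column per sample instead of one per feature, so its width depends on the number of samples rather than on the dimensionality of the original view. Keeping only a subset of the columns would correspond to retaining a reduced set of reference samples.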
