Vahid Janfaza

Machine Learning, Deep Learning and Data Preprocessing Techniques for Detection, Prediction, and Monitoring of Stress and Stress-related Mental Disorders: A Scoping Review

Aug 08, 2023

Abstract: This comprehensive review systematically evaluates Machine Learning (ML) methodologies employed in the detection, prediction, and analysis of mental stress and its consequent mental disorders (MDs). Utilizing a rigorous scoping review process, the investigation delves into the latest ML algorithms, preprocessing techniques, and data types employed in the context of stress and stress-related MDs. The findings highlight that Support Vector Machine (SVM), Neural Network (NN), and Random Forest (RF) models consistently exhibit superior accuracy and robustness among all machine learning algorithms examined. Furthermore, the review underscores that physiological parameters, such as heart rate measurements and skin response, are prevalently used as stress predictors in ML algorithms. This is attributed to their rich explanatory information concerning stress and stress-related MDs, as well as the relative ease of data acquisition. Additionally, the application of dimensionality reduction techniques, including mappings, feature selection, filtering, and noise reduction, is frequently observed as a crucial step preceding the training of ML algorithms. The synthesis of this review identifies significant research gaps and outlines future directions for the field. These encompass areas such as model interpretability, model personalization, the incorporation of naturalistic settings, and real-time processing capabilities for detection and prediction of stress and stress-related MDs.

Adaptive Gradient Prediction for DNN Training

May 22, 2023

Abstract: Neural network training is inherently sequential: the layers finish the forward propagation in succession, followed by the calculation and back-propagation of gradients (based on a loss function) starting from the last layer. These sequential computations significantly slow down neural network training, especially for deeper networks. Prediction has been successfully used in many areas of computer architecture to speed up sequential processing. Therefore, we propose ADA-GP, which uses gradient prediction adaptively to speed up deep neural network (DNN) training while maintaining accuracy. ADA-GP works by incorporating a small neural network to predict gradients for different layers of a DNN model. ADA-GP uses a novel tensor reorganization to make it feasible to predict a large number of gradients. ADA-GP alternates between DNN training using backpropagated gradients and DNN training using predicted gradients. ADA-GP adaptively adjusts when and for how long gradient prediction is used to strike a balance between accuracy and performance. Last but not least, we provide a detailed hardware extension in a typical DNN accelerator to realize the speedup potential from gradient prediction. Our extensive experiments with fourteen DNN models show that ADA-GP can achieve an average speedup of 1.47x with similar or even higher accuracy than the baseline models. Moreover, it consumes, on average, 34% less energy due to reduced off-chip memory accesses compared to the baseline hardware accelerator.

Large Language Models Based Automatic Synthesis of Software Specifications

Apr 18, 2023

Abstract: Software configurations play a crucial role in determining the behavior of software systems. In order to ensure safe and error-free operation, it is necessary to identify the correct configurations, along with their valid bounds and rules, which are commonly referred to as software specifications. As software systems grow in complexity and scale, the number of configurations and associated specifications required to ensure the correct operation can become large and prohibitively difficult to manipulate manually. Due to the fast pace of software development, it is often the case that correct software specifications are not thoroughly checked or validated within the software itself. Rather, they are frequently discussed and documented in a variety of external sources, including software manuals, code comments, and online discussion forums. Therefore, it is hard for the system administrator to know the correct specifications of configurations due to the lack of clarity, organization, and a centralized unified source to look at. To address this challenge, we propose SpecSyn, a framework that leverages a state-of-the-art large language model to automatically synthesize software specifications from natural language sources. Our approach formulates software specification synthesis as a sequence-to-sequence learning problem and investigates the extraction of specifications from large contextual texts. This is the first work that uses a large language model for end-to-end specification synthesis from natural language texts. Empirical results demonstrate that our system outperforms the prior state-of-the-art specification synthesis tool by 21% in terms of F1 score and can find specifications from single as well as multiple sentences.

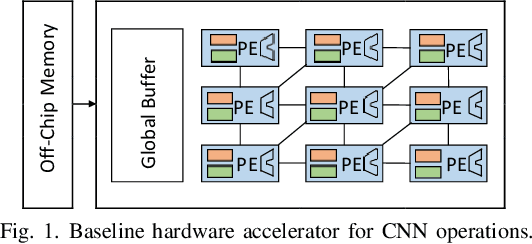

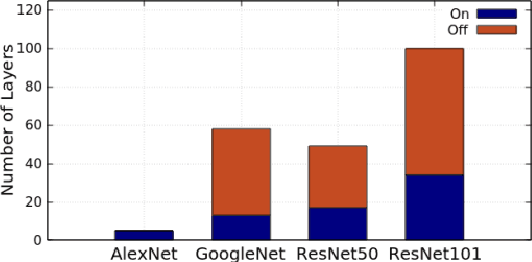

SIMCNN -- Exploiting Computational Similarity to Accelerate CNN Training in Hardware

Oct 28, 2021

Abstract: Convolutional neural networks (CNNs) are computation-intensive to train. Training consists of a substantial number of multidimensional dot products between many kernels and inputs. We observe that there are notable similarities among the vectors extracted from inputs (i.e., input vectors). If one input vector is similar to another one, its computations with the kernels are also similar to those of the other and can therefore be skipped by reusing the already-computed results. Based on this insight, we propose a novel scheme based on locality sensitive hashing (LSH) to exploit the similarity of computations during CNN training in a hardware accelerator. The proposed scheme, called SIMCNN, uses a cache (SIMCACHE) to store LSH signatures of recent input vectors along with the computed results. If the LSH signature of a new input vector matches that of an existing vector in the SIMCACHE, the already-computed result is reused for the new vector. SIMCNN is the first work that exploits computational similarity for accelerating CNN training in hardware. The paper presents a detailed design, workflow, and implementation of SIMCNN. Our experimental evaluation with four different deep learning models shows that SIMCNN saves a significant number of computations and therefore improves training time by up to 43%.
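The reuse idea described in the SIMCNN abstract can be illustrated in software with a minimal sketch. This is not the SIMCNN hardware design: the class name `SimCacheSketch`, the choice of sign-random-projection LSH, the 16-bit signature length, and the unbounded dictionary cache are all assumptions made for illustration, since the abstract does not specify them.

```python
import random


class SimCacheSketch:
    """Toy similarity cache keyed by a sign-random-projection LSH signature.

    Hypothetical illustration only; the LSH family and cache policy are assumed.
    """

    def __init__(self, dim, n_bits=16, seed=0):
        rng = random.Random(seed)
        # Each row is a random hyperplane; it contributes one signature bit.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(n_bits)]
        self.cache = {}   # signature -> previously computed kernel outputs
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    def signature(self, vec):
        # Vectors on the same side of every hyperplane share a signature.
        return tuple(self._dot(p, vec) >= 0.0 for p in self.planes)

    def dot_with_kernels(self, vec, kernels):
        sig = self.signature(vec)
        if sig in self.cache:
            self.hits += 1
            return self.cache[sig]   # reuse: the dot products are skipped
        self.misses += 1
        result = [self._dot(k, vec) for k in kernels]
        self.cache[sig] = result
        return result


kernels = [[1.0, 0.0, -1.0, 2.0], [0.5, 0.5, 0.5, 0.5]]
cache = SimCacheSketch(dim=4)
first = cache.dot_with_kernels([1.0, 2.0, 3.0, 4.0], kernels)
# An identical input vector has the same signature, so the result is reused.
second = cache.dot_with_kernels([1.0, 2.0, 3.0, 4.0], kernels)
print(first, cache.hits, cache.misses)
```

A signature match only indicates that two vectors are probably similar, so a reused result is an approximation. In this toy version the signature depends only on direction, so a scaled copy of a vector would also hit the cache; a real design would have to account for that.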

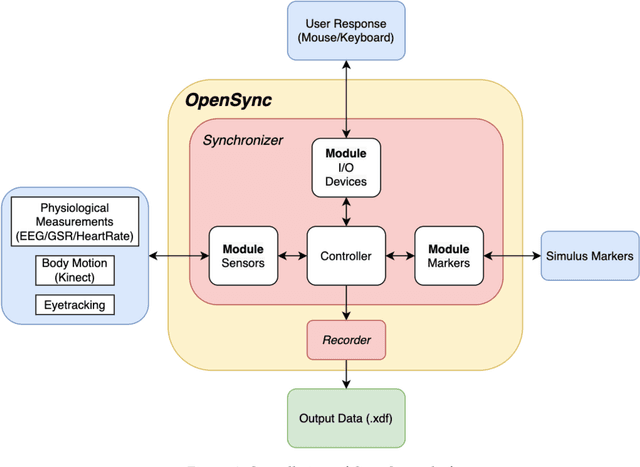

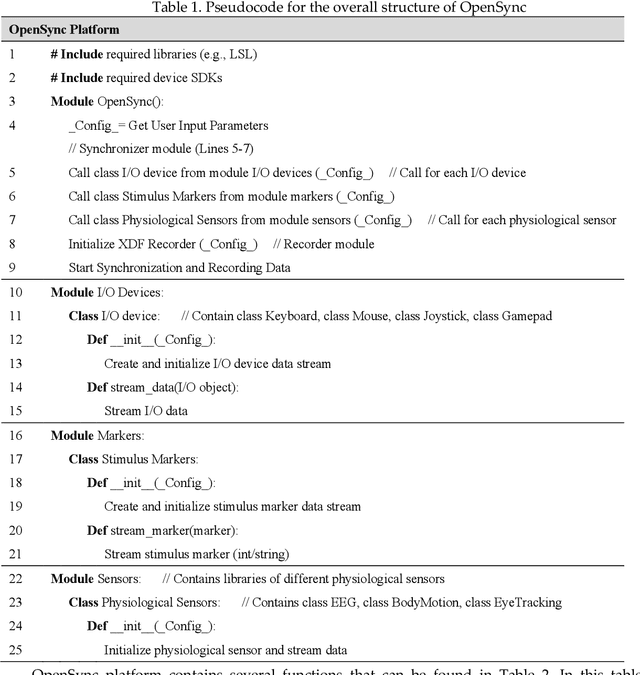

OpenSync: An opensource platform for synchronizing multiple measures in neuroscience experiments

Jul 29, 2021

Abstract: Background: The human mind is multimodal. Yet most behavioral studies rely on century-old measures such as task accuracy and latency. To create a better understanding of human behavior and brain functionality, we should introduce other measures and analyze behavior from various aspects. However, it is technically complex and costly to design and implement experiments that record multiple measures. To address this issue, a platform that allows synchronizing multiple measures from human behavior is needed. Method: This paper introduces an opensource platform named OpenSync, which can be used to synchronize multiple measures in neuroscience experiments. This platform helps to automatically integrate, synchronize and record physiological measures (e.g., electroencephalogram (EEG), galvanic skin response (GSR), eye-tracking, body motion, etc.), user input response (e.g., from mouse, keyboard, joystick, etc.), and task-related information (stimulus markers). In this paper, we explain the structure and details of OpenSync and provide two case studies in PsychoPy and Unity. Comparison with existing tools: Unlike proprietary systems (e.g., iMotions), OpenSync is free and can be used inside any opensource experiment design software (e.g., PsychoPy, OpenSesame, Unity, etc., https://pypi.org/project/OpenSync/ and https://github.com/moeinrazavi/OpenSync_Unity). Results: Our experimental results show that the OpenSync platform is able to synchronize multiple measures with microsecond resolution.

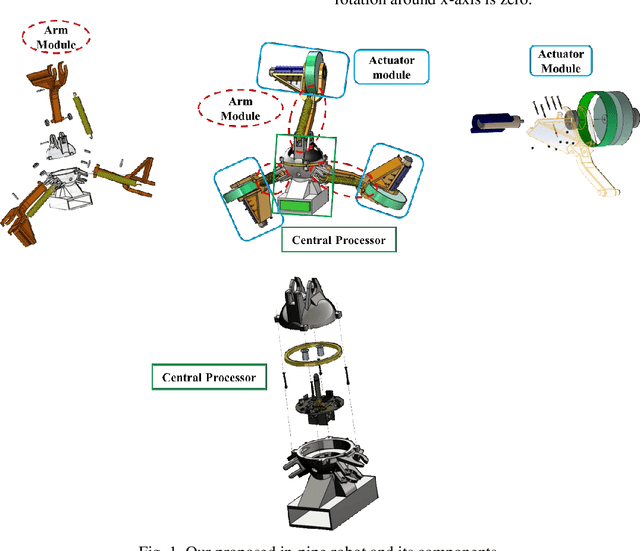

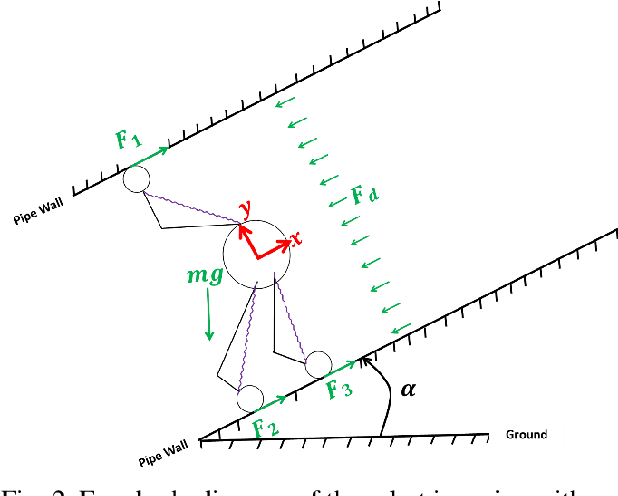

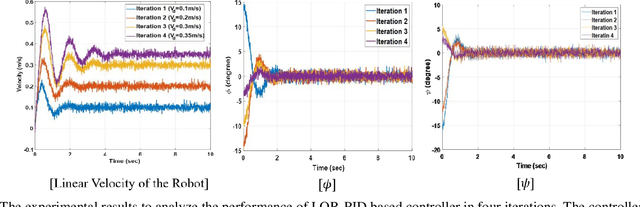

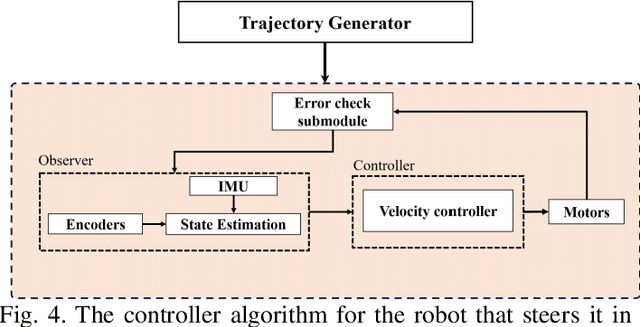

Smart Navigation for an In-pipe Robot Through Multi-phase Motion Control and Particle Filtering Method

Feb 23, 2021

Abstract: In-pipe robots are promising solutions for condition assessment, leak detection, water quality monitoring, and a variety of other tasks in pipeline networks. Smart navigation is an extremely challenging task for these robots because their operating environment is highly uncertain and subject to disturbances. Wireless communication to control these robots during operation is not feasible if the pipe material is metal, since the radio signals are destroyed in the pipe environment, and hence this challenge is still unsolved. In this paper, we introduce a method for smart navigation for our previously designed in-pipe robot [1] based on particle filtering and a two-phase motion controller. The robot is given the map of the operation path with a novel approach, and the particle filtering determines the straight and non-straight configurations of the pipeline. In the straight paths, the robot follows a linear quadratic regulator (LQR) and proportional-integral-derivative (PID) based controller that stabilizes the robot and tracks a desired velocity. In non-straight paths, the robot follows the trajectory that a motion trajectory generator block plans for the robot. The proposed method is a promising solution for smart navigation without the need for wireless communication and is capable of inspecting long distances in water distribution systems.

An Automatic System to Monitor the Physical Distance and Face Mask Wearing of Construction Workers in COVID-19 Pandemic

Jan 30, 2021

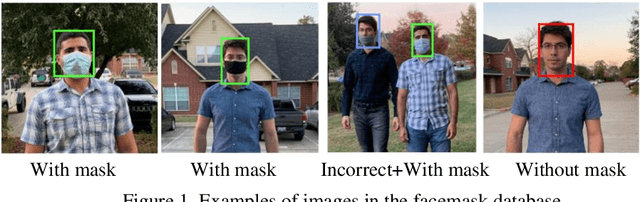

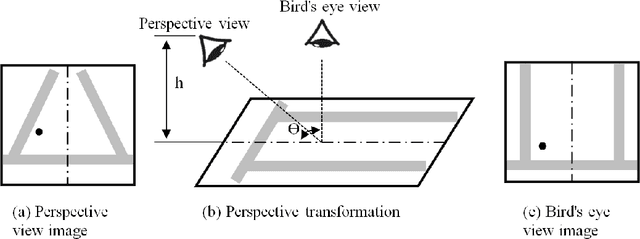

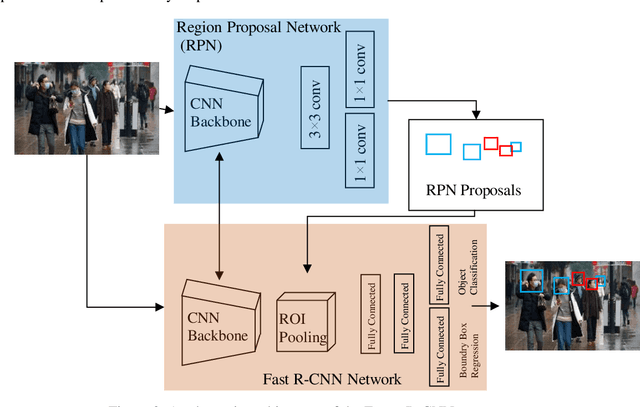

Abstract: The COVID-19 pandemic has caused many shutdowns in different industries around the world. Sectors such as infrastructure construction and maintenance projects have not been suspended due to their significant effect on people's routine life. In such projects, workers work close together, which creates a high risk of infection. The World Health Organization recommends wearing a face mask and practicing physical distancing to mitigate the virus's spread. This paper developed a computer vision system to automatically detect violations of face mask wearing and physical distancing among construction workers to assure their safety on infrastructure projects during the pandemic. For the face mask detection, the paper collected and annotated 1,000 images, including different types of face mask wearing, and added them to a pre-existing face mask dataset to develop a dataset of 1,853 images. The paper then trained and tested multiple state-of-the-art TensorFlow object detection models on the face mask dataset and chose the Faster R-CNN Inception ResNet V2 network, which yielded an accuracy of 99.8%. For physical distance detection, the paper employed the Faster R-CNN Inception V2 to detect people. A transformation matrix was used to eliminate the camera angle's effect on the object distances in the image. The Euclidean distance between pixels of the transformed image was used to compute the actual distance between people. A threshold of six feet was considered to capture physical distance violations. The paper also used transfer learning for training the model. The final model was applied to four videos of road maintenance projects in Houston, TX, and effectively detected face masks and physical distance. We recommend that construction owners use the proposed system to enhance construction workers' safety in the pandemic situation.
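The distance check described in this abstract (transformation matrix, Euclidean distance, six-foot threshold) might look roughly like the sketch below. It assumes the transformation is a 3x3 perspective (homography) matrix and that one unit in the transformed image corresponds to a known number of feet; the function names `warp_point` and `distance_violations` and the `feet_per_unit` parameter are hypothetical and do not come from the paper.

```python
import math
from itertools import combinations


def warp_point(H, pt):
    """Apply a 3x3 perspective matrix H to an image point (x, y)."""
    x, y = pt
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)   # divide by w to leave homogeneous coordinates


def distance_violations(H, points, feet_per_unit, threshold_ft=6.0):
    """Return (i, j, distance_ft) for each pair of people closer than the threshold.

    `points` holds one image position per detected person (for example the
    bottom-center of a bounding box); `feet_per_unit` is an assumed calibration.
    """
    warped = [warp_point(H, p) for p in points]
    violations = []
    for (i, a), (j, b) in combinations(enumerate(warped), 2):
        d_ft = math.dist(a, b) * feet_per_unit   # Euclidean distance in the transformed plane
        if d_ft < threshold_ft:
            violations.append((i, j, d_ft))
    return violations


# Identity matrix used as a stand-in for a calibrated transformation.
H_identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
people = [(0, 0), (3, 4), (100, 0)]
print(distance_violations(H_identity, people, feet_per_unit=1.0))
```

In practice the matrix would come from a camera calibration step, and the person positions would come from the detector output; both are outside the scope of this sketch.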
