Shantanu Mandal

Learning-Based Automatic Synthesis of Software Code and Configuration

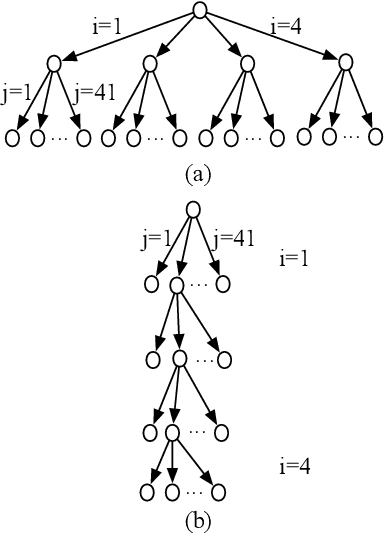

May 25, 2023

Abstract:Increasing demand in the software industry and a scarcity of software engineers motivate researchers and practitioners to automate the process of software generation and configuration. Large-scale automatic software generation and configuration is a very complex and challenging task. In this proposal, we set out to investigate this problem by breaking down automatic software generation and configuration into two different tasks. In the first task, we propose to synthesize software automatically from input-output specifications. This task is further broken down into two sub-tasks. The first sub-task is about synthesizing programs with a genetic algorithm driven by a neural-network-based fitness function trained with program traces and specifications. For the second sub-task, we formulate program synthesis as a continuous optimization problem and synthesize programs with the covariance matrix adaptation evolution strategy (a state-of-the-art continuous optimization method). Finally, for the second task, we propose to synthesize configurations of large-scale software from different input sources (e.g., software manuals, configuration files, online blogs) using a sequence-to-sequence deep learning mechanism.

Adaptive Gradient Prediction for DNN Training

May 22, 2023

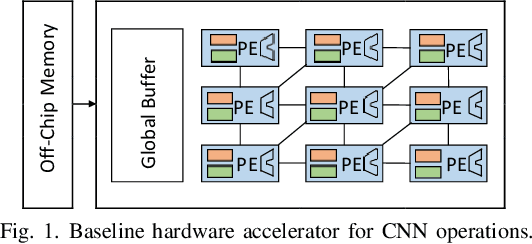

Abstract:Neural network training is inherently sequential: the layers finish forward propagation in succession, followed by the calculation and back-propagation of gradients (based on a loss function) starting from the last layer. These sequential computations significantly slow down neural network training, especially for deeper networks. Prediction has been successfully used in many areas of computer architecture to speed up sequential processing. Therefore, we propose ADA-GP, which uses gradient prediction adaptively to speed up deep neural network (DNN) training while maintaining accuracy. ADA-GP works by incorporating a small neural network to predict gradients for different layers of a DNN model. ADA-GP uses a novel tensor reorganization to make it feasible to predict a large number of gradients. ADA-GP alternates between DNN training using backpropagated gradients and DNN training using predicted gradients. ADA-GP adaptively adjusts when and for how long gradient prediction is used to strike a balance between accuracy and performance. Last but not least, we provide a detailed hardware extension in a typical DNN accelerator to realize the speedup potential of gradient prediction. Our extensive experiments with fourteen DNN models show that ADA-GP can achieve an average speedup of 1.47x with similar or even higher accuracy than the baseline models. Moreover, it consumes, on average, 34% less energy than the baseline hardware accelerator due to reduced off-chip memory accesses.

Large Language Models Based Automatic Synthesis of Software Specifications

Apr 18, 2023

Abstract:Software configurations play a crucial role in determining the behavior of software systems. In order to ensure safe and error-free operation, it is necessary to identify the correct configurations, along with their valid bounds and rules, which are commonly referred to as software specifications. As software systems grow in complexity and scale, the number of configurations and associated specifications required to ensure correct operation can become large and prohibitively difficult to manipulate manually. Due to the fast pace of software development, it is often the case that correct software specifications are not thoroughly checked or validated within the software itself. Rather, they are frequently discussed and documented in a variety of external sources, including software manuals, code comments, and online discussion forums. Therefore, it is hard for a system administrator to know the correct specifications of configurations, given the lack of clarity, organization, and a centralized, unified source to consult. To address this challenge, we propose SpecSyn, a framework that leverages a state-of-the-art large language model to automatically synthesize software specifications from natural language sources. Our approach formulates software specification synthesis as a sequence-to-sequence learning problem and investigates the extraction of specifications from large contextual texts. This is the first work that uses a large language model for end-to-end specification synthesis from natural language texts. Empirical results demonstrate that our system outperforms the prior state-of-the-art specification synthesis tool by 21% in terms of F1 score and can find specifications from single as well as multiple sentences.

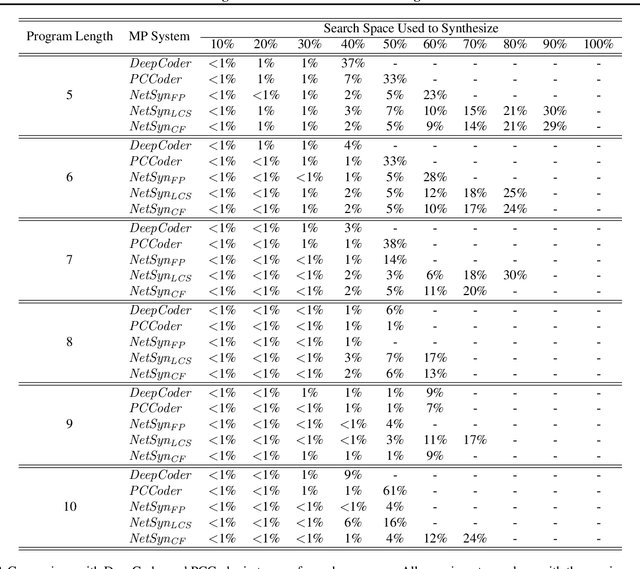

Synthesizing Programs with Continuous Optimization

Nov 02, 2022

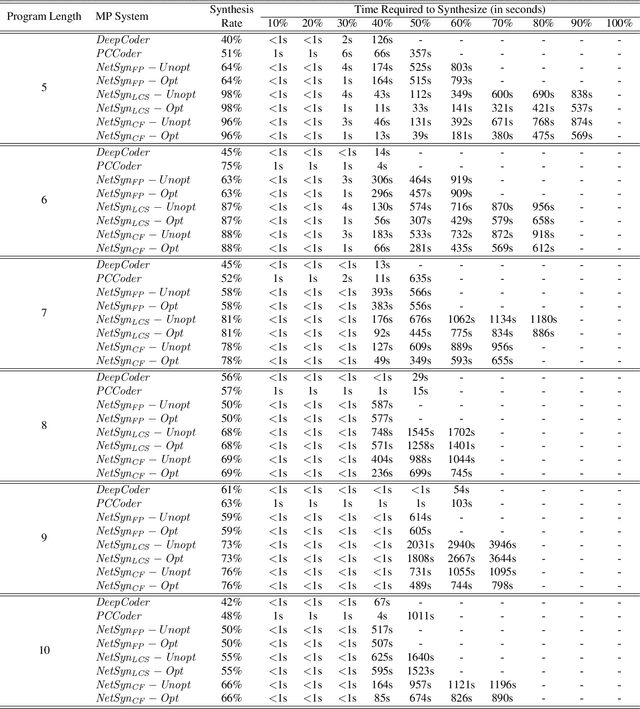

Abstract:Automatic software generation based on some specification is known as program synthesis. Most existing approaches formulate program synthesis as a search problem with discrete parameters. In this paper, we present a novel formulation of program synthesis as a continuous optimization problem and use a state-of-the-art evolutionary approach, known as Covariance Matrix Adaptation Evolution Strategy (CMA-ES), to solve the problem. We then propose a mapping scheme to convert the continuous formulation into actual programs. We compare our system, called GENESYS, with several recent program synthesis techniques (in both discrete and continuous domains) and show that GENESYS synthesizes more programs within a fixed time budget than those existing schemes. For example, for programs of length 10, GENESYS synthesizes 28% more programs than those existing schemes within the same time budget.
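To make the idea of a continuous formulation concrete, here is a minimal sketch, not the GENESYS implementation. It assumes a hypothetical four-operation list DSL (`OPS`), a hypothetical mapping (`decode`) that clips each real value into [0, 1) and scales it to an operation index, and a plain Gaussian-mutation evolution strategy standing in for CMA-ES. All names and parameters are illustrative assumptions.

```python
import random

# Hypothetical toy DSL: each operation transforms a list of integers.
OPS = [
    ("reverse", lambda xs: xs[::-1]),
    ("sort", lambda xs: sorted(xs)),
    ("double", lambda xs: [2 * x for x in xs]),
    ("increment", lambda xs: [x + 1 for x in xs]),
]


def decode(vector):
    """Map a real-valued vector to a list of operation indices."""
    program = []
    for value in vector:
        # Clip into [0, 1) so every real number maps to a valid index.
        clipped = min(max(value, 0.0), 1.0 - 1e-9)
        program.append(int(clipped * len(OPS)))
    return program


def run(program, xs):
    """Apply the decoded operations to the input list, in order."""
    for index in program:
        xs = OPS[index][1](xs)
    return xs


def fitness(vector, examples):
    """Count how many input-output examples the decoded program satisfies."""
    program = decode(vector)
    return sum(run(program, list(inp)) == out for inp, out in examples)


def search(examples, length, generations=200, offspring=20, sigma=0.3, seed=0):
    """Simple Gaussian-mutation evolution strategy (a stand-in, not CMA-ES)."""
    rng = random.Random(seed)
    best = [rng.random() for _ in range(length)]
    best_fit = fitness(best, examples)
    for _ in range(generations):
        for _ in range(offspring):
            child = [v + rng.gauss(0.0, sigma) for v in best]
            child_fit = fitness(child, examples)
            # Accept ties so the search can drift across fitness plateaus.
            if child_fit >= best_fit:
                best, best_fit = child, child_fit
        if best_fit == len(examples):
            break
    return decode(best), best_fit
```

For example, `search([([3, 1, 2], [2, 4, 6])], length=2)` looks for a two-operation program, such as sort followed by double, that is consistent with the example. The optimizer only ever sees real-valued vectors; the discrete program appears only through `decode`, which is the role a mapping scheme plays in a continuous formulation.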

SIMCNN -- Exploiting Computational Similarity to Accelerate CNN Training in Hardware

Oct 28, 2021

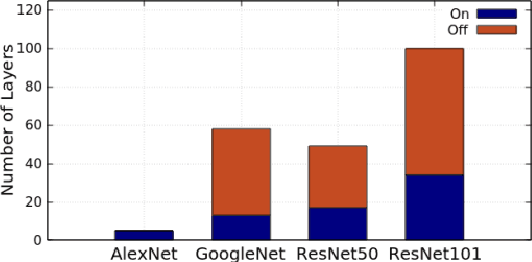

Abstract:Convolutional neural networks (CNNs) are computation intensive to train. Training consists of a substantial number of multidimensional dot products between many kernels and inputs. We observe that there are notable similarities among the vectors extracted from inputs (i.e., input vectors). If one input vector is similar to another, its computations with the kernels are also similar to those of the other and can therefore be skipped by reusing the already-computed results. Based on this insight, we propose a novel scheme based on locality sensitive hashing (LSH) to exploit the similarity of computations during CNN training in a hardware accelerator. The proposed scheme, called SIMCNN, uses a cache (SIMCACHE) to store LSH signatures of recent input vectors along with the computed results. If the LSH signature of a new input vector matches that of an existing vector in the SIMCACHE, the already-computed result is reused for the new vector. SIMCNN is the first work that exploits computational similarity for accelerating CNN training in hardware. The paper presents a detailed design, workflow, and implementation of SIMCNN. Our experimental evaluation with four different deep learning models shows that SIMCNN saves a significant number of computations and therefore reduces training time by up to 43%.
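The signature-and-reuse idea can be sketched in software as follows. This is an illustration under stated assumptions, not the SIMCNN hardware design: it assumes sign-random-projection LSH (the abstract does not name the hash family), a single kernel per cache, and an unbounded dictionary in place of a fixed-size SIMCACHE. The class name `SimilarityCache` and its parameters are hypothetical.

```python
import random


class SimilarityCache:
    """Reuse dot-product results for input vectors with equal LSH signatures."""

    def __init__(self, dim, bits=8, seed=0):
        rng = random.Random(seed)
        # One random hyperplane per signature bit (sign-random-projection LSH).
        self.planes = [
            [rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)
        ]
        self.table = {}  # signature -> previously computed result
        self.hits = 0
        self.misses = 0

    def signature(self, vector):
        """Record on which side of each hyperplane the vector falls."""
        return tuple(
            sum(p * v for p, v in zip(plane, vector)) >= 0.0
            for plane in self.planes
        )

    def dot(self, vector, kernel):
        """Return kernel . vector, reusing a cached result on a signature match."""
        key = self.signature(vector)
        if key in self.table:
            self.hits += 1
            return self.table[key]  # approximate: result of a similar vector
        self.misses += 1
        result = sum(k * v for k, v in zip(kernel, vector))
        self.table[key] = result
        return result
```

A reused result is an approximation, since two vectors with the same signature are only likely, not guaranteed, to be close. In this sketch, for instance, a vector and a positively scaled copy of it share a signature and would receive the same cached value. More signature bits reduce such false matches at the cost of fewer hits.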

Learning Fitness Functions for Genetic Algorithms

Sep 10, 2019

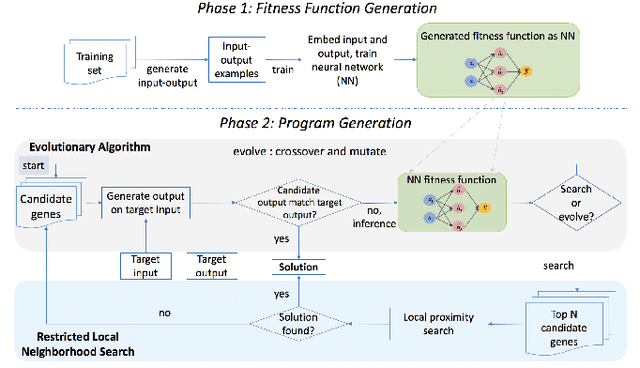

Abstract:A genetic algorithm (GA) attempts to solve a problem using a pool of potential solutions that are iteratively refined using various selection techniques. Although GAs have been used successfully for many problems, one criticism is that hand-crafting a GA's fitness function, the test that aims to effectively guide its evolution, can be notably challenging. Moreover, the complexity of a GA's fitness function tends to grow proportionally with the complexity of the problem being solved. In this work, we present a novel approach to learn a GA's fitness function. For the purpose of simplicity, we limit the demonstration of this technique to automatic software program generation. However, our system has no specific restrictions that prevent it from being applied to other domains. We also augment the GA evolutionary process with a minimally intrusive search heuristic. This heuristic improves the GA's ability to discover correct programs from ones that are approximately correct and does so with negligible computational overhead. We compare our approach to two state-of-the-art program generation systems and demonstrate that it finds more correct programs with fewer candidate program generations.
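As a rough illustration of where a fitness function sits in a GA, the sketch below takes the fitness function as a parameter, so a hand-written scorer and a learned model are interchangeable from the loop's point of view. It is a generic GA under assumed choices (truncation selection, one-point crossover, per-gene mutation), not the system described in the abstract, and the function name `evolve` and its parameters are hypothetical.

```python
import random


def evolve(fitness, gene_pool, length, pop_size=30, generations=50,
           mutation_rate=0.1, seed=0):
    """Evolve fixed-length candidates (length >= 2) scored by `fitness`."""
    rng = random.Random(seed)
    population = [
        [rng.choice(gene_pool) for _ in range(length)] for _ in range(pop_size)
    ]
    for _ in range(generations):
        # Truncation selection: keep the better half as parents (elitism).
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, length)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Replace each gene with a random one with small probability.
            child = [
                rng.choice(gene_pool) if rng.random() < mutation_rate else g
                for g in child
            ]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)
```

A hand-crafted fitness function might be `lambda c: sum(x == y for x, y in zip(c, target))` for a known `target`. Substituting a learned scorer means passing a different callable, for example one that wraps a trained model's prediction, without changing the evolutionary loop.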
