Upinder Kaur

Everything-Grasping (EG) Gripper: A Universal Gripper with Synergistic Suction-Grasping Capabilities for Cross-Scale and Cross-State Manipulation

Oct 06, 2025

Abstract:Grasping objects across vastly different sizes and physical states, including both solids and liquids, with a single robotic gripper remains a fundamental challenge in soft robotics. We present the Everything-Grasping (EG) Gripper, a soft end-effector that synergistically integrates distributed surface suction with internal granular jamming, enabling cross-scale and cross-state manipulation without requiring airtight sealing at the contact interface with target objects. The EG Gripper can handle objects with surface areas ranging from the sub-millimeter scale (0.2 mm², glass bead) to over 62,000 mm² (A4-sized paper and woven bag), enabling manipulation of objects nearly 3,500X smaller and 88X larger than its own contact area (approximated at 707 mm² for a 30 mm-diameter base). We further introduce a tactile sensing framework that combines liquid detection and pressure-based suction feedback, enabling real-time differentiation between solid and liquid targets. Guided by the Tactile-Inferred Grasping Mode Selection (TIGMS) algorithm, the gripper autonomously selects grasping modes based on distributed pressure and voltage signals. Experiments across diverse tasks (including underwater grasping, fragile object handling, and liquid capture) demonstrate robust and repeatable performance. To our knowledge, this is the first soft gripper to reliably grasp both solid and liquid objects across scales using a unified compliant architecture.
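The scale ratios quoted in the EG Gripper abstract can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the contact area is a flat disc of 30 mm diameter (as the abstract's approximation suggests), and the variable and function names are hypothetical rather than taken from the paper.

```python
import math


def disc_area_mm2(diameter_mm: float) -> float:
    """Area of a flat circular contact patch, in mm^2."""
    return math.pi * (diameter_mm / 2.0) ** 2


# Figures quoted in the abstract.
base_area = disc_area_mm2(30.0)   # contact area of the 30 mm-diameter base
smallest_object = 0.2             # mm^2, glass bead
largest_object = 62_000.0         # mm^2, A4-sized paper / woven bag

# How many times smaller / larger the objects are than the contact area.
ratio_smaller = base_area / smallest_object
ratio_larger = largest_object / base_area

print(f"contact area: {base_area:.1f} mm^2")
print(f"smallest object is {ratio_smaller:.0f}x smaller")
print(f"largest object is {ratio_larger:.1f}x larger")
```

Under this disc assumption the contact area comes out just under 707 mm², and the two ratios land close to the "nearly 3,500X" and "88X" figures in the abstract.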

SCOUT: An in-vivo Methane Sensing System for Real-time Monitoring of Enteric Emissions in Cattle with ex-vivo Validation

Aug 06, 2025

Abstract:Accurate measurement of enteric methane emissions remains a critical bottleneck for advancing livestock sustainability through genetic selection and precision management. Existing ambient sampling approaches suffer from low data retention rates, environmental interference, and limited temporal resolution. We developed SCOUT (Smart Cannula-mounted Optical Unit for Trace-methane), the first robust in-vivo sensing system enabling continuous, high-resolution monitoring of ruminal methane concentrations through an innovative closed-loop gas recirculation design. We conducted comprehensive validation with two cannulated Simmental heifers under contrasting dietary treatments, with cross-platform comparison against established ambient sniffer systems. SCOUT achieved exceptional performance with 82% data retention compared to 17% for conventional sniffer systems, while capturing methane concentrations 100-1000x higher than ambient approaches. Cross-platform validation demonstrated strong scale-dependent correlations, with optimal correlation strength (r = -0.564 $\pm$ 0.007) at biologically relevant 40-minute windows and 100% statistical significance. High-frequency monitoring revealed novel behavior-emission coupling, including rapid concentration changes (14.5 $\pm$ 11.3k ppm) triggered by postural transitions within 15 minutes, insights previously inaccessible through existing technologies. The SCOUT system represents a transformative advancement, enabling accurate, continuous emission phenotyping essential for genomic selection programs and sustainable precision livestock management. This validation framework establishes new benchmarks for agricultural sensor performance while generating unprecedented biological insights into ruminal methane dynamics, contributing essential tools for sustainable livestock production in climate-conscious agricultural systems.

MBE-ARI: A Multimodal Dataset Mapping Bi-directional Engagement in Animal-Robot Interaction

Apr 11, 2025

Abstract:Animal-robot interaction (ARI) remains an unexplored challenge in robotics, as robots struggle to interpret the complex, multimodal communication cues of animals, such as body language, movement, and vocalizations. Unlike human-robot interaction, which benefits from established datasets and frameworks, animal-robot interaction lacks the foundational resources needed to facilitate meaningful bidirectional communication. To bridge this gap, we present the MBE-ARI (Multimodal Bidirectional Engagement in Animal-Robot Interaction), a novel multimodal dataset that captures detailed interactions between a legged robot and cows. The dataset includes synchronized RGB-D streams from multiple viewpoints, annotated with body pose and activity labels across interaction phases, offering an unprecedented level of detail for ARI research. Additionally, we introduce a full-body pose estimation model tailored for quadruped animals, capable of tracking 39 keypoints with a mean average precision (mAP) of 92.7%, outperforming existing benchmarks in animal pose estimation. The MBE-ARI dataset and our pose estimation framework lay a robust foundation for advancing research in animal-robot interaction, providing essential tools for developing perception, reasoning, and interaction frameworks needed for effective collaboration between robots and animals. The dataset and resources are publicly available at https://github.com/RISELabPurdue/MBE-ARI/, inviting further exploration and development in this critical area.

RoboMal: Malware Detection for Robot Network Systems

Jan 20, 2022

Abstract:Robot systems are increasingly integrating into numerous avenues of modern life. From cleaning houses to providing guidance and emotional support, robots now work directly with humans. Due to their far-reaching applications and progressively complex architecture, they are being targeted by adversarial attacks such as sensor-actuator attacks, data spoofing, malware, and network intrusion. Therefore, security for robotic systems has become crucial. In this paper, we address the underserved area of malware detection in robotic software. Since robots work in close proximity to humans, often with direct interactions, malware could have life-threatening impacts. Hence, we propose the RoboMal framework of static malware detection on binary executables to detect malware before it gets a chance to execute. Additionally, we address the great paucity of data in this space by providing the RoboMal dataset comprising controller executables of a small-scale autonomous car. The performance of the framework is compared against widely used supervised learning models: GRU, CNN, and ANN. Notably, the LSTM-based RoboMal model outperforms the other models with an accuracy of 85% and precision of 87% in 10-fold cross-validation, hence proving the effectiveness of the proposed framework.

Learning Multimodal Contact-Rich Skills from Demonstrations Without Reward Engineering

Mar 01, 2021

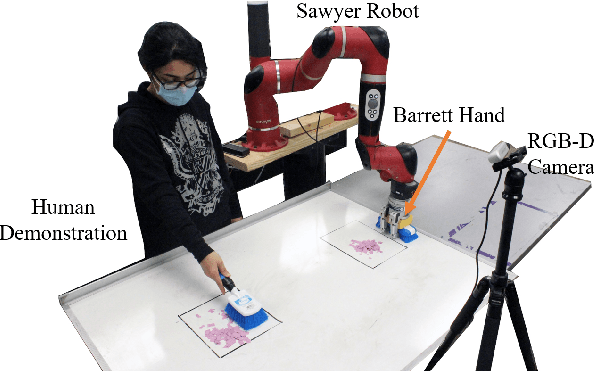

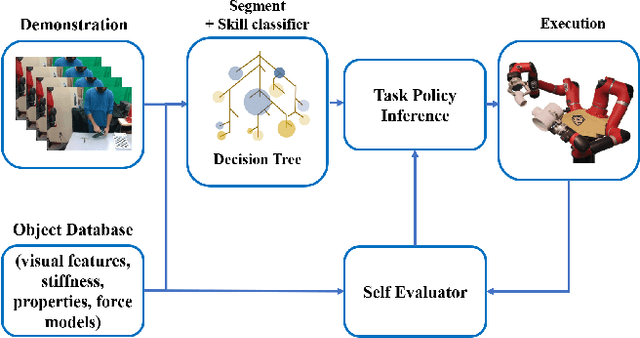

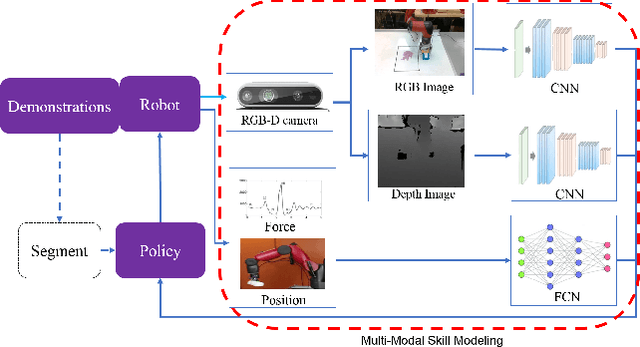

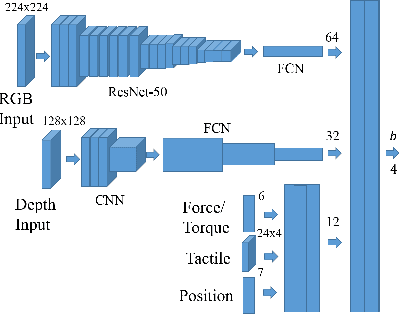

Abstract:Everyday contact-rich tasks, such as peeling, cleaning, and writing, demand multimodal perception for effective and precise task execution. However, these tasks present a novel challenge to robots, which lack the ability to combine multimodal stimuli when performing them. Learning-based methods have attempted to model multimodal contact-rich tasks, but they often require extensive training examples and task-specific reward functions, which limits their practicality and scope. Hence, we propose a generalizable model-free learning-from-demonstration framework for robots to learn contact-rich skills without explicit reward engineering. We present a novel multimodal sensor data representation that improves learning performance for contact-rich skills. We performed training and experiments using the real-life Sawyer robot for three everyday contact-rich skills -- cleaning, writing, and peeling. Notably, the framework achieves a success rate of 100% for the peeling and writing skills, and 80% for the cleaning skill. Hence, this skill learning framework can be extended for learning other physical manipulation skills.

Clutter Slices Approach for Identification-on-the-fly of Indoor Spaces

Jan 12, 2021

Abstract:Construction spaces are constantly evolving, dynamic environments in need of continuous surveying, inspection, and assessment. Traditional manual inspection of such spaces proves to be an arduous and time-consuming activity. Automation using robotic agents can be an effective solution. Robots with perception capabilities can autonomously classify and survey indoor construction spaces. In this paper, we present a novel identification-on-the-fly approach for coarse classification of indoor spaces using the unique signature of clutter. Using the context granted by clutter, we recognize common indoor spaces such as corridors, staircases, shared spaces, and restrooms. The proposed clutter slices pipeline achieves a maximum accuracy of 93.6% on the presented clutter slices dataset. This sensor-independent approach can be generalized to various domains to equip intelligent autonomous agents to better perceive their environment.

* First two authors share equal contribution. Presented at ICPR 2020, the 25th International Conference on Pattern Recognition, PRAConBE Workshop.

From the DESK to the Battlefield -- A Robotics Exploratory Study

Nov 30, 2020

Abstract:Short response time is critical for future military medical operations in austere settings or remote areas. Such effective patient care at the point of injury can greatly benefit from the integration of semi-autonomous robotic systems. To achieve autonomy, robots would require massive libraries of maneuvers. While this is possible in controlled settings, obtaining surgical data in austere settings can be difficult. Hence, in this paper, we present the Dexterous Surgical Skill (DESK) database for knowledge transfer between robots. The peg transfer task was selected as it is one of the 6 main tasks of laparoscopic training. We also provide an ML framework to evaluate novel transfer learning methodologies on this database. The collected DESK dataset comprises a set of surgical robotic skills recorded on four robotic platforms: Taurus II, simulated Taurus II, YuMi, and the da Vinci Research Kit. We then explored two different learning scenarios: no-transfer and domain-transfer. In the no-transfer scenario, the training and testing data were obtained from the same domain, whereas in the domain-transfer scenario, the training data was a blend of simulated and real robot data and the model was tested on a real robot. Using simulation data enhances the performance of the real robot where limited or no real data is available. The transfer model showed an accuracy of 81% for the YuMi robot when the ratio of real-to-simulated data was 22%-78%. For Taurus II and da Vinci robots, the model showed an accuracy of 97.5% and 93% respectively, training only with simulation data. Results indicate that simulation can be used to augment training data to enhance the performance of models in real scenarios. This shows the potential for future use of surgical data from the operating room in deployable surgical robots in remote areas.

* First 3 authors share equal contribution
