Umit Ogras

Dataflow-Aware PIM-Enabled Manycore Architecture for Deep Learning Workloads

Mar 28, 2024Abstract:Processing-in-memory (PIM) has emerged as an enabler for the energy-efficient and high-performance acceleration of deep learning (DL) workloads. Resistive random-access memory (ReRAM) is one of the most promising technologies to implement PIM. However, as the complexity of Deep convolutional neural networks (DNNs) grows, we need to design a manycore architecture with multiple ReRAM-based processing elements (PEs) on a single chip. Existing PIM-based architectures mostly focus on computation while ignoring the role of communication. ReRAM-based tiled manycore architectures often involve many Processing Elements (PEs), which need to be interconnected via an efficient on-chip communication infrastructure. Simply allocating more resources (ReRAMs) to speed up only computation is ineffective if the communication infrastructure cannot keep up with it. In this paper, we highlight the design principles of a dataflow-aware PIM-enabled manycore platform tailor-made for various types of DL workloads. We consider the design challenges with both 2.5D interposer- and 3D integration-enabled architectures.

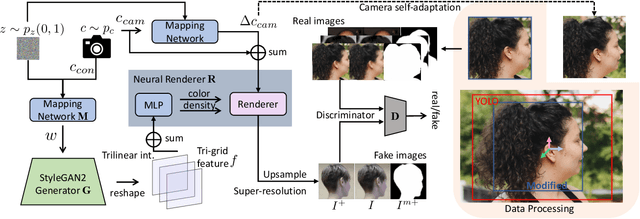

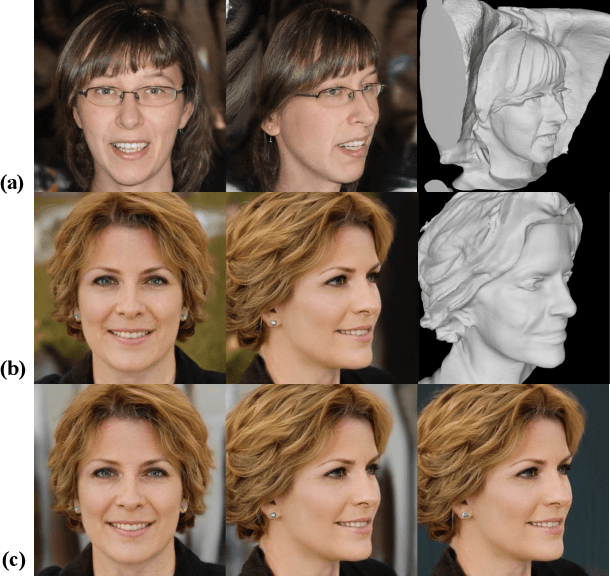

PanoHead: Geometry-Aware 3D Full-Head Synthesis in 360$^{\circ}$

Mar 23, 2023

Abstract:Synthesis and reconstruction of 3D human head has gained increasing interests in computer vision and computer graphics recently. Existing state-of-the-art 3D generative adversarial networks (GANs) for 3D human head synthesis are either limited to near-frontal views or hard to preserve 3D consistency in large view angles. We propose PanoHead, the first 3D-aware generative model that enables high-quality view-consistent image synthesis of full heads in $360^\circ$ with diverse appearance and detailed geometry using only in-the-wild unstructured images for training. At its core, we lift up the representation power of recent 3D GANs and bridge the data alignment gap when training from in-the-wild images with widely distributed views. Specifically, we propose a novel two-stage self-adaptive image alignment for robust 3D GAN training. We further introduce a tri-grid neural volume representation that effectively addresses front-face and back-head feature entanglement rooted in the widely-adopted tri-plane formulation. Our method instills prior knowledge of 2D image segmentation in adversarial learning of 3D neural scene structures, enabling compositable head synthesis in diverse backgrounds. Benefiting from these designs, our method significantly outperforms previous 3D GANs, generating high-quality 3D heads with accurate geometry and diverse appearances, even with long wavy and afro hairstyles, renderable from arbitrary poses. Furthermore, we show that our system can reconstruct full 3D heads from single input images for personalized realistic 3D avatars.

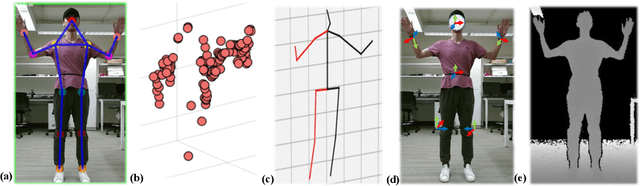

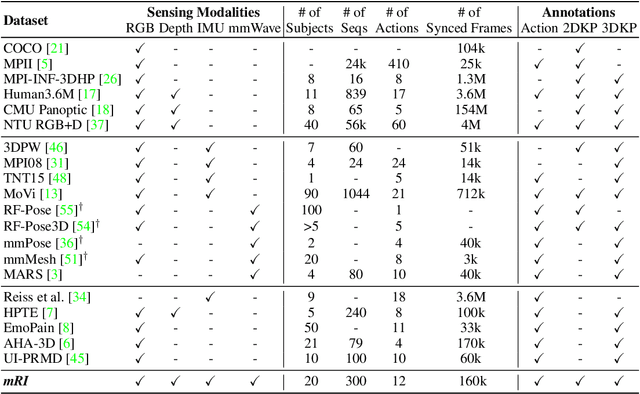

mRI: Multi-modal 3D Human Pose Estimation Dataset using mmWave, RGB-D, and Inertial Sensors

Oct 15, 2022

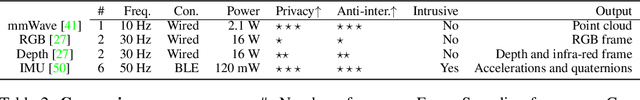

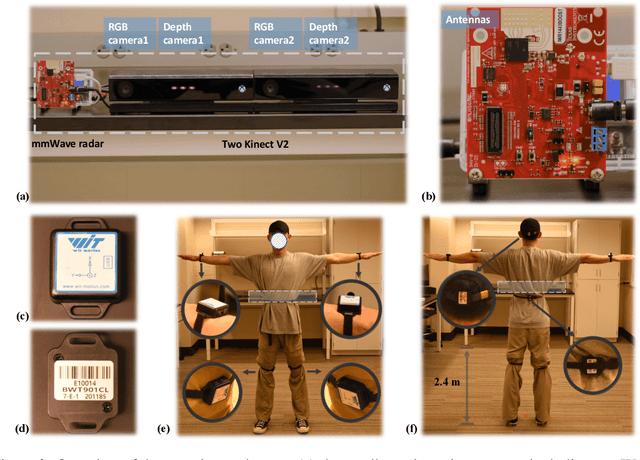

Abstract:The ability to estimate 3D human body pose and movement, also known as human pose estimation (HPE), enables many applications for home-based health monitoring, such as remote rehabilitation training. Several possible solutions have emerged using sensors ranging from RGB cameras, depth sensors, millimeter-Wave (mmWave) radars, and wearable inertial sensors. Despite previous efforts on datasets and benchmarks for HPE, few dataset exploits multiple modalities and focuses on home-based health monitoring. To bridge the gap, we present mRI, a multi-modal 3D human pose estimation dataset with mmWave, RGB-D, and Inertial Sensors. Our dataset consists of over 160k synchronized frames from 20 subjects performing rehabilitation exercises and supports the benchmarks of HPE and action detection. We perform extensive experiments using our dataset and delineate the strength of each modality. We hope that the release of mRI can catalyze the research in pose estimation, multi-modal learning, and action understanding, and more importantly facilitate the applications of home-based health monitoring.

ECO: Enabling Energy-Neutral IoT Devices through Runtime Allocation of Harvested Energy

Feb 26, 2021

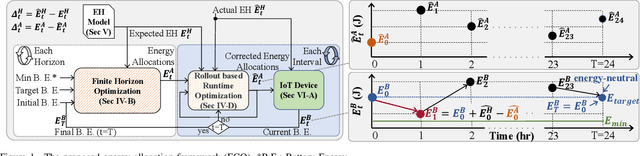

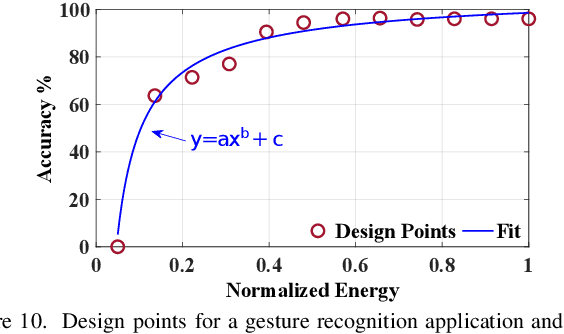

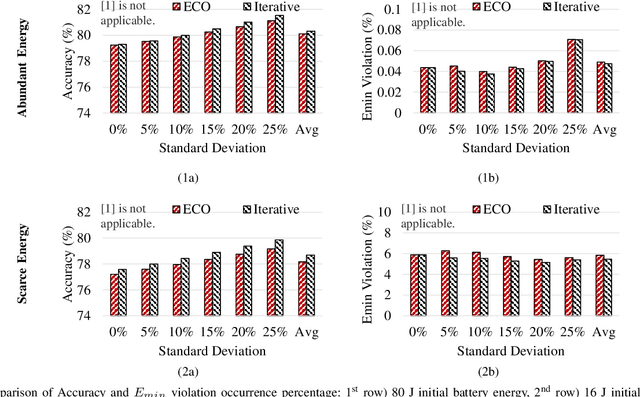

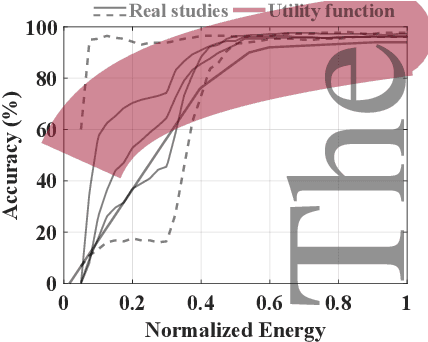

Abstract:Energy harvesting offers an attractive and promising mechanism to power low-energy devices. However, it alone is insufficient to enable an energy-neutral operation, which can eliminate tedious battery charging and replacement requirements. Achieving an energy-neutral operation is challenging since the uncertainties in harvested energy undermine the quality of service requirements. To address this challenge, we present a rollout-based runtime energy-allocation framework that optimizes the utility of the target device under energy constraints. The proposed framework uses an efficient iterative algorithm to compute initial energy allocations at the beginning of a day. The initial allocations are then corrected at every interval to compensate for the deviations from the expected energy harvesting pattern. We evaluate this framework using solar and motion energy harvesting modalities and American Time Use Survey data from 4772 different users. Compared to state-of-the-art techniques, the proposed framework achieves 34.6% higher utility even under energy-limited scenarios. Moreover, measurements on a wearable device prototype show that the proposed framework has less than 0.1% energy overhead compared to iterative approaches with a negligible loss in utility.

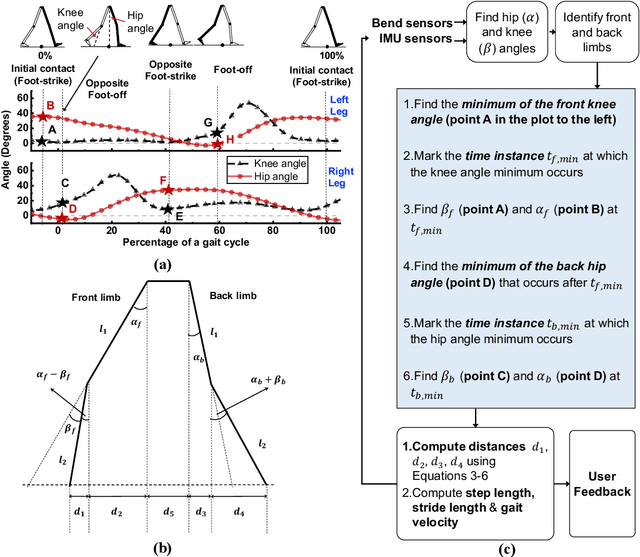

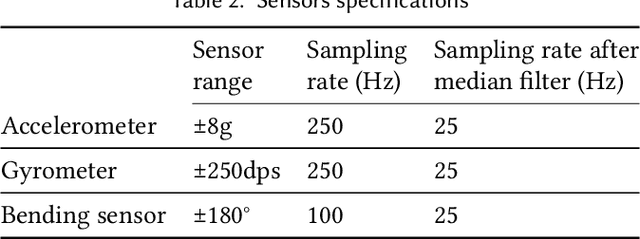

MGait: Model-Based Gait Analysis Using Wearable Bend and Inertial Sensors

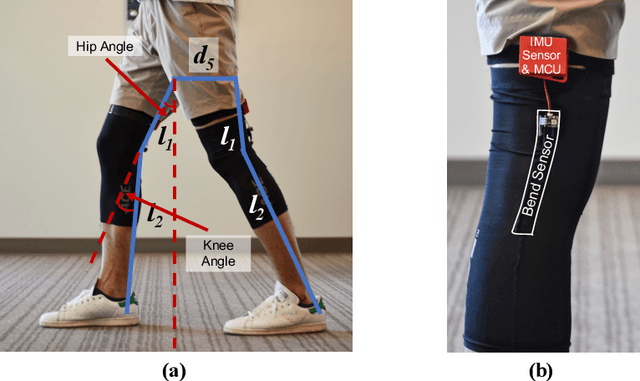

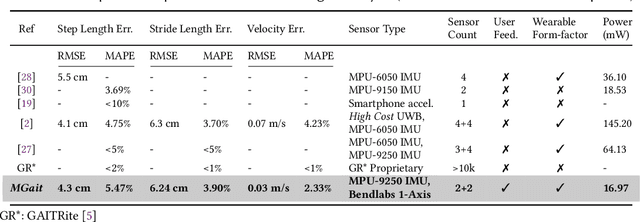

Feb 23, 2021

Abstract:Movement disorders, such as Parkinson's disease, affect more than 10 million people worldwide. Gait analysis is a critical step in the diagnosis and rehabilitation of these disorders. Specifically, step length provides valuable insights into the gait quality and rehabilitation process. However, traditional approaches for estimating step length are not suitable for continuous daily monitoring since they rely on special mats and clinical environments. To address this limitation, we present a novel and practical step-length estimation technique using low-power wearable bend and inertial sensors. Experimental results show that the proposed model estimates step length with 5.49% mean absolute percentage error and provides accurate real-time feedback to the user.

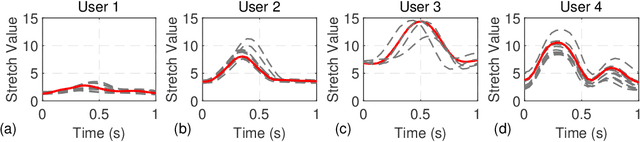

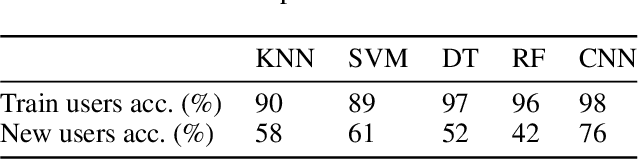

Transfer Learning for Human Activity Recognition using Representational Analysis of Neural Networks

Dec 05, 2020

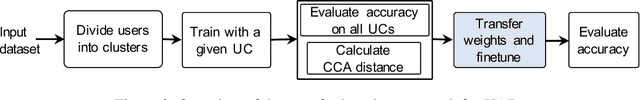

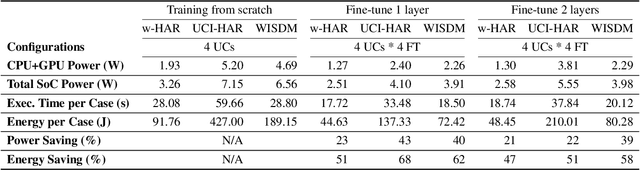

Abstract:Human activity recognition (HAR) research has increased in recent years due to its applications in mobile health monitoring, activity recognition, and patient rehabilitation. The typical approach is training a HAR classifier offline with known users and then using the same classifier for new users. However, the accuracy for new users can be low with this approach if their activity patterns are different than those in the training data. At the same time, training from scratch for new users is not feasible for mobile applications due to the high computational cost and training time. To address this issue, we propose a HAR transfer learning framework with two components. First, a representational analysis reveals common features that can transfer across users and user-specific features that need to be customized. Using this insight, we transfer the reusable portion of the offline classifier to new users and fine-tune only the rest. Our experiments with five datasets show up to 43% accuracy improvement and 66% training time reduction when compared to the baseline without using transfer learning. Furthermore, measurements on the Nvidia Jetson Xavier-NX hardware platform reveal that the power and energy consumption decrease by 43% and 68%, respectively, while achieving the same or higher accuracy as training from scratch.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge