Yigit Tuncel

tinyMAN: Lightweight Energy Manager using Reinforcement Learning for Energy Harvesting Wearable IoT Devices

Feb 18, 2022

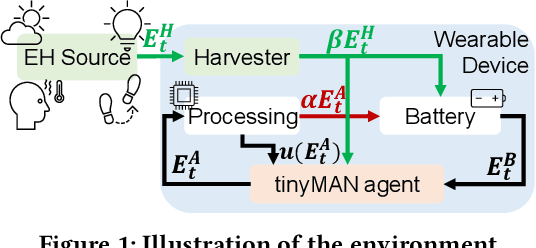

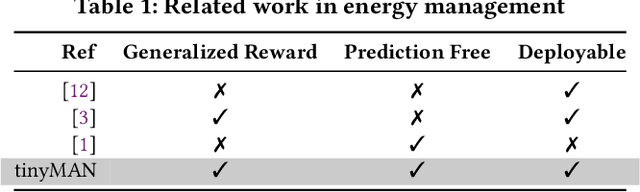

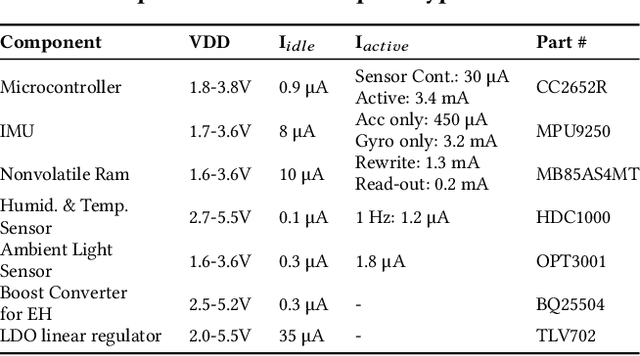

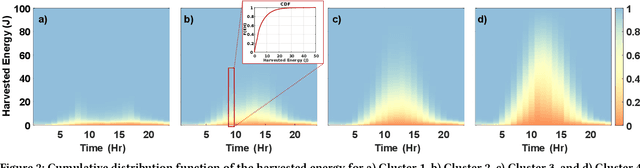

Abstract: Advances in low-power electronics and machine learning techniques have led to many novel wearable IoT devices. These devices have limited battery capacity and computational power. Thus, energy harvesting from ambient sources is a promising solution to power these low-energy wearable devices. They need to manage the harvested energy optimally to achieve energy-neutral operation, which eliminates recharging requirements. Optimal energy management is a challenging task due to the dynamic nature of the harvested energy and the battery energy constraints of the target device. To address this challenge, we present a reinforcement learning-based energy management framework, tinyMAN, for resource-constrained wearable IoT devices. The framework maximizes the utilization of the target device under dynamic energy harvesting patterns and battery constraints. Moreover, tinyMAN does not rely on forecasts of the harvested energy, which makes it a prediction-free approach. We deployed tinyMAN on a wearable device prototype using TensorFlow Lite for Micro thanks to its small memory footprint of less than 100 KB. Our evaluations show that tinyMAN takes less than 2.36 ms and consumes less than 27.75 $\mu$J while maintaining up to 45% higher utility compared to prior approaches.

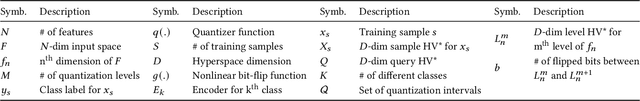

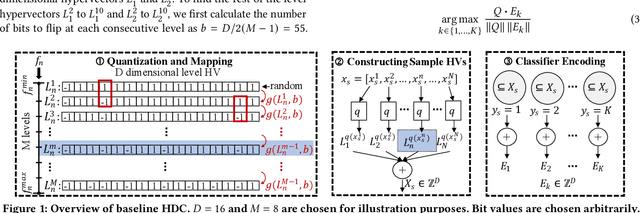

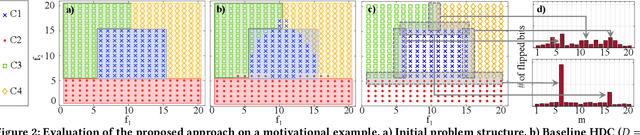

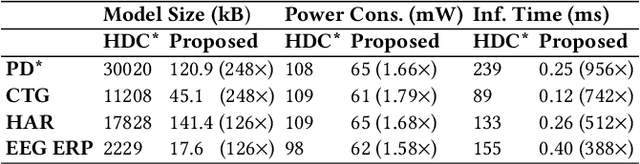

Hypervector Design for Efficient Hyperdimensional Computing on Edge Devices

Mar 08, 2021

Abstract: Hyperdimensional computing (HDC) has emerged as a new light-weight learning algorithm with smaller computation and energy requirements compared to conventional techniques. In HDC, data points are represented by high-dimensional vectors (hypervectors), which are mapped to high-dimensional space (hyperspace). Typically, a large hypervector dimension ($\geq 1000$) is required to achieve accuracies comparable to conventional alternatives. However, unnecessarily large hypervectors increase hardware and energy costs, which can undermine their benefits. This paper presents a technique to minimize the hypervector dimension while maintaining the accuracy and improving the robustness of the classifier. To this end, we formulate the hypervector design as a multi-objective optimization problem for the first time in the literature. The proposed approach decreases the hypervector dimension by more than $32\times$ while maintaining or increasing the accuracy achieved by conventional HDC. Experiments on a commercial hardware platform show that the proposed approach achieves more than one order of magnitude reduction in model size, inference time, and energy consumption. We also demonstrate the trade-off between accuracy and robustness to noise and provide Pareto front solutions as a design parameter in our hypervector design.
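To make the role of the hypervector dimension concrete, the following is a minimal sketch of a conventional HDC classifier. It is not the optimized design proposed in the paper. The function names, the `DIM` constant, the quantized-level input format, and the use of independent random level hypervectors are all illustrative assumptions.

```python
import random

def random_hypervector(dim, rng):
    """Draw a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]

def encode(sample, id_hvs, level_hvs):
    """Map a sample (one quantized level per feature) to a hypervector.

    Each feature's ID hypervector is bound (elementwise product) to the
    hypervector of its level; the bound vectors are bundled (summed) and
    thresholded back to bipolar values.
    """
    dim = len(id_hvs[0])
    acc = [0] * dim
    for i, level in enumerate(sample):
        for d in range(dim):
            acc[d] += id_hvs[i][d] * level_hvs[level][d]
    return [1 if a >= 0 else -1 for a in acc]

def train(samples, labels, id_hvs, level_hvs):
    """Build one class hypervector per label by summing encoded samples."""
    dim = len(id_hvs[0])
    class_hvs = {}
    for sample, label in zip(samples, labels):
        hv = encode(sample, id_hvs, level_hvs)
        acc = class_hvs.setdefault(label, [0] * dim)
        for d in range(dim):
            acc[d] += hv[d]
    return class_hvs

def classify(sample, class_hvs, id_hvs, level_hvs):
    """Return the label whose class hypervector has the largest dot product."""
    hv = encode(sample, id_hvs, level_hvs)
    return max(
        class_hvs,
        key=lambda lbl: sum(x * y for x, y in zip(hv, class_hvs[lbl])),
    )

# Hypothetical setup: 3 features, 4 quantization levels, dimension 1000.
DIM = 1000
rng = random.Random(0)
id_hvs = [random_hypervector(DIM, rng) for _ in range(3)]
level_hvs = [random_hypervector(DIM, rng) for _ in range(4)]
model = train([[0, 0, 1], [3, 2, 3]], ["rest", "walk"], id_hvs, level_hvs)
```

Every stored vector and every similarity computation in this sketch scales linearly with `DIM`, which is why reducing the dimension directly reduces model size, inference time, and energy.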

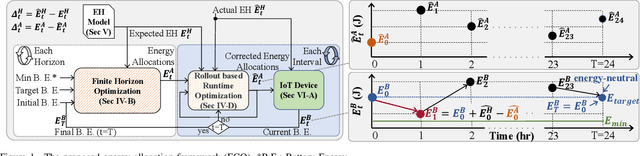

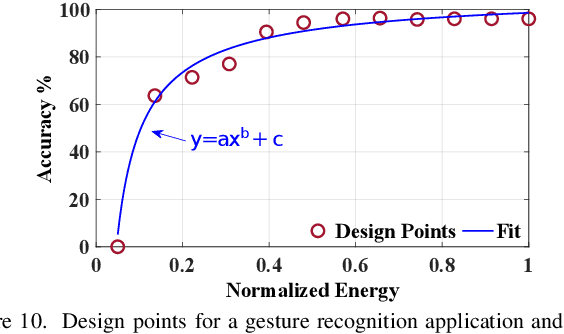

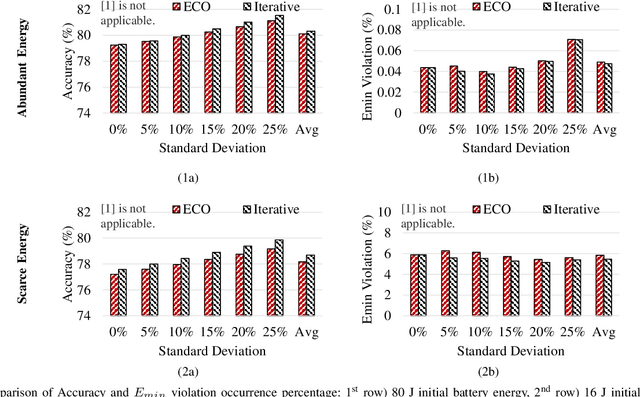

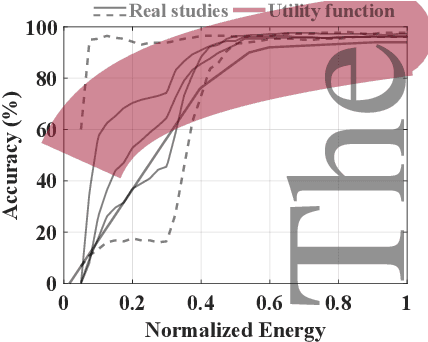

ECO: Enabling Energy-Neutral IoT Devices through Runtime Allocation of Harvested Energy

Feb 26, 2021

Abstract: Energy harvesting offers an attractive and promising mechanism to power low-energy devices. However, it alone is insufficient to enable an energy-neutral operation, which can eliminate tedious battery charging and replacement requirements. Achieving an energy-neutral operation is challenging since the uncertainties in harvested energy undermine the quality of service requirements. To address this challenge, we present a rollout-based runtime energy-allocation framework that optimizes the utility of the target device under energy constraints. The proposed framework uses an efficient iterative algorithm to compute initial energy allocations at the beginning of a day. The initial allocations are then corrected at every interval to compensate for the deviations from the expected energy harvesting pattern. We evaluate this framework using solar and motion energy harvesting modalities and American Time Use Survey data from 4772 different users. Compared to state-of-the-art techniques, the proposed framework achieves 34.6% higher utility even under energy-limited scenarios. Moreover, measurements on a wearable device prototype show that the proposed framework has less than 0.1% energy overhead compared to iterative approaches with a negligible loss in utility.
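The two-stage structure described in the abstract (a plan computed at the start of the day, then a correction at every interval) can be illustrated with a deliberately simplified sketch. This is not the paper's iterative or rollout-based algorithm: the uniform initial split, the even redistribution of deviations, and all function and parameter names are assumptions chosen for illustration, and battery capacity limits are ignored.

```python
def initial_allocation(expected_harvest, battery_start, battery_target):
    """Plan the day so that consumption matches the expected energy budget.

    The budget is the expected harvest plus whatever battery energy may be
    spent while still ending the day at battery_target. This sketch simply
    splits the budget evenly across intervals.
    """
    budget = sum(expected_harvest) + battery_start - battery_target
    n = len(expected_harvest)
    return [max(budget, 0.0) / n] * n

def correct_allocation(allocations, t, expected, actual):
    """After interval t, spread the harvest deviation over later intervals.

    A surplus (actual > expected) raises the remaining allocations; a
    deficit lowers them, never below zero.
    """
    remaining = len(allocations) - (t + 1)
    if remaining == 0:
        return list(allocations)
    share = (actual - expected) / remaining
    head = list(allocations[: t + 1])
    tail = [max(a + share, 0.0) for a in allocations[t + 1:]]
    return head + tail

# Hypothetical three-interval day with energies in arbitrary units.
plan = initial_allocation([1.0, 2.0, 3.0], battery_start=0.0, battery_target=0.0)
plan = correct_allocation(plan, t=0, expected=1.0, actual=3.0)
```

In this example the first interval harvests 2 units more than expected, so each of the two remaining intervals receives 1 extra unit.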

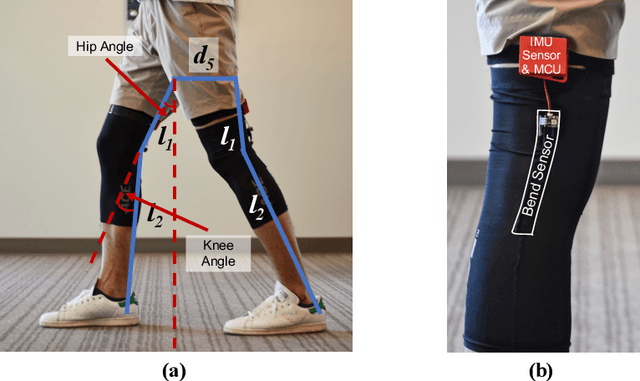

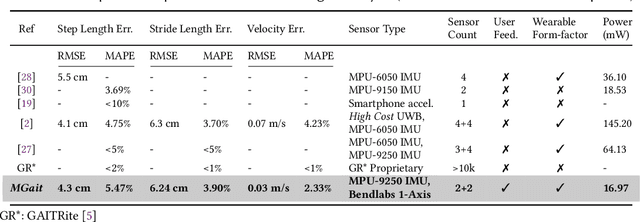

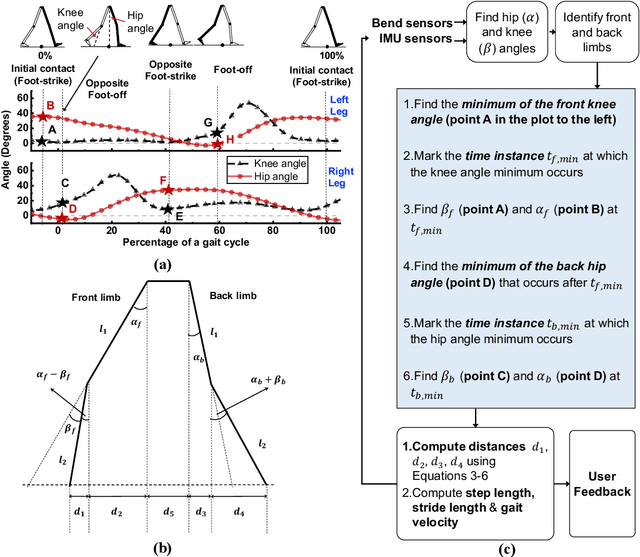

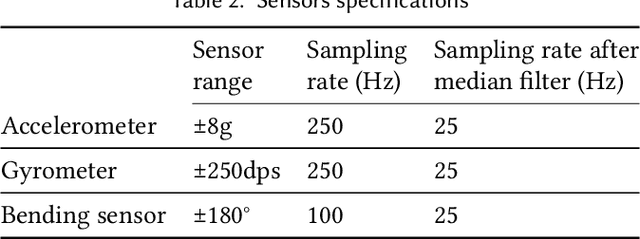

MGait: Model-Based Gait Analysis Using Wearable Bend and Inertial Sensors

Feb 23, 2021

Abstract: Movement disorders, such as Parkinson's disease, affect more than 10 million people worldwide. Gait analysis is a critical step in the diagnosis and rehabilitation of these disorders. Specifically, step length provides valuable insights into the gait quality and rehabilitation process. However, traditional approaches for estimating step length are not suitable for continuous daily monitoring since they rely on special mats and clinical environments. To address this limitation, we present a novel and practical step-length estimation technique using low-power wearable bend and inertial sensors. Experimental results show that the proposed model estimates step length with 5.49% mean absolute percentage error and provides accurate real-time feedback to the user.
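For readers unfamiliar with the reported metric, mean absolute percentage error (MAPE) is the average of the absolute estimation errors, each expressed as a percentage of the true value. The sketch below uses the standard definition; the function name and the sample step lengths are hypothetical and are not data from the paper.

```python
def mean_absolute_percentage_error(true_values, estimates):
    """Return the MAPE in percent; true values must be nonzero."""
    if len(true_values) != len(estimates) or not true_values:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(e - t) / abs(t) for t, e in zip(true_values, estimates))
    return 100.0 * total / len(true_values)

# Hypothetical step lengths in centimeters: each estimate is off by 10%.
error = mean_absolute_percentage_error([100.0, 200.0], [110.0, 180.0])
```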
