Ufuk Soylu

The Art of the Steal: Purloining Deep Learning Models Developed for an Ultrasound Scanner to a Competitor Machine

Jul 03, 2024

Abstract: A transfer function approach has recently proven effective for calibrating deep learning (DL) algorithms in quantitative ultrasound (QUS), addressing data shifts at both the acquisition and machine levels. Expanding on this approach, we develop a strategy to 'steal' the functionality of a DL model from one ultrasound machine and implement it on another, in the context of QUS. This demonstrates the ease with which the functionality of a DL model can be transferred between machines, highlighting the security risks associated with deploying such models in a commercial scanner for clinical use. The proposed method is a black-box unsupervised domain adaptation technique that integrates the transfer function approach with an iterative schema. It uses no information about the internals of the model on the victim machine; it relies solely on the availability of the input-output interface. Additionally, we assume the availability of unlabelled data from the testing machine, i.e., the perpetrator machine. This scenario could become commonplace as companies begin deploying their DL functionalities for clinical use. Competing companies might acquire the victim machine and, through the input-output interface, replicate the functionality on their own machines. In the experiments, we used a SonixOne and a Verasonics machine. The victim model was trained on SonixOne data, and its functionality was then transferred to the Verasonics machine. The proposed method successfully transferred the functionality to the Verasonics machine, achieving 98% classification accuracy in a binary decision task. This study underscores the need to establish security measures before deploying DL models in clinical settings.
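To make the threat model concrete, the sketch below shows a generic black-box extraction loop: the perpetrator never sees the victim model's weights, only the labels it returns. This is not the authors' method (their iterative schema is not reproduced here); `victim_predict` and `to_victim_domain` are hypothetical stand-ins for the victim scanner's classifier and for a transfer function mapping perpetrator-machine data into the victim machine's domain, and a nearest-centroid classifier stands in for the perpetrator's DL model.

```python
import numpy as np

def steal_labels(victim_predict, unlabelled, to_victim_domain):
    """Label the perpetrator's data using only the victim's input-output interface.

    victim_predict and to_victim_domain are hypothetical callables (assumptions).
    """
    mapped = to_victim_domain(unlabelled)          # move data into the victim's domain
    return np.asarray([victim_predict(x) for x in mapped])

def fit_surrogate(x, y):
    """Nearest-centroid stand-in for the perpetrator's own model, trained on its own data."""
    classes = np.unique(y)
    centroids = np.stack([x[y == c].mean(axis=0) for c in classes])

    def predict(q):
        d = np.linalg.norm(q[:, None, :] - centroids[None, :, :], axis=-1)
        return classes[d.argmin(axis=1)]

    return predict

# Toy demo: two clusters of perpetrator-machine features, victim is a simple threshold.
rng = np.random.default_rng(1)
x_perp = np.concatenate([rng.normal(-3, 1, (100, 2)), rng.normal(3, 1, (100, 2))])
victim = lambda v: int(v[0] > 0)                  # hypothetical black-box victim
labels = steal_labels(victim, x_perp, to_victim_domain=lambda x: x)  # identity mapping for the demo
surrogate = fit_surrogate(x_perp, labels)
agreement = np.mean(surrogate(x_perp) == labels)
```

The surrogate is trained on the perpetrator's own (unmapped) data, because the goal is a model that runs natively on the perpetrator machine.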

Machine-to-Machine Transfer Function in Deep Learning-Based Quantitative Ultrasound

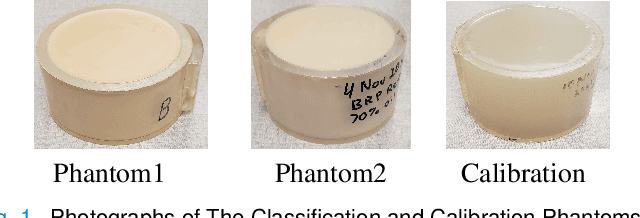

Nov 27, 2023

Abstract: A transfer function approach was recently demonstrated to mitigate data mismatches at the acquisition level for a single ultrasound scanner in deep learning (DL) based quantitative ultrasound (QUS). As a natural progression, we further investigate the transfer function approach and introduce a Machine-to-Machine (M2M) Transfer Function, which can mitigate data mismatches at the machine level, i.e., mismatches between two scanners over the same frequency band. This ability opens the door to unprecedented opportunities for reducing DL model development costs, enabling the combination of data from multiple sources or scanners, and facilitating the transfer of DL models between machines with ease. We tested the proposed method using a SonixOne machine and a Verasonics machine. In the experiments, we used an L9-4 array and conducted two types of acquisitions to obtain calibration data, stable and free-hand, using two different calibration phantoms. Without the proposed calibration method, the mean classification accuracy when applying a model trained on data from one system to data acquired on another system was approximately 50%, and the mean AUC was about 0.40. With the proposed method, mean accuracy increased to approximately 90%, and the mean AUC rose to 0.99. We also observed that shifts in the statistics used for z-score normalization had a significant impact on performance. Furthermore, the choice of calibration phantom played an important role in the proposed method. Finally, a robust implementation inspired by Wiener filtering provided an effective means of transferring the domain from one machine to another, and it can succeed using just a single calibration view, without the need for multiple independent calibration frames.
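As an illustration of what a Wiener-inspired, machine-to-machine transfer function could look like, here is a minimal sketch under stated assumptions: calibration RF lines from the same phantom on each machine share length and sampling rate, only the magnitude response is corrected (zero phase), and the regularizer `reg` is a hypothetical parameter. Where the source spectrum is strong the estimate reduces to the plain ratio `sqrt(p_tgt / p_src)`; where it is weak the regularizer pulls the gain toward zero instead of amplifying noise, which is the Wiener-style behavior.

```python
import numpy as np

def estimate_transfer_function(calib_src, calib_tgt, reg=1e-6):
    """Regularized magnitude transfer function from source-machine to target-machine spectra.

    calib_src, calib_tgt: arrays (n_lines, n_samples) of calibration RF lines.
    reg is a hypothetical regularization level relative to the mean source power.
    """
    p_src = np.mean(np.abs(np.fft.rfft(calib_src, axis=-1)) ** 2, axis=0)
    p_tgt = np.mean(np.abs(np.fft.rfft(calib_tgt, axis=-1)) ** 2, axis=0)
    lam = reg * p_src.mean()
    # Equals sqrt(p_tgt / p_src) when p_src >> lam; tends to 0 where p_src is tiny.
    return np.sqrt(p_tgt * p_src) / (p_src + lam)

def apply_transfer_function(rf_lines, h):
    """Filter RF lines (last axis = fast time) by a real, zero-phase gain h."""
    n = rf_lines.shape[-1]
    return np.fft.irfft(np.fft.rfft(rf_lines, axis=-1) * h, n=n, axis=-1)

# Synthetic check: the "target machine" is the source filtered by a known gain.
rng = np.random.default_rng(0)
n = 256
f = np.fft.rfftfreq(n)                                # cycles per sample
gain = 0.5 + np.exp(-((f - 0.2) / 0.05) ** 2)         # hypothetical machine response ratio
src = rng.standard_normal((200, n))
tgt = apply_transfer_function(src, gain)
h = estimate_transfer_function(src, tgt)
```

Because the estimate uses averaged power spectra rather than a cross-spectrum, the two machines do not need to image the same speckle realization, only the same phantom.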

Calibrating Data Mismatches in Deep Learning-Based Quantitative Ultrasound Using Setting Transfer Functions

Oct 04, 2022

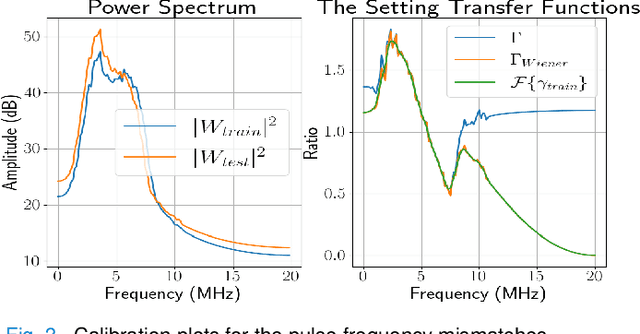

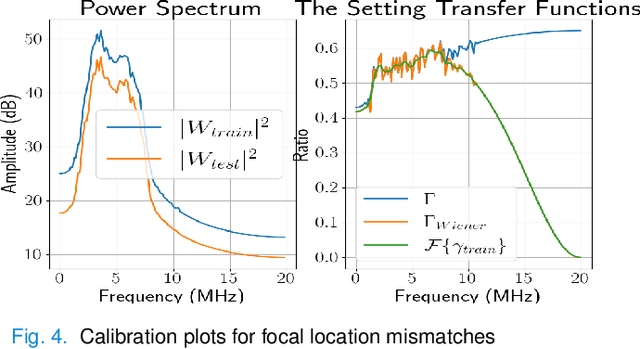

Abstract: Deep learning (DL) can fail when there are data mismatches between training and testing data. Because ultrasound imaging is operator dependent, acquisition-related data mismatches caused by different scanner settings can occur. Therefore, mitigating the effects of such data mismatches is essential for wider clinical adoption of DL-powered ultrasound imaging. Ideally, we would collect a large training set at each scanner setting; however, acquiring such training sets is expensive. Another approach could be training on a subset of imaging settings, which makes data generation less expensive, but generalization issues remain. As an alternative approach that is inexpensive and generalizable, we propose to collect a large training set at a single setting and a small calibration set at each scanner setting. The calibration set is then used to calibrate data mismatches from a signals and systems perspective. We tested the proposed solution on the task of classifying two phantoms. To investigate the generalizability of the proposed solution, we calibrated three types of data mismatches: pulse frequency, focus and output power mismatches. To calibrate the setting mismatches, we calculated the setting transfer functions. Without calibration, the CNN achieved mean classification accuracies of 55.3%, 64.4% and 70.3% for pulse frequency, focus and output power mismatches, respectively. By using the setting transfer functions, which allowed a matching of the training and testing domains, we obtained mean accuracies of 95.3%, 92.99% and 99.32%, respectively. Therefore, incorporating setting transfer functions between scanner settings can provide an economical means of generalizing a DL model for specific classification tasks where scanner settings are not fixed by the operator.
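The signals-and-systems reasoning can be summarized in a short derivation. This is one plausible formulation, not necessarily the paper's exact one: it assumes each scanner setting acts as a linear, time-invariant filter $H(f)$ on a common tissue or phantom response $T(f)$, and that the calibration data at both settings come from the same phantom, so its response cancels in the ratio. The symbols are ours.

```latex
\begin{aligned}
Y_{\text{train}}(f) &= H_{\text{train}}(f)\,T(f), \qquad Y_{\text{test}}(f) = H_{\text{test}}(f)\,T(f),\\
H_{\text{test}\to\text{train}}(f) &= \frac{H_{\text{train}}(f)}{H_{\text{test}}(f)}
  = \frac{Y^{\text{cal}}_{\text{train}}(f)}{Y^{\text{cal}}_{\text{test}}(f)},\\
H_{\text{test}\to\text{train}}(f)\,Y_{\text{test}}(f) &= H_{\text{train}}(f)\,T(f).
\end{aligned}
```

The last line is the point of the calibration: multiplying test-setting data by the setting transfer function yields what the training setting would have recorded for the same tissue, so the CNN sees data from its training domain.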

A Data-Efficient Deep Learning Training Strategy for Biomedical Ultrasound Imaging: Zone Training

Feb 01, 2022

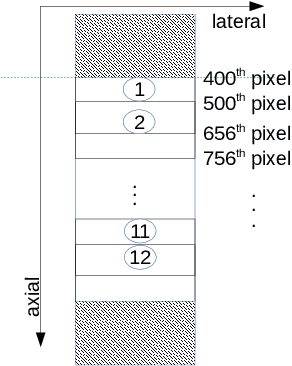

Abstract: Deep learning (DL) powered biomedical ultrasound imaging is an emerging research field in which researchers adapt the image analysis capabilities of DL algorithms to biomedical ultrasound imaging settings. A major roadblock to wider adoption of DL-powered biomedical ultrasound imaging is that acquiring the large and diverse datasets required for successful DL implementation is expensive in clinical settings. Hence, there is a constant need for data-efficient DL techniques to turn DL-powered biomedical ultrasound imaging into reality. In this work, we develop a data-efficient deep learning training strategy, which we named *Zone Training*. In *Zone Training*, we propose to divide the complete field of view of an ultrasound image into multiple zones associated with different regions of a diffraction pattern and then train separate DL networks for each zone. The main advantage of *Zone Training* is that it requires less training data to achieve high accuracy. In this work, three different tissue-mimicking phantoms were classified by a DL network. The results demonstrated that *Zone Training* required a factor of 2-5 less training data to achieve similar classification accuracies compared to a conventional training strategy.
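The routing logic behind *Zone Training* can be sketched in a few lines: assign each patch to a depth zone, train one model per zone, and send each test patch to its zone's model. This is a sketch under assumptions: zones are defined here by hypothetical depth boundaries in mm (the paper ties them to regions of the diffraction pattern), labels are integers, and a nearest-centroid classifier stands in for the per-zone DL networks.

```python
import numpy as np
from bisect import bisect_right

def zone_index(depth_mm, boundaries_mm):
    """Zone of a patch given sorted interior zone boundaries (hypothetical, in mm)."""
    return bisect_right(boundaries_mm, depth_mm)

class NearestCentroid:
    """Tiny stand-in for a per-zone DL network."""
    def fit(self, x, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([x[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, x):
        d = np.linalg.norm(x[:, None, :] - self.centroids_[None, :, :], axis=-1)
        return self.classes_[d.argmin(axis=1)]

def train_zones(features, labels, depths_mm, boundaries_mm, make_model=NearestCentroid):
    """Train one separate model on the patches of each zone."""
    zones = np.array([zone_index(d, boundaries_mm) for d in depths_mm])
    return {z: make_model().fit(features[zones == z], labels[zones == z])
            for z in np.unique(zones)}

def predict_zones(models, features, depths_mm, boundaries_mm):
    """Route each patch to its zone's model; raises KeyError for an untrained zone."""
    zones = np.array([zone_index(d, boundaries_mm) for d in depths_mm])
    out = np.empty(len(features), dtype=next(iter(models.values())).classes_.dtype)
    for z in np.unique(zones):
        sel = zones == z
        out[sel] = models[z].predict(features[sel])
    return out

# Synthetic demo: the feature's class separation is shifted differently in each zone.
rng = np.random.default_rng(0)
boundaries = [20.0, 40.0]
depths = rng.uniform(0.0, 60.0, 600)
labels = rng.integers(0, 2, 600)
zones = np.array([zone_index(d, boundaries) for d in depths])
features = (4.0 * labels + 5.0 * zones + rng.normal(0.0, 1.0, 600))[:, None]
models = train_zones(features, labels, depths, boundaries)
accuracy = np.mean(predict_zones(models, features, depths, boundaries) == labels)
```

Each model only has to learn the appearance of its own zone, which is the intuition for why less training data can suffice.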

Circumventing the resolution-time tradeoff in Ultrasound Localization Microscopy by Velocity Filtering

Jan 23, 2021

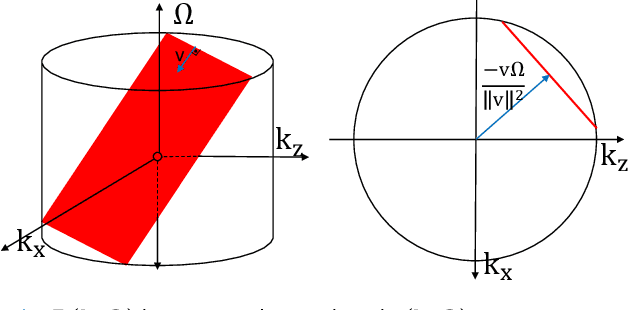

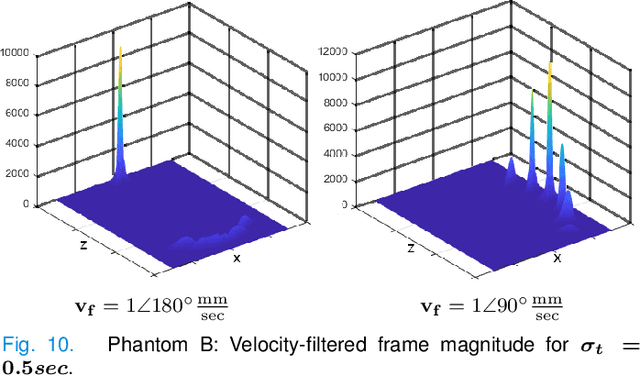

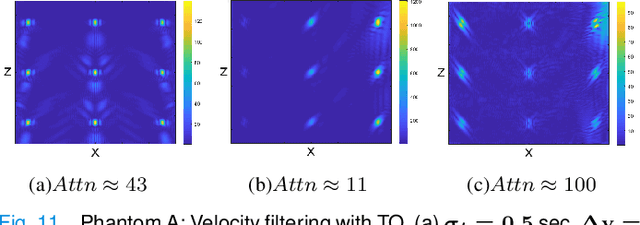

Abstract: Ultrasound Localization Microscopy (ULM) offers a cost-effective modality for microvascular imaging by using intravascular contrast agents (microbubbles). However, ULM has a fundamental trade-off between acquisition time and spatial resolution, which makes clinical translation challenging. In this paper, to circumvent the trade-off, we introduce a spatiotemporal filtering operation dubbed velocity filtering, which is capable of separating contrast agents into different groups based on their vector velocities, thus reducing interference in the localization step, while simultaneously offering blood velocity mapping at super resolution without tracking individual microbubbles. As a side benefit, the velocity filter provides noise suppression before microbubble localization that could substantially increase penetration depth in tissue, typically by 4 cm or more. We provide a theoretical analysis of the performance of the velocity filter. Numerical experiments confirm that the proposed velocity filter is able to separate the microbubbles with respect to the speed and direction of their motion. In combination with subsequent localization of microbubble centers, e.g., by matched filtering, the velocity filter significantly improves the quality of the reconstructed vasculature and provides blood flow information. Overall, the proposed imaging pipeline enables the use of higher concentrations of microbubbles while preserving spatial resolution, thus helping circumvent the trade-off between acquisition time and spatial resolution. Conveniently, because the velocity filtering operation can be implemented by fast Fourier transforms (FFTs), it admits a fast, potentially real-time, realization. We believe that the proposed velocity filtering method has the potential to pave the way to clinical translation of ULM.
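The FFT-based idea can be illustrated on a simplified case: in a (time, space) slice, a pattern moving as s(x - v t) has all its spectral energy on the line f = -v k, so masking the 2-D spectrum by the apparent velocity -f/k separates scatterers by speed and direction. This is a minimal 1-D sketch, not the paper's filter, which operates on vector velocities in full spatiotemporal data; the hard band mask and the parameters `v_min`/`v_max` are assumptions, and the zero-spatial-frequency column is simply dropped because it carries no velocity information.

```python
import numpy as np

def velocity_filter(data, dt, dx, v_min, v_max):
    """Keep spectral content of a (time, space) array whose velocity -f/k is in [v_min, v_max]."""
    spec = np.fft.fft2(data)
    f = np.fft.fftfreq(data.shape[0], d=dt)[:, None]   # temporal frequency
    k = np.fft.fftfreq(data.shape[1], d=dx)[None, :]   # spatial frequency
    safe_k = np.where(k != 0, k, 1.0)                   # avoid division by zero at k = 0
    v = -f / safe_k                                     # s(x - v t) lies on f = -v k
    mask = (k != 0) & (v >= v_min) & (v <= v_max)
    return np.real(np.fft.ifft2(spec * mask))

# Demo: two Gaussian "microbubbles" moving in opposite directions, overlapping in space-time.
n = 64
x = np.arange(n)
pulse = np.exp(-0.5 * ((x - n / 2) / 3.0) ** 2)
right = np.stack([np.roll(pulse, t) for t in range(n)])   # +1 pixel per frame
left = np.stack([np.roll(pulse, -t) for t in range(n)])   # -1 pixel per frame
sep = velocity_filter(right + left, dt=1.0, dx=1.0, v_min=0.5, v_max=1.5)
```

Here `sep` recovers the right-moving bubble (minus its mean, removed with the k = 0 column) and suppresses the left-moving one; the whole operation costs two FFTs and an elementwise mask.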

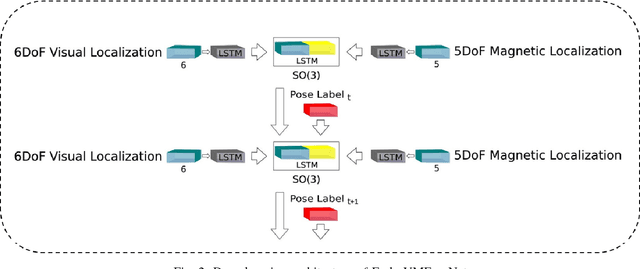

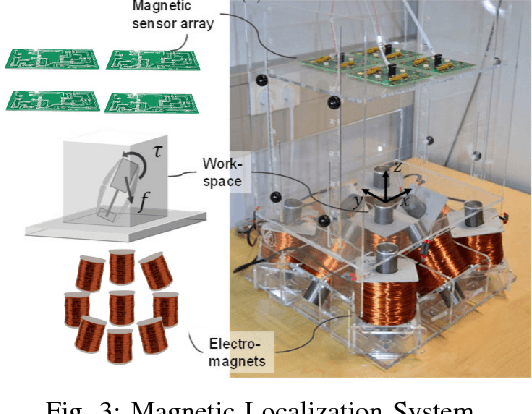

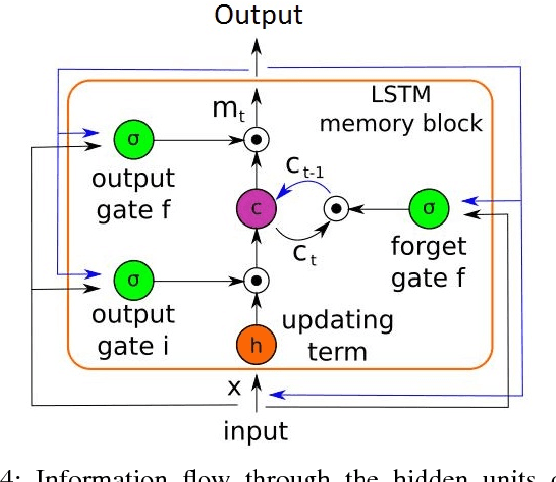

Endo-VMFuseNet: Deep Visual-Magnetic Sensor Fusion Approach for Uncalibrated, Unsynchronized and Asymmetric Endoscopic Capsule Robot Localization Data

Sep 22, 2017

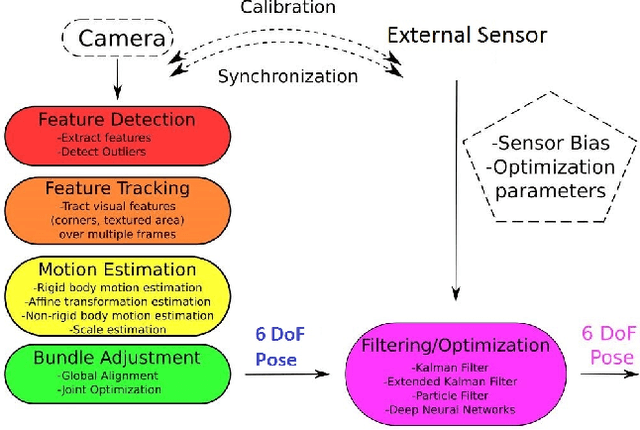

Abstract: In the last decade, researchers and medical device companies have made major advances towards transforming passive capsule endoscopes into active medical robots. One of the major challenges is to endow capsule robots with accurate perception of the environment inside the human body, which will provide necessary information and enable improved medical procedures. We extend the success of deep learning approaches from various research fields to the problem of uncalibrated, asynchronous, and asymmetric sensor fusion for endoscopic capsule robots. Results on real pig stomach datasets show that our method achieves sub-millimeter precision for both translational and rotational movements and offers various advantages over traditional sensor fusion techniques.
