Torsten Schön

Advancing Robustness in Deep Reinforcement Learning with an Ensemble Defense Approach

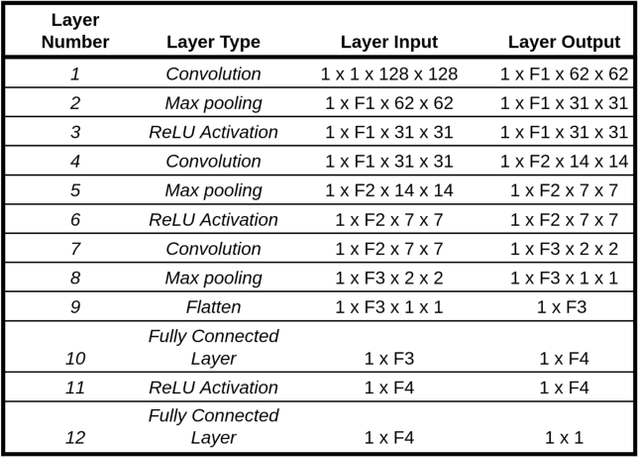

Jul 22, 2025

Abstract: Recent advancements in Deep Reinforcement Learning (DRL) have demonstrated its applicability across various domains, including robotics, healthcare, energy optimization, and autonomous driving. However, a critical question remains: How robust are DRL models when exposed to adversarial attacks? While existing defense mechanisms such as adversarial training and distillation enhance the resilience of DRL models, there remains a significant research gap regarding the integration of multiple defenses, specifically in autonomous driving scenarios. This paper addresses this gap by proposing a novel ensemble-based defense architecture to mitigate adversarial attacks in autonomous driving. Our evaluation demonstrates that the proposed architecture significantly enhances the robustness of DRL models. Compared to the baseline under FGSM attacks, our ensemble method improves the mean reward from 5.87 to 18.38 (an increase of over 213%) and reduces the mean collision rate from 0.50 to 0.09 (an 82% decrease) in the highway and merge scenarios, outperforming all standalone defense strategies.

Machine Learning Models for Soil Parameter Prediction Based on Satellite, Weather, Clay and Yield Data

Mar 28, 2025

Abstract: Efficient nutrient management and precise fertilization are essential for advancing modern agriculture, particularly in regions striving to optimize crop yields sustainably. The AgroLens project endeavors to address this challenge by developing Machine Learning (ML)-based methodologies to predict soil nutrient levels without reliance on laboratory tests. By leveraging state-of-the-art techniques, the project lays a foundation for actionable insights to improve agricultural productivity in resource-constrained areas, such as Africa. The approach begins with the development of a robust European model using the LUCAS Soil dataset and Sentinel-2 satellite imagery to estimate key soil properties, including phosphorus, potassium, nitrogen, and pH levels. This model is then enhanced by integrating supplementary features, such as weather data, harvest rates, and Clay AI-generated embeddings. This report details the methodological framework, data preprocessing strategies, and ML pipelines employed in this project. Advanced algorithms, including Random Forests, Extreme Gradient Boosting (XGBoost), and Fully Connected Neural Networks (FCNN), were implemented and fine-tuned for precise nutrient prediction. Results showcase robust model performance, with root mean square error values meeting stringent accuracy thresholds. By establishing a reproducible and scalable pipeline for soil nutrient prediction, this research paves the way for transformative agricultural applications, including precision fertilization and improved resource allocation in under-resourced regions like Africa.

Gaussian-Based and Outside-the-Box Runtime Monitoring Join Forces

Oct 08, 2024

Abstract: Since neural networks can make wrong predictions even with high confidence, monitoring their behavior at runtime is important, especially in safety-critical domains like autonomous driving. In this paper, we combine ideas from previous monitoring approaches based on observing the activation values of hidden neurons. In particular, we combine the Gaussian-based approach, which observes whether the current value of each monitored neuron is similar to typical values observed during training, and the Outside-the-Box monitor, which creates clusters of the acceptable activation values, and, thus, considers the correlations of the neurons' values. Our experiments evaluate the achieved improvement.
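The two monitoring ideas named in this abstract can be illustrated with a minimal pure-Python sketch. It rests on several assumptions that are not taken from the paper: the Gaussian check is approximated as a per-neuron "within `k` standard deviations" test, the clusters are given by hand instead of being computed by a clustering algorithm, and the two monitors are combined by simple conjunction. All function names are hypothetical.

```python
from statistics import mean, pstdev


def fit_gaussian(activations):
    """Per-neuron (mean, std) over the training activation vectors."""
    columns = list(zip(*activations))
    return [(mean(c), pstdev(c)) for c in columns]


def gaussian_accepts(stats, x, k=3.0):
    """Accept x if each neuron lies within k std of its training mean."""
    return all(abs(v - m) <= k * s for v, (m, s) in zip(x, stats))


def fit_box(cluster):
    """Axis-aligned bounding box (min, max per neuron) around one cluster."""
    columns = list(zip(*cluster))
    return [(min(c), max(c)) for c in columns]


def boxes_accept(boxes, x):
    """Accept x if it falls inside at least one cluster box."""
    return any(all(lo <= v <= hi for v, (lo, hi) in zip(x, box)) for box in boxes)


def combined_accepts(stats, boxes, x, k=3.0):
    """Assumed combination rule: both monitors must accept."""
    return gaussian_accepts(stats, x, k) and boxes_accept(boxes, x)


# Toy training activations of two neurons, already grouped into two clusters.
clusters = [[(0.0, 0.0), (1.0, 1.0)], [(4.0, 4.0), (5.0, 5.0)]]
training = [x for cluster in clusters for x in cluster]
stats = fit_gaussian(training)
boxes = [fit_box(c) for c in clusters]

for x in [(0.5, 0.5), (0.5, 4.5), (20.0, 0.5)]:
    print(x, gaussian_accepts(stats, x), boxes_accept(boxes, x),
          combined_accepts(stats, boxes, x))
```

In this toy data, `(0.5, 4.5)` passes the per-neuron Gaussian check because each value on its own is typical, but it is rejected by the boxes because that combination of values never occurred together in training. This is the kind of correlation the box-based monitor is described as capturing.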

Unlocking Past Information: Temporal Embeddings in Cooperative Bird's Eye View Prediction

Jan 25, 2024

Abstract: Accurate and comprehensive semantic segmentation of Bird's Eye View (BEV) is essential for ensuring safe and proactive navigation in autonomous driving. Although cooperative perception has exceeded the detection capabilities of single-agent systems, prevalent camera-based algorithms in cooperative perception neglect valuable information derived from historical observations. This limitation becomes critical during sensor failures or communication issues as cooperative perception reverts to single-agent perception, leading to degraded performance and incomplete BEV segmentation maps. This paper introduces TempCoBEV, a temporal module designed to incorporate historical cues into current observations, thereby improving the quality and reliability of BEV map segmentations. We propose an importance-guided attention architecture to effectively integrate temporal information that prioritizes relevant properties for BEV map segmentation. TempCoBEV is an independent temporal module that seamlessly integrates into state-of-the-art camera-based cooperative perception models. We demonstrate through extensive experiments on the OPV2V dataset that TempCoBEV performs better than non-temporal models in predicting current and future BEV map segmentations, particularly in scenarios involving communication failures. We show the efficacy of TempCoBEV and its capability to integrate historical cues into the current BEV map, improving predictions under optimal communication conditions by up to 2% and under communication failures by up to 19%. The code will be published on GitHub.

Generation of Realistic Synthetic Raw Radar Data for Automated Driving Applications using Generative Adversarial Networks

Aug 08, 2023

Abstract: The main approaches for simulating FMCW radar are based on ray tracing, which is usually computationally intensive and does not account for background noise. This work proposes a faster method for FMCW radar simulation capable of generating synthetic raw radar data using generative adversarial networks (GAN). The code and pre-trained weights are open-source and available on GitHub. This method generates 16 simultaneous chirps, which allows the generated data to be used for the further development of algorithms for processing radar data (filtering and clustering). This can increase the potential for data augmentation, e.g., by generating data in non-existent or safety-critical scenarios that are not reproducible in real life. In this work, the GAN was trained with radar measurements of a motorcycle and used to generate synthetic raw radar data of a motorcycle traveling in a straight line. For generating this data, the distance of the motorcycle and Gaussian noise are used as input to the neural network. The synthetically generated radar chirps were evaluated using the Fréchet Inception Distance (FID). Then, the Range-Azimuth (RA) map is calculated twice: first, based on synthetic data using this GAN and, second, based on real data. Based on these RA maps, an algorithm with adaptive threshold and edge detection is used for object detection. The results have shown that the data is realistic in terms of coherent radar reflections of the motorcycle and background noise, based on the comparison of chirps, the RA maps, and the object detection results. Thus, the proposed method has been shown to minimize the simulation-to-reality gap for the generation of radar data.

VolNet: Estimating Human Body Part Volumes from a Single RGB Image

Jul 05, 2021

Abstract: Human body volume estimation from a single RGB image is a challenging problem that has received minimal attention from the research community. However, VolNet, an architecture leveraging 2D and 3D pose estimation, body part segmentation, and volume regression extracted from a single 2D RGB image combined with the subject's body height, can be used to estimate the total body volume. VolNet is designed to predict the 2D and 3D pose as well as the body part segmentation in intermediate tasks. We generated SURREALvols, a synthetic, large-scale dataset of photo-realistic images of human bodies with a wide range of body shapes and realistic poses. By using VolNet and combining multiple stacked hourglass networks together with ResNeXt, our model correctly predicted the volume in ~82% of cases with a 10% tolerance threshold. This is a considerable improvement compared to state-of-the-art solutions such as BodyNet, which achieves only a ~38% success rate.
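A success rate "with a 10% tolerance threshold" can be read as the fraction of predictions whose error stays within 10% of the reference value. The sketch below shows one way to compute such a metric. It assumes the tolerance is relative to the ground-truth volume, which the abstract does not spell out; the function name and the sample numbers are made up for illustration and are not results from the paper.

```python
def success_rate(predicted, actual, tolerance=0.10):
    """Fraction of predictions within `tolerance` (relative) of the true value."""
    hits = sum(abs(p - a) <= tolerance * a for p, a in zip(predicted, actual))
    return hits / len(actual)


# Made-up body volumes in litres, purely illustrative.
actual = [70.0, 80.0, 65.0, 90.0]
predicted = [72.0, 90.0, 64.0, 99.5]

print(f"success rate at 10% tolerance: {success_rate(predicted, actual):.0%}")
```

Here two of the four predictions (72.0 vs. 70.0 and 64.0 vs. 65.0) fall within 10% of their reference values, so the rate is 50%.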

Temporally coherent video anonymization through GAN inpainting

Jun 04, 2021

Abstract: This work tackles the problem of temporally coherent face anonymization in natural video streams. We propose JaGAN, a two-stage system that starts by detecting faces and masking them out with black image patches in all individual frames of the video. The second stage leverages a privacy-preserving Video Generative Adversarial Network designed to inpaint the missing image patches with artificially generated faces. Our initial experiments reveal that image-based generative models are not capable of inpainting patches that show a temporally coherent appearance across neighboring video frames. To address this issue, we introduce a newly curated video collection, which is made publicly available for the research community along with this paper. We also introduce the Identity Invariance Score IdI as a means to quantify temporal coherency between neighboring frames.

Vision-Based Mobile Robotics Obstacle Avoidance With Deep Reinforcement Learning

Mar 08, 2021

Abstract: Obstacle avoidance is a fundamental and challenging problem for autonomous navigation of mobile robots. In this paper, we consider the problem of obstacle avoidance in simple 3D environments where the robot has to rely solely on a single monocular camera. In particular, we are interested in solving this problem without relying on localization, mapping, or planning techniques. Most of the existing work considers obstacle avoidance as two separate problems, namely obstacle detection and control. Inspired by the recent advances of deep reinforcement learning in Atari games and in understanding highly complex situations in Go, we tackle the obstacle avoidance problem with a data-driven end-to-end deep learning approach. Our approach takes raw images as input and generates control commands as output. We show that discrete action spaces outperform continuous control commands in terms of expected average reward in maze-like environments. Furthermore, we show how to accelerate the learning and increase the robustness of the policy by incorporating depth maps predicted by a generative adversarial network.

Towards Self-Supervised High Level Sensor Fusion

Feb 12, 2019

Abstract: In this paper, we present a framework to control a self-driving car by fusing raw information from RGB images and depth maps. A deep neural network architecture is used for mapping the vision and depth information, respectively, to steering commands. This fusion of information from two sensor sources provides redundancy and fault tolerance in the presence of sensor failures. Even if one of the input sensors fails to produce the correct output, the other functioning sensor would still be able to maneuver the car. Such redundancy is crucial in the critical application of self-driving cars. The experimental results show that our method is capable of learning to use the relevant sensor information even when one of the sensors fails, without any explicit signal.

Semantic Label Reduction Techniques for Autonomous Driving

Feb 11, 2019

Abstract: Semantic segmentation maps can be used as input to models for maneuvering the controls of a car. However, not all labels may be necessary for making the control decision. One would expect that certain labels, such as road lanes or sidewalks, would be more critical than labels for vegetation or buildings, which may not have a direct influence on the car's driving decision. In this appendix, we evaluate and quantify how sensitive and important the different semantic labels are for controlling the car. Labels that do not influence the driving decision are remapped to other classes, thereby simplifying the task by reducing the label set to only those labels critical for driving the vehicle.
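The remapping step described in this abstract can be sketched as a lookup applied to every pixel of a label map. The label names and the choice of which labels to merge below are hypothetical placeholders; the abstract only gives vegetation and buildings as examples of labels expected to matter less and does not state the final mapping.

```python
# Hypothetical mapping: labels assumed not to influence driving are merged
# into a single "background" class; all other labels are kept unchanged.
REMAP = {"vegetation": "background", "building": "background"}


def reduce_labels(label_map, remap):
    """Return a copy of a 2D label map with remapped classes."""
    return [[remap.get(label, label) for label in row] for row in label_map]


segmentation = [
    ["building", "vegetation", "sidewalk"],
    ["road_lane", "road_lane", "sidewalk"],
]

reduced = reduce_labels(segmentation, REMAP)
for row in reduced:
    print(row)
```

After the remapping, the toy map contains three distinct classes (`background`, `sidewalk`, `road_lane`) instead of four.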
