Tomi Peltola

Interactive AI with a Theory of Mind

Dec 01, 2019

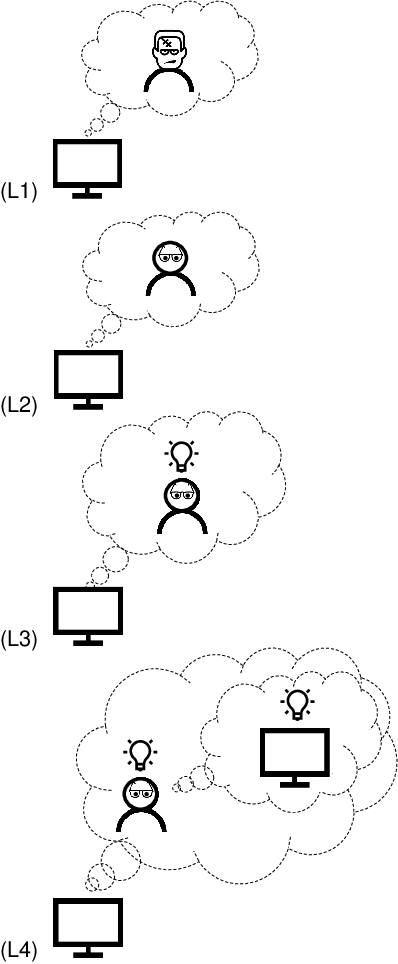

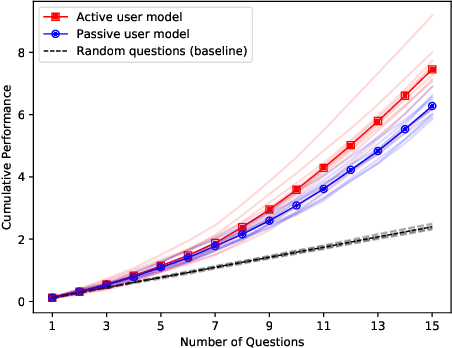

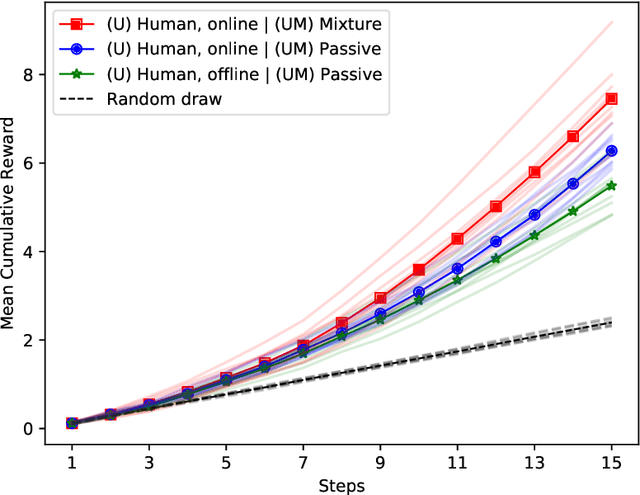

Abstract: Understanding each other is the key to success in collaboration. For humans, attributing mental states to others, the theory of mind, provides the crucial advantage. We argue for formulating human-AI interaction as a multi-agent problem, endowing AI with a computational theory of mind to understand and anticipate the user. To differentiate the approach from previous work, we introduce a categorisation of user modelling approaches based on the level of agency learnt in the interaction. We describe our recent work in using nested multi-agent modelling to formulate user models for multi-armed bandit based interactive AI systems, including a proof-of-concept user study.

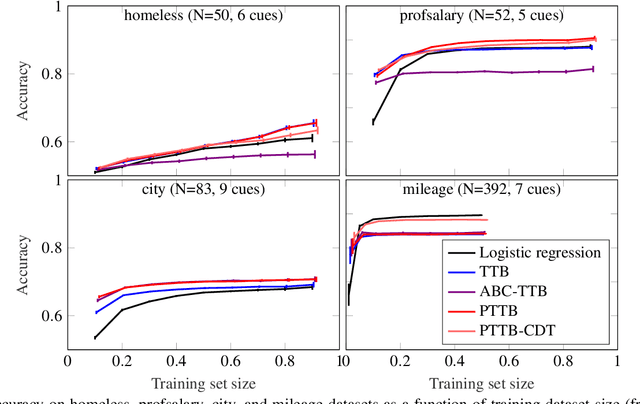

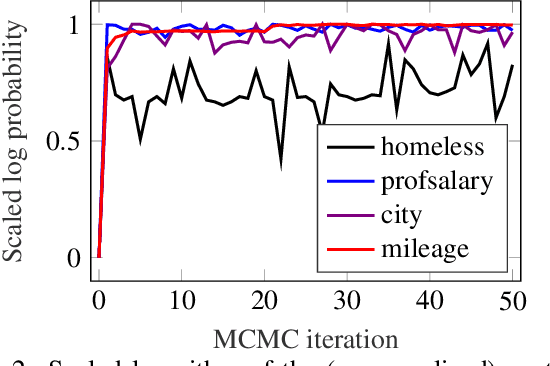

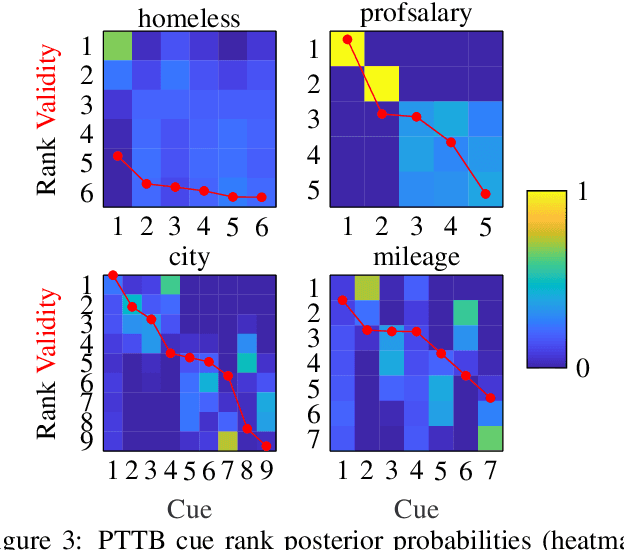

Probabilistic Formulation of the Take The Best Heuristic

Nov 01, 2019

Abstract: The framework of cognitively bounded rationality treats problem solving as fundamentally rational, but emphasises that it is constrained by cognitive architecture and the task environment. This paper investigates a simple decision making heuristic, Take The Best (TTB), within that framework. We formulate TTB as a likelihood-based probabilistic model, where the decision strategy arises by probabilistic inference based on the training data and the model constraints. The strengths of the probabilistic formulation, in addition to providing a bounded rational account of the learning of the heuristic, include natural extensibility with additional cognitively plausible constraints and prior information, and the possibility to embed the heuristic as a subpart of a larger probabilistic model. We extend the model to learn cue discrimination thresholds for continuous-valued cues and experiment with using the model to account for biased preference feedback from a boundedly rational agent in a simulated interactive machine learning task.
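For readers unfamiliar with the heuristic, the following is a minimal sketch of the classic deterministic Take The Best rule for binary cues, not the probabilistic formulation the abstract describes. The function name `take_the_best`, the example cue values, and the cue ordering are hypothetical and chosen only for illustration.

```python
from typing import Optional, Sequence


def take_the_best(
    cues_a: Sequence[int],
    cues_b: Sequence[int],
    cue_order: Sequence[int],
) -> Optional[str]:
    """Classic deterministic TTB for two options with binary cues.

    cue_order lists cue indices from most to least valid (assumed given).
    Returns "a" or "b" for the chosen option, or None if no cue
    discriminates, in which case the decision maker would have to guess.
    """
    for idx in cue_order:
        # Stop at the first cue on which the two options differ.
        if cues_a[idx] != cues_b[idx]:
            return "a" if cues_a[idx] > cues_b[idx] else "b"
    return None


# Hypothetical example: three binary cues, inspected in the order 0, 2, 1.
option_a = [1, 0, 1]
option_b = [1, 1, 0]
choice = take_the_best(option_a, option_b, cue_order=[0, 2, 1])
print(choice)
```

In this example cue 0 does not discriminate, so the decision falls to cue 2, which favours option `a`; cue 1 is never inspected.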

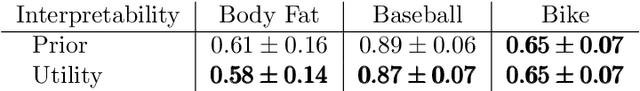

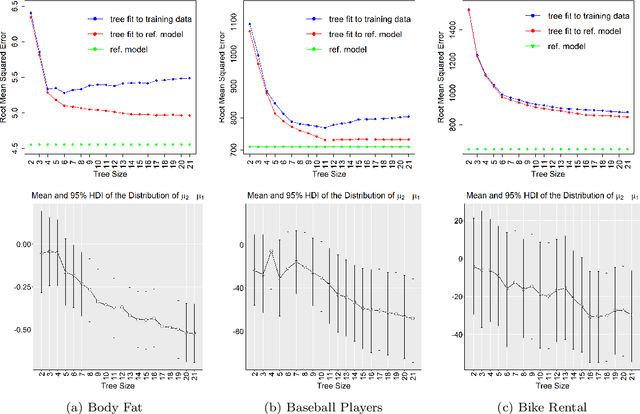

Making Bayesian Predictive Models Interpretable: A Decision Theoretic Approach

Oct 21, 2019

Abstract: A salient approach to interpretable machine learning is to restrict modeling to simple and hence understandable models. In the Bayesian framework, this can be pursued by restricting the model structure and prior to favor interpretable models. Fundamentally, however, interpretability is about users' preferences, not the data generation mechanism: it is more natural to formulate interpretability as a utility function. In this work, we propose an interpretability utility, which explicates the trade-off between explanation fidelity and interpretability in the Bayesian framework. The method consists of two steps. First, a reference model, possibly a black-box Bayesian predictive model compromising no accuracy, is constructed and fitted to the training data. Second, a proxy model from an interpretable model family that best mimics the predictive behaviour of the reference model is found by optimizing the interpretability utility function. The approach is model agnostic: neither the interpretable model nor the reference model is restricted to a certain class of models, and the optimization problem can be solved using standard tools in the chosen model family. Through experiments on real-world data sets using decision trees as interpretable models and Bayesian additive regression models as reference models, we show that for the same level of interpretability, our approach generates more accurate models than the earlier alternative of restricting the prior. We also propose a systematic way to measure the stability of interpretable models constructed by different interpretability approaches and show that our proposed approach generates more stable models.

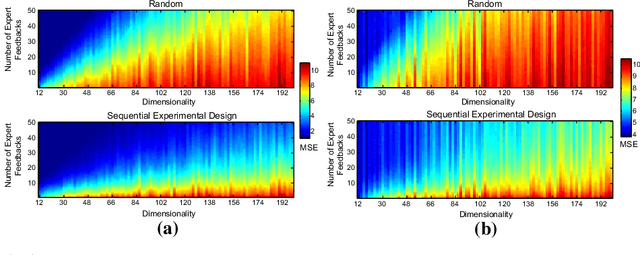

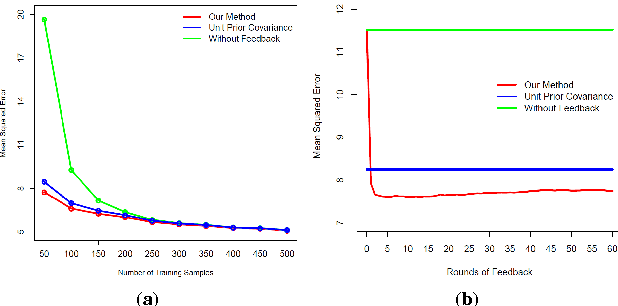

Human-in-the-loop Active Covariance Learning for Improving Prediction in Small Data Sets

Mar 18, 2019

Abstract: Learning predictive models from small high-dimensional data sets is a key problem in high-dimensional statistics. Expert knowledge elicitation can help, and a strong line of work focuses on directly eliciting informative prior distributions for parameters. This either requires considerable statistical expertise or is laborious, as the emphasis has been on accuracy and not on efficiency of the process. Another line of work queries the importance of features one at a time, assuming them to be independent and hence missing covariance information. In contrast, we propose eliciting expert knowledge about pairwise feature similarities, to borrow statistical strength in the predictions, and using sequential decision making techniques to minimize the effort of the expert. Empirical results demonstrate improvement in predictive performance on both simulated and real data, in high-dimensional linear regression tasks, where we learn the covariance structure with a Gaussian process, based on sequential elicitation.

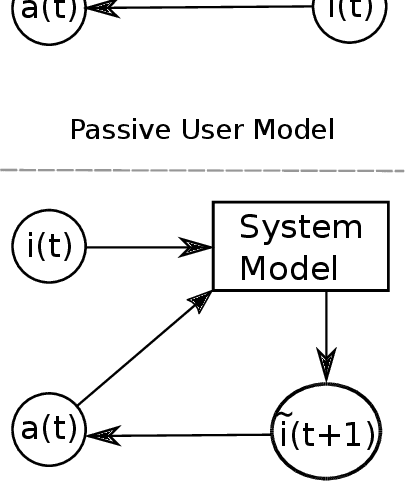

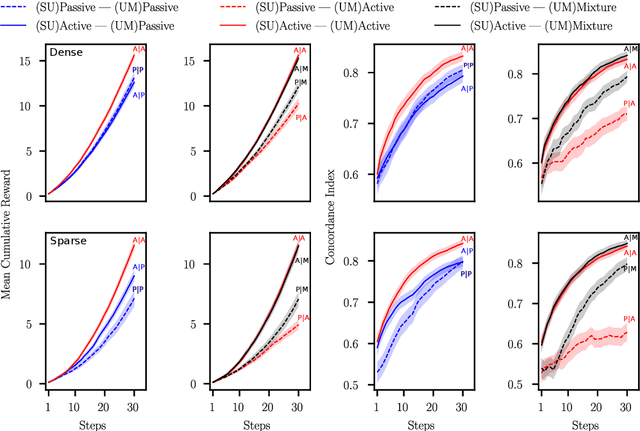

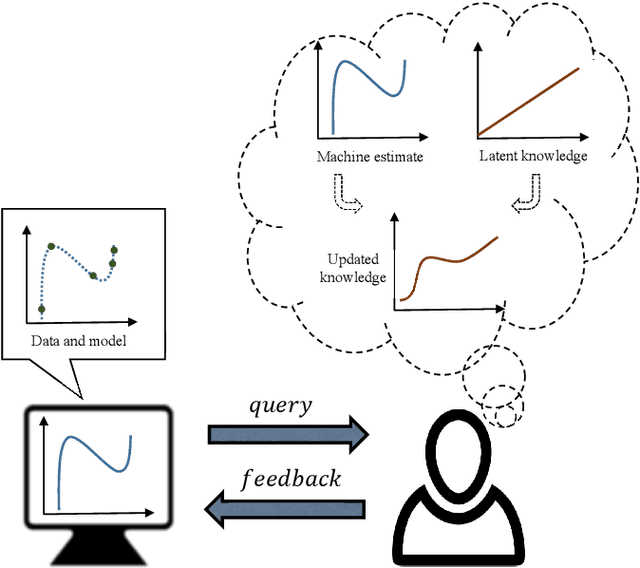

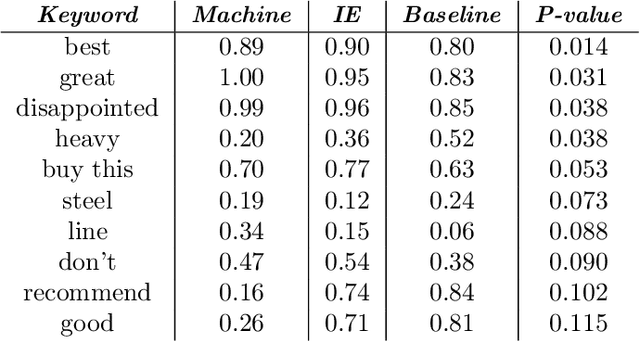

Modelling User's Theory of AI's Mind in Interactive Intelligent Systems

Sep 08, 2018

Abstract: Many interactive intelligent systems, such as recommendation and information retrieval systems, treat users as a passive data source. Yet, users form mental models of systems and instead of passively providing feedback to the queries of the system, they will strategically plan their actions within the constraints of the mental model to steer the system and achieve their goals faster. We propose to explicitly account for the user's theory of the AI's mind in the user model: the intelligent system has a model of the user having a model of the intelligent system. We study a case where the system is a contextual bandit and the user model is a Markov decision process that plans based on a simpler model of the bandit. Inference in the model can be reduced to probabilistic inverse reinforcement learning, with the nested bandit model defining the transition dynamics, and is implemented using probabilistic programming. Our results show that improved performance is achieved if users can form accurate mental models that the system can capture, implying predictability of the interactive intelligent system is important not only for the user experience but also for the design of the system's statistical models.

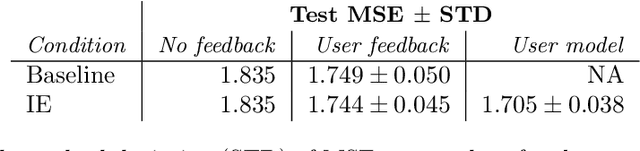

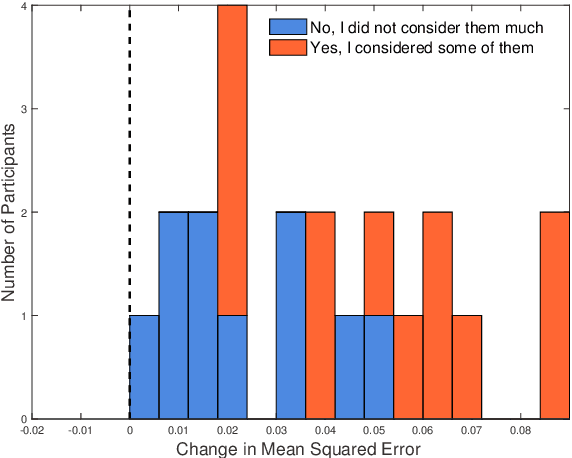

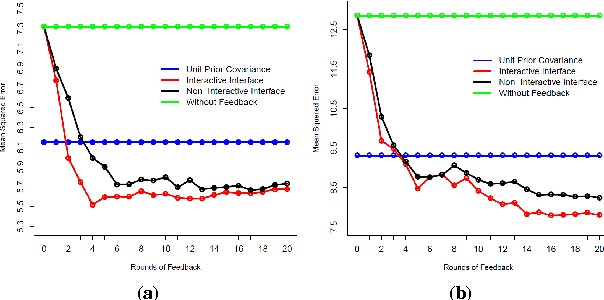

User Modelling for Avoiding Overfitting in Interactive Knowledge Elicitation for Prediction

Mar 09, 2018

Abstract: In human-in-the-loop machine learning, the user provides information beyond that in the training data. Many algorithms and user interfaces have been designed to optimize and facilitate this human-machine interaction; however, fewer studies have addressed the potential defects the designs can cause. Effective interaction often requires exposing the user to the training data or its statistics. The design of the system is then critical, as this can lead to double use of data and overfitting, if the user reinforces noisy patterns in the data. We propose a user modelling methodology, by assuming simple rational behaviour, to correct the problem. We show, in a user study with 48 participants, that the method improves predictive performance in a sparse linear regression sentiment analysis task, where graded user knowledge on feature relevance is elicited. We believe that the key idea of inferring user knowledge with probabilistic user models has general applicability in guarding against overfitting and improving interactive machine learning.

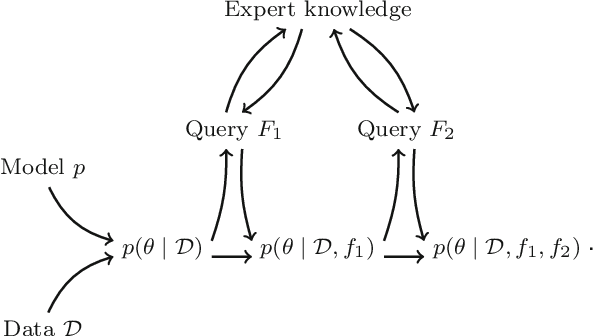

Knowledge Elicitation via Sequential Probabilistic Inference for High-Dimensional Prediction

Jul 13, 2017

Abstract: Prediction in a small-sized sample with a large number of covariates, the "small n, large p" problem, is challenging. This setting is encountered in multiple applications, such as precision medicine, where obtaining additional samples can be extremely costly or even impossible, and extensive research effort has recently been dedicated to finding principled solutions for accurate prediction. However, a valuable source of additional information, domain experts, has not yet been efficiently exploited. We formulate knowledge elicitation generally as a probabilistic inference process, where expert knowledge is sequentially queried to improve predictions. In the specific case of sparse linear regression, where we assume the expert has knowledge about the values of the regression coefficients or about the relevance of the features, we propose an algorithm and computational approximation for fast and efficient interaction, which sequentially identifies the most informative features on which to query expert knowledge. Evaluations of our method in experiments with simulated and real users show improved prediction accuracy already with a small effort from the expert.

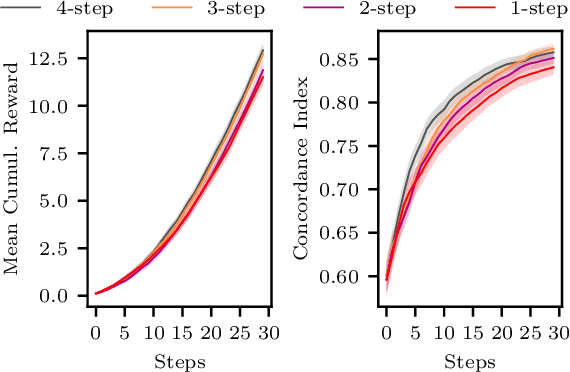

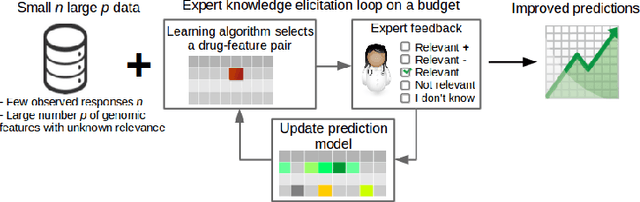

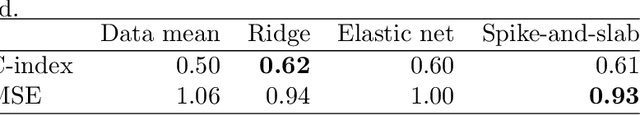

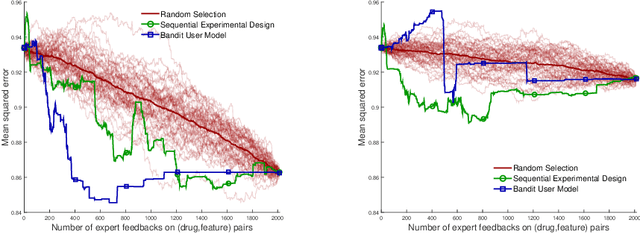

Improving drug sensitivity predictions in precision medicine through active expert knowledge elicitation

May 09, 2017

Abstract: Predicting the efficacy of a drug for a given individual, using high-dimensional genomic measurements, is at the core of precision medicine. However, identifying features on which to base the predictions remains a challenge, especially when the sample size is small. Incorporating expert knowledge offers a promising alternative to improve a prediction model, but collecting such knowledge is laborious for the expert if the number of candidate features is very large. We introduce a probabilistic model that can incorporate expert feedback about the impact of genomic measurements on the sensitivity of a cancer cell for a given drug. We also present two methods to intelligently collect this feedback from the expert, using experimental design and multi-armed bandit models. In a multiple myeloma blood cancer data set (n=51), expert knowledge decreased the prediction error by 8%. Furthermore, the intelligent approaches can be used to reduce the workload of feedback collection to less than 30% on average compared to a naive approach.
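As a generic illustration of how a multi-armed bandit can decide what to ask next, the sketch below uses the standard UCB1 rule. It is not the method of the paper, whose bandit model is not specified in the abstract. Treating each candidate feature as an arm and "how useful the expert's answer was" as the reward are assumptions, and the function name `ucb1_select` is hypothetical.

```python
import math
from typing import Sequence


def ucb1_select(counts: Sequence[int], reward_sums: Sequence[float]) -> int:
    """Pick the next arm (here: candidate feature to query) with UCB1.

    counts[a] is how often arm a has been queried so far and
    reward_sums[a] is the total reward observed for it.
    """
    # Query every arm once before comparing upper confidence bounds.
    for arm, n in enumerate(counts):
        if n == 0:
            return arm

    total = sum(counts)
    scores = [
        # Empirical mean plus an exploration bonus that shrinks with counts.
        reward_sums[a] / counts[a] + math.sqrt(2.0 * math.log(total) / counts[a])
        for a in range(len(counts))
    ]
    return max(range(len(counts)), key=scores.__getitem__)


# Hypothetical state: arm 0 queried 100 times, arm 1 only once.
next_arm = ucb1_select(counts=[100, 1], reward_sums=[60.0, 0.0])
print(next_arm)
```

The rarely queried arm is selected here despite its low observed reward, because its exploration bonus dominates; this is the behaviour that lets a bandit avoid asking the expert about every feature exhaustively.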

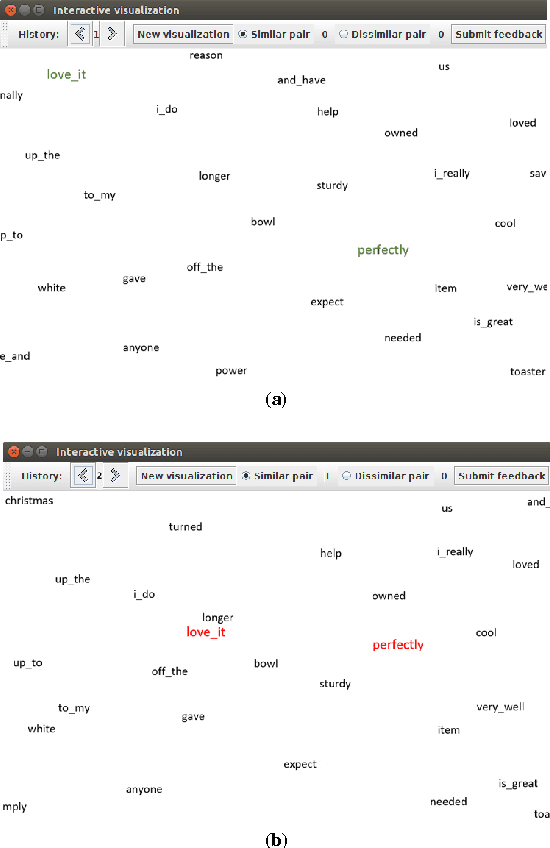

Interactive Prior Elicitation of Feature Similarities for Small Sample Size Prediction

Feb 28, 2017

Abstract: Regression under the "small $n$, large $p$" conditions, of small sample size $n$ and large number of features $p$ in the learning data set, is a recurring setting in which learning from data is difficult. With prior knowledge about relationships of the features, $p$ can effectively be reduced, but explicating such prior knowledge is difficult for experts. In this paper we introduce a new method for eliciting expert prior knowledge about the similarity of the roles of features in the prediction task. The key idea is to use an interactive multidimensional-scaling (MDS) type scatterplot display of the features to elicit the similarity relationships, and then use the elicited relationships in the prior distribution of prediction parameters. Specifically, for learning to predict a target variable with Bayesian linear regression, the feature relationships are used to construct a Gaussian prior with a full covariance matrix for the regression coefficients. Evaluation of our method in experiments with simulated and real users on text data confirms that prior elicitation of feature similarities improves prediction accuracy. Furthermore, elicitation with an interactive scatterplot display outperforms straightforward elicitation where the users choose feature pairs from a feature list.
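To make concrete how a full-covariance Gaussian prior enters Bayesian linear regression, the standard conjugate result is sketched below. The symbols $X$ (the $n \times p$ design matrix), $y$, $w$, $\Sigma_0$ and $\sigma^2$ are notation assumed here, as are the zero prior mean and the known noise variance; the abstract does not specify how $\Sigma_0$ is built from the elicited similarities.

$$
w \sim \mathcal{N}(0, \Sigma_0), \qquad y \mid X, w \sim \mathcal{N}(Xw, \sigma^2 I)
$$

$$
w \mid X, y \sim \mathcal{N}(m, S), \qquad S = \left(\Sigma_0^{-1} + \sigma^{-2} X^\top X\right)^{-1}, \qquad m = \sigma^{-2} S X^\top y
$$

Off-diagonal entries of $\Sigma_0$ couple the coefficients of features judged similar, so data that inform one coefficient also shift the posterior of the other. This is the sense in which such a prior can effectively reduce $p$.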

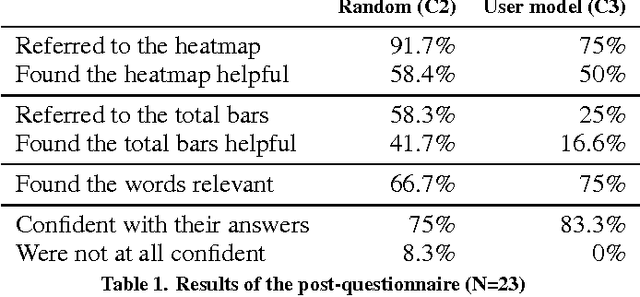

Interactive Elicitation of Knowledge on Feature Relevance Improves Predictions in Small Data Sets

Jan 16, 2017

Abstract: Providing accurate predictions is challenging for machine learning algorithms when the number of features is larger than the number of samples in the data. Prior knowledge can improve machine learning models by indicating relevant variables and parameter values. Yet, this prior knowledge is often tacit and only available from domain experts. We present a novel approach that uses interactive visualization to elicit the tacit prior knowledge and uses it to improve the accuracy of prediction models. The main component of our approach is a user model that models the domain expert's knowledge of the relevance of different features for a prediction task. In particular, based on the expert's earlier input, the user model guides the selection of the features on which to elicit the user's knowledge next. The results of a controlled user study show that the user model significantly improves prior knowledge elicitation and prediction accuracy, when predicting the relative citation counts of scientific documents in a specific domain.