Tomasz Kornuta

Multi-Agent Dynamic Pricing in a Blockchain Protocol Using Gaussian Bandits

Dec 13, 2022

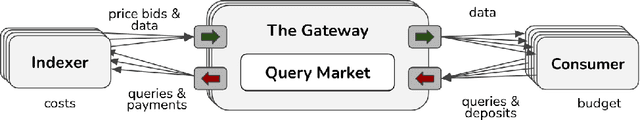

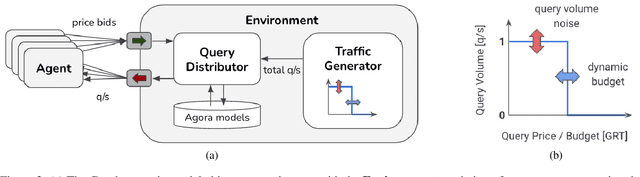

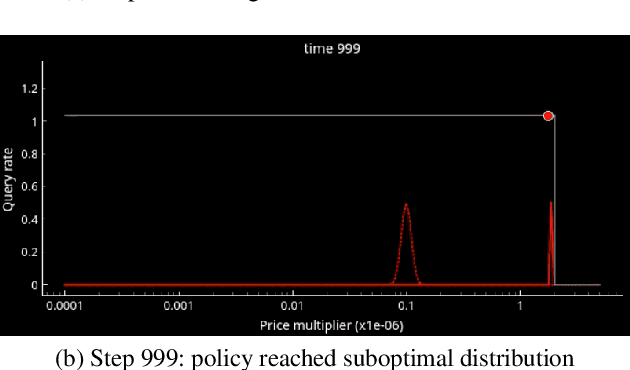

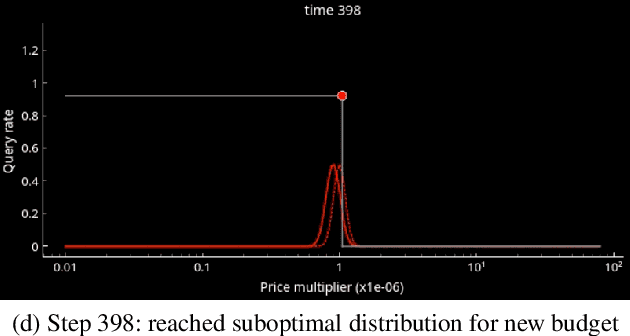

Abstract: The Graph Protocol indexes historical blockchain transaction data and makes it available for querying. As the protocol is decentralized, many independent Indexers index the data and compete with each other to serve queries to Consumers. One dimension along which Indexers compete is pricing. In this paper, we propose a bandit-based algorithm that maximizes Indexers' revenue via Consumer budget discovery. We present the design of, and the considerations behind, a dynamic pricing algorithm used by multiple agents simultaneously. We discuss the results achieved by our dynamic pricing bandits both in simulation and in production on one of the Indexers operating on Ethereum. We have open-sourced both the simulation framework and the tools we created, which other Indexers have since started to adapt for their own workflows.
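The idea of budget discovery with a Gaussian bandit can be illustrated with a minimal sketch. It is not the paper's algorithm: it assumes a single Consumer with a fixed hidden budget, a Gaussian pricing policy with fixed standard deviation, and a REINFORCE-style update of the policy mean. The names `revenue` and `discover_budget`, and all hyperparameters, are hypothetical.

```python
import random


def revenue(price, budget):
    """Assumed Consumer model: pays `price` only if it is positive and within budget."""
    return price if 0.0 < price <= budget else 0.0


def discover_budget(budget, steps=3000, mu=2.0, sigma=0.5, lr=0.005, seed=0):
    """Learn the mean of a Gaussian pricing policy from revenue feedback alone.

    Hypothetical sketch: the agent never observes `budget`, only the revenue
    earned for each sampled price.
    """
    rng = random.Random(seed)
    baseline = 0.0  # running average of revenue, used to reduce variance
    for _ in range(steps):
        price = rng.gauss(mu, sigma)       # sample a price from the policy
        reward = revenue(price, budget)    # observe the revenue for that price
        # REINFORCE: d/dmu log N(price; mu, sigma) = (price - mu) / sigma^2
        mu += lr * (reward - baseline) * (price - mu) / sigma ** 2
        baseline += 0.05 * (reward - baseline)
    return mu


learned_mean = discover_budget(budget=5.0)
print(f"learned mean price: {learned_mean:.2f}")
```

In this toy setting the mean price climbs while queries keep being paid for and is pushed back once sampled prices start exceeding the budget, so it settles somewhat below the hidden budget.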

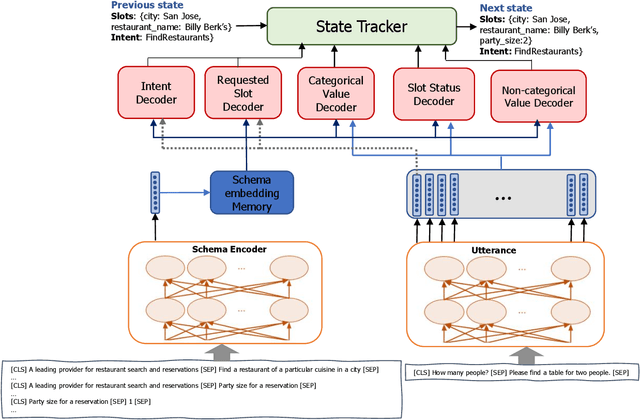

A Fast and Robust BERT-based Dialogue State Tracker for Schema-Guided Dialogue Dataset

Aug 27, 2020

Abstract: Dialog State Tracking (DST) is one of the most crucial modules of goal-oriented dialogue systems. In this paper, we introduce FastSGT (Fast Schema Guided Tracker), a fast and robust BERT-based model for state tracking in goal-oriented dialogue systems. The proposed model is designed for the Schema-Guided Dialogue (SGD) dataset, which contains natural language descriptions for all the entities, including user intents, services, and slots. The model incorporates two carry-over procedures for extracting values not explicitly mentioned in the current user utterance. It also uses multi-head attention projections in some of the decoders to better model the encoder outputs. In our experiments, we compared FastSGT to the baseline model for the SGD dataset. Our model remains efficient in terms of computation and memory consumption while improving accuracy significantly. Additionally, we present ablation studies measuring the impact of different parts of the model on its performance. We also show the effectiveness of data augmentation for improving accuracy without increasing the amount of computational resources.

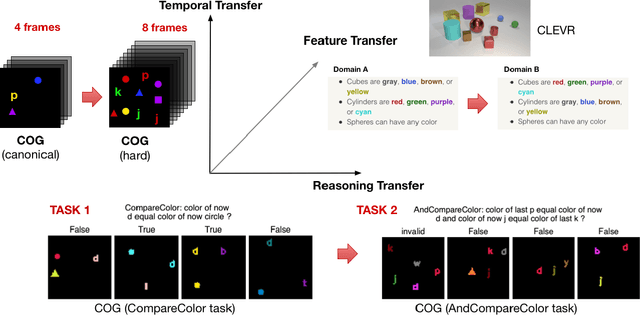

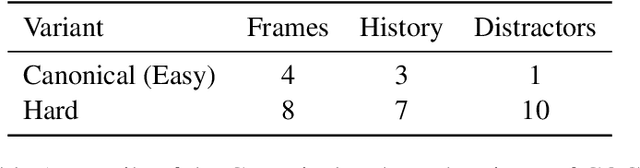

Transfer Learning in Visual and Relational Reasoning

Nov 27, 2019

Abstract: Transfer learning is becoming the de facto solution for vision and text encoders in the front-end processing of machine learning solutions. Utilizing the vast amounts of knowledge in pre-trained models, followed by fine-tuning, makes it possible to achieve better performance in domains where labeled data is limited. In this paper, we analyze the efficiency of transfer learning in visual reasoning by introducing a new model (SAMNet) and testing it on two datasets: COG and CLEVR. Our new model achieves state-of-the-art accuracy on COG and shows significantly better generalization capabilities compared to the baseline. We also formalize a taxonomy of transfer learning for visual reasoning around three axes: feature, temporal, and reasoning transfer. Based on extensive experimentation with transfer learning on each of the two datasets, we show the performance of the new model along each axis.

PyTorchPipe: a framework for rapid prototyping of pipelines combining language and vision

Oct 18, 2019

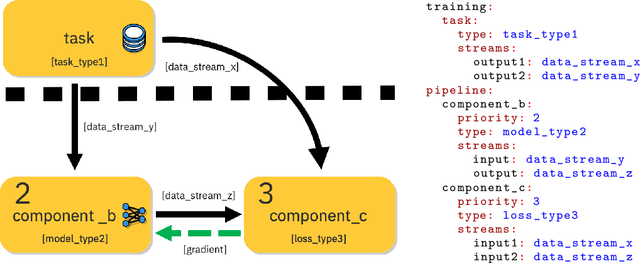

Abstract: Access to vast amounts of data, along with affordable computational power, stimulated the resurgence of neural networks. This progress could not have been achieved without adequate software tools, which lower the entry bar for the next generations of researchers and developers. The paper introduces PyTorchPipe (PTP), a framework built on top of PyTorch. Answering the recent needs and trends in machine learning, PTP facilitates building and training of complex, multi-modal models combining language and vision (but is not limited to those two modalities). At its core, PTP employs a component-oriented approach and relies on the concept of a pipeline, defined as a directed acyclic graph of loosely coupled components. A user defines a pipeline using YAML-based (thus human-readable) configuration files, whereas PTP provides generic workers for their loading, training, and testing using all the computational power (CPUs and GPUs) that is available to the user. The paper covers the main concepts of PyTorchPipe, discusses its key features, and briefly presents the currently implemented tasks, models, and components.
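The notion of a pipeline as a directed acyclic graph of loosely coupled components can be sketched in a few lines of plain Python. This is not the PyTorchPipe API: the function `run_pipeline`, the component names, and the stream names are hypothetical, and the standard-library `graphlib.TopologicalSorter` stands in for whatever scheduling PTP actually performs.

```python
from graphlib import TopologicalSorter


def run_pipeline(components, dependencies, streams):
    """Run components in dependency order; each reads and writes named streams.

    components:   name -> function(streams) returning a dict of new streams
    dependencies: name -> set of component names that must run first
    """
    for name in TopologicalSorter(dependencies).static_order():
        streams.update(components[name](streams))
    return streams


# Hypothetical two-branch pipeline: a language branch and a vision branch
# feed a fusion component. Components only communicate via named streams.
components = {
    "tokenizer": lambda s: {"tokens": s["question"].lower().split()},
    "image_encoder": lambda s: {"image_feature": sum(s["pixels"])},
    "fusion": lambda s: {"fused": (len(s["tokens"]), s["image_feature"])},
}
dependencies = {
    "tokenizer": set(),
    "image_encoder": set(),
    "fusion": {"tokenizer", "image_encoder"},
}

result = run_pipeline(
    components, dependencies, {"question": "What is this", "pixels": [1, 2, 3]}
)
print(result["fused"])
```

Because components are coupled only through stream names, swapping one encoder for another changes a single entry in `components` and leaves the rest of the graph untouched.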

Leveraging Medical Visual Question Answering with Supporting Facts

May 28, 2019

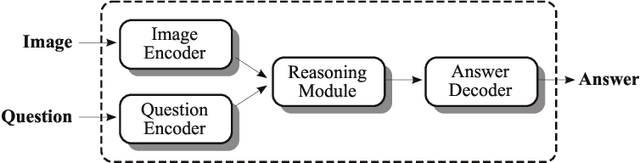

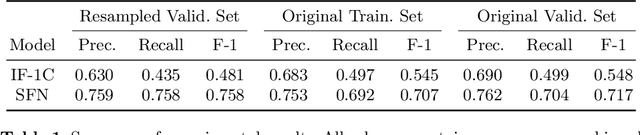

Abstract: In this working notes paper, we describe the IBM Research AI (Almaden) team's participation in the ImageCLEF 2019 VQA-Med competition. The challenge consists of four question-answering tasks based on radiology images. The diversity of imaging modalities, organs, and disease types, combined with a small, imbalanced training set, made this a highly complex problem. To overcome these difficulties, we implemented a modular pipeline architecture that utilized transfer learning and multi-task learning. Our findings led to the development of a novel model called Supporting Facts Network (SFN). The main idea behind SFN is to cross-utilize information from upstream tasks to improve the accuracy on harder downstream ones. This approach significantly improved the scores achieved on the validation set (an 18-point improvement in F-1 score). Finally, we submitted four runs to the competition and were ranked seventh.

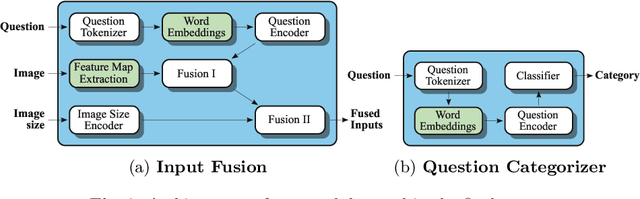

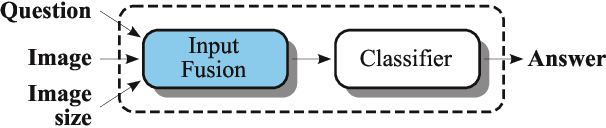

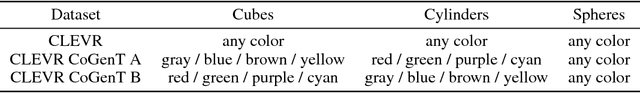

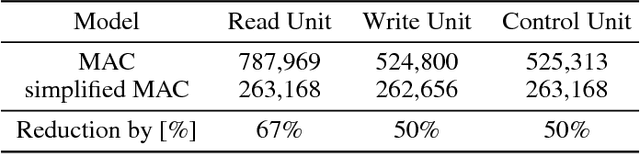

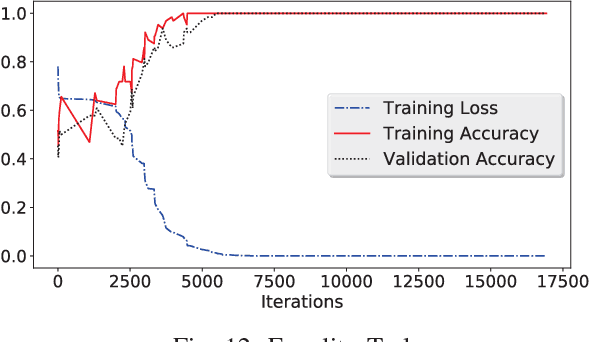

On transfer learning using a MAC model variant

Nov 16, 2018

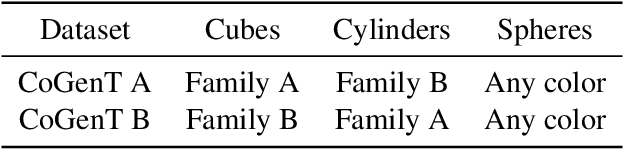

Abstract: We introduce a variant of the MAC model (Hudson and Manning, ICLR 2018) with a simplified set of equations that achieves comparable accuracy while training faster. We evaluate both models on CLEVR and CoGenT, and show that transfer learning with fine-tuning results in a 15-point increase in accuracy, matching the state of the art. In contrast, we also demonstrate that improper fine-tuning can actually reduce a model's accuracy.

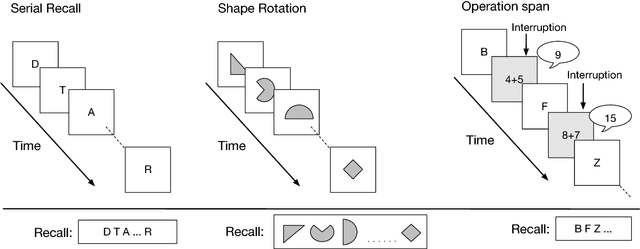

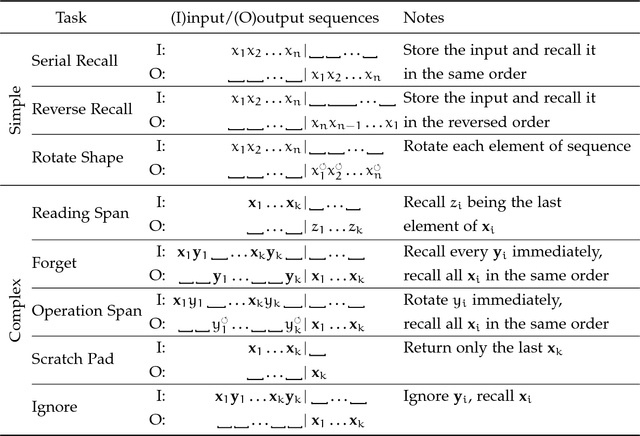

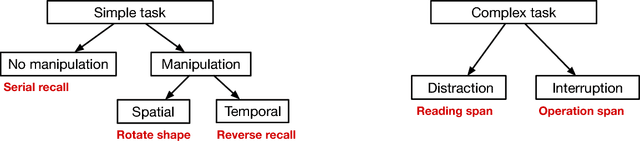

Learning to Remember, Forget and Ignore using Attention Control in Memory

Sep 28, 2018

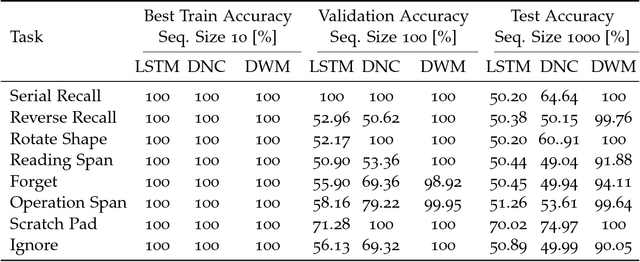

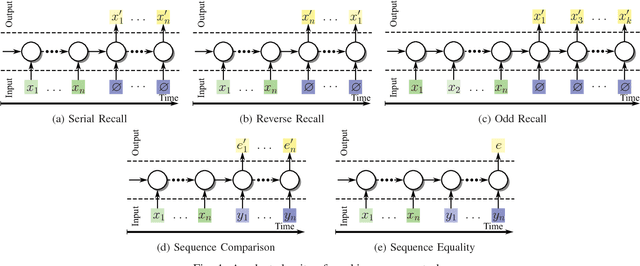

Abstract: Typical neural networks with external memory do not effectively separate capacity for episodic and working memory, as is required for reasoning in humans. Applying knowledge gained from psychological studies, we designed a new model called Differentiable Working Memory (DWM) to specifically emulate human working memory. As it shows the same functional characteristics as working memory, it robustly learns psychology-inspired tasks and converges faster than comparable state-of-the-art models. Moreover, the DWM model successfully generalizes to sequences two orders of magnitude longer than the ones used in training. Our in-depth analysis shows that the behavior of DWM is interpretable and that it learns to have fine control over memory, allowing it to retain, ignore, or forget information based on its relevance.

Using Multi-task and Transfer Learning to Solve Working Memory Tasks

Sep 28, 2018

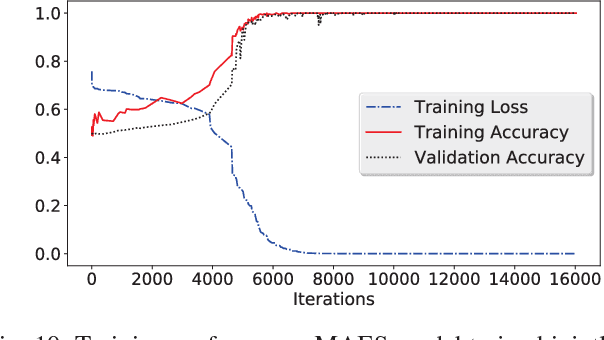

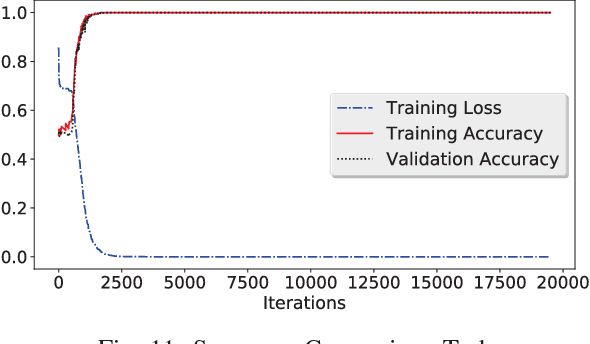

Abstract: We propose a new architecture called Memory-Augmented Encoder-Solver (MAES) that enables transfer learning to solve complex working memory tasks adapted from cognitive psychology. It uses dual recurrent neural network controllers, one inside the encoder and one inside the solver, that interface with a shared memory module, and it is completely differentiable. We study different types of encoders in a systematic manner and demonstrate a unique advantage of multi-task learning in obtaining the best possible encoder. We show by extensive experimentation that the trained MAES models achieve task-size generalization, i.e., they are capable of handling sequential inputs 50 times longer than seen during training, with appropriately large memory modules. We demonstrate that MAES far outperforms existing, well-known models such as the LSTM, NTM, and DNC on the entire suite of tasks.

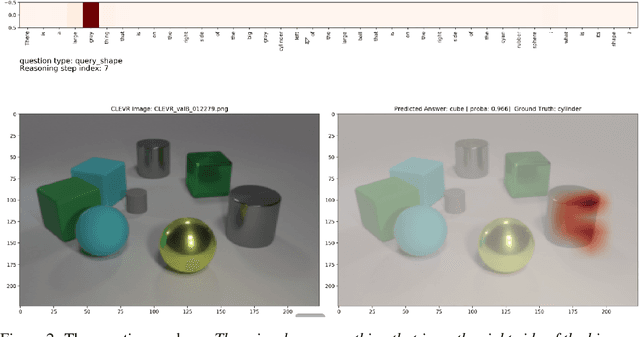

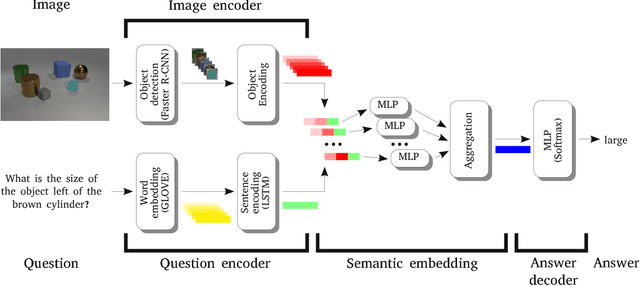

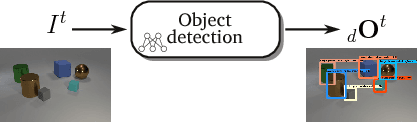

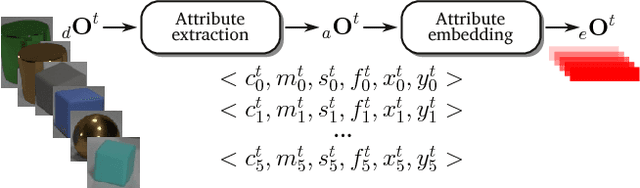

Object-based reasoning in VQA

Jan 29, 2018

Abstract: Visual Question Answering (VQA) is a novel problem domain where multi-modal inputs must be processed in order to solve a task given in natural language. As the solutions inherently require combining visual and natural language processing with abstract reasoning, the problem is considered AI-complete. Recent advances indicate that using high-level, abstract facts extracted from the inputs might facilitate reasoning. Following that direction, we decided to develop a solution combining state-of-the-art object detection and reasoning modules. The results, achieved on the well-balanced CLEVR dataset, confirm this promise and show significant accuracy improvements of a few percent on the complex "counting" task.

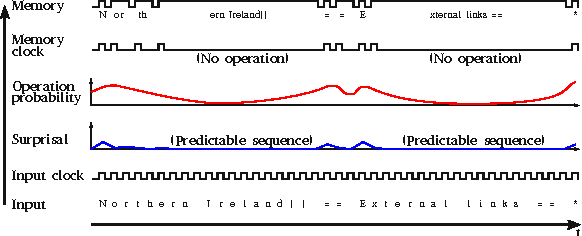

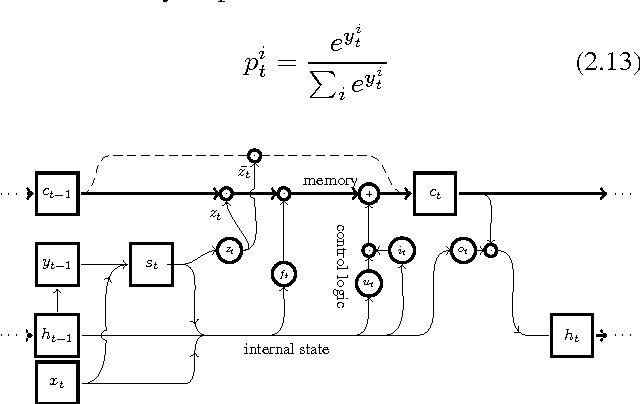

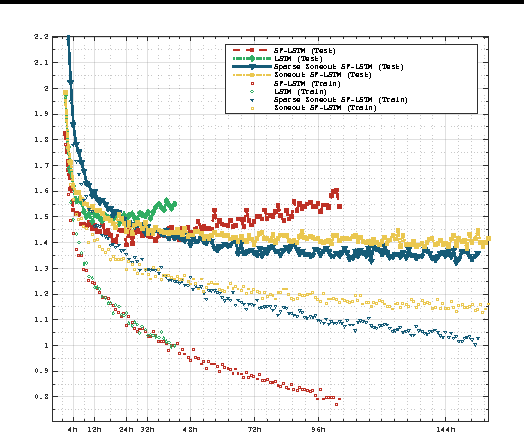

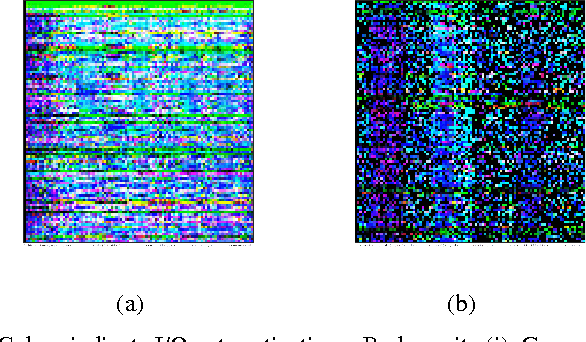

Surprisal-Driven Zoneout

Dec 13, 2016

Abstract: We propose a novel method of regularization for recurrent neural networks called surprisal-driven zoneout. In this method, states zone out (maintain their previous value rather than updating) when the surprisal (discrepancy between the last state's prediction and target) is small. Thus regularization is adaptive and input-driven on a per-neuron basis. We demonstrate the effectiveness of this idea by achieving state-of-the-art bits per character of 1.31 on the Hutter Prize Wikipedia dataset, significantly reducing the gap to the best known highly-engineered compression methods.
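The per-neuron zoneout rule described above can be sketched as follows. This is a simplified illustration, not the paper's formulation: it assumes a hard threshold on surprisal (the `threshold` parameter and the function name `surprisal_zoneout` are hypothetical) and operates on plain Python lists rather than on the hidden state of a trained recurrent network.

```python
def surprisal_zoneout(prev_state, candidate_state, surprisal, threshold=0.5):
    """Keep a neuron's previous value when its surprisal is below `threshold`.

    prev_state:      state values from the previous time step
    candidate_state: values the recurrent update would write
    surprisal:       per-neuron surprisal (assumed to be given)
    """
    return [
        prev if s < threshold else cand  # small surprisal: zone out
        for prev, cand, s in zip(prev_state, candidate_state, surprisal)
    ]


new_state = surprisal_zoneout(
    prev_state=[0.1, 0.2, 0.3],
    candidate_state=[0.9, 0.8, 0.7],
    surprisal=[0.1, 0.9, 0.4],
)
print(new_state)
```

Only the second neuron has surprisal above the assumed threshold, so it is the only one that takes its candidate value; the other two keep their previous state.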
