Tolga Ozaslan

MAVNet: an Effective Semantic Segmentation Micro-Network for MAV-based Tasks

Apr 03, 2019

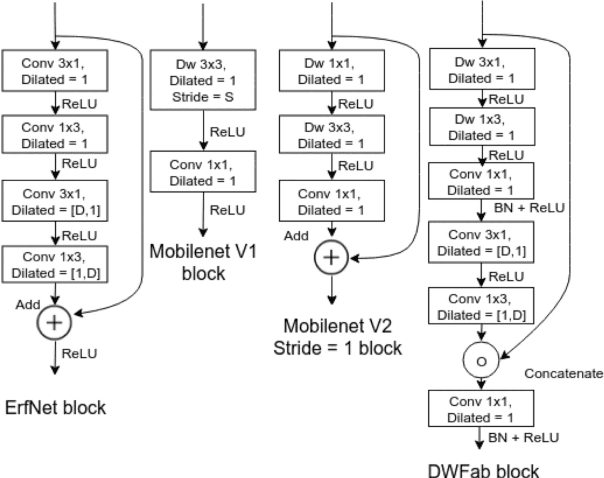

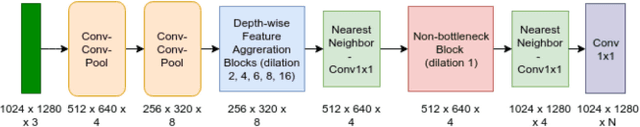

Abstract: Real-time image semantic segmentation is an essential capability to enhance robot autonomy and improve human situational awareness. In this paper, we present MAVNet, a novel deep neural network approach for semantic segmentation suitable for small-scale Micro Aerial Vehicles (MAVs). Our approach is compatible with the size, weight, and power (SWaP) constraints typical of small-scale MAVs, which can only employ small processing units and GPUs. These units typically have limited computational capacity, which has to be shared concurrently with other real-time tasks such as visual odometry and path planning. Our proposed solution, MAVNet, is a fast and compact network inspired by ERFNet that has about 400 times fewer parameters. Experimental results on multiple datasets validate our proposed approach. Additionally, comparisons with other state-of-the-art approaches show that our solution outperforms them in terms of speed and accuracy, achieving up to 48 FPS on an NVIDIA 1080Ti and 9 FPS on the NVIDIA Jetson Xavier when processing high-resolution imagery. Our algorithm and datasets are made publicly available.

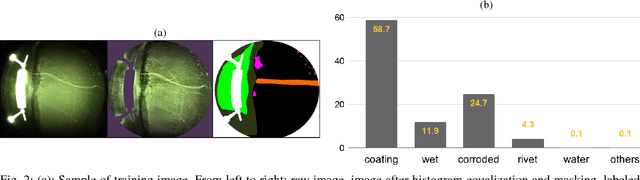

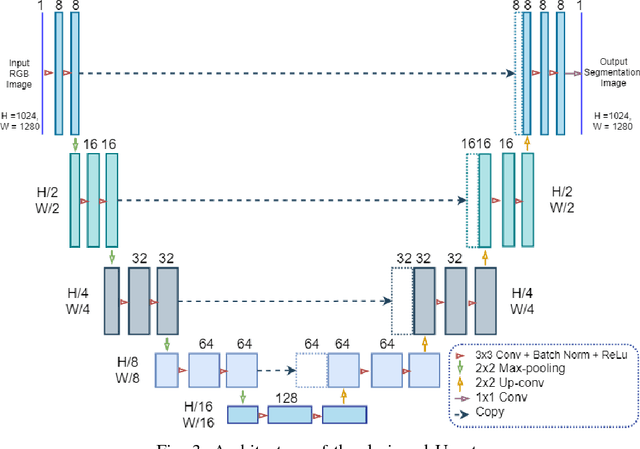

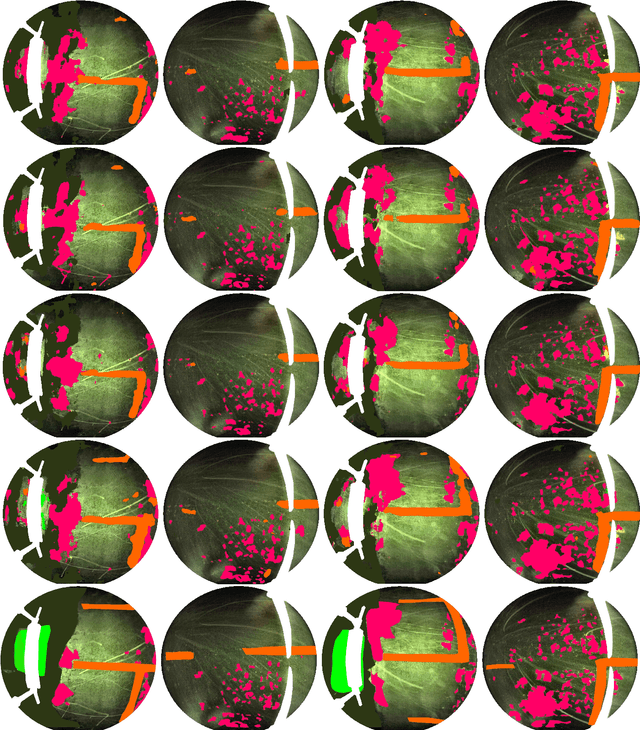

U-Net for MAV-based Penstock Inspection: an Investigation of Focal Loss in Multi-class Segmentation for Corrosion Identification

Sep 18, 2018

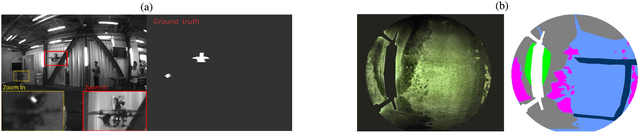

Abstract: Periodic inspection and maintenance of critical infrastructure such as dams, penstocks, and locks are of significant importance to prevent catastrophic failures. Conventional manual inspection methods require inspectors to climb along a penstock to spot corrosion, rust, and crack formation, which is unsafe, labor-intensive, and requires intensive training. This work presents an alternative approach using a Micro Aerial Vehicle (MAV) that autonomously flies to collect imagery, which is then fed into a pretrained deep-learning model to identify corrosion. Our simplified U-Net, trained with fewer than 40 image samples, can run inference at 12 fps on a single GPU. We analyze different loss functions to address the class imbalance problem, followed by a discussion on choosing proper metrics and weights for object classes. Results obtained with the dataset collected from Center Hill Dam, TN show that the focal loss function, combined with a proper set of class weights, yields better segmentation results than the baseline loss, softmax cross entropy. Our method can be used in combination with a planning algorithm to offer a complete, safe, and cost-efficient solution to autonomous infrastructure inspection.
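The abstract above compares focal loss with class weights against softmax cross entropy but does not state the formula. As a rough illustration only, the sketch below uses the commonly cited form of focal loss, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t), applied per pixel to softmax probabilities. The function names, the default `gamma=2.0`, and the example class weights are assumptions made for illustration and are not taken from the paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of per-class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(logits, target, class_weights=None, gamma=2.0):
    """Focal loss for one pixel (hypothetical helper, not the paper's code).

    logits        -- per-class scores for this pixel
    target        -- index of the ground-truth class
    class_weights -- optional per-class weights alpha; None means all 1.0
    gamma         -- focusing parameter; gamma = 0 gives weighted cross entropy
    """
    p_t = max(softmax(logits)[target], 1e-12)  # clamp to avoid log(0)
    alpha_t = 1.0 if class_weights is None else class_weights[target]
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def mean_focal_loss(pixel_logits, pixel_targets, class_weights=None, gamma=2.0):
    """Average the per-pixel focal loss over a flattened image."""
    losses = [
        focal_loss(l, t, class_weights, gamma)
        for l, t in zip(pixel_logits, pixel_targets)
    ]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Two pixels, three classes; class 2 stands in for a rare class.
    logits = [[2.0, 0.0, 0.0], [0.5, 0.2, 0.3]]
    targets = [0, 2]
    weights = [1.0, 1.0, 3.0]  # assumed weights, up-weighting the rare class
    print(mean_focal_loss(logits, targets, weights))
```

With `gamma = 0` and no class weights, the function reduces to plain softmax cross entropy. Increasing `gamma` shrinks the contribution of pixels the model already classifies confidently, which is why this form is often paired with class weights when a few classes dominate the image.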

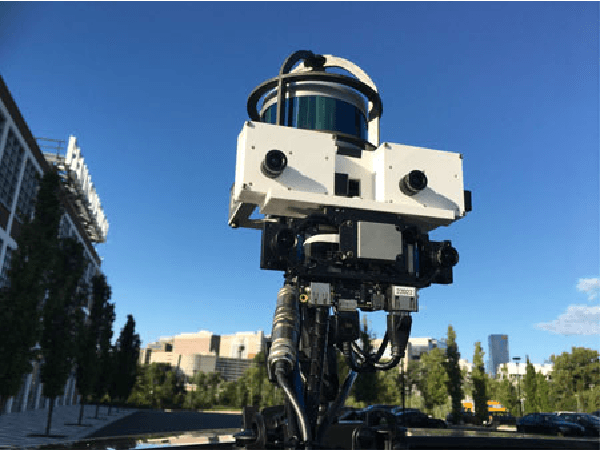

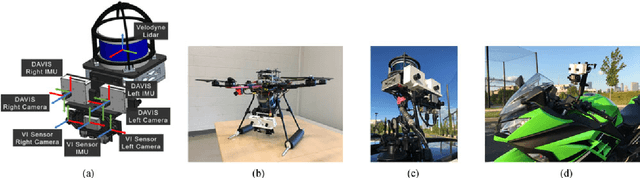

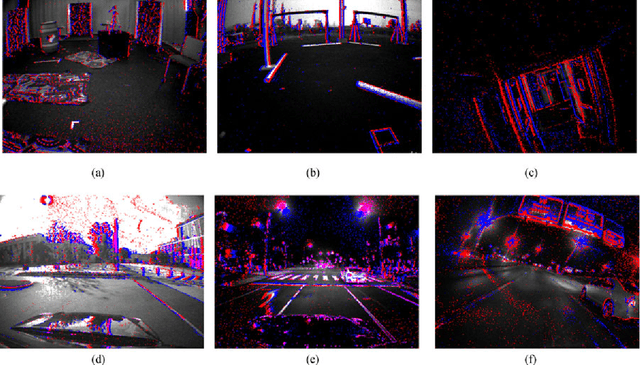

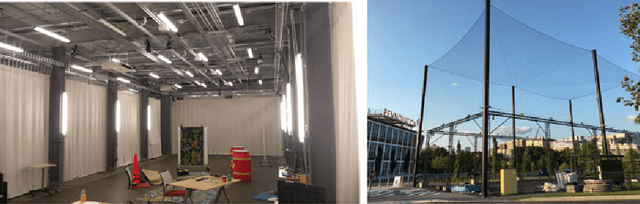

The Multi Vehicle Stereo Event Camera Dataset: An Event Camera Dataset for 3D Perception

Feb 19, 2018

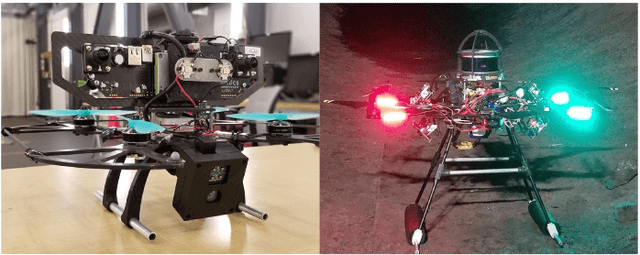

Abstract: Event-based cameras are a new passive sensing modality with a number of benefits over traditional cameras, including extremely low latency, asynchronous data acquisition, high dynamic range, and very low power consumption. There has been a lot of recent interest and development in applying algorithms that use the events to perform a variety of 3D perception tasks, such as feature tracking, visual odometry, and stereo depth estimation. However, event-based cameras currently lack the wealth of labeled data that exists for traditional cameras for both testing and development. In this paper, we present a large dataset with a synchronized stereo event-based camera system, carried on a handheld rig, flown by a hexacopter, driven on top of a car, and mounted on a motorcycle, in a variety of different illumination levels and environments. From each camera, we provide the event stream, grayscale images, and IMU readings. In addition, we utilize a combination of IMU, a rigidly mounted lidar system, indoor and outdoor motion capture, and GPS to provide accurate pose and depth images for each camera at up to 100 Hz. For comparison, we also provide synchronized grayscale images and IMU readings from a frame-based stereo camera system.
