Todd Zickler

GeCo: A Differentiable Geometric Consistency Metric for Video Generation

Dec 25, 2025

Abstract:We introduce GeCo, a geometry-grounded metric for jointly detecting geometric deformation and occlusion-inconsistency artifacts in static scenes. By fusing residual motion and depth priors, GeCo produces interpretable, dense consistency maps that reveal these artifacts. We use GeCo to systematically benchmark recent video generation models, uncovering common failure modes, and further employ it as a training-free guidance loss to reduce deformation artifacts during video generation.

CObL: Toward Zero-Shot Ordinal Layering without User Prompting

Aug 11, 2025

Abstract:Vision benefits from grouping pixels into objects and understanding their spatial relationships, both laterally and in depth. We capture this with a scene representation comprising an occlusion-ordered stack of "object layers," each containing an isolated and amodally-completed object. To infer this representation from an image, we introduce a diffusion-based architecture named Concurrent Object Layers (CObL). CObL generates a stack of object layers in parallel, using Stable Diffusion as a prior for natural objects and inference-time guidance to ensure the inferred layers composite back to the input image. We train CObL using a few thousand synthetically-generated images of multi-object tabletop scenes, and we find that it zero-shot generalizes to photographs of real-world tabletops with varying numbers of novel objects. In contrast to recent models for amodal object completion, CObL reconstructs multiple occluded objects without user prompting and without knowing the number of objects beforehand. Unlike previous models for unsupervised object-centric representation learning, CObL is not limited to the world it was trained in.
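
The requirement that inferred layers composite back to the input image can be illustrated with ordinary back-to-front "over" compositing. The sketch below is only an assumption about what such a check might look like: the `(color, alpha)` layer format, the grayscale simplification, and the function names are hypothetical and are not taken from CObL.

```python
def composite(layers, background=0.0):
    """Composite an occlusion-ordered stack, listed back to front.

    Each layer is a (color, alpha) pair of equally sized 2D lists
    (grayscale for brevity). Hypothetical format, for illustration only.
    """
    height, width = len(layers[0][0]), len(layers[0][0][0])
    out = [[background] * width for _ in range(height)]
    for color, alpha in layers:  # nearer layers are blended over farther ones
        for y in range(height):
            for x in range(width):
                a = alpha[y][x]
                out[y][x] = a * color[y][x] + (1.0 - a) * out[y][x]
    return out


def reconstruction_error(layers, image):
    """Mean squared difference between the composite and the input image."""
    comp = composite(layers)
    diffs = [(c - i) ** 2 for crow, irow in zip(comp, image)
             for c, i in zip(crow, irow)]
    return sum(diffs) / len(diffs)


# A one-row toy scene: a far layer covering everything, a near layer covering one pixel.
far = ([[0.2, 0.2, 0.2]], [[1.0, 1.0, 1.0]])
near = ([[0.9, 0.9, 0.9]], [[0.0, 1.0, 0.0]])
image = composite([far, near])
```

A guidance term of this kind would be driven toward zero when the layers explain the input; here `reconstruction_error([far, near], image)` is zero by construction.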

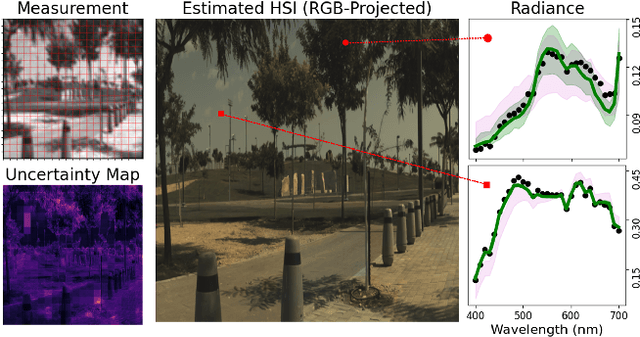

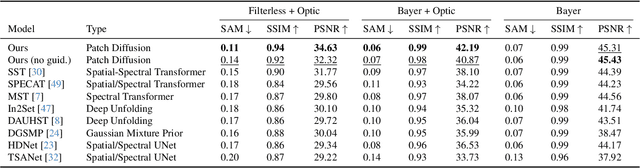

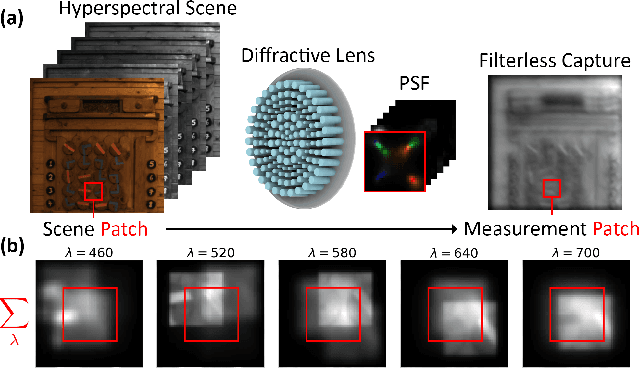

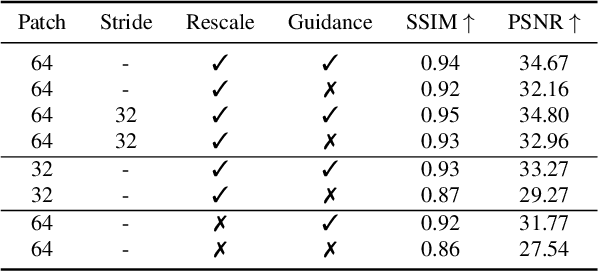

Grayscale to Hyperspectral at Any Resolution Using a Phase-Only Lens

Dec 03, 2024

Abstract:We consider the problem of reconstructing an $H\times W\times 31$ hyperspectral image from an $H\times W$ grayscale snapshot measurement that is captured using a single diffractive optic and a filterless panchromatic photosensor. This problem is severely ill-posed, and we present the first model that is able to produce high-quality results. We train a conditional denoising diffusion model that maps a small grayscale measurement patch to a hyperspectral patch. We then deploy the model to many patches in parallel, using global physics-based guidance to synchronize the patch predictions. Our model can be trained using small hyperspectral datasets and then deployed to reconstruct hyperspectral images of arbitrary size. Also, by drawing multiple samples with different seeds, our model produces useful uncertainty maps. We show that our model achieves state-of-the-art performance on previous snapshot hyperspectral benchmarks where reconstruction is better conditioned. Our work lays the foundation for a new class of high-resolution hyperspectral imagers that are compact and light-efficient.
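
One common way to turn repeated stochastic samples into an uncertainty map is to take the per-pixel spread across samples. The sketch below assumes that reading; `toy_sampler` is a hypothetical stand-in for the diffusion model, and a single 2D band replaces the 31-channel output to keep the example short.

```python
import random
import statistics


def toy_sampler(measurement, seed):
    """Hypothetical stand-in for one stochastic reconstruction of a 2D band."""
    rng = random.Random(seed)
    # Brighter pixels get noisier guesses, purely for illustration.
    return [[m + rng.gauss(0.0, 0.1 * m) for m in row] for row in measurement]


def uncertainty_map(measurement, sampler, seeds):
    """Per-pixel mean and standard deviation over samples drawn with different seeds."""
    samples = [sampler(measurement, seed) for seed in seeds]
    height, width = len(measurement), len(measurement[0])
    mean = [[statistics.fmean([s[y][x] for s in samples]) for x in range(width)]
            for y in range(height)]
    std = [[statistics.pstdev([s[y][x] for s in samples]) for x in range(width)]
           for y in range(height)]
    return mean, std


measurement = [[0.0, 1.0], [2.0, 4.0]]
mean, std = uncertainty_map(measurement, toy_sampler, seeds=range(8))
```

Pixels where the samples agree get a standard deviation near zero, while pixels where they disagree stand out in `std`.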

Multistable Shape from Shading Emerges from Patch Diffusion

May 23, 2024

Abstract:Models for monocular shape reconstruction of surfaces with diffuse reflection -- shape from shading -- ought to produce distributions of outputs, because there are fundamental mathematical ambiguities of both continuous (e.g., bas-relief) and discrete (e.g., convex/concave) varieties which are also experienced by humans. Yet, the outputs of current models are limited to point estimates or tight distributions around single modes, which prevents them from capturing these effects. We introduce a model that reconstructs a multimodal distribution of shapes from a single shading image, which aligns with the human experience of multistable perception. We train a small denoising diffusion process to generate surface normal fields from $16\times 16$ patches of synthetic images of everyday 3D objects. We deploy this model patch-wise at multiple scales, with guidance from inter-patch shape consistency constraints. Despite its relatively small parameter count and predominantly bottom-up structure, we show that multistable shape explanations emerge from this model for "ambiguous" test images that humans experience as being multistable. At the same time, the model produces veridical shape estimates for object-like images that include distinctive occluding contours and appear less ambiguous. This may inspire new architectures for stochastic 3D shape perception that are more efficient and better aligned with human experience.

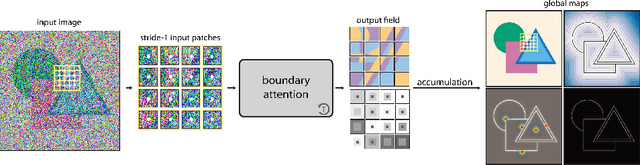

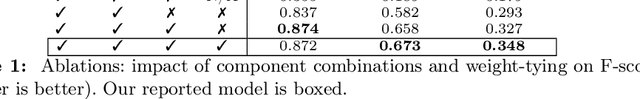

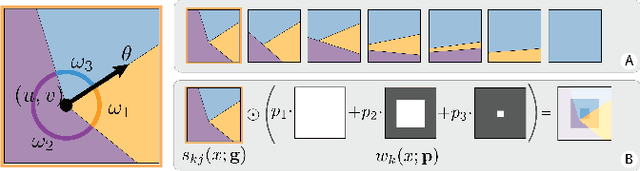

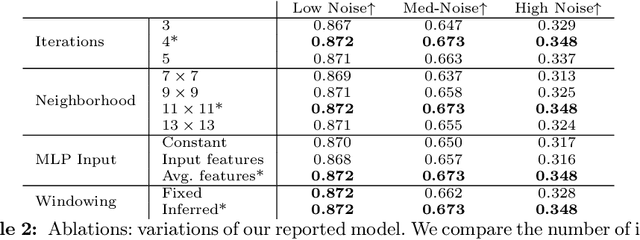

Boundary Attention: Learning to Find Faint Boundaries at Any Resolution

Jan 01, 2024

Abstract:We present a differentiable model that explicitly models boundaries -- including contours, corners and junctions -- using a new mechanism that we call boundary attention. We show that our model provides accurate results even when the boundary signal is very weak or is swamped by noise. Compared to previous classical methods for finding faint boundaries, our model has the advantages of being differentiable; being scalable to larger images; and automatically adapting to an appropriate level of geometric detail in each part of an image. Compared to previous deep methods for finding boundaries via end-to-end training, it has the advantages of providing sub-pixel precision, being more resilient to noise, and being able to process any image at its native resolution and aspect ratio.

Polarization Multi-Image Synthesis with Birefringent Metasurfaces

Jul 16, 2023

Abstract:Optical metasurfaces composed of precisely engineered nanostructures have gained significant attention for their ability to manipulate light and implement distinct functionalities based on the properties of the incident field. Computational imaging systems have started harnessing this capability to produce sets of coded measurements that benefit certain tasks when paired with digital post-processing. Inspired by these works, we introduce a new system that uses a birefringent metasurface with a polarizer-mosaicked photosensor to capture four optically-coded measurements in a single exposure. We apply this system to the task of incoherent opto-electronic filtering, where digital spatial-filtering operations are replaced by simpler, per-pixel sums across the four polarization channels, independent of the spatial filter size. In contrast to previous work on incoherent opto-electronic filtering that can realize only one spatial filter, our approach can realize a continuous family of filters from a single capture, with filters being selected from the family by adjusting the post-capture digital summation weights. To find a metasurface that can realize a set of user-specified spatial filters, we introduce a form of gradient descent with a novel regularizer that encourages light efficiency and a high signal-to-noise ratio. We demonstrate several examples in simulation and with fabricated prototypes, including some with spatial filters that have prescribed variations with respect to depth and wavelength.
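
The claim that a per-pixel sum across channels can stand in for a spatial filter follows from the linearity of convolution: a weighted sum of images, each blurred by its own point-spread function, equals the scene convolved with the same weighted sum of those point-spread functions. The 1D sketch below checks that identity; the four point-spread functions, the weights, and the function names are hypothetical and are not the ones designed in the paper.

```python
def convolve(signal, kernel):
    """Full 1D discrete convolution."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def synthesize(channels, weights):
    """Per-pixel weighted sum across channels, independent of filter size."""
    return [sum(w * c for w, c in zip(weights, px)) for px in zip(*channels)]


# Four hypothetical non-negative point-spread functions, one per polarization channel.
psfs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.0, 0.5]]
scene = [0.0, 1.0, 4.0, 9.0, 16.0]

# In a real system the optics would perform these convolutions during capture.
channels = [convolve(scene, p) for p in psfs]

weights = [1.0, -2.0, 1.0, 0.0]  # these weights select a second-difference filter
filtered = synthesize(channels, weights)
effective_kernel = synthesize(psfs, weights)
```

Changing `weights` after capture selects a different member of the filter family without touching `channels`, and signed weights allow signed filters even though each point-spread function is non-negative.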

Eclipse: Disambiguating Illumination and Materials using Unintended Shadows

Jun 08, 2023

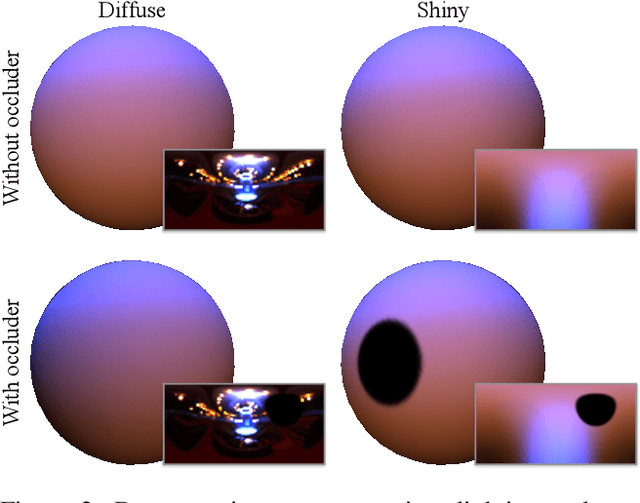

Abstract:Decomposing an object's appearance into representations of its materials and the surrounding illumination is difficult, even when the object's 3D shape is known beforehand. This problem is ill-conditioned because diffuse materials severely blur incoming light, and is ill-posed because diffuse materials under high-frequency lighting can be indistinguishable from shiny materials under low-frequency lighting. We show that it is possible to recover precise materials and illumination -- even from diffuse objects -- by exploiting unintended shadows, like the ones cast onto an object by the photographer who moves around it. These shadows are a nuisance in most previous inverse rendering pipelines, but here we exploit them as signals that improve conditioning and help resolve material-lighting ambiguities. We present a method based on differentiable Monte Carlo ray tracing that uses images of an object to jointly recover its spatially-varying materials, the surrounding illumination environment, and the shapes of the unseen light occluders who inadvertently cast shadows upon it.

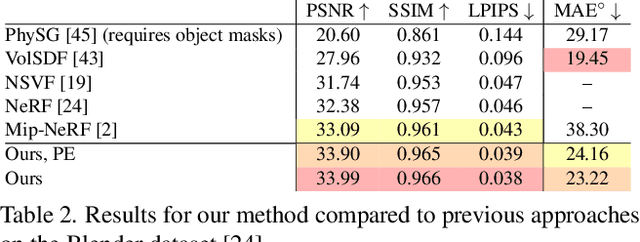

Ref-NeRF: Structured View-Dependent Appearance for Neural Radiance Fields

Dec 07, 2021

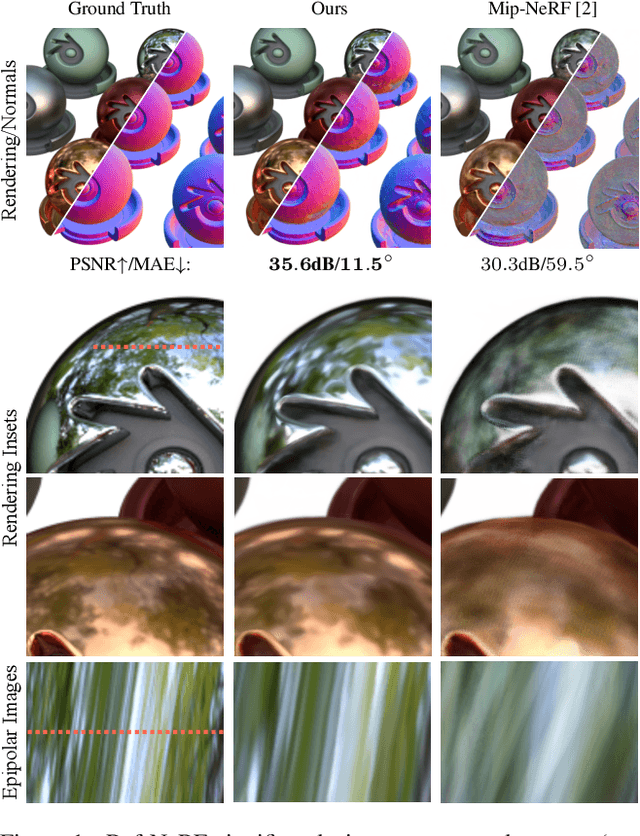

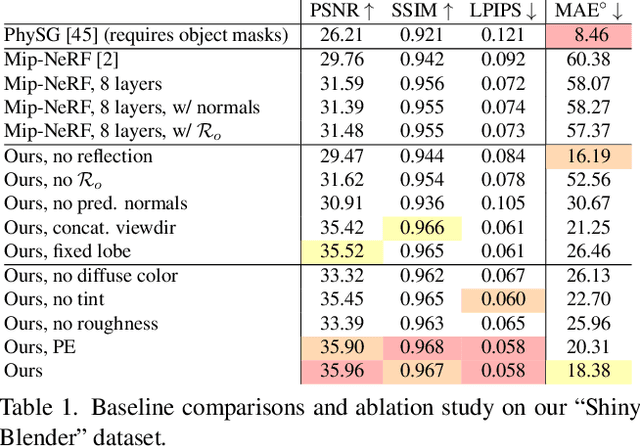

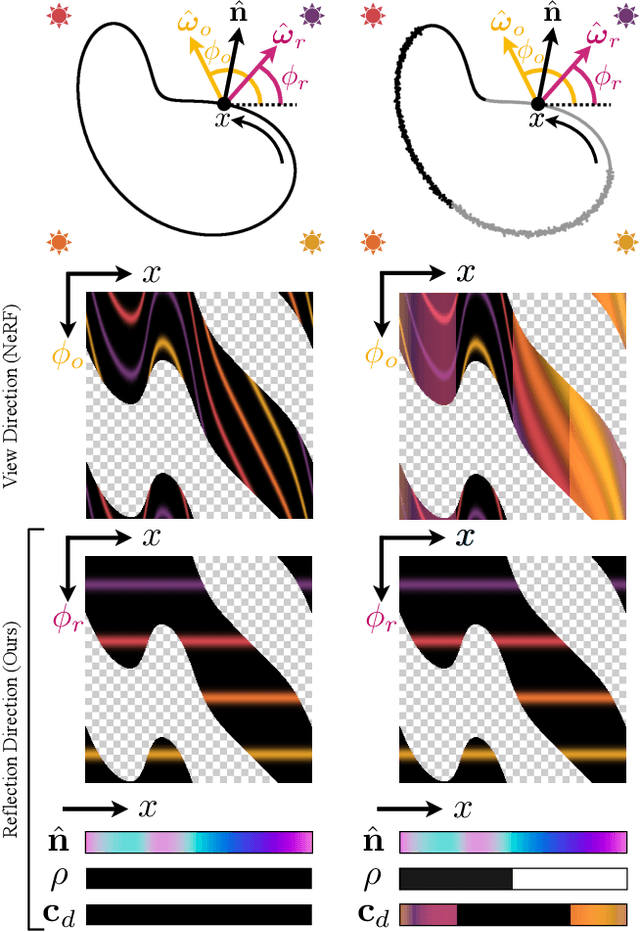

Abstract:Neural Radiance Fields (NeRF) is a popular view synthesis technique that represents a scene as a continuous volumetric function, parameterized by multilayer perceptrons that provide the volume density and view-dependent emitted radiance at each location. While NeRF-based techniques excel at representing fine geometric structures with smoothly varying view-dependent appearance, they often fail to accurately capture and reproduce the appearance of glossy surfaces. We address this limitation by introducing Ref-NeRF, which replaces NeRF's parameterization of view-dependent outgoing radiance with a representation of reflected radiance and structures this function using a collection of spatially-varying scene properties. We show that together with a regularizer on normal vectors, our model significantly improves the realism and accuracy of specular reflections. Furthermore, we show that our model's internal representation of outgoing radiance is interpretable and useful for scene editing.
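
A representation of reflected radiance depends on the view direction mirrored about the surface normal. The sketch below shows only the standard mirror-reflection formula, $\omega_r = 2(\omega_o \cdot n)\,n - \omega_o$, with $\omega_o$ pointing from the surface toward the viewer; the function name and the tuple-based vectors are assumptions for illustration and do not reproduce the model's implementation.

```python
import math


def reflect(view_dir, normal):
    """Mirror the unit direction toward the viewer about the unit surface normal."""
    d = sum(v * n for v, n in zip(view_dir, normal))
    return tuple(2.0 * d * n - v for v, n in zip(view_dir, normal))


s = 1.0 / math.sqrt(2.0)
head_on = reflect((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))  # looking straight down the normal
grazing = reflect((s, 0.0, s), (0.0, 0.0, 1.0))      # 45 degrees off the normal
```

Looking straight down the normal returns the same direction, while an oblique view flips the tangential component and keeps the normal component.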

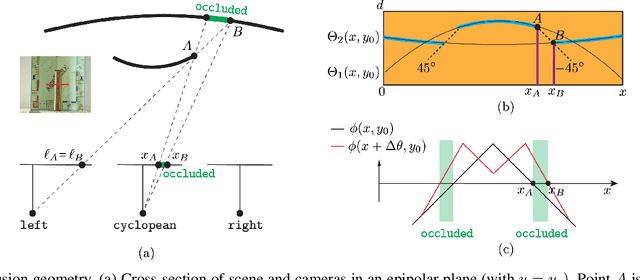

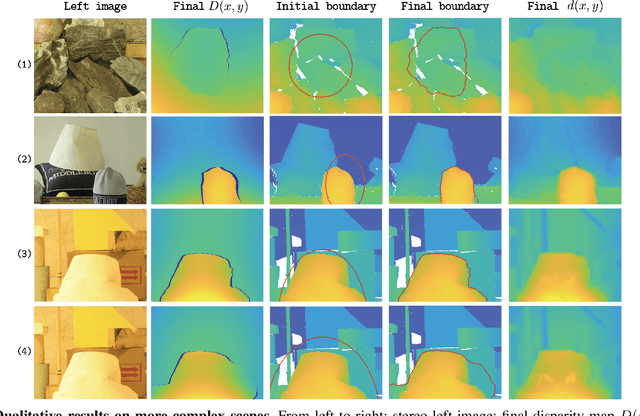

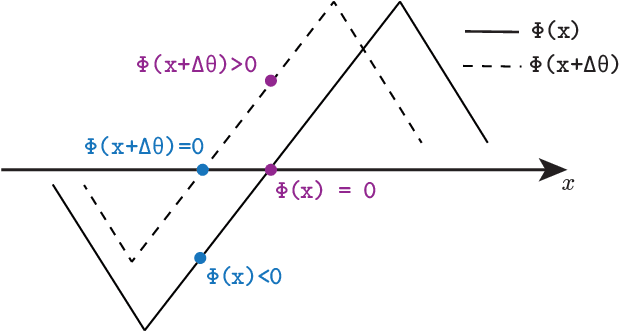

Level Set Binocular Stereo with Occlusions

Sep 08, 2021

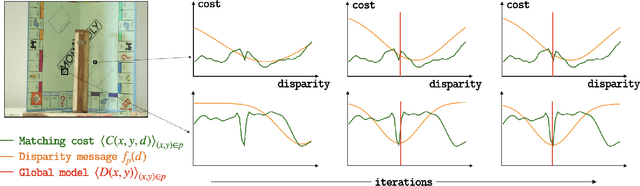

Abstract:Localizing stereo boundaries and predicting nearby disparities are difficult because stereo boundaries induce occluded regions where matching cues are absent. Most modern computer vision algorithms treat occlusions secondarily (e.g., via left-right consistency checks after matching) or rely on high-level cues to improve nearby disparities (e.g., via deep networks and large training sets). They ignore the geometry of stereo occlusions, which dictates that the spatial extent of occlusion must equal the amplitude of the disparity jump that causes it. This paper introduces an energy and level-set optimizer that improves boundaries by encoding occlusion geometry. Our model applies to two-layer, figure-ground scenes, and it can be implemented cooperatively using messages that pass predominantly between parents and children in an undecimated hierarchy of multi-scale image patches. In a small collection of figure-ground scenes curated from Middlebury and Falling Things stereo datasets, our model provides more accurate boundaries than previous occlusion-handling stereo techniques. This suggests new directions for creating cooperative stereo systems that incorporate occlusion cues in a human-like manner.
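
The geometric constraint that the width of an occlusion equals the size of the disparity jump can be checked on a single scanline. The sketch below assumes integer disparities, a left-image disparity map, and the convention that left pixel `x` lands at `x - disparity[x]` in the right image; the function name and the toy scene are hypothetical.

```python
def occluded_in_right(disparity):
    """Return left-image pixels that are hidden in the right image.

    A left pixel x lands at x - disparity[x] in the right image. When several
    pixels land on the same spot, only the nearest (largest disparity) is seen.
    """
    nearest = {}
    for x, d in enumerate(disparity):
        target = x - d
        if target not in nearest or d > disparity[nearest[target]]:
            nearest[target] = x
    return [x for x, d in enumerate(disparity) if nearest[x - d] != x]


# Figure-ground scanline: a near object (disparity 5) in front of a far plane (disparity 2).
background, foreground = 2, 5
disparity = [background] * 10 + [foreground] * 5 + [background] * 10
hidden = occluded_in_right(disparity)
```

The hidden pixels form a strip of background next to the object boundary, and the strip's width matches `foreground - background`.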

StegaPos: Preventing Crops and Splices with Imperceptible Positional Encodings

Apr 25, 2021

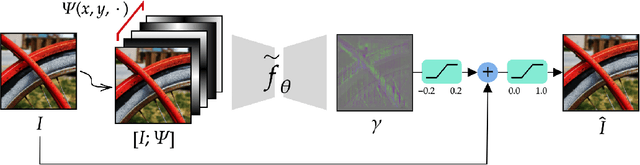

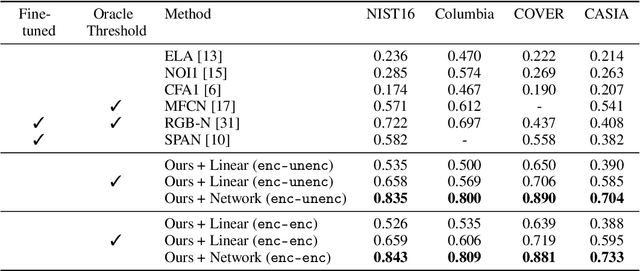

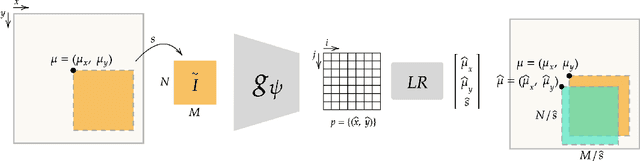

Abstract:We present a model for differentiating between images that are authentic copies of ones published by photographers, and images that have been manipulated by cropping, splicing or downsampling after publication. The model comprises an encoder that resides with the photographer and a matching decoder that is available to observers. The encoder learns to embed imperceptible positional signatures into image values prior to publication. The decoder learns to use these steganographic positional ("stegapos") signatures to determine, for each small image patch, the 2D positional coordinates that were held by the patch in its originally-published image. Crop, splice and downsample edits become detectable by the inconsistencies they cause in the hidden positional signatures. We find that training the encoder and decoder together produces a model that imperceptibly encodes position, and that enables superior performance on established benchmarks for splice detection and high accuracy on a new benchmark for crop detection.
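
The idea that edits show up as inconsistencies in decoded positions can be sketched with a toy decision rule. Everything below is an assumption for illustration: `decoded[r][c]` stands for the original coordinates a hypothetical decoder reports for the patch now at `(r, c)`, and the three-way rule is not the paper's detector. In particular, this toy rule cannot see a crop that removes only the right or bottom margin, since the surviving offsets stay at zero.

```python
def classify(decoded):
    """Label a grid of decoded patch positions as authentic, cropped, or spliced."""
    offsets = {(dr - r, dc - c)
               for r, row in enumerate(decoded)
               for c, (dr, dc) in enumerate(row)}
    if offsets == {(0, 0)}:
        return "authentic"  # every patch sits where it was published
    if len(offsets) == 1:
        return "cropped"    # one shared shift: the top or left margin was cut off
    return "spliced"        # mixed shifts: patches came from different places


original = [[(r, c) for c in range(4)] for r in range(4)]
cropped = [row[2:] for row in original[1:]]
spliced = [row[:] for row in original]
spliced[0][0] = (3, 3)  # paste one patch from elsewhere in the image
```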
