Gokhan Egri

StegaPos: Preventing Crops and Splices with Imperceptible Positional Encodings

Apr 25, 2021

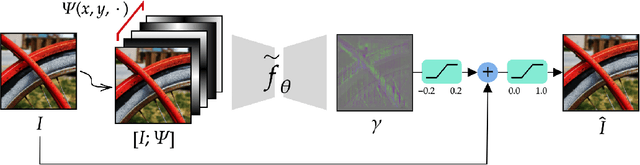

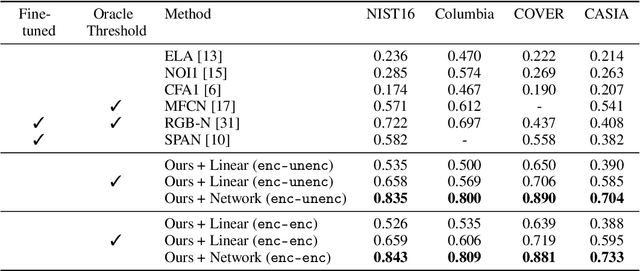

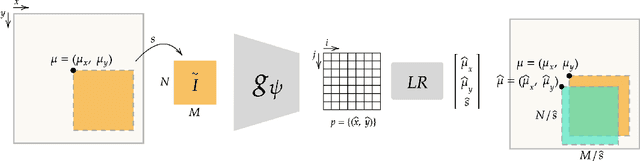

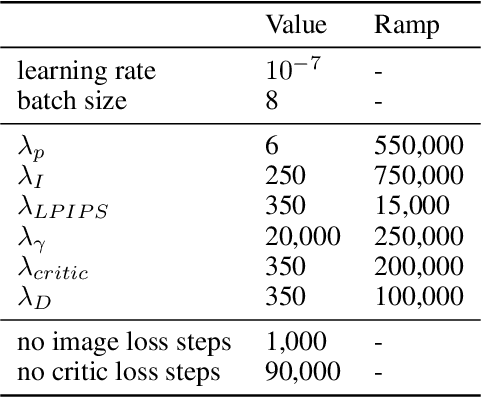

Abstract: We present a model for differentiating between images that are authentic copies of ones published by photographers, and images that have been manipulated by cropping, splicing or downsampling after publication. The model comprises an encoder that resides with the photographer and a matching decoder that is available to observers. The encoder learns to embed imperceptible positional signatures into image values prior to publication. The decoder learns to use these steganographic positional ("stegapos") signatures to determine, for each small image patch, the 2D positional coordinates that the patch held in its originally published image. Crop, splice and downsample edits become detectable through the inconsistencies they cause in the hidden positional signatures. We find that training the encoder and decoder together produces a model that imperceptibly encodes position, and that enables superior performance on established benchmarks for splice detection and high accuracy on a new benchmark for crop detection.
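The way inconsistencies in decoded positions expose edits can be illustrated with a toy consistency check. This is a minimal sketch under stated assumptions, not the authors' method: it assumes a hypothetical decoder has already produced, for every patch of the observed image, its predicted (row, col) coordinates in the original image's patch grid. In an authentic image every patch decodes to its own grid cell. A crop shifts all patches by one common offset (or shrinks the grid), and a spliced patch disagrees with that common offset. Downsampling, which would also rescale the decoded coordinates, is not handled here. The names `check_positions`, `Verdict` and the tolerance `tol` are illustrative only.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Verdict:
    row_offset: float          # estimated shift between observed and original grid (rows)
    col_offset: float          # estimated shift between observed and original grid (cols)
    cropped: bool              # True if the observed grid is shifted or smaller than the original
    spliced_patches: list      # observed cells whose decoded position disagrees with the shift


def check_positions(decoded, orig_rows, orig_cols, tol=0.5):
    """Toy crop/splice check (hypothetical helper, not from the paper).

    decoded[i][j] = (r, c) is an ASSUMED decoder output: the original-grid
    coordinates, in patch units, predicted for the patch at observed cell (i, j).
    """
    n_rows, n_cols = len(decoded), len(decoded[0])
    cells = [(i, j) for i in range(n_rows) for j in range(n_cols)]

    # An authentic or merely cropped image satisfies r - i = dr and c - j = dc
    # for one shared translation; the median makes the estimate robust to a
    # minority of spliced-in patches.
    dr = median(decoded[i][j][0] - i for i, j in cells)
    dc = median(decoded[i][j][1] - j for i, j in cells)

    # Patches that do not fit the shared translation are flagged as spliced.
    spliced = [
        (i, j) for i, j in cells
        if abs(decoded[i][j][0] - i - dr) > tol or abs(decoded[i][j][1] - j - dc) > tol
    ]

    # A nonzero shift means content was removed at the top/left; a smaller grid
    # means content was removed at the bottom/right.
    cropped = (abs(dr) > tol or abs(dc) > tol
               or n_rows < orig_rows or n_cols < orig_cols)
    return Verdict(dr, dc, cropped, spliced)


if __name__ == "__main__":
    # Original: 4x4 patches. Observed: 3x3 crop starting at original cell (1, 1),
    # with the top-left patch replaced by one decoding to an unrelated position.
    observed = [[(i + 1, j + 1) for j in range(3)] for i in range(3)]
    observed[0][0] = (3.0, 0.0)
    print(check_positions(observed, orig_rows=4, orig_cols=4))
```

In the usage example, the median recovers the (1, 1) crop offset despite the foreign patch, and only cell (0, 0) is flagged as spliced. A learned decoder would output noisy, continuous coordinates, which is why the sketch compares against a tolerance rather than testing for exact equality.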
