Tobias Grüning

University of Rostock - CITlab

Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes

Oct 13, 2021

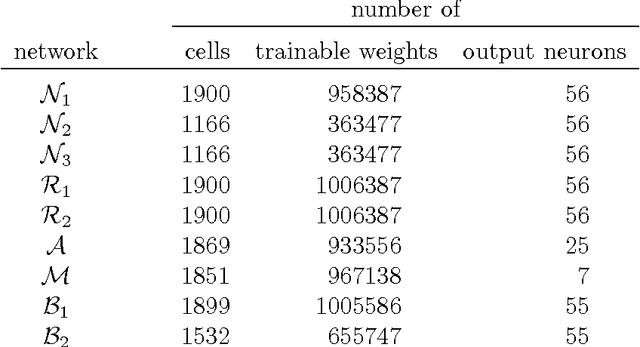

Abstract: In contrast to Connectionist Temporal Classification (CTC) approaches, Sequence-To-Sequence (S2S) models for Handwritten Text Recognition (HTR) suffer from errors such as skipped or repeated words, which often occur at the end of a sequence. In this paper, to combine the best of both approaches, we propose to use the CTC-Prefix-Score during S2S decoding. During beam search, paths that are invalid according to the CTC confidence matrix are thereby penalised. Our network architecture is composed of a Convolutional Neural Network (CNN) as visual backbone, bidirectional Long-Short-Term-Memory-Cells (LSTMs) as encoder, and a decoder which is a Transformer with inserted mutual attention layers. The CTC confidences are computed on the encoder while the Transformer is only used for character-wise S2S decoding. We evaluate this setup on three HTR data sets: IAM, Rimes, and StAZH. On IAM, we achieve a competitive Character Error Rate (CER) of 2.95% when pretraining our model on synthetic data and including a character-based language model for contemporary English. Compared to other state-of-the-art approaches, our model requires about 10-20 times fewer parameters. Our implementation is available on GitHub: https://github.com/Planet-AI-GmbH/tfaip-hybrid-ctc-s2s.
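To make the idea of a CTC prefix score concrete, the following is a minimal sketch and not the authors' implementation. It computes the probability that a CTC confidence matrix assigns to all label sequences starting with a given prefix, using the standard forward recursion over blank and non-blank endings. The names `ctc_prefix_score` and `rescore`, the blank index `0`, and the interpolation `weight` are assumptions made for illustration; the abstract does not specify how the two scores are combined.

```python
import math

BLANK = 0  # assumed index of the CTC blank label


def ctc_prefix_score(probs, prefix):
    """Probability mass of all CTC paths whose collapsed output starts with `prefix`.

    probs:  list of T >= 1 rows, each a per-frame probability distribution over labels.
    prefix: list of non-blank label indices.
    """
    T = len(probs)
    # Forward variables for the empty prefix: it can only "end" in blanks.
    g_n = [0.0] * T
    g_b = []
    running = 1.0
    for t in range(T):
        running *= probs[t][BLANK]
        g_b.append(running)

    score, last = 1.0, None  # every path starts with the empty prefix
    for c in prefix:
        h_n = [0.0] * T  # extended prefix ends in the non-blank label c at frame t
        h_b = [0.0] * T  # extended prefix ends in a blank at frame t
        if last is None:
            h_n[0] = probs[0][c]
        psi = h_n[0]
        for t in range(1, T):
            # Ways to start emitting c at frame t; a repeated label needs a blank between.
            phi = g_b[t - 1] + (0.0 if c == last else g_n[t - 1])
            h_n[t] = (h_n[t - 1] + phi) * probs[t][c]
            h_b[t] = (h_b[t - 1] + h_n[t - 1]) * probs[t][BLANK]
            psi += phi * probs[t][c]
        g_n, g_b, last, score = h_n, h_b, c, psi
    return score


def rescore(s2s_log_prob, ctc_prefix_prob, weight=0.5):
    """Hypothetical log-linear combination of S2S and CTC scores for one beam entry."""
    if ctc_prefix_prob <= 0.0:
        return float("-inf")  # prefix is impossible under the CTC matrix
    return (1.0 - weight) * s2s_log_prob + weight * math.log(ctc_prefix_prob)
```

In a beam search, each candidate extension of a hypothesis would be scored with `rescore`, so that an extension the CTC matrix considers impossible (for example, a repeated word with no supporting frames) drops out of the beam.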

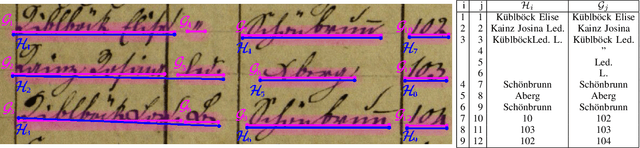

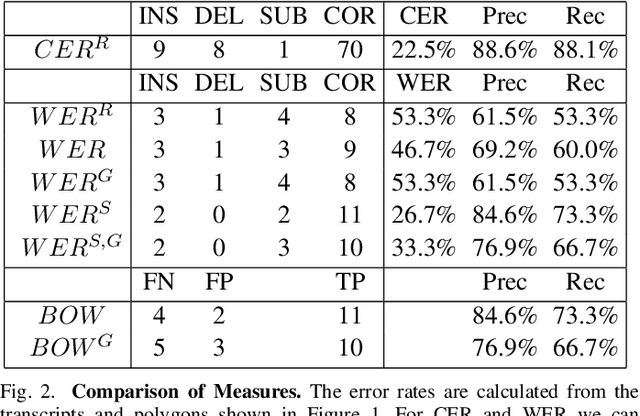

End-To-End Measure for Text Recognition

Aug 26, 2019

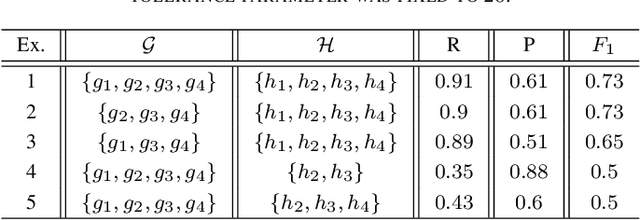

Abstract: Measuring the performance of text recognition and text line detection engines is an important step to objectively compare systems and their configuration. There exist well-established measures for both tasks separately. However, there is no sophisticated evaluation scheme to measure the quality of a combined text line detection and text recognition system. The F-measure on word level is a well-known methodology, which is sometimes used in this context. Nevertheless, it does not take into account the alignment of hypothesis and ground truth text and can lead to deceptive results. Since users of automatic information retrieval pipelines in the context of text recognition are mainly interested in the end-to-end performance of a given system, there is a strong need for such a measure. Hence, we present a measure to evaluate the quality of an end-to-end text recognition system. The basis for this measure is the well-established and widely used character error rate, which is limited, in its original form, to aligned hypothesis and ground truth texts. The proposed measure is flexible in that it can be configured to penalize different reading orders between the hypothesis and ground truth and can take into account the geometric position of the text lines. Additionally, it can ignore over- and under-segmentation of text lines. With these parameters, the measure can be tuned to best fit the user's own needs.
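For reference, the plain character error rate that the abstract builds on can be sketched as the Levenshtein distance between hypothesis and ground truth, divided by the ground truth length. This is only the baseline for already aligned texts, not the proposed end-to-end measure; the function names `levenshtein` and `cer` are illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of character insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def cer(hypothesis: str, ground_truth: str) -> float:
    """Character error rate of an aligned hypothesis against its ground truth."""
    if not ground_truth:
        raise ValueError("ground truth must not be empty")
    return levenshtein(hypothesis, ground_truth) / len(ground_truth)
```

Because this baseline compares two single strings, a hypothesis whose lines are correct but in a different order than the ground truth is penalised heavily, which is the limitation the configurable measure addresses.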

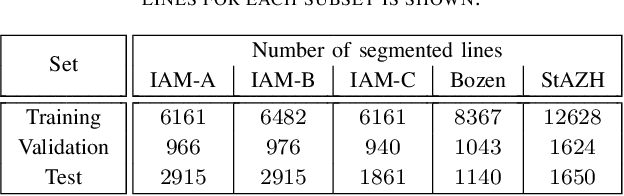

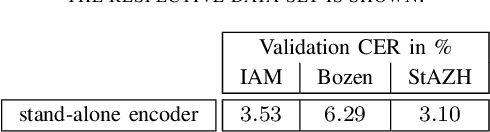

Evaluating Sequence-to-Sequence Models for Handwritten Text Recognition

Mar 18, 2019

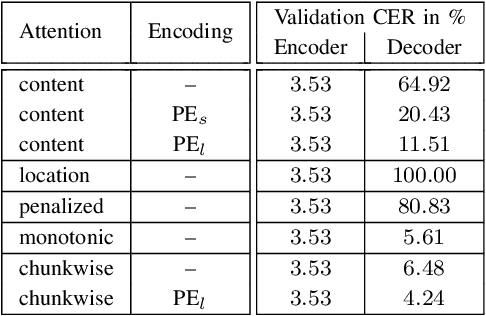

Abstract: Encoder-decoder models have become an effective approach for sequence learning tasks like machine translation, image captioning and speech recognition, but have yet to show competitive results for handwritten text recognition. To this end, we propose an attention-based sequence-to-sequence model. It combines a convolutional neural network as a generic feature extractor with a recurrent neural network to encode both the visual information and the temporal context between characters in the input image, and uses a separate recurrent neural network to decode the actual character sequence. We make experimental comparisons between various attention mechanisms and positional encodings, in order to find an appropriate alignment between the input and output sequence. The model can be trained end-to-end and the optional integration of a hybrid loss allows the encoder to retain an interpretable and usable output, e.g. for keyword spotting purposes without prior indexing, if desired. We achieve competitive results on the IAM and ICFHR2016 READ data sets compared to the state-of-the-art without the use of a language model, and we significantly improve over any recent sequence-to-sequence approaches.

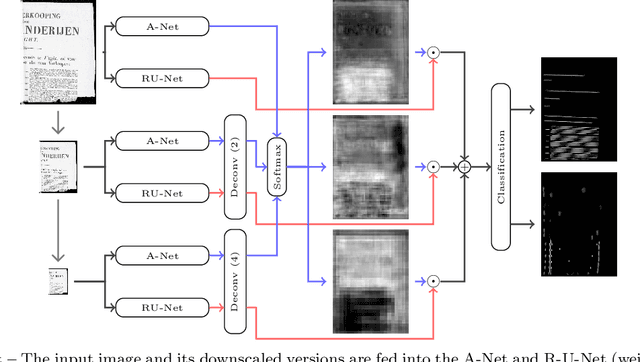

A Two-Stage Method for Text Line Detection in Historical Documents

Feb 09, 2018

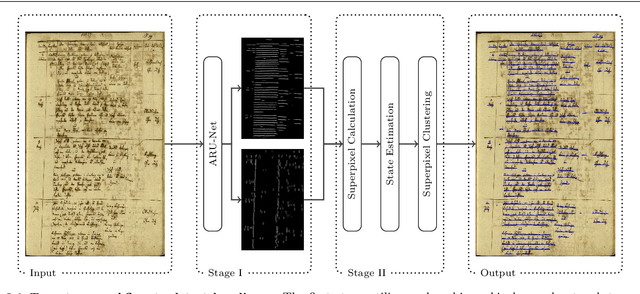

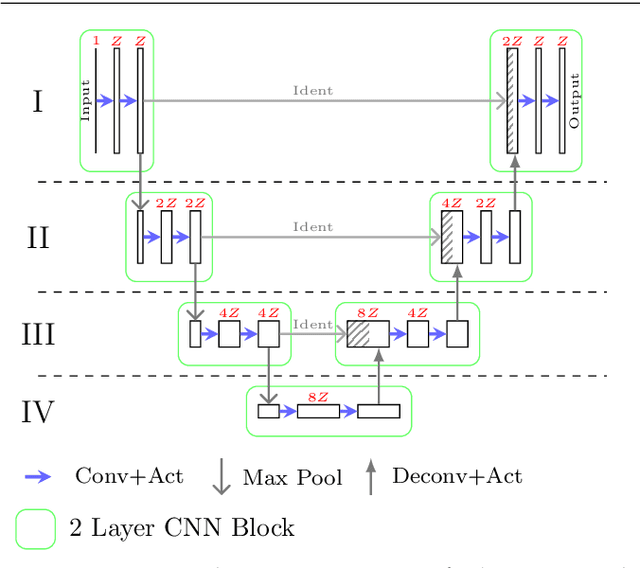

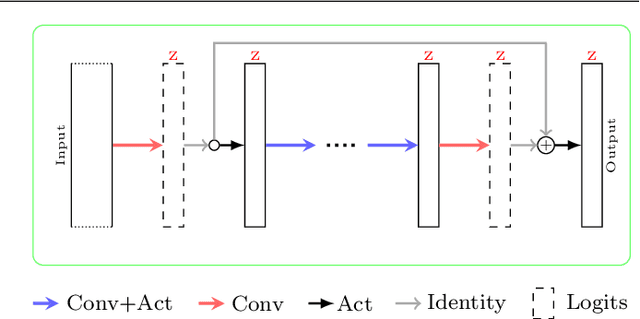

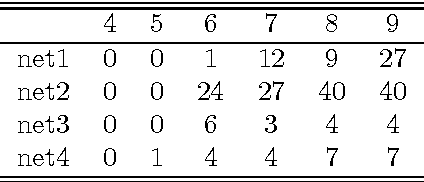

Abstract: This work presents a two-stage text line detection method for historical documents. In a first stage, a deep neural network called ARU-Net assigns each pixel to one of three classes: baseline, separator or other. The separator class marks the beginning and end of each text line. The ARU-Net is trainable from scratch with manageably few manually annotated example images (fewer than 50). This is achieved by utilizing data augmentation strategies. The network predictions are used as input for the second stage, which performs a bottom-up clustering to build baselines. The developed method is capable of handling complex layouts as well as curved and arbitrarily oriented text lines. It substantially outperforms current state-of-the-art approaches. For example, for the complex track of the cBAD: ICDAR2017 Competition on Baseline Detection the F-value is increased from 0.859 to 0.922. The framework to train and run the ARU-Net is open source.

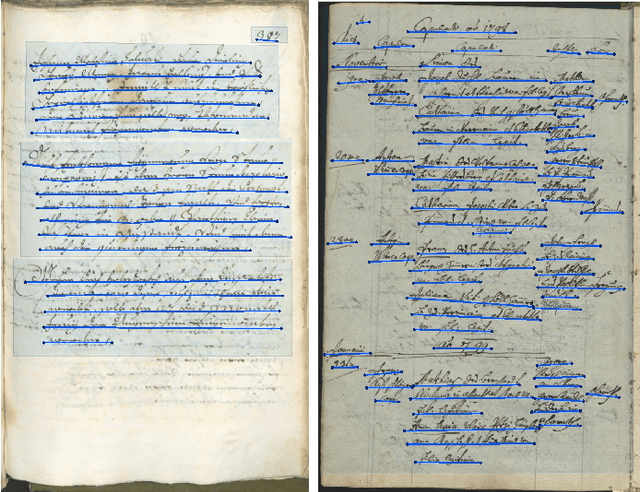

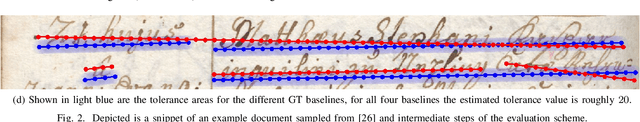

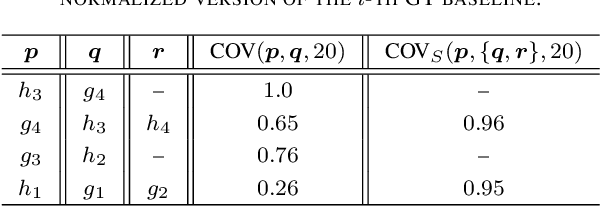

READ-BAD: A New Dataset and Evaluation Scheme for Baseline Detection in Archival Documents

Dec 11, 2017

Abstract: Text line detection is crucial for any application associated with Automatic Text Recognition or Keyword Spotting. Modern algorithms perform well on well-established datasets since they either comprise clean data or simple/homogeneous page layouts. We have collected and annotated 2036 archival document images from different locations and time periods. The dataset contains varying page layouts and degradations that challenge text line segmentation methods. Well-established text line segmentation evaluation schemes such as the Detection Rate or Recognition Accuracy demand binarized data that is annotated on a pixel level. Producing ground truth by these means is laborious and not needed to determine a method's quality. In this paper we propose a new evaluation scheme that is based on baselines. The proposed scheme has no need for binarization and it can handle skewed as well as rotated text lines. The ICDAR 2017 Competition on Baseline Detection and the ICDAR 2017 Competition on Layout Analysis for Challenging Medieval Manuscripts used this evaluation scheme. Finally, we present results achieved by a recently published text line detection algorithm.

CITlab ARGUS for historical handwritten documents

May 26, 2016

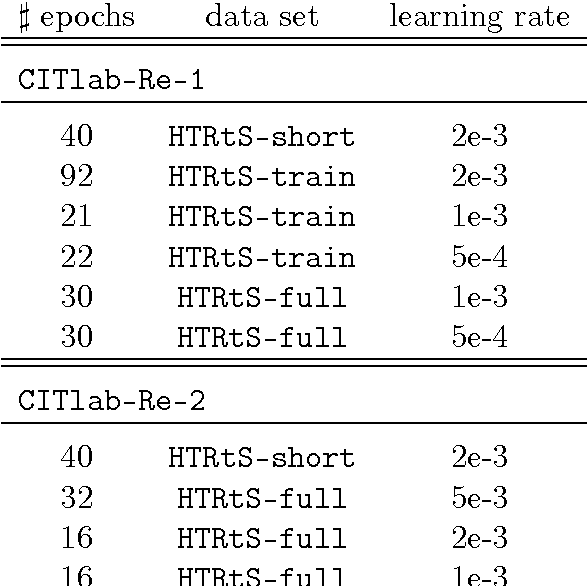

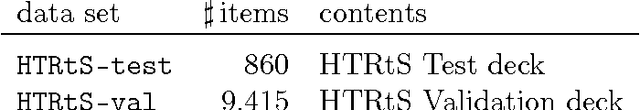

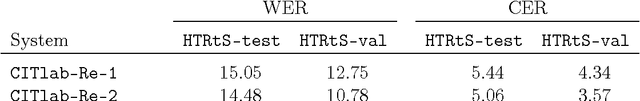

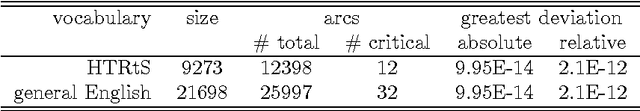

Abstract: We describe CITlab's recognition system for the HTRtS competition attached to the 13th International Conference on Document Analysis and Recognition, ICDAR 2015. The task comprises the recognition of historical handwritten documents. The core algorithms of our system are based on multi-dimensional recurrent neural networks (MDRNN) and connectionist temporal classification (CTC). The underlying software modules as well as the basic utility technologies are essentially powered by PLANET's ARGUS framework for intelligent text recognition and image processing.

Regular expressions for decoding of neural network outputs

Feb 22, 2016

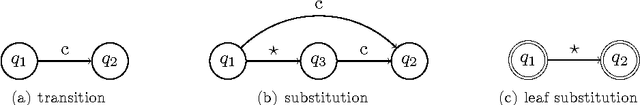

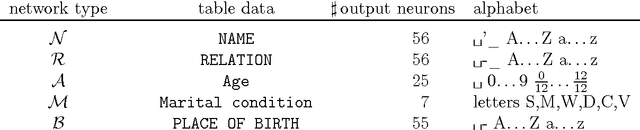

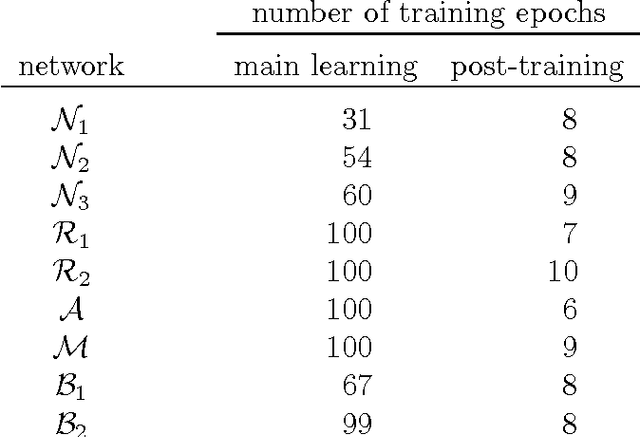

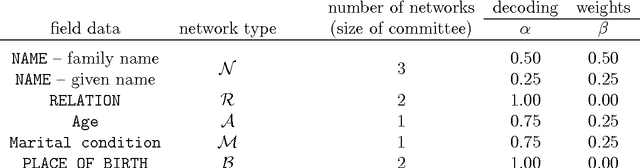

Abstract: This article proposes a convenient tool for decoding the output of neural networks trained by Connectionist Temporal Classification (CTC) for handwritten text recognition. We use regular expressions to describe the complex structures expected in the writing. The corresponding finite automata are employed to build a decoder. We analyze theoretically which calculations are relevant and which can be avoided. A great speed-up results from an approximation. We conclude that the approximation most likely fails if the regular expression does not match the ground truth, which is not harmful for many applications since the low probability will be even underestimated. The proposed decoder is very efficient compared to other decoding methods. The variety of applications ranges from information retrieval to full text recognition. We refer to applications where we integrated the proposed decoder successfully.

CITlab ARGUS for historical data tables

Dec 15, 2014

Abstract: We describe CITlab's recognition system for the ANWRESH-2014 competition attached to the 14th International Conference on Frontiers in Handwriting Recognition, ICFHR 2014. The task comprises word recognition from segmented historical documents. The core components of our system are based on multi-dimensional recurrent neural networks (MDRNN) and connectionist temporal classification (CTC). The underlying software modules as well as the basic utility technologies are essentially powered by PLANET's ARGUS framework for intelligent text recognition and image processing.
