Tianrun Gao

GenCP: Towards Generative Modeling Paradigm of Coupled Physics

Jan 27, 2026

Abstract: Real-world physical systems are inherently complex, often involving the coupling of multiple physics, making their simulation both highly valuable and challenging. Many mainstream approaches struggle when dealing with decoupled data. They also suffer from low efficiency and fidelity in strongly coupled spatio-temporal physical systems. Here we propose GenCP, a novel and elegant generative paradigm for coupled multiphysics simulation. By formulating coupled-physics modeling as a probability modeling problem, our key innovation is to integrate probability density evolution in generative modeling with iterative multiphysics coupling, thereby enabling training on data from decoupled simulations and inference of coupled physics during sampling. We also use operator-splitting theory in the space of probability evolution to establish error controllability guarantees for this "conditional-to-joint" sampling scheme. We evaluate our paradigm on a synthetic setting and three challenging multiphysics scenarios to demonstrate both the principled insight and the superior application performance of GenCP. Code is available at github.com/AI4Science-WestlakeU/GenCP.
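As a rough illustration of the operator-splitting idea the abstract refers to, the sketch below advances a toy two-variable coupled system by alternating two sub-updates, each of which changes one variable while the other is held fixed. This is a generic first-order (Lie) splitting on a hand-picked ODE, not GenCP's sampler; the function names `lie_splitting` and `splitting_error` and the toy system are assumptions made for illustration.

```python
import math


def lie_splitting(u0, v0, t_end, n_steps):
    """Integrate du/dt = -v, dv/dt = u by alternating two sub-updates per step."""
    dt = t_end / n_steps
    u, v = u0, v0
    for _ in range(n_steps):
        u = u - dt * v  # sub-step 1: advance u with v held fixed
        v = v + dt * u  # sub-step 2: advance v using the freshly updated u
    return u, v


def splitting_error(n_steps, t_end=1.0):
    """Distance to the exact solution (cos t, sin t) started from (1, 0)."""
    u, v = lie_splitting(1.0, 0.0, t_end, n_steps)
    return math.hypot(u - math.cos(t_end), v - math.sin(t_end))


coarse = splitting_error(10)
fine = splitting_error(1000)
```

In this toy case the splitting error shrinks as the step count grows, which is the kind of controllable error that splitting analyses aim to bound.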

RealPDEBench: A Benchmark for Complex Physical Systems with Real-World Data

Jan 05, 2026

Abstract: Predicting the evolution of complex physical systems remains a central problem in science and engineering. Despite rapid progress in scientific Machine Learning (ML) models, a critical bottleneck is the scarcity of real-world data, which is expensive to collect, so most current models are trained and validated on simulated data. Beyond limiting the development and evaluation of scientific ML, this gap also hinders research into essential tasks such as sim-to-real transfer. We introduce RealPDEBench, the first benchmark for scientific ML that integrates real-world measurements with paired numerical simulations. RealPDEBench consists of five datasets, three tasks, eight metrics, and ten baselines. We first present five real-world measured datasets with paired simulated datasets across different complex physical systems. We further define three tasks, which allow comparisons between real-world and simulated data and facilitate the development of methods to bridge the two. Moreover, we design eight evaluation metrics, spanning data-oriented and physics-oriented metrics, and finally benchmark ten representative baselines, including state-of-the-art models, pretrained PDE foundation models, and a traditional method. Experiments reveal significant discrepancies between simulated and real-world data, while showing that pretraining with simulated data consistently improves both accuracy and convergence. With this work, we hope to provide insights from real-world data, advancing scientific ML toward bridging the sim-to-real gap and real-world deployment. Our benchmark, datasets, and instructions are available at https://realpdebench.github.io/.

EqCollide: Equivariant and Collision-Aware Deformable Objects Neural Simulator

Jun 06, 2025

Abstract: Simulating collisions of deformable objects is a fundamental yet challenging task due to the complexity of modeling solid mechanics and multi-body interactions. Existing data-driven methods often suffer from a lack of equivariance to physical symmetries, inadequate handling of collisions, and limited scalability. Here we introduce EqCollide, the first end-to-end equivariant neural fields simulator for deformable objects and their collisions. We propose an equivariant encoder to map object geometry and velocity into latent control points. A subsequent equivariant Graph Neural Network-based Neural Ordinary Differential Equation models the interactions among control points via collision-aware message passing. To reconstruct velocity fields, we query a neural field conditioned on control point features, enabling continuous and resolution-independent motion predictions. Experimental results show that EqCollide achieves accurate, stable, and scalable simulations across diverse object configurations, with 24.34% to 35.82% lower rollout MSE than the best-performing baseline model. Furthermore, our model generalizes to more colliding objects and extended temporal horizons, and remains robust to inputs transformed by group actions.

ProjectEval: A Benchmark for Programming Agents Automated Evaluation on Project-Level Code Generation

Mar 10, 2025

Abstract: Recently, LLM agents have made rapid progress in improving their programming capabilities. However, existing benchmarks cannot automatically evaluate from the user's perspective, and their results on LLM agents' code generation capabilities lack explainability. Thus, we introduce ProjectEval, a new benchmark for the automated evaluation of LLM agents' project-level code generation by simulating user interaction. ProjectEval is constructed by an LLM with human review. It provides inputs at three different levels, in natural language or as code skeletons. ProjectEval can evaluate the generated projects by simulating user interaction for execution, and by code similarity through existing objective indicators. Through ProjectEval, we find that systematic engineering of project code, overall understanding of the project, and comprehensive analysis capability are the keys for LLM agents to produce practical projects. Our findings and benchmark provide valuable insights for developing more effective programming agents that can be deployed in future real-world production.

SA-FedLora: Adaptive Parameter Allocation for Efficient Federated Learning with LoRA Tuning

May 15, 2024

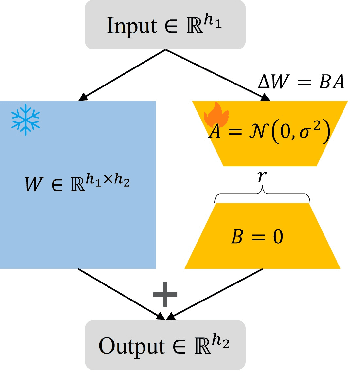

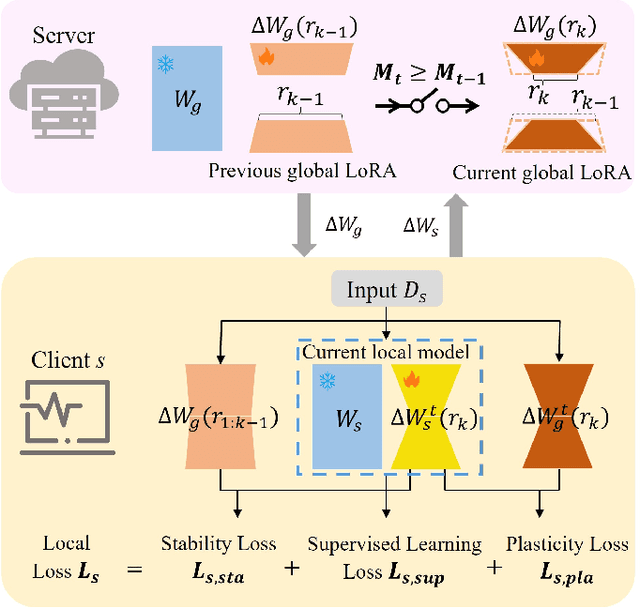

Abstract: Fine-tuning large-scale pre-trained models via transfer learning is an important emerging paradigm for a wide range of downstream tasks, with performance heavily reliant on extensive data. Federated learning (FL), as a distributed framework, provides a secure solution to train models on local datasets while safeguarding raw sensitive data. However, FL networks encounter high communication costs due to the massive number of parameters in large-scale pre-trained models, necessitating parameter-efficient methods. Notably, parameter-efficient fine-tuning, such as Low-Rank Adaptation (LoRA), has shown remarkable success in fine-tuning pre-trained models. However, prior research indicates that a fixed parameter budget may be prone to overfitting or slower convergence. To address this challenge, we propose a Simulated Annealing-based Federated Learning with LoRA tuning (SA-FedLoRA) approach that reduces trainable parameters. Specifically, SA-FedLoRA comprises two stages: initiating and annealing. (1) In the initiating stage, we implement a parameter regularization approach during the early rounds of aggregation, aiming to mitigate client drift and accelerate convergence for the subsequent tuning. (2) In the annealing stage, we allocate a higher parameter budget during the early 'heating' phase and then gradually shrink the budget until the 'cooling' phase. This strategy not only facilitates convergence to the global optimum but also reduces communication costs. Experimental results demonstrate that SA-FedLoRA is an efficient FL method, achieving superior performance to FedAvg and reducing communication parameters by up to 93.62%.
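The shrinking parameter budget in the annealing stage can be pictured with a small sketch. The abstract does not state the actual schedule, so the linear decay, the rank bounds `r_max` and `r_min`, and the helper names `annealed_rank` and `lora_params` below are all assumptions chosen for illustration. The parameter count uses the standard LoRA factorization, where a rank-r adapter on a d_in by d_out weight has r * (d_in + d_out) trainable parameters.

```python
def annealed_rank(round_idx, total_rounds, r_max=16, r_min=2):
    """Hypothetical linear schedule: high rank early ('heating'), low rank late ('cooling')."""
    if total_rounds < 2:
        return r_max
    frac = min(max(round_idx / (total_rounds - 1), 0.0), 1.0)
    return round(r_max - frac * (r_max - r_min))


def lora_params(rank, d_in, d_out):
    """Trainable parameters of one LoRA adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


# Rank per communication round, and the per-layer parameters each client would send.
schedule = [annealed_rank(t, 10) for t in range(10)]
payload = [lora_params(r, 768, 768) for r in schedule]
```

Because the payload scales linearly with the rank, lowering the rank in later rounds directly lowers the number of parameters communicated per round in this sketch.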

Self adaptive global-local feature enhancement for radiology report generation

Nov 21, 2022

Abstract: Automated radiology report generation aims at automatically generating a detailed description of medical images, which can greatly alleviate the workload of radiologists and provide better medical services to remote areas. Most existing works pay attention to the holistic impression of medical images, failing to utilize important anatomy information. However, in actual clinical practice, radiologists usually locate important anatomical structures, then look for signs of abnormalities in certain structures and reason about the underlying disease. In this paper, we propose a novel framework, AGFNet, to dynamically fuse global and anatomy region features to generate multi-grained radiology reports. First, we extract important anatomy region features and global features of the input chest X-ray (CXR). Then, with the region features and the global features as input, our proposed self-adaptive fusion gate module dynamically fuses multi-granularity information. Finally, the captioning generator generates the radiology reports from the multi-granularity features. Experimental results show that our model achieves state-of-the-art performance on two benchmark datasets, IU X-Ray and MIMIC-CXR. Further analyses also prove that our model is able to leverage the multi-grained information from radiology images and texts so as to help generate more accurate reports.
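One common way to fuse two feature vectors with a learned gate is sketched below. The abstract does not specify how AGFNet's fusion gate is built, so the scalar sigmoid gate, the convex combination, and the names `gated_fusion`, `weights`, and `bias` are assumptions made for illustration only.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(global_feat, region_feat, weights, bias=0.0):
    """Blend two equal-length feature vectors with a scalar gate in (0, 1).

    The gate is computed from the concatenated features, so the mix between
    global and region information adapts to each input.
    """
    concat = list(global_feat) + list(region_feat)
    gate = sigmoid(sum(w * x for w, x in zip(weights, concat)) + bias)
    fused = [gate * g + (1.0 - gate) * r for g, r in zip(global_feat, region_feat)]
    return fused, gate


# With all-zero weights the gate is neutral and the result is a plain average.
fused, gate = gated_fusion([1.0, 3.0], [3.0, 1.0], weights=[0.0] * 4)
```

A large positive bias pushes the gate toward the global features, and a large negative bias pushes it toward the region features.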
