Tianlei Jin

Modeling Output-Level Task Relatedness in Multi-Task Learning with Feedback Mechanism

Apr 01, 2024

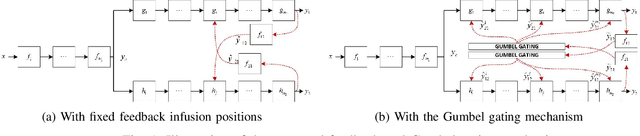

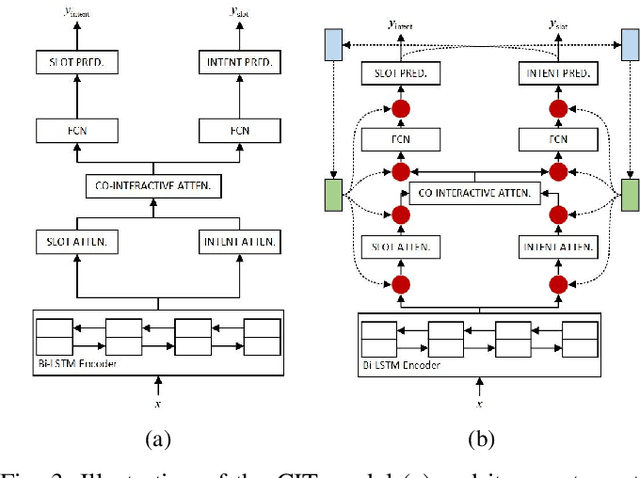

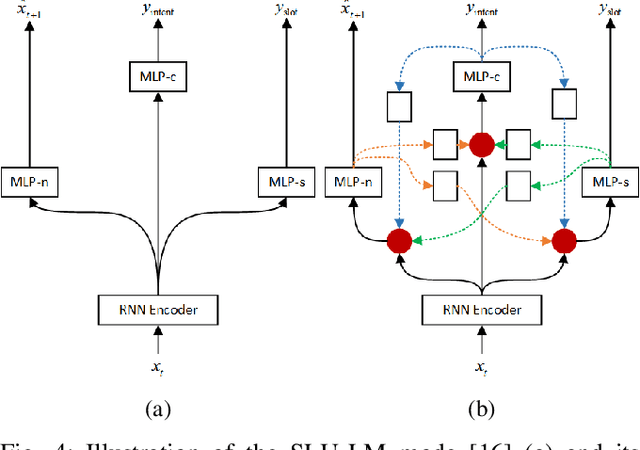

Abstract: Multi-task learning (MTL) is a paradigm that simultaneously learns multiple tasks by sharing information at different levels, enhancing the performance of each individual task. While previous research has primarily focused on feature-level or parameter-level task relatedness, and proposed various model architectures and learning algorithms to improve learning performance, we aim to explore output-level task relatedness. This approach introduces a posteriori information into the model, considering that different tasks may produce correlated outputs with mutual influences. We achieve this by incorporating a feedback mechanism into MTL models, where the output of one task serves as a hidden feature for another task, thereby transforming a static MTL model into a dynamic one. To ensure the training process converges, we introduce a convergence loss that measures the trend of a task's outputs during each iteration. Additionally, we propose a Gumbel gating mechanism to determine the optimal projection of feedback signals. We validate the effectiveness of our method and evaluate its performance through experiments conducted on several baseline models in spoken language understanding.
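The three ingredients named in the abstract (output feedback between tasks, a convergence measure over iterations, and a Gumbel gate on the feedback signal) can be illustrated with a toy sketch. This is not the authors' implementation: the abstract gives no formulas, so the two scalar "tasks", the 0.5 feedback weight, the function names `gumbel_softmax` and `run_feedback`, and the convergence penalty (mean absolute output change after the first step) are all hypothetical choices made for illustration. Only the Gumbel-softmax sampling step is the standard, well-known relaxation.

```python
import math
import random


def gumbel_softmax(logits, tau=1.0, rng=random):
    """Draw a relaxed one-hot sample from `logits` (standard Gumbel-softmax trick)."""
    noisy = []
    for logit in logits:
        # Clamp the uniform sample away from 0 and 1 so both logs are defined.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / tau)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def run_feedback(x, gate_logits, steps=5, tau=0.5, seed=0):
    """Toy two-task loop in which each task's output is fed back to the other.

    ASSUMPTIONS (not from the paper): scalar tasks, tanh activations, a fixed
    0.5 feedback weight, and a two-way gate [use feedback, ignore feedback].
    """
    rng = random.Random(seed)
    out_a, out_b = 0.0, 0.0
    deltas = []
    for _ in range(steps):
        gate = gumbel_softmax(gate_logits, tau, rng)
        use_feedback = gate[0]
        # Each task sees the input plus the gated output of the other task.
        new_a = math.tanh(x + 0.5 * use_feedback * out_b)
        new_b = math.tanh(-x + 0.5 * use_feedback * out_a)
        # Track how much the outputs moved in this iteration.
        deltas.append(abs(new_a - out_a) + abs(new_b - out_b))
        out_a, out_b = new_a, new_b
    # Hypothetical stand-in for a convergence loss: average output change
    # after the first iteration (small when the loop has settled).
    later = deltas[1:]
    penalty = sum(later) / max(len(later), 1)
    return (out_a, out_b), deltas, penalty
```

Calling `run_feedback(0.3, [0.0, 0.0])` runs the loop with an undecided gate, while strongly negative first logits such as `[-50.0, 50.0]` effectively switch the feedback off, so the outputs stop changing after the first step and the penalty is close to zero. In a trained model the gate logits would be learned parameters and the penalty would be added to the task losses; both of those design choices are assumptions here.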

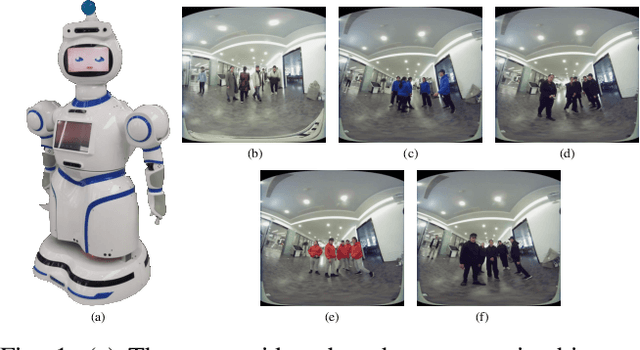

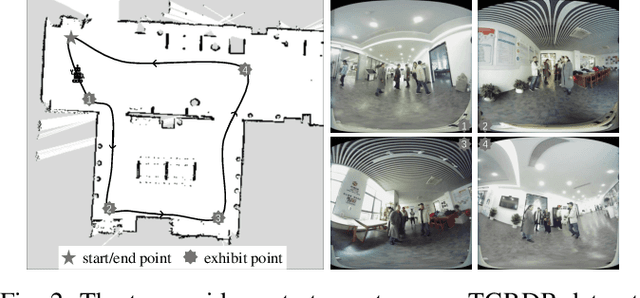

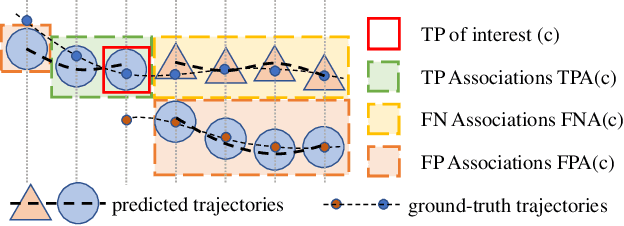

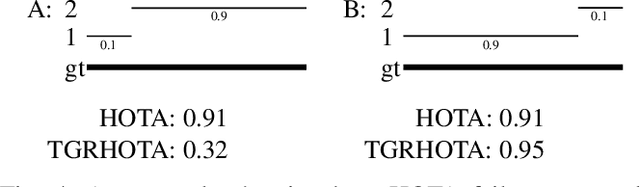

TGRMPT: A Head-Shoulder Aided Multi-Person Tracker and a New Large-Scale Dataset for Tour-Guide Robot

Jul 08, 2022

Abstract: A service robot that serves safely and politely must robustly track the people around it, and this is especially true for a Tour-Guide Robot (TGR). However, existing multi-object tracking (MOT) and multi-person tracking (MPT) methods are not applicable to TGR for two reasons: (1) relevant large-scale datasets are lacking; (2) applicable metrics for evaluating trackers are lacking. In this work, we target the visual perceptual tasks for TGR and present the TGRDB dataset, a novel large-scale multi-person tracking dataset containing roughly 5.6 hours of annotated videos and over 450 long-term trajectories. In addition, we propose a more applicable metric for evaluating trackers on our dataset. As part of our work, we present TGRMPT, a novel MPT system that incorporates information from both the head-shoulder region and the whole body, and achieves state-of-the-art performance. We have released our code and dataset at https://github.com/wenwenzju/TGRMPT.
