Shunda Hu

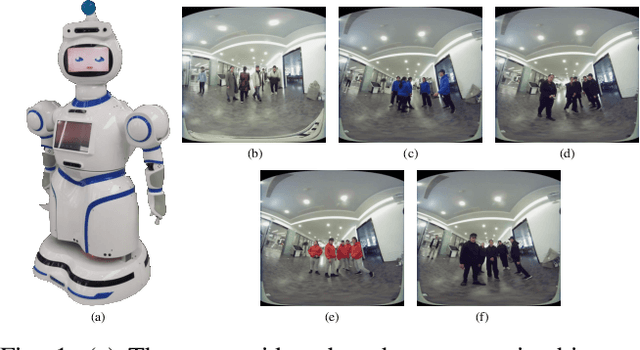

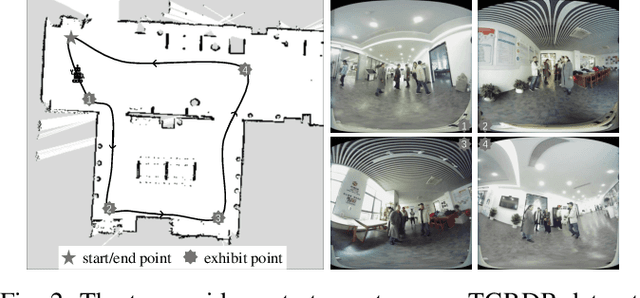

TGRMPT: A Head-Shoulder Aided Multi-Person Tracker and a New Large-Scale Dataset for Tour-Guide Robot

Jul 08, 2022

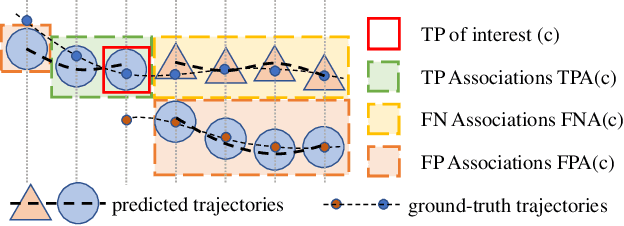

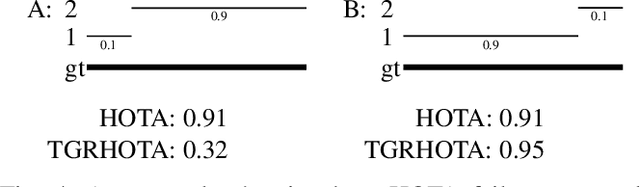

Abstract: A service robot that serves safely and politely must robustly track the people around it, and this is especially true for a Tour-Guide Robot (TGR). However, existing multi-object tracking (MOT) and multi-person tracking (MPT) methods are not applicable to TGR for two reasons: (1) relevant large-scale datasets are lacking; (2) applicable metrics for evaluating trackers are lacking. In this work, we target the visual perception tasks for TGR and present the TGRDB dataset, a novel large-scale multi-person tracking dataset containing roughly 5.6 hours of annotated video and over 450 long-term trajectories. In addition, we propose a more applicable metric for evaluating trackers on our dataset. As part of our work, we present TGRMPT, a novel MPT system that incorporates information from both the head-shoulder region and the whole body and achieves state-of-the-art performance. We have released our code and dataset at https://github.com/wenwenzju/TGRMPT.
