Thomas Trappenberg

Platelet enumeration in dense aggregates

May 05, 2025

Abstract:Identifying and counting blood components such as red blood cells, various types of white blood cells, and platelets is a critical task for healthcare practitioners. Deep learning approaches, particularly convolutional neural networks (CNNs) using supervised learning strategies, have shown considerable success for such tasks. However, CNN-based architectures such as U-Net often struggle to accurately identify platelets due to their size and the high variability of their features. To address these challenges, researchers have commonly employed strategies such as class-weighted loss functions, which have demonstrated some success. However, this does not address the more significant challenge of platelets' variability in size and their tendency to form aggregates and associate with other blood components. In this study, we explored an alternative approach by investigating the role of convolutional kernels in mitigating these issues. We also assigned separate classes to singular platelets and platelet aggregates and performed semantic segmentation using various U-Net architectures for identifying platelets. We then evaluated and compared two common methods (the pixel area method and connected component analysis) for counting platelets and proposed an alternative approach specialized for single platelets and platelet aggregates. Our experiments showed significant improvements in the identification of platelets, highlighting the importance of optimizing convolutional operations and class designations. We show that the common practice of pixel area-based counting often overestimates platelet counts, whereas the proposed method presented in this work offers significant improvements. We discuss these methods of counting from segmentation masks in detail.
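The two common counting methods named in this abstract can be sketched in a few lines. This is a minimal illustration only: the toy mask, the `typical_area` value, and both function names are assumptions made for the example, and the paper's own proposed counting method is not reproduced here.

```python
from collections import deque

def count_by_area(mask, typical_area):
    """Pixel area method: total foreground pixels divided by an assumed
    area (in pixels) of a single platelet."""
    total = sum(sum(row) for row in mask)
    return round(total / typical_area)

def connected_components(mask):
    """Return the pixel count of each 4-connected foreground component,
    in raster-scan order of discovery."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue = deque([(r, c)])
                size = 0
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                sizes.append(size)
    return sizes

# Hypothetical binary platelet mask: three blobs of 4, 1, and 6 pixels.
mask = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
]
sizes = connected_components(mask)
component_count = len(sizes)
area_count = count_by_area(mask, typical_area=3)  # assumed platelet area
```

On this toy mask the two methods disagree because the blobs vary in size: the area method depends entirely on the assumed `typical_area`, while connected component analysis counts each blob once, including any blob that is really an aggregate of several platelets.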

Masked strategies for images with small objects

Apr 24, 2025

Abstract:Detecting and classifying small blood components is a significant challenge in hematology analytics, particularly when objects exist as small, pixel-sized entities in a large context of similar objects. Deep learning approaches using supervised models with pre-trained weights, such as residual networks and vision transformers (ViTs), have demonstrated success for many applications. Unfortunately, when applied to images outside the domain of the learned representations, these methods often yield less than acceptable performance. One strategy to overcome this is to use self-supervised models, in which representations are learned and the resulting weights are then applied to downstream applications. Recently, masked autoencoders (MAEs) have proven effective for obtaining representations that capture global context information. By masking regions of an image and having the model learn to reconstruct both the masked and non-masked regions, the learned weights can be used for various applications. However, if the objects in an image are smaller than the mask, the global context information is lost, making it almost impossible to reconstruct the image. In this study, we investigated the effect of mask ratios and patch sizes for blood components using an MAE to obtain learned ViT encoder representations. We then applied the encoder weights to train a U-Net Transformer for semantic segmentation to obtain both local and global contextual information. Our experimental results demonstrate that both smaller mask ratios and smaller patch sizes improve the reconstruction of images using an MAE. We also show the results of semantic segmentation with and without pre-trained weights, where smaller-sized blood components benefited from pre-training. Overall, our proposed method offers an efficient and effective strategy for the segmentation and classification of small objects.
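The interaction between patch size, mask ratio, and object size described in this abstract can be illustrated with a small sketch of random patch masking. The function names, the square-image assumption, and the example sizes (224-pixel image, 16-pixel patches, 0.75 mask ratio) are illustrative assumptions, not values taken from the paper.

```python
import random

def random_patch_mask(image_size, patch_size, mask_ratio, seed=0):
    """Return a square grid of booleans, one per patch; True means masked.

    Assumes a square image whose side is divisible by patch_size.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    grid = image_size // patch_size
    n_patches = grid * grid
    n_masked = int(n_patches * mask_ratio)
    rng = random.Random(seed)
    masked = set(rng.sample(range(n_patches), n_masked))
    return [[(r * grid + c) in masked for c in range(grid)]
            for r in range(grid)]

def object_fully_masked(mask_grid, patch_size, top, left, height, width):
    """True if every patch overlapping the object's bounding box is masked,
    i.e. no pixel of the object remains visible to the encoder."""
    rows = range(top // patch_size, (top + height - 1) // patch_size + 1)
    cols = range(left // patch_size, (left + width - 1) // patch_size + 1)
    return all(mask_grid[r][c] for r in rows for c in cols)

# 14 x 14 = 196 patches, of which int(196 * 0.75) = 147 are masked.
grid16 = random_patch_mask(image_size=224, patch_size=16, mask_ratio=0.75)
n_masked16 = sum(sum(row) for row in grid16)

# A hypothetical 6 x 6 pixel object inside the first patch is hidden
# completely whenever that single patch is masked.
hidden = object_fully_masked(grid16, 16, top=2, left=2, height=6, width=6)
```

An object that fits inside one patch disappears entirely whenever that patch is masked, so with a mask ratio of 0.75 roughly three quarters of such objects leave no visible pixels. Smaller patches or smaller ratios make it more likely that part of each object, or of its immediate surroundings, stays visible.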

Label-free Monitoring of Self-Supervised Learning Progress

Sep 10, 2024

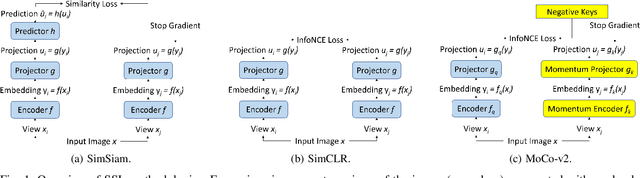

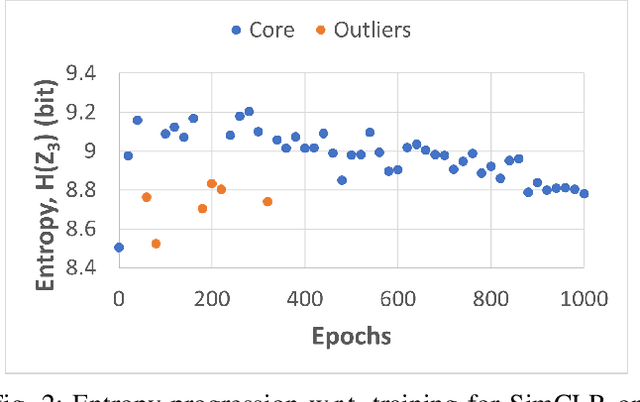

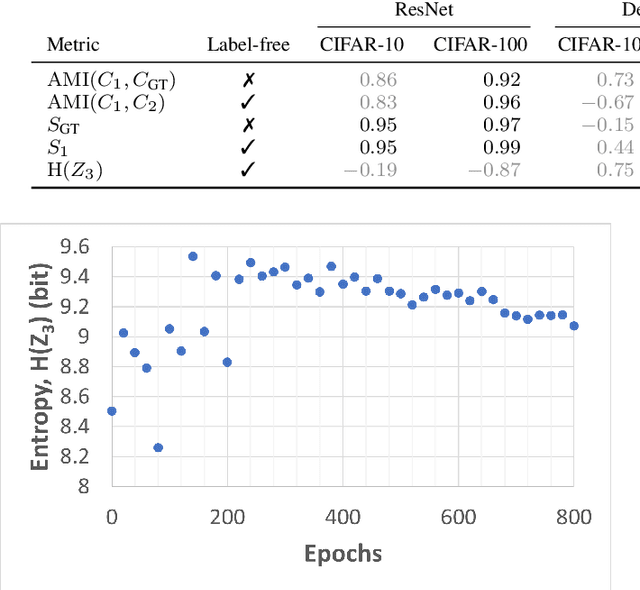

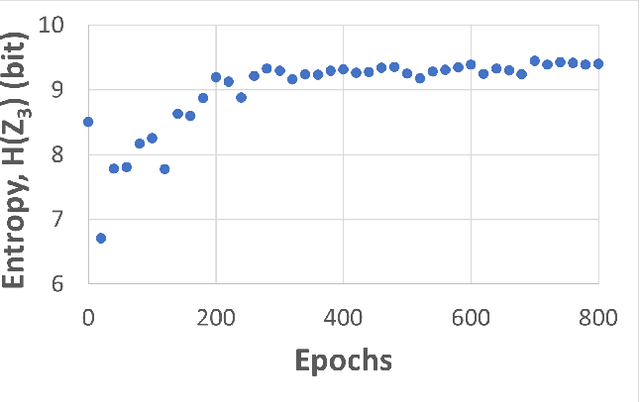

Abstract:Self-supervised learning (SSL) is an effective method for exploiting unlabelled data to learn a high-level embedding space that can be used for various downstream tasks. However, existing methods to monitor the quality of the encoder -- either during training for one model or to compare several trained models -- still rely on access to annotated data. When SSL methodologies are applied to new data domains, a sufficiently large labelled dataset may not always be available. In this study, we propose several evaluation metrics which can be applied on the embeddings of unlabelled data and investigate their viability by comparing them to linear probe accuracy (a common metric which utilizes an annotated dataset). In particular, we apply $k$-means clustering and measure the clustering quality with the silhouette score and clustering agreement. We also measure the entropy of the embedding distribution. We find that while the clusters did correspond better to the ground truth annotations as training of the network progressed, label-free clustering metrics correlated with the linear probe accuracy only when training with SSL methods SimCLR and MoCo-v2, but not with SimSiam. Additionally, although entropy did not always have strong correlations with LP accuracy, this appears to be due to instability arising from early training, with the metric stabilizing and becoming more reliable at later stages of learning. Furthermore, while entropy generally decreases as learning progresses, this trend reverses for SimSiam. More research is required to establish the cause for this unexpected behaviour. Lastly, we find that while clustering based approaches are likely only viable for same-architecture comparisons, entropy may be architecture-independent.
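As a rough illustration of one of the label-free metrics mentioned here, the silhouette score can be computed from embeddings and cluster assignments alone. The sketch below assumes the cluster labels have already been produced (for example by $k$-means, which is not implemented here) and uses Euclidean distance; the toy 2-D "embeddings" and the function name are hypothetical.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance).

    For each point: a = mean distance to the other members of its cluster,
    b = smallest mean distance to the members of any other cluster,
    s = (b - a) / max(a, b). Points in singleton clusters score 0.
    """
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)
            continue
        # The point's distance to itself is 0, so divide by len(own) - 1.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        b = min(
            sum(math.dist(p, q) for q in other) / len(other)
            for key, other in clusters.items() if key != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)

# Toy 2-D "embeddings": two tight, well-separated groups.
embeddings = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = silhouette_score(embeddings, [0, 0, 1, 1])  # matches the groups
bad = silhouette_score(embeddings, [0, 1, 0, 1])   # cuts across them
```

A score near 1 indicates compact, well-separated clusters, while a score near or below 0 indicates assignments that cut across the structure of the embedding space. No ground-truth annotations are involved at any point.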

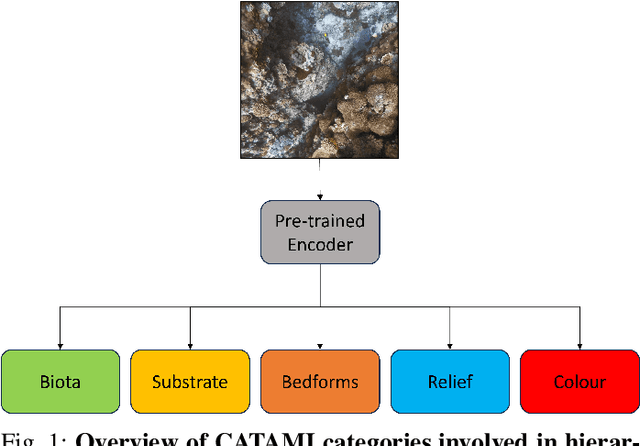

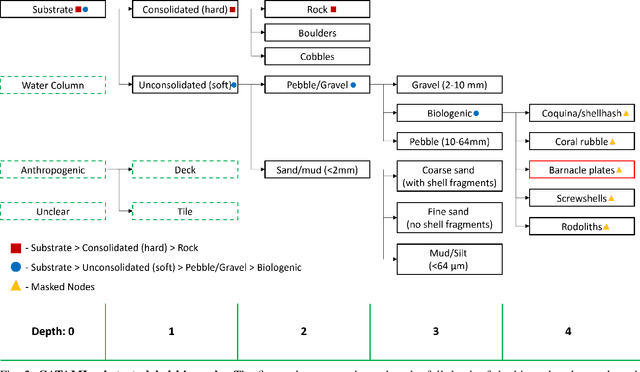

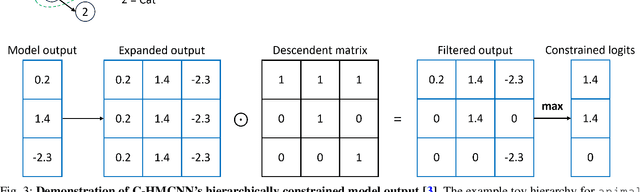

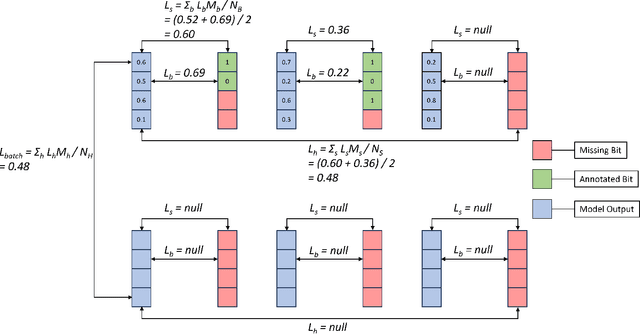

Hierarchical Multi-Label Classification with Missing Information for Benthic Habitat Imagery

Sep 10, 2024

Abstract:In this work, we apply state-of-the-art self-supervised learning techniques on a large dataset of seafloor imagery, BenthicNet, and study their performance for a complex hierarchical multi-label (HML) classification downstream task. In particular, we demonstrate the capacity to conduct HML training in scenarios where there exist multiple levels of missing annotation information, an important scenario for handling heterogeneous real-world data collected by multiple research groups with differing data collection protocols. We find that, when using smaller one-hot image label datasets typical of local or regional scale benthic science projects, models pre-trained with self-supervision on a larger collection of in-domain benthic data outperform models pre-trained on ImageNet. In the HML setting, we find the model can attain a deeper and more precise classification if it is pre-trained with self-supervision on in-domain data. We hope this work can establish a benchmark for future models in the field of automated underwater image annotation tasks and can guide work in other domains with hierarchical annotations of mixed resolution.

A Generalized Transformer-based Radio Link Failure Prediction Framework in 5G RANs

Jul 06, 2024

Abstract:A radio link failure (RLF) prediction system in Radio Access Networks (RANs) is critical for ensuring seamless communication and meeting the stringent requirements of high data rates, low latency, and improved reliability in 5G networks. However, weather conditions such as precipitation, humidity, temperature, and wind impact these communication links. Usually, historical radio link Key Performance Indicators (KPIs) and observations from surrounding weather stations are utilized for building learning-based RLF prediction models. However, such models must be capable of learning the spatial weather context in a dynamic RAN and effectively encoding time series KPIs with the weather observation data. Existing works fail to incorporate both of these essential design aspects of the prediction models. This paper fills the gap by proposing GenTrap, a novel RLF prediction framework that introduces a graph neural network (GNN)-based learnable weather effect aggregation module and employs a state-of-the-art time series transformer as the temporal feature extractor for radio link failure prediction. The proposed aggregation method of GenTrap can be integrated into any existing prediction model to achieve better performance and generalizability. We evaluate GenTrap on two real-world datasets (rural and urban) with 2.6 million KPI data points and show that GenTrap offers a significantly higher F1-score (0.93 for rural and 0.79 for urban) compared to its counterparts while possessing generalization capability.

BenthicNet: A global compilation of seafloor images for deep learning applications

May 08, 2024

Abstract:Advances in underwater imaging enable the collection of extensive seafloor image datasets that are necessary for monitoring important benthic ecosystems. The ability to collect seafloor imagery has outpaced our capacity to analyze it, hindering expedient mobilization of this crucial environmental information. Recent machine learning approaches provide opportunities to increase the efficiency with which seafloor image datasets are analyzed, yet large and consistent datasets necessary to support development of such approaches are scarce. Here we present BenthicNet: a global compilation of seafloor imagery designed to support the training and evaluation of large-scale image recognition models. An initial set of over 11.4 million images was collected and curated to represent a diversity of seafloor environments using a representative subset of 1.3 million images. These are accompanied by 2.6 million annotations translated to the CATAMI scheme, which span 190,000 of the images. A large deep learning model was trained on this compilation and preliminary results suggest it has utility for automating large and small-scale image analysis tasks. The compilation and model are made openly available for use by the scientific community at https://doi.org/10.20383/103.0614.

LogAvgExp Provides a Principled and Performant Global Pooling Operator

Nov 02, 2021

Abstract:We seek to improve the pooling operation in neural networks, by applying a more theoretically justified operator. We demonstrate that LogSumExp provides a natural OR operator for logits. When one corrects for the number of elements inside the pooling operator, this becomes $\text{LogAvgExp} := \log(\text{mean}(\exp(x)))$. By introducing a single temperature parameter, LogAvgExp smoothly transitions from the max of its operands to the mean (found at the limiting cases $t \to 0^+$ and $t \to +\infty$). We experimentally tested LogAvgExp, both with and without a learnable temperature parameter, in a variety of deep neural network architectures for computer vision.
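A minimal numerical sketch of the operator defined in this abstract follows. The abstract does not say how the temperature enters the formula; the form $t \cdot \log(\text{mean}(\exp(x / t)))$ used below is an assumption, chosen because it reproduces the stated limits (max as $t \to 0^+$, mean as $t \to +\infty$). The function name is also hypothetical.

```python
import math

def log_avg_exp(xs, t=1.0):
    """LogAvgExp with temperature t > 0: t * log(mean(exp(x / t))).

    The maximum is subtracted before exponentiating so that the
    computation does not overflow for large inputs or small t.
    """
    if t <= 0:
        raise ValueError("temperature must be positive")
    m = max(xs)
    total = sum(math.exp((x - m) / t) for x in xs)
    return m + t * math.log(total / len(xs))

xs = [1.0, 2.0, 3.0]
near_max = log_avg_exp(xs, t=1e-3)   # approaches max(xs) = 3
plain = log_avg_exp(xs, t=1.0)       # log(mean(exp(x)))
near_mean = log_avg_exp(xs, t=1e6)   # approaches mean(xs) = 2
```

Subtracting the maximum first is the usual log-sum-exp stabilization and does not change the result. In a network, a learnable temperature would typically be stored in an unconstrained form (for example its logarithm) to keep it positive, though that parameterization is an assumption here as well.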

Logical Activation Functions: Logit-space equivalents of Boolean Operators

Oct 22, 2021

Abstract:Neuronal representations within artificial neural networks are commonly understood as logits, representing the log-odds score of presence (versus absence) of features within the stimulus. Under this interpretation, we can derive the probability $P(x_0 \land x_1)$ that a pair of independent features are both present in the stimulus from their logits. By converting the resulting probability back into a logit, we obtain a logit-space equivalent of the AND operation. However, since this function involves taking multiple exponents and logarithms, it is not well suited to be directly used within neural networks. We thus constructed an efficient approximation named $\text{AND}_\text{AIL}$ (the AND operator Approximate for Independent Logits) utilizing only comparison and addition operations, which can be deployed as an activation function in neural networks. Like MaxOut, $\text{AND}_\text{AIL}$ is a generalization of ReLU to two-dimensions. Additionally, we constructed efficient approximations of the logit-space equivalents to the OR and XNOR operators. We deployed these new activation functions, both in isolation and in conjunction, and demonstrated their effectiveness on a variety of tasks including image classification, transfer learning, abstract reasoning, and compositional zero-shot learning.
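The exact logit-space AND described at the start of this abstract can be written directly from the definition: convert each logit to a probability, multiply under the independence assumption, and convert back. The sketch below implements only this exact operator (plus OR via De Morgan's law), not the $\text{AND}_\text{AIL}$ approximation, whose definition is not given in the abstract. Function names are illustrative, and the naive `sigmoid` is only suitable for logits of moderate magnitude.

```python
import math

def sigmoid(x):
    """Convert a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

def logit(p):
    """Convert a probability in (0, 1) to log-odds."""
    return math.log(p / (1.0 - p))

def and_logit(x0, x1):
    """Logit of P(x0 AND x1) for independent features with logits x0, x1."""
    return logit(sigmoid(x0) * sigmoid(x1))

def or_logit(x0, x1):
    """Logit of P(x0 OR x1), via De Morgan: OR(a, b) = NOT(AND(NOT a, NOT b)).
    Negating a logit corresponds to logical NOT."""
    return -and_logit(-x0, -x1)

both_uncertain = and_logit(0.0, 0.0)   # P = 0.25, so the logit is -log(3)
both_likely = and_logit(5.0, 5.0)      # slightly below min(5, 5)
both_unlikely = and_logit(-5.0, -5.0)  # close to the sum, -10
```

Each evaluation needs several exponentials and a logarithm, which is the cost the abstract cites as motivation for a cheaper approximation built from comparisons and additions.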

Representational Rényi heterogeneity

Dec 13, 2019

Abstract:A discrete system's heterogeneity is measured by the Rényi heterogeneity family of indices (also known as Hill numbers or Hannah-Kay indices), whose units are known as the numbers equivalent, and whose scaling properties are consistent and intuitive. Unfortunately, numbers equivalent heterogeneity measures for non-categorical data require a priori (A) categorical partitioning and (B) pairwise distance measurement on the space of observable data. This precludes their application to problems in disciplines where categories are ill-defined or where semantically relevant features must be learned as abstractions from some data. We thus introduce representational Rényi heterogeneity (RRH), which transforms an observable domain onto a latent space upon which the Rényi heterogeneity is both tractable and semantically relevant. This method does not require a priori binning nor definition of a distance function on the observable space. Compared with existing state-of-the-art indices on a beta-mixture distribution, we show that RRH more accurately detects the number of distinct mixture components. We also show that RRH can measure heterogeneity in natural images whose semantically relevant features must be abstracted using deep generative models. We further show that RRH can uniquely capture heterogeneity caused by distinct components in mixture distributions. Our novel approach will enable measurement of heterogeneity in disciplines where a priori categorical partitions of observable data are not possible, or where semantically relevant features must be inferred using latent variable models.
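For readers unfamiliar with the underlying index, the categorical Rényi heterogeneity (Hill number) of order $q$ for a discrete distribution $p$ is $\left(\sum_i p_i^q\right)^{1/(1-q)}$, with the limit at $q = 1$ equal to the exponential of the Shannon entropy. The sketch below covers only this standard categorical case; it does not implement the representational variant (RRH) introduced in the paper, and the function name is illustrative.

```python
import math

def renyi_heterogeneity(probs, q):
    """Hill number (numbers equivalent) of order q for a discrete distribution.

    q = 0 counts the categories with nonzero probability, q = 1 gives
    exp(Shannon entropy), and q = 2 gives the inverse Simpson index.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    p = [x for x in probs if x > 0]
    if q == 1:
        return math.exp(-sum(x * math.log(x) for x in p))
    return sum(x ** q for x in p) ** (1.0 / (1.0 - q))

uniform4 = [0.25, 0.25, 0.25, 0.25]
two_of_four = [0.5, 0.5, 0.0, 0.0]
skewed = [0.7, 0.1, 0.1, 0.1]

h_uniform = [renyi_heterogeneity(uniform4, q) for q in (0, 1, 2)]
h_two = renyi_heterogeneity(two_of_four, 2)
h_skewed = renyi_heterogeneity(skewed, 2)
```

The "numbers equivalent" unit is visible in the output: a uniform distribution over four categories has heterogeneity 4 at every order, two equally likely categories give 2, and a skewed distribution over four categories falls somewhere between 1 and 4.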

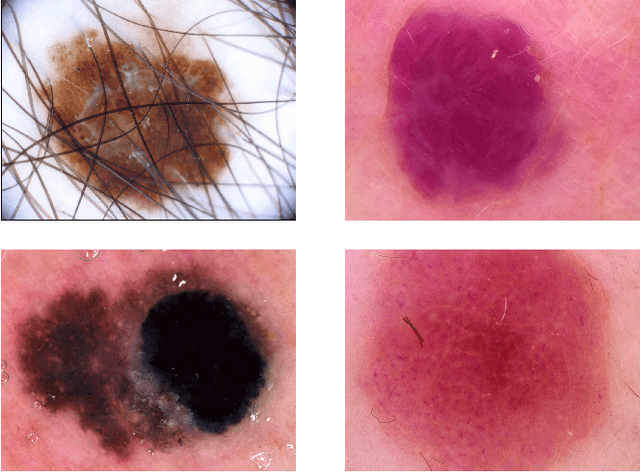

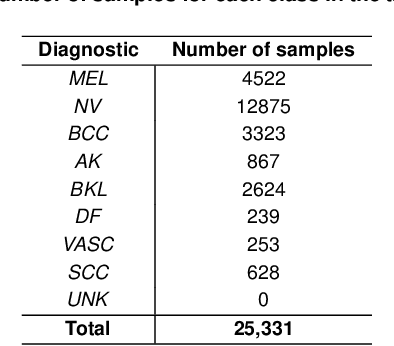

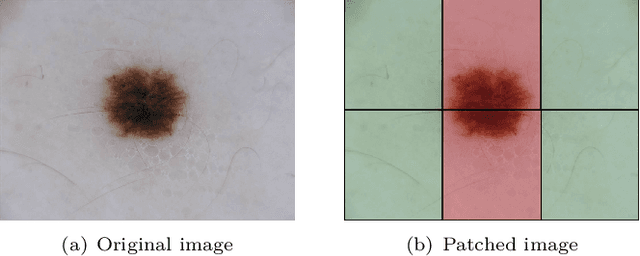

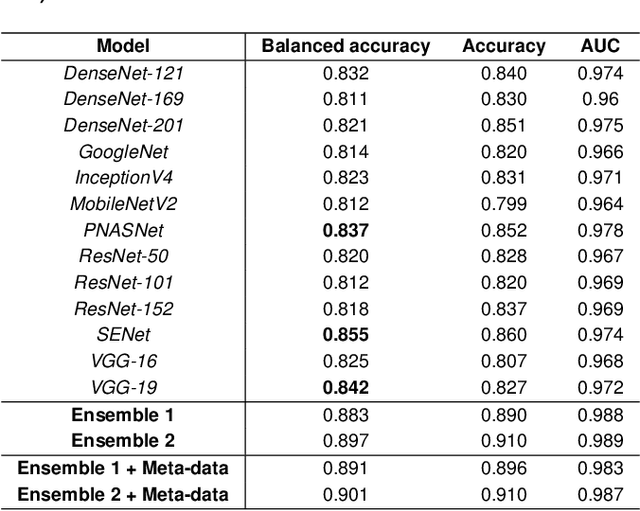

Skin cancer detection based on deep learning and entropy to detect outlier samples

Sep 10, 2019

Abstract:We describe our methods to address both tasks of the ISIC 2019 challenge. The goal of this challenge is to provide a diagnosis of skin cancer using images and meta-data. There are nine classes in the dataset; however, one of them is an outlier class and is not present in it. To tackle the challenge, we apply an ensemble of 13 convolutional neural networks (CNNs), develop two approaches to handle the outlier class, and propose a straightforward method to use the meta-data along with the images. Throughout this report, we detail each methodology and its parameters to make it easy to replicate our work. The results obtained are in accordance with the previous challenges, and the approaches to detect the outlier class and to address the meta-data seem to work properly.
